Because it’s important to know when adversaries are scanning the network searching for vulnerabilities, misconfigurations, unpatched systems or default passwords; but it’s more important to know when adversaries have already compromised a system. Aggregating this data into a unified cloud-based AI-native XDR platform like CrowdStrike Falcon may seem like an impossible task, so here is a step-by-step guide to help you avoid common pitfalls and succeed at feeding any events into it, with examples tailored specifically for SQL Server.

Pitfall # 1: the parser

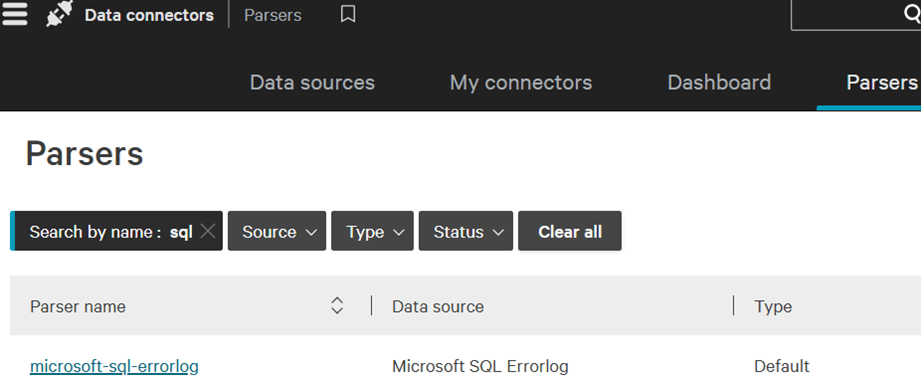

This is the piece responsible of translating text into actionable fields, for example, extracting error codes and IP addresses. If the parser is unable to do this task, then any information you send to CrowdStrike won't be usable, as it won't be able to be analyzed neither automate actions based on it. You may think, because there are so many parsers already installed, one of them may suit your needs. For example, this is from Microsoft for the SQL Server error log:

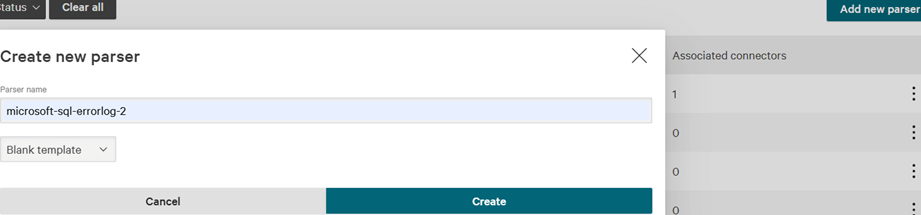

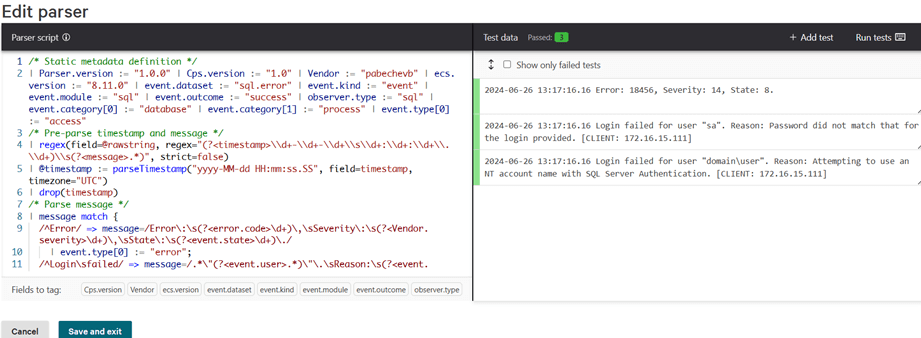

But maybe this parser was for earlier versions of CrowdStrike log management system, LogScale, because it doesn’t work with the events gathered for SQL Server, so you’re better off starting from scratch with a new parser. First, click on “Add new parser”, provide a name like “microsoft-sql-errorlog-2”, select “Blank template”, and click on “Create”:

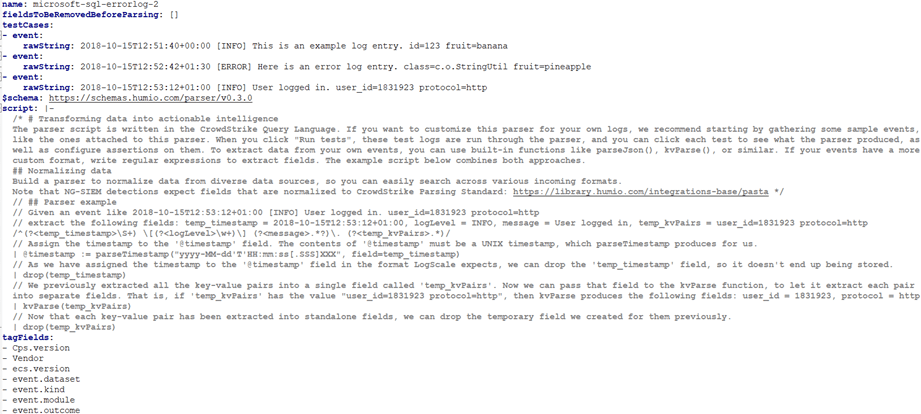

You will be redirected to the “Edit parser” page with three sample test cases; click on “Save and exit”, then in the upper-right corner select the three dots and select "Export parser”. This will let you edit the YAML file in a text editor:

The first thing to do is add your own test cases, so replace them with your own events. Instead of "[INFO] This is an example log entry. id=123 fruit=banana" you're going to add an event from SQL Server, "Error: 18456, Severity: 14, State: 8.".

Next, read the instructions clearly detailed in the "script" section and try to understand them, as they explain what are you trying to accomplish, which functions you can use, and tells you how to get started parsing the events. It's not necessary to write the script blindly, you can upload the parser as it is and in the graphical interface, start experimenting. But if you want to start with something already working, replace the script with the one below which is my own version:

name: microsoft-sql-errorlog-2

fieldsToBeRemovedBeforeParsing: []

testCases:

- event:

rawString: '2024-06-26 13:17:16.16 Error: 18456, Severity: 14, State: 8.'

- event:

rawString: '2024-06-26 13:17:16.16 Login failed for user "sa". Reason: Password did not match that for the login provided. [CLIENT: 172.16.15.111]'

- event:

rawString: '2024-06-26 13:17:16.16 Login failed for user "domain\user". Reason: Attempting to use an NT account name with SQL Server Authentication. [CLIENT: 172.16.15.111]'

$schema: https://schemas.humio.com/parser/v0.3.0

script: |-

/* Static metadata definition */ | Parser.version := "1.0.0" | Cps.version := "1.0" | Vendor := "pabechevb" | ecs.version := "8.11.0" | event.dataset := "sql.error" | event.kind := "event" | event.module := "sql" | event.outcome := "success" | observer.type := "sql" | event.category[0] := "database" | event.category[1] := "process" | event.type[0] := "access"

/* Pre-parse timestamp and message */ | regex(field=@rawstring, regex="(?<timestamp>\\d+-\\d+-\\d+\\s\\d+:\\d+:\\d+\\.\\d+)\\s(?<message>.*)", strict=false)

| timestamp := parseTimestamp("yyyy-MM-dd HH:mm:ss.SS", field=timestamp, timezone="UTC")

/* Parse message */ | message match {

/^Error/ => message=/Error\:\s(?<error.code>\d+)\,\sSeverity\:\s(?<Vendor.severity>\d+)\,\sState\:\s(?<event.state>\d+)\./

| event.type[0] := "error";

/^Login\sfailed/ => message=/.*\"(?<event.user>.*)\"\.\sReason:\s(?<event.reason>.*)\.\s\[CLIENT\:\s(?<event.IP>.*)\]/

| event.category[1] := "authentication"

| event.type[0] := "info"

| event.outcome := "failure";

* => *;

}

tagFields:

- Cps.version

- Vendor

- ecs.version

- event.dataset

- event.kind

- event.module

- event.outcome

- observer.type

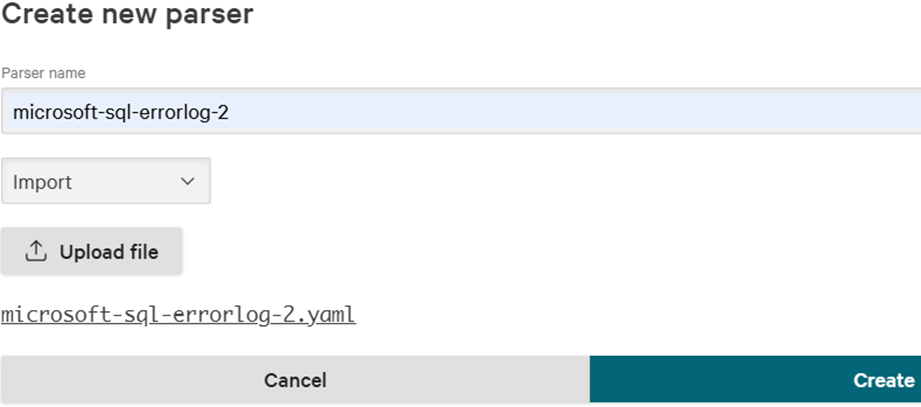

Once you save the changes to the file, delete the newly created parser from CrowdStrike, and click again on “Add new parser”. This time, select “Import” and click on “Upload file” choosing the file you edited locally, then click on “Create”:

Once you upload the parser, the system is going to run the script against the test cases you specified at the top of the script, and if everything is correct, you will see all the tests passed. If the tests don't pass, you can click on each test and see what is the error, this will allow you to fine-tune the script section on the left (remember to go back to the initial script which has information on how to get you started parsing events). Finally, re-run the tests with the "Run tests" button in the upper right corner until you can successfully extract the necessary information from all the events:

Pitfall # 2: the data source

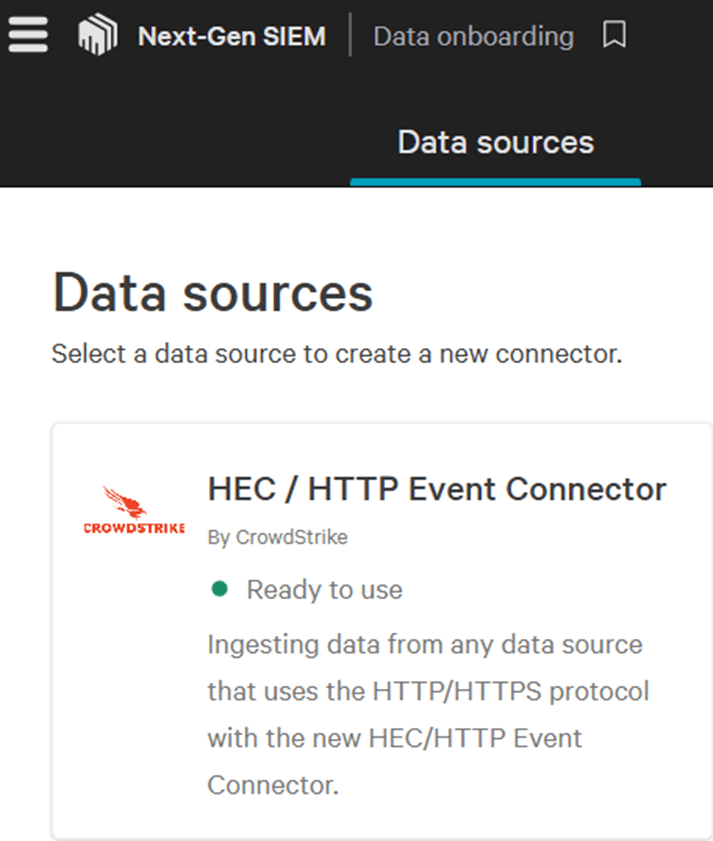

The data source is nothing but an API key and an URL that CrowdStrike will provide you for you to feed events into it, and having so many possible options can make you think one of them is suitable for your events. But if you search for something like "Windows" or "SQL Server" you will notice there isn't any. This is because the data source connectors are generic, not specific, and only two of them allow you to fine-tune your data ingestion: “Amazon S3 Data Connector” and “HEC / HTTP Event Connector”. This last one is the one we will use to feed SQL Server events, so head to "Next-Gen SIEM" and then to "Data onboarding", then search for it:

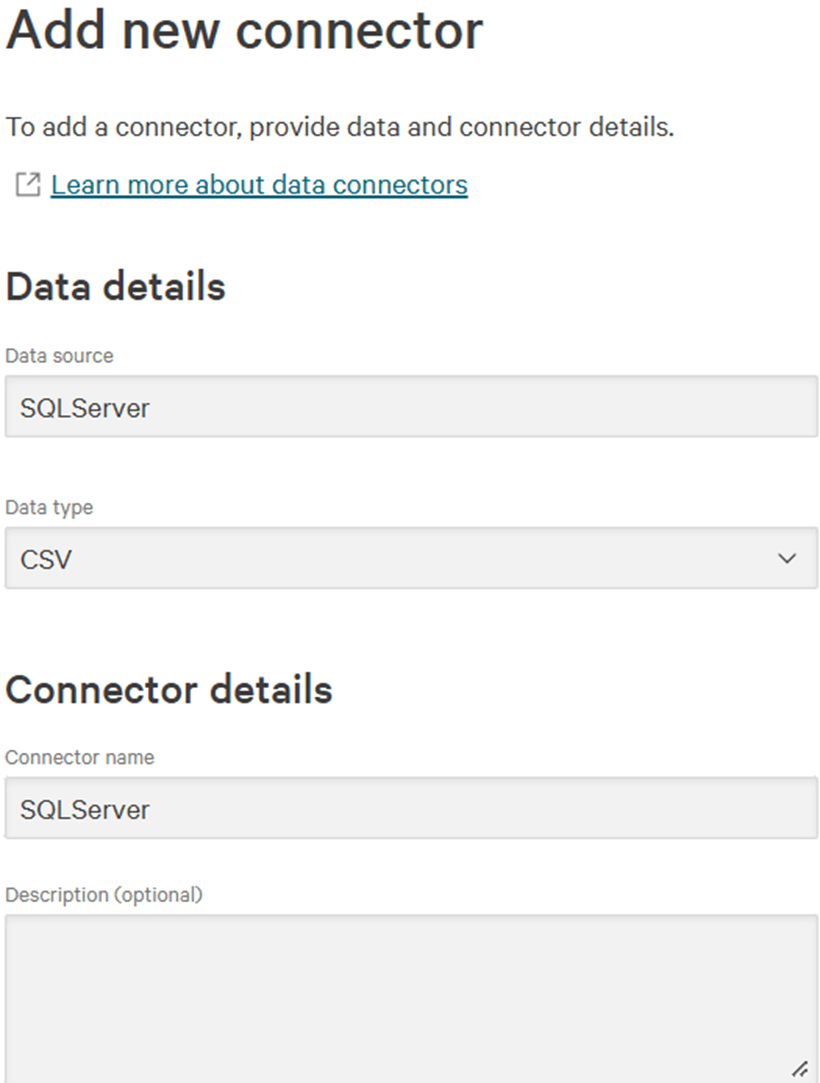

Click on it to use it as data source, provide a data source name, select “CSV” as data type, and provide a connector name:

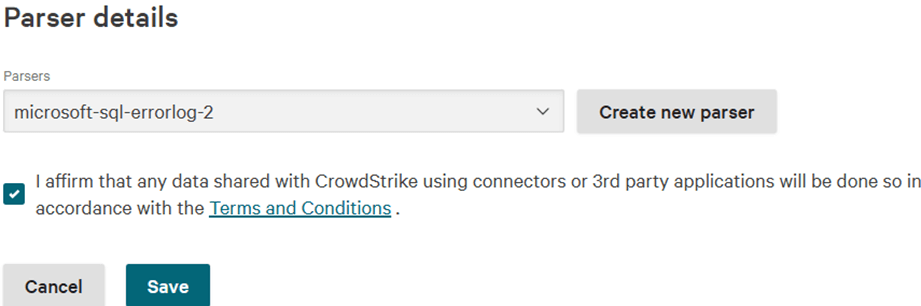

Then select the new parser you created in the previous step, check the checkbox of the agreement, and click “Save”:

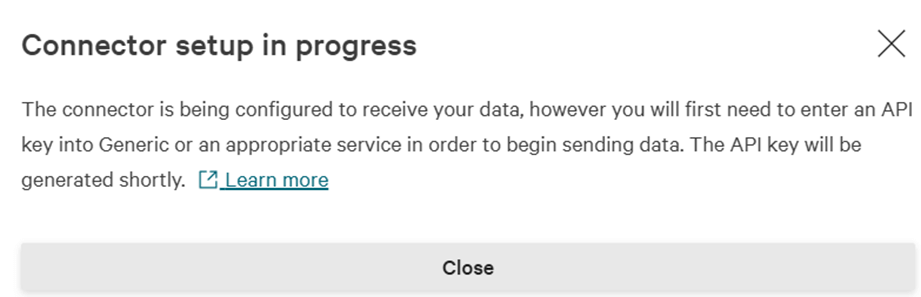

You will now see a message indicating you need to generate an API key:

Close that message, and in the upper section of the page, you will see the following message that allows you to generate the API key, click on the button:

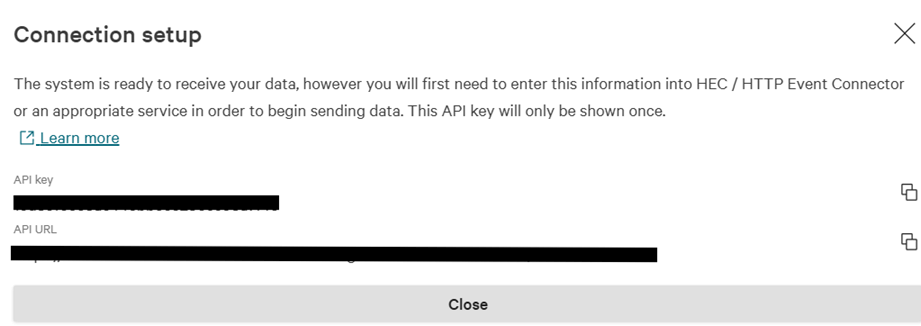

Then you will see the API key and the API URL, copy them as you will need them in the next step:

Pitfall # 3: data uploading

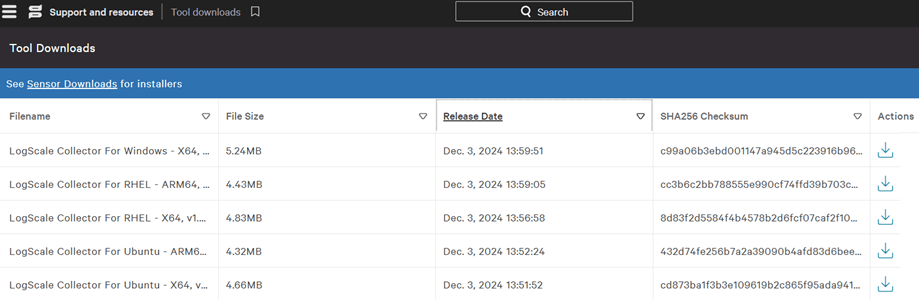

This is the place which sends events from your source into CrowdStrike, using the values generated earlier: the key and the URL where to send them. You can manually create an HTTP POST request to upload the events using a program like “curl”, but there are so many fields required, that creating your custom script and testing it is going to consume a lot of time and trial/error. The easiest way to upload the data is by using CrowdStrike’s LogScale Collectors, so head to “Support and resources”, then “Tool downloads”, and select the appropriate LogScale Collector. Once downloaded, check the version, for example the latest for Windows is 1.8.1 dated Dec 3, 2024:

Once downloaded, install it in the SQL Server; you don’t need to provide any details, just follow the instructions. Once installed, go to the install directory, which in my case it is “C:\Program Files (x86)\CrowdStrike\Humio Log Collector”, and edit the config.yaml file from this:

dataDirectory: C:\ProgramData\LogScale Collector\ sources: # windows_events: # type: wineventlog # channels: # - name: Application # - name: Security # - name: System # sink: humio sinks: # humio: # type: humio # token: <ingest-token> # url: https://cloud.humio.com

To this:

- source type=file: indicates you're feeding a plain text file

- include=filename: indicates the file the service is going to monitor for changes

- sink type=hec: indicates you will send the events through HTTP Event Collector (HEC)

dataDirectory: C:\ProgramData\LogScale Collector\

sources:

sqlserver:

type: file

include: C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Log\cs2.log

sink: humio

sinks:

humio:

type: hec

proxy: none

token: <API-key>

url: <API-URL>

Here in "token" you need to enter the API key and in "url" you need to enter the API URL, both were provided in the previous step. Note I’m not including the SQL Server ERRORLOG file directly, because it is encoded as UTF16, and you won’t be able to parse it. Once the changes are done, you need to start the Windows service named “Humio Log Collector”.

Pitfall # 4: events

These are the pieces of information you want CrowdStrike to have, so a SOC analyst will know when something is abnormal and can investigate or create automated responses based on it. Having said that, you don't want to overwhelm the SOC analyst with everything occurring inside SQL Server; you only want to feed them cybersecurity-related events.

In the article “Read all errors and warnings in the SQL Server Error Log for all versions” I show you how to read the SQL Server error and trace log files, you just need to convert it into a stored procedure to call it periodically. In the article “Standardized table based SQL Server monitoring email with PowerShell” I show you how to call the database from PowerShell, so it’s just a matter of calling the stored procedure you created. You need to read them both before continuing, because they detail what is going to occur when you read the error log from a T-SQL script, and what will be returned when called from PowerShell.

After you've read the two articles stated above, you will understand we need to filter the events to only select the ones we’re interested in:

$server = "sqlserver"

$database = "master"

$query = "EXEC [dbo].[usp_ErrorLogRead]"

$querytimeout = 0

$filter = { $_.Text.Contains("Error") -Or $_.Text.Contains("Login failed for user") }

$columns = '*'

$excludeColumns = 'RowError, RowState, Table, ItemArray, HasErrors' -split ", "

$result = (Invoke-Sqlcmd -ServerInstance $server -Database $database -Query $query -querytimeout $querytimeout)

$result2 = $result | where $filter | select $columns -ExcludeProperty $excludeColumns

$out = ""

foreach ($row in $result2) {

$out+="$($row.LogDate.ToString('yyyy-MM-dd HH:mm:ss.ff')) $($row.Text.ToString().Replace('''','"'))rn"

}

if ($out) {

$out = $out.Substring(0,$out.Length-2)

$out | Set-Content -Encoding utf8 "C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Log\cs2.log"

}

You can see in the variable “$out” we’re formatting the date as expected by our parser, we’re removing the last line ending, and we’re writing it as UTF8 to the file defined in the previous step, the one the collector is going to send to CrowdStrike.

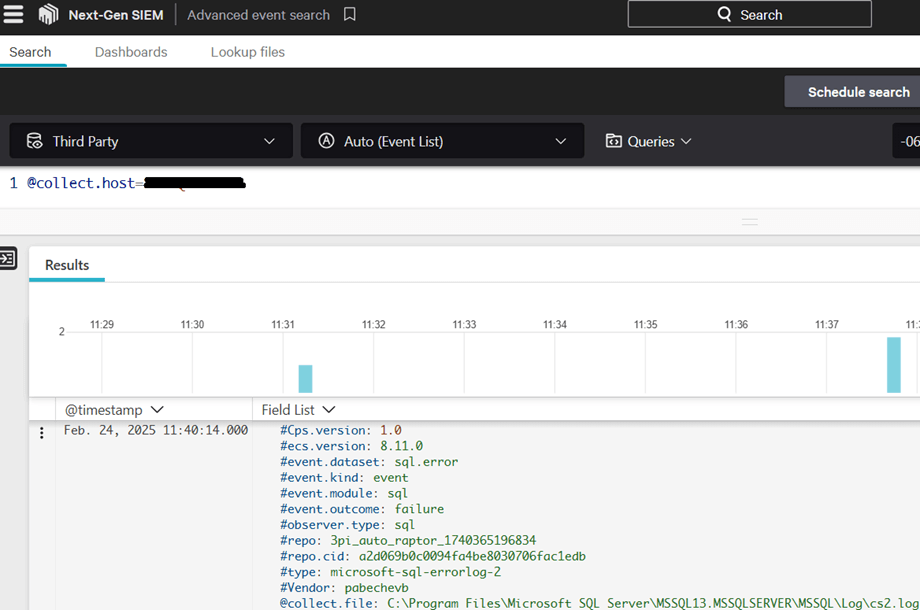

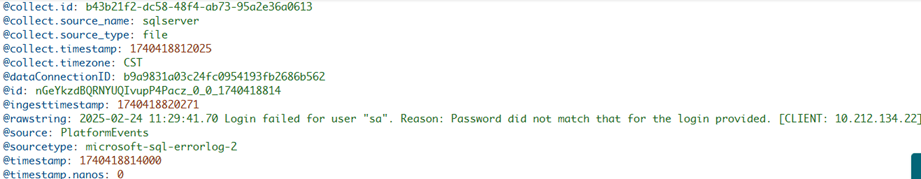

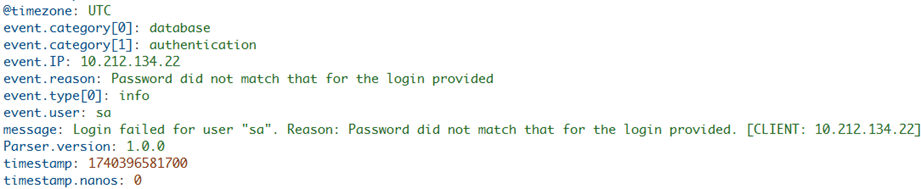

Finally, we can see the events in CrowdStrike’s Next-Gen SIEM Advanced event search, selecting the “Third-Party” logs and filtering for the @collect.host where the program is running:

Note: if you need any help regarding the solutions described here, you can contact me in my LinkedIn profile.