In the first article of this series, we started to deconstruct a stored procedure with the aim of improving the efficiency of it. Hopefully, you will have been able to absorb that information and are now eager to see more.

The number one reason I hear for creating a cursor routine is to allow for the conditional processing on a limited number of rows. In the original stored procedure, we have this simple condition:

IF(@CustomerType= 'I')BEGIN /* Individual */ SELECT @CustomerName =FirstName+' '+ LastName

FROM Person.Contact

JOIN Sales.Individual

ON Contact.ContactID= Individual.ContactID

WHERE Individual.CustomerID= @CustomerId

END ELSE BEGIN /* Store */ SELECT @CustomerName =Name

FROM Sales.Store

WHERE CustomerID =@CustomerId

END

The simplest way of replicating the above conditional logic within a set based statement is to use a LEFT JOIN. Then we can use a CASE statement to reproduce the IF condition if a complex series of conditions are required, or, as in this case, ISNULL/COALESCE. I will be first to admit that, perhaps, this is a very simplistic example and that it will not be possible in all cases. If, for instance, one branch of the code contains multiple complex and lengthy processes then you could be of the opinion that the cursor route is still the better option. Although it is very difficult, if not impossible, to speak for all scenarios on all systems, I would maintain that by using the above techniques and perhaps two or more UNION ALL’d statements would be a better option.

So our two our possible alternatives for the above code would be:

SELECT Customer.CustomerId,CustomerType,

ISNULL(Sales.Store.Name,Person.Contact.FirstName+' '+Person.Contact.LastName)

FROM Sales.Customer

LEFT JOIN Sales.Individual

on Sales.Customer.CustomerID = Sales.Individual.CustomerID

LEFT JOIN Person.Contact

ON Sales.Individual.ContactID = Person.Contact.ContactID

LEFT JOIN Sales.Store

on Sales.Store.CustomerID = Sales.Customer.CustomerID

or

SELECT Customer.CustomerId,CustomerType,

Person.Contact.FirstName+' '+Person.Contact.LastName as CustomerName

FROM Sales.Customer

JOIN Sales.Individual

on Sales.Customer.CustomerID = Sales.Individual.CustomerID

JOIN Person.Contact

ON Sales.Individual.ContactID = Person.Contact.ContactID

WHERE Sales.Customer.CustomerType = 'I'

UNION ALL

SELECT Customer.CustomerId,CustomerType,

Sales.Store.Name as CustomerName

FROM Sales.Customer

JOIN Sales.Store

on Sales.Store.CustomerID = Sales.Customer.CustomerID

WHERE Sales.Customer.CustomerType <> 'I'

As a side issue, your default choice when UNION’ing statements should be UNION ALL not UNION. The difference between the two is that UNION will give you a distinct result set, whereas UNION ALL will return all then data.

SELECT 1 as Col UNION SELECT 1 | SELECT 1 as Col UNION ALL SELECT 1 |

If you execute the two above queries, with the UNION you get one row, in the other two.

This is obviously a trivial example but I have seen, on many occasions, the errors that can be caused by this issue. Consider these two queries that return the total sales (including archived) from the AdventureWorks database :

with cteUnion as ( select ReferenceOrderID,Quantity,ActualCost from Production.TransactionHistory union select ReferenceOrderID,Quantity,ActualCost from Production.TransactionHistoryArchive ) select SUM(quantity * ActualCost) from cteUnion

The result is $168,556,857.0589

with cteUnion as ( select ReferenceOrderID,Quantity,ActualCost from Production.TransactionHistory union all select ReferenceOrderID,Quantity,ActualCost from Production.TransactionHistoryArchive ) select SUM(quantity * ActualCost) from cteUnion

the result is $190,019,067.0956

If you had used UNION and not UNION ALL , you have just under- reported AdventureWork’s total sales by $28million!!

Not only is it wrong, but if you were to compare the execution plans , then the UNION is more expensive by a substantial margin, as the engine is having to do a lot of work to create a distinct list of data.

As I asserted in the previous article, there are very good reasons for using ITVF’s :

Firstly, code re-use, something that SQL Server (and the SQL language in general) has been historically poor at, is greatly improved. Secondly, by using them in a statement with CROSS APPLY code, readability is also improved. They also help performance, a full discussion about the that is out of scope here, but I would advise you to take a look at Simon Sabin’s session ‘High performance functions’ from SQL Bits 6 at http://sqlbits.com/Sessions/Event6/High_performance_functions.

The below procedure GetSalesReport_Optimize2, demonstrates this and now, since all our logic is contained within functions, we have been able to remove the cursor as well.

CREATE PROCEDURE GetSalesReport_Optimize2

AS

DECLARE @Today DATE

SELECT @Today = '20040724'-- GETDATE()

CREATE TABLE #ReportResults

(

CustomerId INTEGER NOT NULL,

CustomerName NVARCHAR(255)NOT NULL,

TotalDue MONEY NULL,

ShippedTotal MONEY NULL,

OrderDate DATE NULL,

DueDate DATE NULL,

ShipDate DATE NULL,

CustomerMinOrderDate DATE NULL,

CustomerMaxOrderDate DATE NULL,

POrder1 VARCHAR(50)NULL,OrderTotal1 MONEY NULL,

POrder2 VARCHAR(50)NULL,OrderTotal2 MONEY NULL,

POrder3 VARCHAR(50)NULL,OrderTotal3 MONEY NULL,

POrder4 VARCHAR(50)NULL,OrderTotal4 MONEY NULL

)

INSERT INTO #ReportResults

SELECT customer.CustomerId,ISNULL(Store.Name,Contact.FirstName+' '+ Contact.LastName),

SumTotal.CustomerTotalDue,SumTotal.ShippedTotal,

OrderDate,DueDate,ShipDate,

MinMaxOrder.CustomerMinOrderDate,

MinMaxOrder.CustomerMaxOrderDate,

PurchOrders.POrder1, PurchOrders.TotalDue1,

PurchOrders.POrder2, PurchOrders.TotalDue2,

PurchOrders.POrder3, PurchOrders.TotalDue3,

PurchOrders.POrder4, PurchOrders.TotalDue4

FROM Sales.Customer

OUTER APPLY dbo.GetMinMaxOrderDates(Customer.CustomerID) MinMaxOrder

OUTER APPLY dbo.GetTop4DueOrders(Customer.CustomerID) PurchOrders

OUTER APPLY dbo.GetNextOrderDue(Customer.CustomerID,@ToDay) OrderDue

OUTER APPLY dbo.SumCustomerTotals(Customer.CustomerId) SumTotal

LEFT JOIN Sales.Individual

ON Individual.CustomerID= Customer.CustomerID

LEFT JOIN Person.Contact

ON Contact.ContactID= Individual.ContactID

LEFT JOIN Sales.Store

ON Store.CustomerID =Customer.CustomerID

SELECT * FROM #ReportResults

| Version | 1 | 2 | 3 | 4 | 5 | Average | % of Orig |

| 0 | 12,413 | 12,496 | 12,716 | 14,143 | 13,873 | 13,128 | |

| 1 | 10,496 | 10,643 | 10,656 | 11,340 | 11,540 | 10,935 | 22 |

| 2 | 2,170 | 2,230 | 2,296 | 2,340 | 5,120 | 2,831 | 83 |

You can see that we have dramatically improved performance, a drop from 13.1Secs to 2.8Secs which is a whopping 78% improvement. The overhead was entirely due to using a cursor and the effect that procedural programming has on performance. If you were to examine the query plans now, you would find that there are multiple scans against the child tables. There is a general attitude of "scans are bad" but, in our case, since 100% of the data in those scans are required, the scans are quite effective. It is also pretty obvious that the temporary table is now superfluous and can be removed. This is what I have done in GetSalesReport_Optimize3:

CREATE PROCEDURE GetSalesReport_Optimize3

AS

DECLARE @Today DATE

SELECT @Today = '20040724'-- GETDATE()

SELECT customer.CustomerId,

ISNULL(Store.Name,Contact.FirstName+' '+Contact.LastName) AS CustomerName,

SumTotal.CustomerTotalDue as TotalDue,

SumTotal.ShippedTotalas ShippedTotal,

OrderDate,DueDate,ShipDate,

MinMaxOrder.CustomerMinOrderDate,

MinMaxOrder.CustomerMaxOrderDate,

PurchOrders.POrder1, PurchOrders.TotalDue1,

PurchOrders.POrder2, PurchOrders.TotalDue2,

PurchOrders.POrder3, PurchOrders.TotalDue3,

PurchOrders.POrder4, PurchOrders.TotalDue4

FROM Sales.Customer

OUTER APPLY dbo.GetMinMaxOrderDates(Customer.CustomerID) MinMaxOrder

OUTER APPLY dbo.GetTop4DueOrders(Customer.CustomerID) PurchOrders

OUTER APPLY dbo.GetNextOrderDue(Customer.CustomerID,@ToDay) OrderDue

OUTER APPLY dbo.SumCustomerTotals(Customer.CustomerId) SumTotal

LEFT JOIN Sales.Individual

ON Individual.CustomerID= Customer.CustomerID

LEFT JOIN Person.Contact

ON Contact.ContactID= Individual.ContactID

LEFT JOIN Sales.Store

ON Store.CustomerID =Customer.CustomerID

| Version | 1 | 2 | 3 | 4 | 5 | Average | % of Orig |

| 0 | 12,413 | 12,496 | 12,716 | 14,143 | 13,873 | 13,128 | |

| 1 | 10,496 | 10,643 | 10,656 | 11,340 | 11,540 | 10,935 | 83 |

| 2 | 2,170 | 2,230 | 2,296 | 2,340 | 5,120 | 2,831 | 22 |

| 3 | 2,076 | 2,083 | 2,123 | 2,126 | 2,620 | 2,206 | 17 |

Another small improvement on before, but every little bit helps.

We are now getting to a point where we should be happy with the performance, but there are still some issues that can be resolved. If you look at the execution plan of GetSalesReport_Optimize3, you will notice that we have 4 scans of SalesOrderHeader occurring. Reading a page of data, even if it is in cache, is an expensive operation and should be minimized. Each of these 4 scans are looking at the same data and that is generating an overhead that we should look at optimizing out.

In the below function, I have merged the functionality of GetNextOrderDue, GetMinMaxOrderDates, GetTop4DueOrders and SumCustomerTotals together. In the CTE called cteOrders, I have used the ROW_NUMBER() ranking function to create some data that will allow us to ‘pick’ only the rows that are actually required to emulate the logic that was contained within the GetNextOrderDue function.

In the function below, the ‘isDue’ column, which will inform us if the order due date is after today will be set to a ‘Boolean’ value;1 when True , 0 when false. The same calculation is used in the ‘RowN’ column as the partition by value, so that we get an incrementing value in DueDate order for both ‘due’ and ‘non-due’ orders. If you have followed the logic, you will see that there will be only one row where isDue =1 and RowN =1, that being the ‘earliest’ row that ‘isDue’. Using this knowledge we can use the technique of wrapping a case statement inside an aggregate function, like the pivot example before, to return the OrderDate, ShipDate and DueDate of that one individual row.

CREATE FUNCTION GetTotalSumMinMaxAndNextOrderDates(@CustomerID INTEGER,@ToDay DATE)

RETURNS TABLE

AS

RETURN

(

WITH cteOrders

AS

(

SELECT OrderDate,

DueDate,

ShipDate,

TotalDue,

Status,

PurchaseOrderNumber,

CASE WHEN DueDate>= @Today THEN 1 ELSE 0 END AS isDue,

ROW_NUMBER() OVER (PARTITION BY CASE WHEN DueDate >= @Today

THEN 1 ELSE 0 END ORDER BY DueDate) AS Rown,

ROW_NUMBER() OVER (ORDER BY TotalDue DESC) AS RowNDue

FROM Sales.SalesOrderHeader

WHERE CustomerID =@CustomerID

)

SELECT MIN(OrderDate ) AS CustomerMinOrderDate,

MAX(OrderDate)AS CustomerMaxOrderDate,

MIN(CASE WHEN isDue = 1 AND Rown=1 THEN OrderDate END) AS OrderDate,

MIN(CASE WHEN isDue = 1 AND Rown=1 THEN DueDate END) AS DueDate,

MIN(CASE WHEN isDue = 1 AND Rown=1 THEN ShipDate END) AS ShipDate,

SUM(TotalDue) as CustomerTotalDue,

SUM(case when Status =5 then TotalDue else 0 end) as ShippedTotal,

MIN(CASE WHEN RowNDue = 1 THEN PurchaseOrderNumber END) AS POrder1,

MIN(CASE WHEN RowNDue = 1 THEN TotalDue END)AS TotalDue1,

MIN(CASE WHEN RowNDue = 2 THEN PurchaseOrderNumber END) AS POrder2,

MIN(CASE WHEN RowNDue = 2 THEN TotalDue END)AS TotalDue2,

MIN(CASE WHEN RowNDue = 3 THEN PurchaseOrderNumber END) AS POrder3,

MIN(CASE WHEN RowNDue = 3 THEN TotalDue END)AS TotalDue3,

MIN(CASE WHEN RowNDue = 4 THEN PurchaseOrderNumber END) AS POrder4,

MIN(CASE WHEN RowNDue = 4 THEN TotalDue END)AS TotalDue4

FROM cteOrders

)

Once the above function is used within the main procedure, we are left with a much cleaner procedure.

CREATE PROCEDURE GetSalesReport_Optimize4

AS

DECLARE @Today DATE

SELECT @Today = '20040724'-- GETDATE()

SELECT Customer.CustomerID,ISNULL(Store.Name,Person.FirstName+' '+ Person.LastName)AS CustomerName,

OrderDue.CustomerTotalDue as TotalDue,

OrderDue.ShippedTotal,

OrderDate,DueDate,ShipDate,

OrderDue.CustomerMinOrderDate,

OrderDue.CustomerMaxOrderDate,

OrderDue.POrder1, OrderDue.TotalDue1,

OrderDue.POrder2, OrderDue.TotalDue2,

OrderDue.POrder3, OrderDue.TotalDue3,

OrderDue.POrder4, OrderDue.TotalDue4

FROM Sales.Customer

OUTER APPLY dbo.GetTotalSumMinMaxAndNextOrderDates(Customer.CustomerID,@ToDay) OrderDue

LEFT JOIN Person.Person

ON Person.BusinessEntityID = Customer.PersonID

LEFT JOIN Sales.Store

on Store.BusinessEntityID = Customer.StoreID

GO

And the timings are looking pretty clean too.

| Version | 1 | 2 | 3 | 4 | 5 | Average | % of Orig |

| 0 | 12,413 | 12,496 | 12,716 | 14,143 | 13,873 | 13,128 | |

| 1 | 10,496 | 10,643 | 10,656 | 11,340 | 11,540 | 10,935 | 83 |

| 2 | 2,170 | 2,230 | 2,296 | 2,340 | 5,120 | 2,831 | 17 |

| 3 | 2,076 | 2,083 | 2,123 | 2,126 | 2,620 | 2,206 | 22 |

| 4 | 1,353 | 1,373 | 1,390 | 1,390 | 1,606 | 1,422 | 11 |

It is worth noting that, although this final step helped in this case, this is a classic case if “it depends”. There is no guarantee that a single read of a table will always be the more efficient over multiple index seeks at retrieving the data. Consider the following simple schema:

Create table Customer ( CustomerId integer not null primary key ) go Create table CustomerOrders ( CustomerId integer not null, TotalDue money not null ) go create index idxCustomerOrders on CustomerOrders(CustomerId,TotalDue)

and populate them with some sample random data

insert CustomerOrders(CustomerId,TotalDue)

Select top(1000000)

abs(checkSUM(newid() ))%1000,

abs(checkSUM(newid() ))%10000

from sys.columns a

cross join

sys.columns b

cross join

sys.databases c

go

insert into Customer(CustomerId) select distinct CustomerId from CustomerOrders

go

You may safely assume that the fastest way to select the min and max TotalDue for each CustomerId would be to :

select CustomerId,

MIN(TotalDue),

MAX(TotalDue)

from CustomerOrders

group by CustomerId

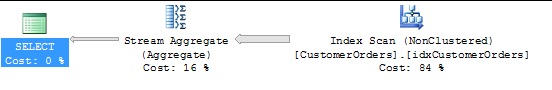

A single scan of data of the entire table has been used. Each row in the table has been considered as a possible value for both min and max aggregates.

Naturally, to resolve the query we are only interested in two of the one thousand(ish) rows per customer, in fact we want only need two thousand of our one million rows to answer the query.

We can drive SQL Server to look at the minimum amount of data required by rewriting the query as:

Select CustomerID,

MinTotalDue.TotalDue,

MaxTotalDue.TotalDue

From customer cross apply (Select top (1) TotalDue

From customerOrders

Where CustomerOrders.CustomerId = Customer.CustomerId order by TotalDue) as MinTotalDue

Cross apply (Select top(1) TotalDue

From customerOrders

Where CustomerOrders.CustomerId = Customer.CustomerId order by totalDue desc) as MaxTotalDue

After executing both queries and comparing the costs the cross-applied query takes a third of the duration (200ms as opposed to the 600ms required for the min/max query).

This, at first, may seem counter-intuitive. Not only are we reading from 2 tables but we are hitting one of those tables twice. The reason for the improvement is that now we can fully exploit the index to provide us with the answer and therefore only look at 2 rows per customer rather than have to pick the required values after scanning though all possible values.

I hope that, if nothing else, these articles have given you some food for thought and that you now have a few more ideas on how to optimize your own poorly performing code.

Many thanks must go to the SQL Server community in general, but more specifically to those who have given their free time to proof read these articles.