I've written several articles about jobs over the past few months, beginning

with Managing

Jobs - Part 1. In this final installment, I want to show you some ideas you

can use when deploying larger, more complex jobs.

At some point you reach a level that requires you to move beyond simply

putting TSQL or VBScript in a job step. It could be that your task requires more

than the 3200 characters allocated for a step, or that you need richer

functionality than either language provides, or maybe you need to have the job

under source control. In practice I think if you have more than a 1000

characters you should probably think about repacking your code - but it works,

so I'll leave that decision to you.

My language of choice is VB6 or VB.Net. The language itself isn't critical,

most languages will support features similar to those I'm about to discuss.

The first choice is whether to package your code as a DLL or an EXE. There

are two reasons to put your code in a DLL:

- You want to reuse the code in another job or application (or you are

already using it in another job/application). If you can reuse code, don't

pass up the opportunity!

- You want to leverage the very nice feature of VB6 that allows you to

suppress any dialogs that might pop 'accidentally' while the dll is running.

- DLL's are the container for objects, which in turn have properties. If you

need to be able to tweak various settings, calling the DLL using VBScript

let's you easily do the tweak by setting properties in the job step.

If you put the code in a DLL, there are headaches associated with that

decision:

- You'll have to terminal service to the server to unregister/re-register

updates to the DLL.

- In some cases you'll have to stop/start the SQL Agent to get the change to

take affect.

- If you want to call the code from a procedure, you'll have to use the

sp_oa~ series of procedures.

There aren't many downsides to EXE's. You compile, deploy by copying to the

server, run. One nice thing about this is that if you want to run it from a

procedure, you can just use xp_cmdshell (assuming you have permission to do so).

If you need to do some run time tweaking you either have to pass the information

in on the command line and parse it (doable, not always intuitive to user new to

the application), or put it someplace where the app can read it - registry,

table, or ini file maybe.

There are a few things to think about when you write code that will run as a

job, particularly if it will run via SQL Agent. I think the most important is

that while the application is running as a job, the UI is inaccessible! If your

application displays any type of modal dialog (including a message box), the

application is stalled. You have to find and kill the process, and/or stop SQL

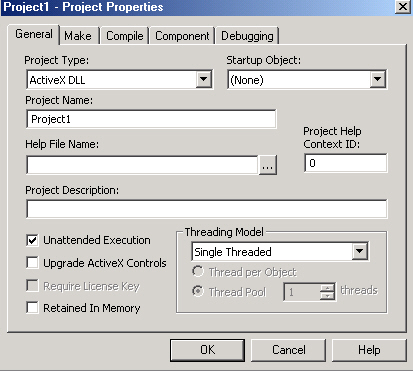

Agent. As I mentioned earlier, if you use VB6, you can avoid this by checking

the box shown below:

If you're not clear on this, build yourself a simple 'Hello, World' app that

displays a message box. Run it on a test server via the Agent and see

what happens.

Just because the UI isn't visible when the application is running doesn't

mean you can't have a UI. If your application will occasionally need to be

reconfigured, a built it UI for making the changes is a very nice feature. The

trick is to make sure that the application runs in the correct 'mode' when you

start it. I control the mode of EXE's using command line switches as follows:

- /RUN This means run the process

and exit automatically when done.

- /CONFIG Displays any available configuration settings

via a built in form.

If no switch is passed, basically nothing happens. The app just immediately

exits.

In VB.Net, I typically build my jobs as console apps. Console apps are meant

to mainly run without a UI (though they can have one) and have the ability to

write to the 'console' - the old DOS command screen type display. The nice part

is that if no command parameters were passed, I write all the possible options

to the console - and they show up in the job history. It's possible to write to

the console using VB6, but it requires several API calls.

VB.Net also supports setting an errorlevel (remember DOS batch files) using

the system.environment namespace. Anything besides a zero will cause the job to

indicate it failed. If no valid switch is passed to the command line or

something else goes wrong during a valid run, I set the errorlevel to -1 so that

the job will fail and I can use the built in notification of SQL jobs to let me

know it did fail. Again you can do this with VB6, but it requires an API call.

Logging can be a big deal in a job. For simple jobs you can just run it and

rely on sysjobhistory. For intermediate level processes, you might want to log

to a standard error/info type table shared by all jobs, something you can scan

easily to see what went wrong. At the highest level you might need a separate

logging system so that if a process fails, you can figure out what happened and

how to get it started again.

Not every process can run as one big transaction, sometimes it's better to

get half done and fail, or fail a particular step/segment and continue, than it

is to have all or nothing. That's a decision you have to make for each job.

You also need to consider how you'll handle versioning and deployment. Let's

assume that the code is under source control - fair assumption? For DLL's,

versioning concerns include whether you'll break the interface, do you have

other jobs that use the original interface? Even with code under source control,

I typically rename the existing dll/exe (process1.exe becomes

process1_0701.exe), then copy the new build to the server. Even with source

control there is no guarantee that you can recreate the exact build requirement

if you had to do so in a hurry, better to have the original binary. You may need

to copy various dll/ocx/other miscellaneous files to the server. You can do it

manually, but I recommend that you take the time to package every application

job into a real installable msi package.

Jobs are one place where patterns pay off in a big way. Build your jobs the

same way each time, standardize how they run, how they fail, how they log, and

how you deploy. What doesn't seem like a big deal when you write the first job

or two suddenly gets to be a very big deal when you have several hundred.