Large Analysis Database Migration

The aim of this report is to share lessons learned when migrating a large SQL Server Analysis Database with hundreds of gigabytes in size, with hundreds of users, and thousands of user queries from version 2000 to 2005.

It describes how:

- MDX syntax and design of the analytical database changed

- ProClarity repository with user queries was updated

- Analytical Processing was designed

- Utilities were useful in the migration process

Source system description

The system is composed of data warehouse users accessing data through the ProClarity Analytics Server 6.3 (PAS) which stores about 20,000 user queries, or, via the ProClarity Desktop Professional. PAS is a web application that enables advanced ad-hoc queries (such as slice & dice, filtering, top, decision trees) and supports some publishing features. Its repository is stored in a SQL Server 2000 database.

Microsoft Analysis Services (SSAS) 2000 is the server component for OLAP queries. Some MDX reports are accessed through Reporting Services 2000. Only a few users access the Oracle relational layer via SQL or report on Reporting Services.

The source system reached its maximum capability in both data processing and data querying. There are certain queries which take more time than would be optimal. Data processing is based on partitions (yearly, quarterly, monthly and weekly). Processing of aggregations continue during querying time as a lazy process, ultimately degrading query performance.

From the hardware point of view, Analysis Services was run on a 32bit Windows 2003 Server EE with 16 GB RAM and 8 processors.

Target system

The end client applications did not change, but the analytical database has been subject to many changes which are not visible from the business users' side but were necessary when the server platform changed to the Microsoft Analysis Services 2005.

The main drivers of the changes featured enhanced monitoring, new processing capabilities (e.g. new ProcessIndex method), supportability and scalability. Tracing, which proved to be quite challenging with SSAS 2000, has become easier as we now use Profiler or Activity Viewer. We should also mention that the support of the 2000 SQL server ended in July, 2007, and that there will be easier migration to 2008 version from 2005 than from 2000 version.

In the near future the target system will use more MDX reports in Reporting Services than the source system. More complicated queries could be optimized under the Reporting Services control.

The target server for Analysis Services is Windows x64 EE with 32 GB RAM and 16 processors.

Migration tasks

Current migration differs from our other migration projects due to its OLAP complexity (type of calculations, strong usage of virtual cubes), PAS component and processing design.

The paradigm change of Analysis Services 2005, for example, does not offer virtual cubes. Analysis Services perspectives are not fully compatible and we often miss the security functionality of virtual cubes.

Calculation complexity is derived primarily from a special dimension type (calculation modifier) which is used in measures calculations to change the meaning of a measure. For example, the modifier member called [Month To Date] changes the values of presented measures from [Standard] to the its MTD. Currently we have about 160 modifier members (Time functions from primitive to rolling averages, Indexes and differences, Balance functions that give you the Closing/Opening balance etc.)

With user queries there is often no single requirement during the migration task - users simply recreate their ProClarity views from the ground. However in this case the PAS source system contains 20,000 user queries and its migration is one of the goals of the project.

Analysis database processing in the target system must be able to process more than once per day. The object model of SSAS 2005 changed from SMO to AMO and offers close to full control of the data processing.

Model of PAS entities

From wide functionalities of PAS our efforts were focused on the so-called Briefing Book Server. To better understand the solution approach we need to describe some its components.

A user query in PAS is not a simple MDX statement - it is classified as a Page organized under a Book and Library. The Superclass for a page is a Briefing Book Element. In addition to this there are BookElements, Books, Libraries and MDStores tables in the relational repository database.

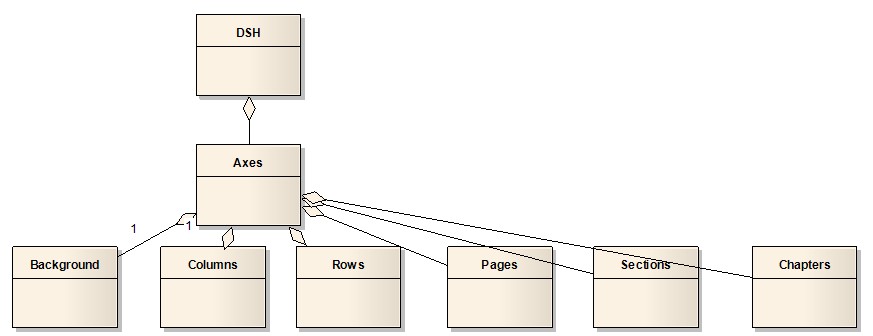

This structure is illustrated below:

Figure 1 - High-level structure of the PAS Briefing Book Server elements

Pages and books have a more detailed structure as can be seen from the PAS documentation. These structures can be accessed either via the PAS object model or via XML which is stored in the tables mentioned above.

MDStore objects should contain metadata from all pages within a book. Our experience shows that MDStore can occasionally be ignored as we did with the BookCreator application described later.

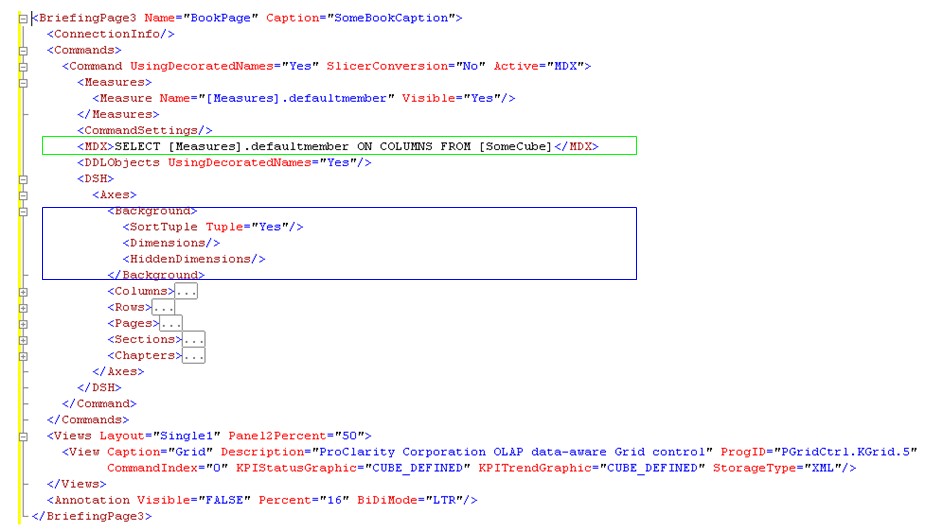

When exploring a BriefingPage (or simply a page) there is a high priority section called Commands which always holds one command. Each command is defined via an MDX query (green rectangle in figure 3) and has some additional attributes that are used in the PAS web pages, such as when showing which dimensions are combined in the background (blue rectangle in figure 3). These attributes are visible under the tag DSH (Data Source Hub) and its cardinality is always 1:1. So each page has exactly one command object (with MDX as a property), one object referenced to the Axes class and one object referenced to the Background class, but it can have many dimensions lying under it. The basic illustration is shown in figure 2.

Figure 2 - DSH container of MDX and its attributes.

Figure 3 - A very simplified BriefingPage XML

Marking of user queries

Neither our customer nor Adastra believed that all 20,000 queries on PAS have equal importance. Together we defined a rule for an active user which we applied on the SSAS query log. Inactive user queries were not migrated.

The next step was to validate the remaining MDX queries. For this, we developed a small C# utility (called ASCMD2000). We could not allow a linked server to the SSAS because many queries were longer than the SQL Server's maximum length (longer queries than 8000 characters returned Msg 103 error).

The ASCMD2000 utility supports running on both the 2005 and 2000 SSAS versions (MSOLAP.3 or MSOLAP.2 provider) by measuring the duration of a query, exporting its result into a text file and producing a checksum.

The ASCMD2000 job was scheduled in an SQL Server agent and its results were stored in a SQL server table. It turned out to be a time-consuming task (when not running in parallel we were able test about 50 MDX commands per hour) and some successful commands in ASCMD2000 were not similarly successful in ProClarity. The main error issues we faced were connection problems or missing member errors. The older the query, the higher the probability of quoting a non-existing (existing with a different parent when using a composite key) dimension member.

Finally we filtered out 40 percent of the queries as being obsolete or invalid. The extra output of this marking step was the OlapQuery log which we used for Usage-Based-Optimization on the target system.

MDX syntax changes

The aim of a PAS queries migration can be broken down into an MDX queries migration with their upload returning back to the PAS server. Once again, it didn't turn out to be a simple task and what was more surprising was the lack of comments on the MDX syntax changes.

There are 2 major changes in the MDX syntax between the SSAS 2000 and 2005 implementation:

- Format of the date key columns

- Notation in composite key columns in dimensions.

Date time formatting in MDX 2000 is national characters specific but MDX 2005 is international. Consider the following examples:

--2000:

[Time].[Monthly].[Day].&[16.7.2006]

--2005:

[Time].[Monthly].[Day].&[2006-07-16T00:00:00]

To successfully migrate the date time issue, you need to take a regular expression replacement. We had to develop a quite sophisticated utility on the basis of regular expressions that was configured via XML (for composite key syntax shown later). The regular expressions in TSQL must be implemented via .Net assembly because it is not implemented in TSQL. We also found that the .Net deserialization is somehow not supported in the SQL Server 2005 hosting environment.

To demonstrate the composite key notation issue, let's consider a dimension name [Dimension] with a hierarchy name [Hierarchy] that has the following structure: [Level1], [Level2], [Level3] where each level has its LevelXField key column. When referring to a dimension member we could (this is a general case of ProClarity) use this 2000 key column syntax:

[Dimension].[Level1].&[Level1Field].&[Level2Field].&[Level3Field]

When referring to the same member in 2005 syntax you should use the following key column path:

[Dimension].[Hierarchy].[Level3].&[Level1Field]&[Level2Field]&[Level3Field]

Note that the key values are in 2005 quoted with its level in 2005 (in our example the Level3), and the composite values in the path are not separated with a full stop - it is not a typo in the above example.

Key columns (the attribute that determines the level) are handled in a different way in both versions. In the 2000 version, a column has only one database attribute as a key column but in the 2005 version the whole collection is supported. During dimension design you have to carefully take composite keys design into consideration as you get different results in your queries across a dimension with a composite key. In case of duplicity, SSAS 2005 takes the first occurrence of the key value (in fact not a key if it leads to multiple values based on name columns) and all members with that level key are its descendants. SSAS 2000 takes as the composite key the whole path across all levels.

Performance recommendations are against the usage of composite keys so be forewarned that the issue with the composite notation can be more complex - you can, for example, replace composite keys with an integer representation. For instance, 200712 could be represented as YYYYMM.

Testing approach

During the migration we developed and frequently ran a set of tests which always included scripts: one for the source (production) environment and the second for the target (migrated) environment. Test frequency depended on data consistency - we ran tests after we had comparable data on both environments with the ASCMD2000.

The first set of test cases was for dimension compatibility. The results for an AllMembers script for a production database should be the same as the database for the development server with exceptions occurring when a dimension design has changed. As mentioned earlier, the dimension tests showed some interesting issues regarding key columns.

The second set of test cases was developed for measures results.

Time tests confirmed the correct SSAS and hardware design. Even without a special aggregation design, the target system querying time was almost 3 times shorter than with the source system - of course the source system was impacted with some production users.

Structural changes in analytical database

Most structural changes were technically driven. For example, virtual dimensions can now be bypassed using dimension attributes, however, when a dimension has more than one hierarchy or additional attribute (behaves as an additional hierarchy) each member or level must quote a hierarchy. This aspect certainly has a significant impact on existing user queries.

We subsequently developed a replacement facility using a combination of an old text and a new one. A priority can be specified when some replacements must happen before another one.

The greatest effort required in SSAS redesign concerned calculated members due to the aforementioned modifier dimension. For these modifiers we decided to use the SCOPE statement whenever possible. Our findings showed that SCOPE is sometimes difficult to predict or is simply unreliable. For example, we wanted the modifier dimension to have all physical members coming from a table, but during the implementation it turned out to be all calculated members.

Our other note concerns the position of the Scope statement in the whole script. There seems to be several stereotypes when a functioning Scope stops working. These problems are often solved by reordering calculations.

We fine grained some measure group partitions on the target system, and, of course, their time slice attributes. The processing performance of SSAS 2005 is brilliant. We did not even use the ProcessAdd partition method, instead we combined ProcessData with ProcessIndex as recommended in Microsoft Processing Best Practices http://www.microsoft.com/technet/prodtechnol/sql/bestpractice/ssaspbpr.mspx

Functions of perspectives could be changed in future versions of SSAS. The source system has many virtual cubes and these were entry points for user access. On the other hand, user access on physical cubes was not allowed. Perspectives have no security functions so we only used their projections with default measures as set by source system. We solved the missing functionality of calculated measures like default member with a new physical measures overridden with a calculated value in a SCOPE statement.

Source system used virtual cubes also for security restriction to some calculated measures. Users with access to the virtual cube can see its restricted (private) measures. We used a workaround instead of a Cell data security feature of SSAS. The restricted calculated measure is checking whether a user has access to a dimension member restricted with Dimension security which works fine.

PAS queries update

Our task was to change the user queries (about 12 000 BriefingPages) with minimal impact on user side.

At the beginning we wanted to use replacement facility not only to MDX queries (property of the Command object in the BriefingPage object) but on the whole BriefingPage and MDStored object (via its XML interface). It turned out to be too complex and our understanding of XML interface was not 100 %.

A solution based fully on the PAS object model approach was as time consuming as when running all queries during the Marking phase mentioned previously.

The combination of direct database modifications with PAS object model modification proved to be the most suitable. We developed another utility called BookCreator, based on the ProClarity desktop components (ActiveX) which we used to connect to the PAS server, create books, amongst other things. On the whole it enabled us to:

- Create flat BriefingBooks with folder-free pages

- Add BriefingPages and MDStores into a flat BriefingBook from a set of MDX queries belonging to one book

For long running MDX we used a fake query that was updated on the SQL Server repository with the target 2005 MDX. For this replacement we had to develop another utility that could replace the BookElements.Xmldata attribute which uses NTEXT data type on an MS SQL Server. Our test with UPDATETEXT and WRITETEXT commands failed due to time and disk space consumption: a change of 1000 records took 3 hours and 50 GB. Our final solution resulted in another utility (BlobUpdator) based on ADO 2.8 with the ADODB.Stream object. This modification required 20 minutes and the repository grew by 300 MB!

The flat structure of newly created books was changed via a TSQL stored procedure that created BookElements of folder type and did other updates (author, library etc.) all based on the production version of the Books.

Processing workflow application

Specific requirements led to the development of another utility in C# (OlapProcessor) where data changes come from special Oracle tables. These interface tables contain data about what type of change occurred in a dimension table, or, for which date the fact table records were added.

Data change types in a dimension table lead to a dimension processing either with the ProcessUpdate or ProcessAdd method. Partitions are reprocessed in parallel with ProcessData and ProcessIndex as mentioned earlier. Whenever there is not an existing partition for the required date, a new partition is created (with the correct where and slice condition). Currently we have daily, weekly, monthly and yearly partitions. During the idle time aggregations reprocessing is made.

The processing is heavily based on AMO and it uses CaptureLog object. We introduced a workaround in setting the MaxParallel tag that is accessible only as XMLA and in detecting which aggregations were invalidated during a dimension ProcessUpdate method.

Conclusion

SSAS 2005 is worth the change. The processing performance of MOLAP is brilliant and it is almost fully under the control of the AMO with the exception of MaxParallel or invalid aggregations detection. Queries with poor performance can be optimized (e.g. for well designed calculated measures with SCOPE) but it occasionally cannot be done on ad hoc client side (ProClarity, Excel) - you can use great Nonempty or subselects in Reporting services.

You should expect a significant effort during MDX upgrade whose syntax was slightly changed and it is not under the migration wizard control. This is particularly difficult if you have a repository of queries such as PAS. Also be aware of a few changes in composite keys or missing features like security restriction via virtual cubes.

Robert Srna, Adastra Corporation, 2009

With special thanks to Andrea Uggetti from Microsoft and Steve Jones from Sql Server Central.

Here are some useful links to follow:

Analysis Services Processing Best Practices

http://www.microsoft.com/technet/prodtechnol/sql/bestpractice/ssaspbpr.mspx

Partition Slicing in Analysis Services

http://sqlcat.com/technicalnotes/archive/2007/09/11/ssas-partition-slicing.aspx

Great SSAS Performance Guide

http://www.microsoft.com/technet/prodtechnol/sql/2005/ssas2005perfguide.mspx