Problem

Your SQL Server environment has millions of obsolete rows that need to be removed but you can’t impact the business and there is no maintenance window. How do you remove those rows with no business impact and avoid getting your boss on a tirade? What little known SQL tricks can you leverage to ensure a quick and non disruptive cleanup?

Solution

Building upon my earlier article published in SQLServerCentral, Large Table Clearnup with Minimal Locks, I have added to the small batch concept discussed there one key improvement: A coding trick to allow very efficient deletions by ordered primary key. Read on to discover how this works.

Background

For this article consider a 400 million row table called Event with 330 million obsolete rows. The primary index is on identity column EventID, figure 1.

CREATE TABLE [dbo].[Event](

[EventId] [bigint] IDENTITY(1,1) NOT NULL,

[EventStatusId] [int] NOT NULL,

[EventDuration] [float] NULL,

[EventCloseDate] [datetime] NULL,

CONSTRAINT [CUPK_Event_EventId] PRIMARY KEY CLUSTERED

( [EventId] ASC ) ) Figure 1

Since the column, EventId, is of type identity, it always increases in value upon insert so the oldest rows are the first ones inserted. If you select top 10 * from Event order by EventId you always get the ten oldest rows. This will come in handy.

How can you remove 330 million rows with very little impact? If you attempt

DELETE FROM EVENT WHERE EventCloseDate < ‘yyyy-mm-dd’

the result will most likely be angry users due to all the lock contention. Operations won’t like you much with this approach since EventCloseDate could take up significant IO and CPU since has no index defined on it. Wouldn’t it be great if we could delete by the primary key, EventId, and use its index? Is this valid SQL?

DELETE TOP 1000000 FROM Event

Regrettably no. However using a hidden trick and a quick three step process you can accomplish the same thing.

The process is:

- Create a view to force row deletion by ordered primary key

- Create a store procedure to loop through the table deleting in small batches

- Setup a SQL job for nonstop execution.

Step One: Begin by using a hidden trick. This trick is to create a view to selected the top 2000 rows ordered by the primary key identity column (see figure 2). This view will be the target of the delete statement. The ‘order by’ ensures the rows are the oldest for the delete to follow and lets us add an order by to a delete, something SQL will not allow.

CREATE VIEW [dbo].[VEventCleanup] AS (SELECT TOP(2000) FROM EventTracking.dbo.Event ORDER BY Eventid )

Figure 2

I keep the number of rows selected small (2000) for each transaction. You should experiment with this number to find the best fit for your shop. Remember the probability of contention issues increase as this number increases.

Step Two: Create a store procedure to loop through the table deleting rows in small batches, as shown in figure 3. As described in my previously mentioned article, take a large number of rows to delete, say 330 million, and delete them in small batches. Keeping the number of rows to delete below 5000 avoids lock escalations and limits the amount of time exclusive locks are held. This is the key to avoiding contention.

First set the maximum number of rows to delete. In this case a count of rows with column EventCloseDate older than 6 days. The 6 days is an arbitrary value, so substitute a value which works for you.

The code loops through the table deleting 2,000 rows in each transaction. This continues until all the table rows are deleted that are less than the maximum value.

USE [EventTracking]

GO

/****** Object: StoredProcedure [dbo].[usp_VTrickleDeleteEvent]

Script Date: 12/15/2012 22:23:44 ******/SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

/********************************************************************

File Name: usp_TrickleDeleteEvent.sql

Applies to: SQL Server 2008 R2

Purpose: To remove obsolete rows with minimal impact

Returns: 0 = Succesful, 1 = Errors.

Author: Edward A. Polley

********************************************************************/

ALTER PROCEDURE [dbo].[usp_VTrickleDeleteEvent]

AS

SET NOCOUNT ON

SET QUOTED_IDENTIFIER OFF

DECLARE @N INT -- Row count for each transaction

DECLARE @cnt INT -- Total row count for this run, can be hardcoded

DECLARE @loops INT -- Number of transactions

BEGIN

SET @N = 2000 —-must be same value as rows selected in view

SELECT @cnt = COUNT(*)

FROM [EventTracking].[DBO].[Event]

WHERE EventCloseDate < GETDATE() - 6 -- remove rows older than 6 days

SET @loops = CEILING(@cnt/@N) -- transactionHow many times to run

WHILE @loops > 0

BEGIN

BEGIN TRAN RemoveEvent

DELETE FROM VEventCleanup –- delete from view with order by

SET @loops = @loops – 1 -- decrement @loop

COMMIT TRAN -- COMMIT to release locks

END

END Step Three: Create a SQL batch job. At 330 million rows this job will take days to complete, but the good news is there is very little contention, I run cleanup jobs right through the busiest processing window with no issues. Try experimenting with the @N variable. The larger this value is, the fewer loops, but the higher the probability of timeouts. Remember this @N value must match the Top x statement in the view.

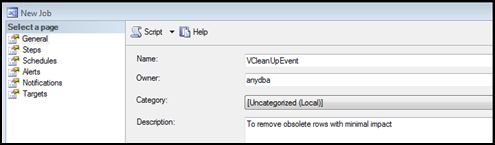

The wasiest way to create the new job in Management Studio is by expanding SQLServer Agent folder and then right clicking Jobs folder. The General tab might look like this:

Figure 4

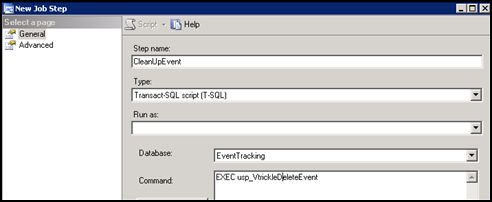

Then click Steps tab and enter the name of the stored procedure from step 2 above. Set the Type of job to TSQL and the Database to EventTracking. The New Job Step tab might look like this:

Figure 5

Save this and you are done.

In three steps you’ve created a job to remove obsolete rows with very low contention. Give it a try, I think you’ll find is removes rows with so little contention even your cranky boss won’t notice.