Ever wanted to INSERT and SELECT the same set of rows at the same time? Or maybe DELETE a bunch of rows and back those same rows up to another table in the same command? Turns out it's not that hard.

The OUTPUT clause can be used with INSERT, UPDATE, DELETE and MERGE to pull back any data modified. It does this by giving us access to the INSERTED and DELETED system views. If you haven't ever worked with them before, you typically see them when working with a trigger. INSERTED is all new data, either inserted or the updated version of the data after an UPDATE statement. DELETED is all of the old data either the data removed by a DELETE or the old data from before it was updated by an UPDATE statement.

Let's try an example. Say you want to archive a few hundred rows from one table into another.

-- Create an archive table

SELECT TOP 0 SalesOrderId,

CAST(SalesOrderDetailID AS Int) AS SalesOrderDetailID,

CarrierTrackingNumber,

OrderQty,

ProductID,

SpecialOfferID,

UnitPrice,

UnitPriceDiscount,

LineTotal,

rowguid,

ModifiedDate,

getdate() AS ArchiveDate

INTO Sales.SalesOrderDetail_Archive

FROM Sales.SalesOrderDetail;Typically we would first insert the data into the archive table and then delete it from the source table. There are a couple of issues with this. First, if anything changes between the two commands we could have rows that are removed but not archived. The simplest way to resolve this is to wrap both commands in a transaction.

That brings up the second problem. Each individual command is wrapped in an implicit transaction, but by using an explicit transaction we have doubled the transaction length.

SET STATISTICS IO, TIME ON; BEGIN TRANSACTION; INSERT INTO Sales.SalesOrderDetail_Archive SELECT *, GetDate() FROM Sales.SalesOrderDetail WHERE ModifiedDate < '2011/07/01'; DELETE FROM Sales.SalesOrderDetail WHERE ModifiedDate < '2011/07/01'; COMMIT;

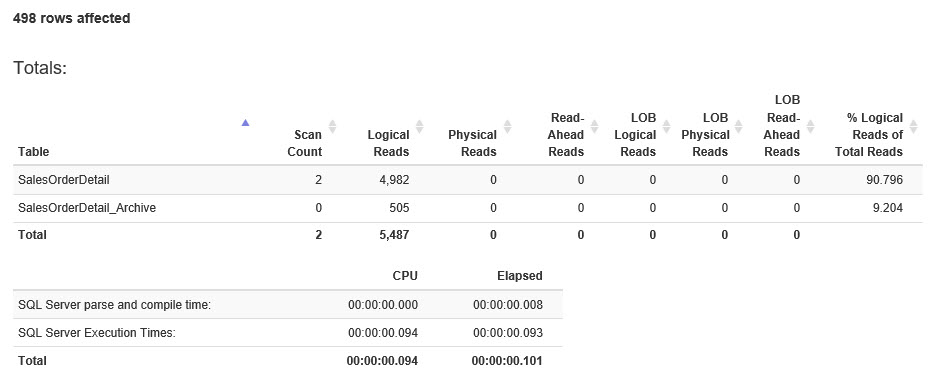

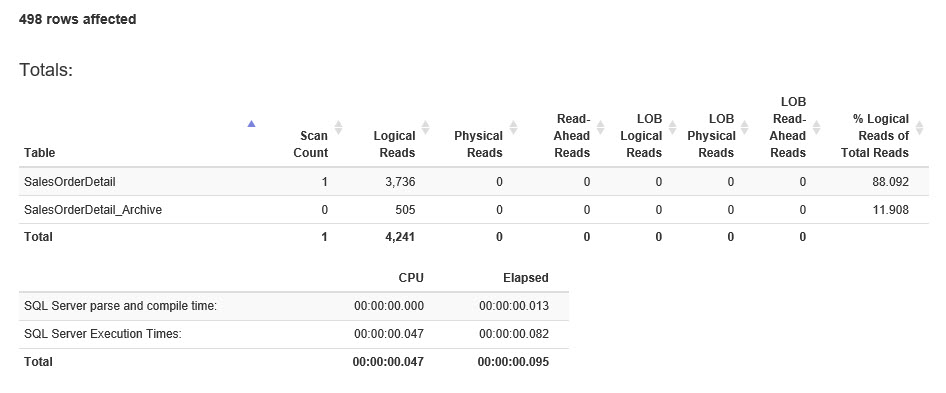

I'm using www.statisticsparser.com to display the statistics output from the commands. One of the nice things about StatisticsParser is that has a summary option. Because statistics output is fairly long, particularly when you have multiple commands, I'm just showing the summary information.

Now let's try it using OUTPUT.

SET STATISTICS IO, TIME ON;

BEGIN TRANSACTION;

DELETE FROM Sales.SalesOrderDetail

OUTPUT deleted.SalesOrderId,

deleted.SalesOrderDetailID,

deleted.CarrierTrackingNumber,

deleted.OrderQty,

deleted.ProductID,

deleted.SpecialOfferID,

deleted.UnitPrice,

deleted.UnitPriceDiscount,

deleted.LineTotal,

deleted.rowguid,

deleted.ModifiedDate,

getdate()

INTO Sales.SalesOrderDetail_Archive

WHERE ModifiedDate < '2011/07/01';

COMMIT;

If you do a little looking you'll see that the OUTPUT version took a bit less IO (reads) and was slightly faster. The total difference in the IO is 1246 pages. This is in fact the number of pages read from Sales.SalesOrderDetail in order to do the initial INSERT, or the SELECT statement. Adding a bit of logic we can reasonably assume that the OUTPUT version will always be less the IO required for a SELECT of the data. So if it's a large query with a lot of IO there will be a big savings. The run time was pretty close but we can also logically assume that the longer the overall query the larger the time savings will be as well.