Problem

We often have complex data requirements that seem to defy an elegant set-based approach. One specific case that often causes difficulty is having to perform calculations between rows of data. For example, we have a data set such as this one:

InvID ActivityCode Date OldLocationID NewLocationID ***** ************ ********* ************* ************ 1 1 1/1/2014 NULL 10 1 3 2/1/2014 10 13 1 3 2/5/2014 13 10 1 3 2/10/2014 10 13 1 3 2/15/2014 13 10 1 3 2/20/2014 10 13 1 3 2/21/2014 10 10 1 3 2/24/2014 10 13 1 3 2/28/2014 10 10 1 3 3/10/2014 10 13 2 1 1/1/2014 NULL 10 2 3 2/1/2014 10 13 2 3 2/5/2014 13 10 2 3 2/10/2014 10 13 2 3 2/15/2014 13 10

We are interested in calculating the days that an inventory item is located in a given location, but want to do so efficiently in a single select statement.

How do we write a query to do this without a WHILE loop or a cursor so that we can retrieve this data quickly and efficiently? What is the best way to relate data in one row to another row before or after it?

Solution

We somehow need to link any given row to the next row and calculate the number of days in between. There are two set-based approaches that we will look at here; the first will work on any version of SQL Server since 2005 whereas the second will only work on SQL Server 2012 and later.

The first solution takes the problem and provides a literal approach. If we can perform a join between one row and the next in the table, then we can easily calculate the result we are looking for.

SELECT

*

FROM history HISTORY_CURRENT_ROW

INNER JOIN history HISTORY_NEXT_ROW

ON ?????Since this data set doesn’t have an identity or reliable primary key, filling in those question marks will require a bit more work. If we use the ROW_NUMBER window function, we can create a surrogate key on the fly, and then use it to solve our problem:

The CTE creates a row number set for each inventory item, ordering by date so that each move is chronological order. We then join the CTE to itself based on InventoryItemID and our newly created row_num to link one row to the next. A LEFT JOIN is used since the last row for each inventory item will not have a next row to join to, and we do not want to inadvertently filter these out. Finally, the DATEDIFF finishes the job, calculating the days in between when our item arrived in its current location and when it arrives in its next location.

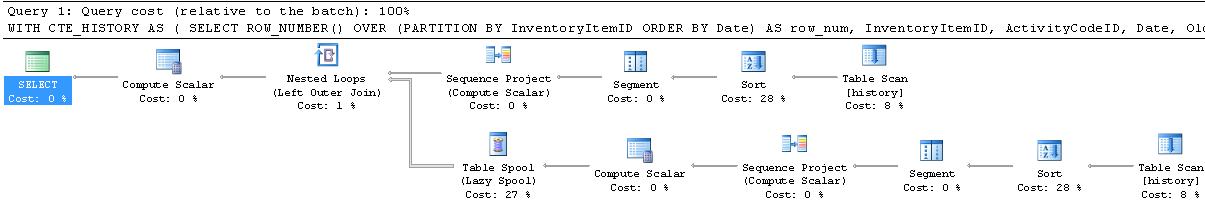

This solution returns the results we were looking for, but it isn’t terribly efficient. A quick look at our execution plan reveals 2 table scans:

IO statistics confirms this and shows the need for a worktable in TempDB to support our table spool operations. For a large data set, this could be a very slow query.

Table 'Worktable'. Scan count 1, logical reads 39, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'history'. Scan count 2, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

If you are using SQL Server 2012, you can take advantage of one of the new window functions to get the same results as above far more efficiently. LEAD and LAG can be used to retrieve a column from a previous or upcoming row using these standard window functions. You can implement LEAD like this:

SELECT

LEAD(Date) OVER (PARTITION BY InventoryItemID ORDER BY Date) AS next_location_date,

InventoryItemID,

ActivityCodeID,

Date,

OldLocationID,

NewLocationID

FROM historyNote that the PARTITION BY and ORDER BY are exactly the same as before. Since LEAD and LAG are treated like standard functions within a SELECT query, you can manipulate them at will to get our days in each location by wrapping a DATEDIFF around the date in the current row and our LEAD date:

SELECT

InventoryItemID,

ActivityCodeID,

Date,

OldLocationID,

NewLocationID,

DATEDIFF(DAY, history.Date, LEAD(Date) OVER (PARTITION BY InventoryItemID ORDER BY Date)) AS days_in_current_location

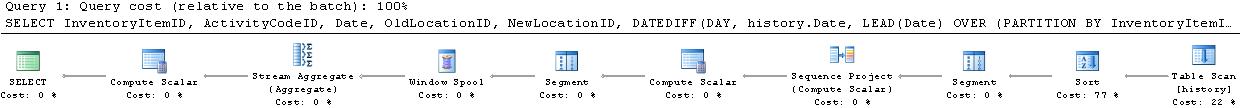

FROM historyBy using LEAD, we avoid the need for a self-join, which greatly improves performance. The result is a much cleaner execution plan and statistics that confirm the reduced reads necessary to calculate our results. The worktable used by the window spool is far more efficient than the table spool required previously.

The query statistics:

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'history'. Scan count 1, logical reads 1, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

As an additional bit of trivia, you can add an offset to LEAD, which will allow you to access data from a row in any given span from the current row. For example, LEAD(Date, 2) would not get the date from the next row, but from 2 rows ahead. LAG(Date, 5) would get the date from 5 rows prior to the current row.

Conclusion

Calculating date between different rows can be done efficiently without resorting to an iterative solution. By utilizing a few SQL Server window functions and DATEDIFF, we can turn a complex TSQL challenge into an 8 line solution. When confronted with a situation where it seems as though no set-based solution is available, consider experimenting with CTEs and window functions as both can allow you to restructure or enhance your data set to include additional useful information.