OneLake Storage

What is a Lakehouse in Azure Fabric? Borrowing a quote from MS Learn - "Microsoft Fabric Lakehouse is a data architecture platform for storing, managing, and analyzing structured and unstructured data in a single location". I think of it as a shared Apache Spark virtual space. I can upload, download, and manage files. I can run Spark notebooks to read, transform and write data. I can create tables and/or views on top of the files. Of course, the delta file format is preferred since it has ACID properties that we Data Architects love.

Business Problem

Our manager at adventure works has a team of data engineers that already know how to use Azure Storage Explorer. How can we manage files in the one lake using this tool?

Technical Solution

Previously, I talked about how to manage files with OneLake Explorer. The problem with this tool is that data is manipulated either on the client machine or in Microsoft Fabric. At a given point, the data is synched between the two. There is no granular control on what files or folders are manipulated. The same steps can't be easily repeated.

Today, we are going to investigate two tools. First, Azure Storage Explorer is a graphical user interface that reminds me of the Cute FTP tool that I used early in my career. Second, the AzCopy executable is the worker that Azure Storage Explorer (manager) calls to get the job done. This program reminds me of the command line version of FTP. The executable is available on both Windows and Linux systems. This opens the door for different operating systems to upload files into Microsoft Fabric.

The following topics will be covered in this article in regard to Azure Storage Explorer.

- connect to fabric

- upload files

- rename files

- rename folders

- download files

- delete files

- delete folder

Because AzCopy is at the heart of the storage explorer, a smaller subset of commands will be executed from the command line.

- connect to fabric

- upload files

- list files

- download files

Azure vs Fabric

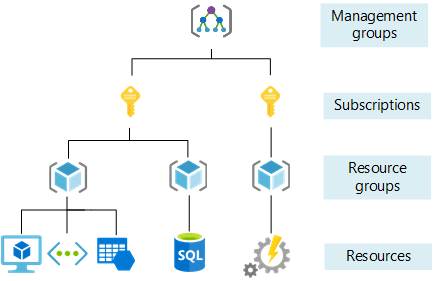

Azure Storage Explorer was built to connect to Azure subscriptions and storage accounts that are owed by your company. In Microsoft Azure, you managed how to deploy and configure storage accounts/containers within your tenant either through the Azure Portal or using Infrastructure As Code. To allow a services like Azure Databricks (Apache Spark) to connect to Azure Data Lake Storage, you must deploy and configure networking and security. The high-level image below shows the relationship between tenants, subscriptions, resource groups and resources.

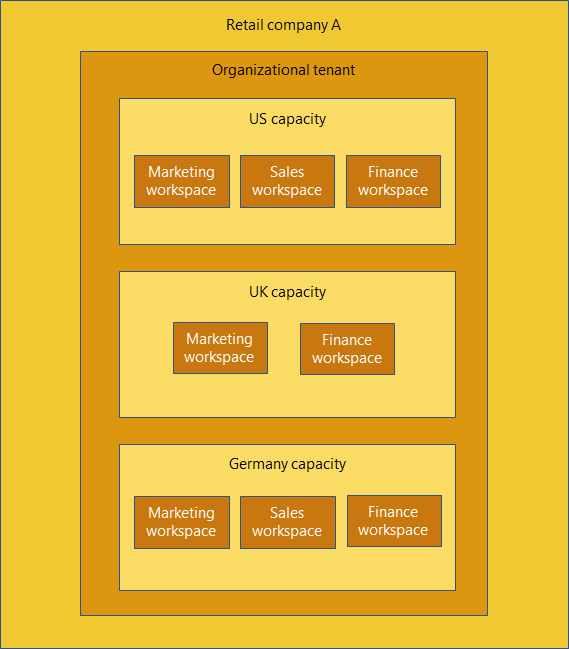

This is a different paradigm compared to Microsoft Fabric which is a service that is mostly configured ahead of time. You can start solving Data Engineering and Analytical problems right away. The three key concepts are: tenant is associated with your company's Microsoft Entra (Active Directory); capacity is reserved computing power for your objects; and a workspace is where objects like Lakehouse or Warehouse are created. The high-level image below shows a company with capacity assigned to three different geographical regions.

Understanding how Azure differs from Fabric is important. This knowledge will be used when we setup a connection to Fabric.

Connect with Storage Explorer

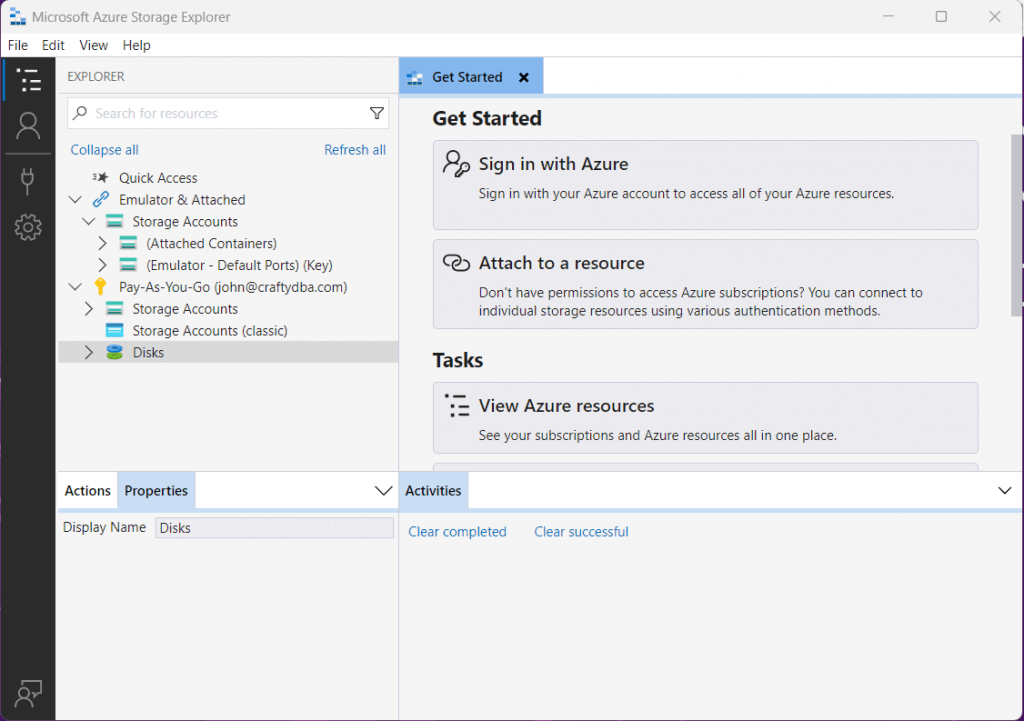

Azure Storage Explorer can be downloaded from Microsoft using this link. The person icon allows you to sign into your Azure Tennant using a given user account. The image below shows the john@craftydba.com logged into Azure. The storage explore can work with both Storage Accounts and/or Disks (virtual hard drives).

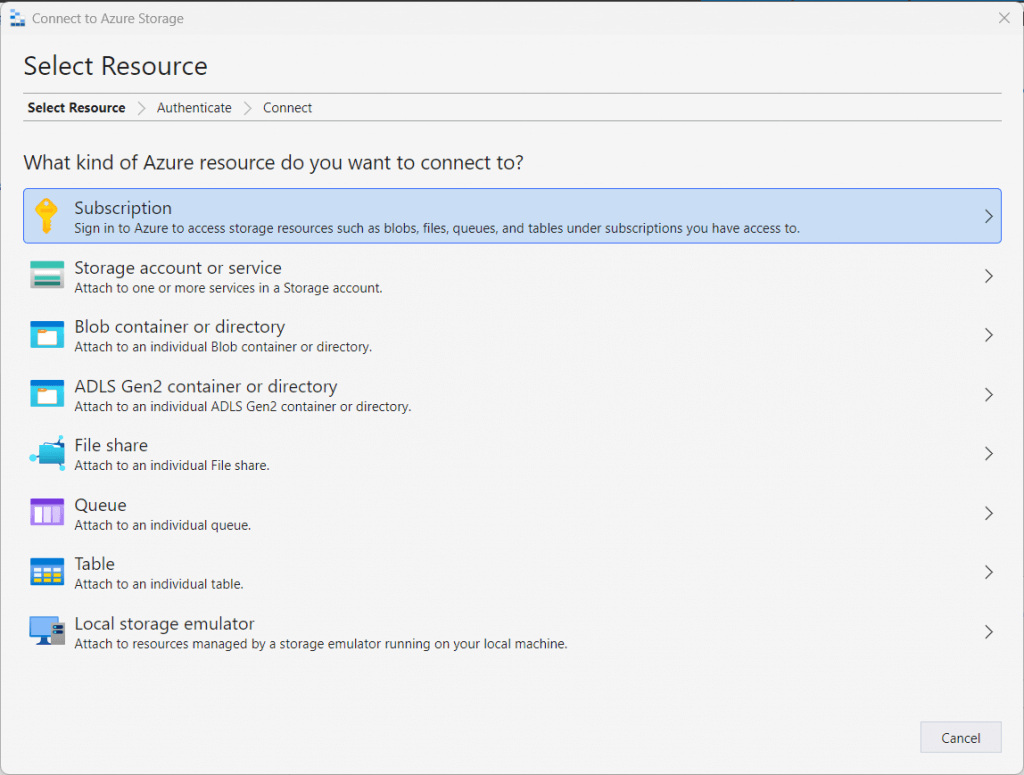

Unfortunately, this type of connection does not work with Microsoft Fabric. Use the attach to a resource button to get started. Choose the ADLS Gen2 Storage container as the resource we want to connect to.

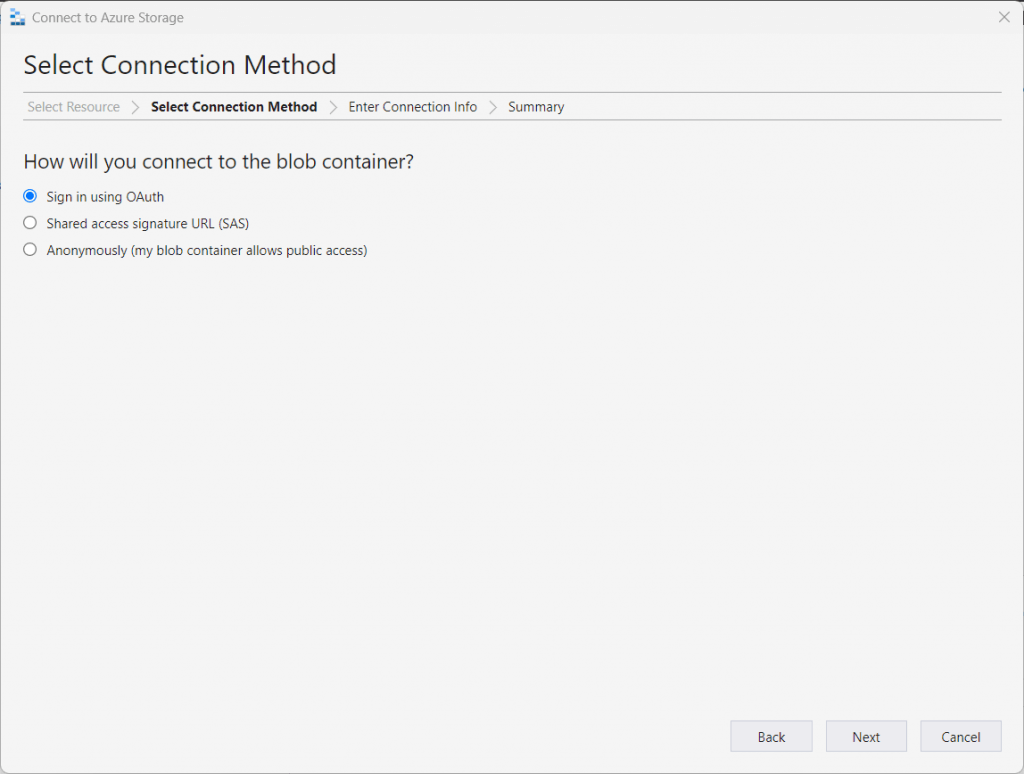

Both shared access signatures and anonymous connects are less secure than OAuth. Please pick OAuth as the connection method.

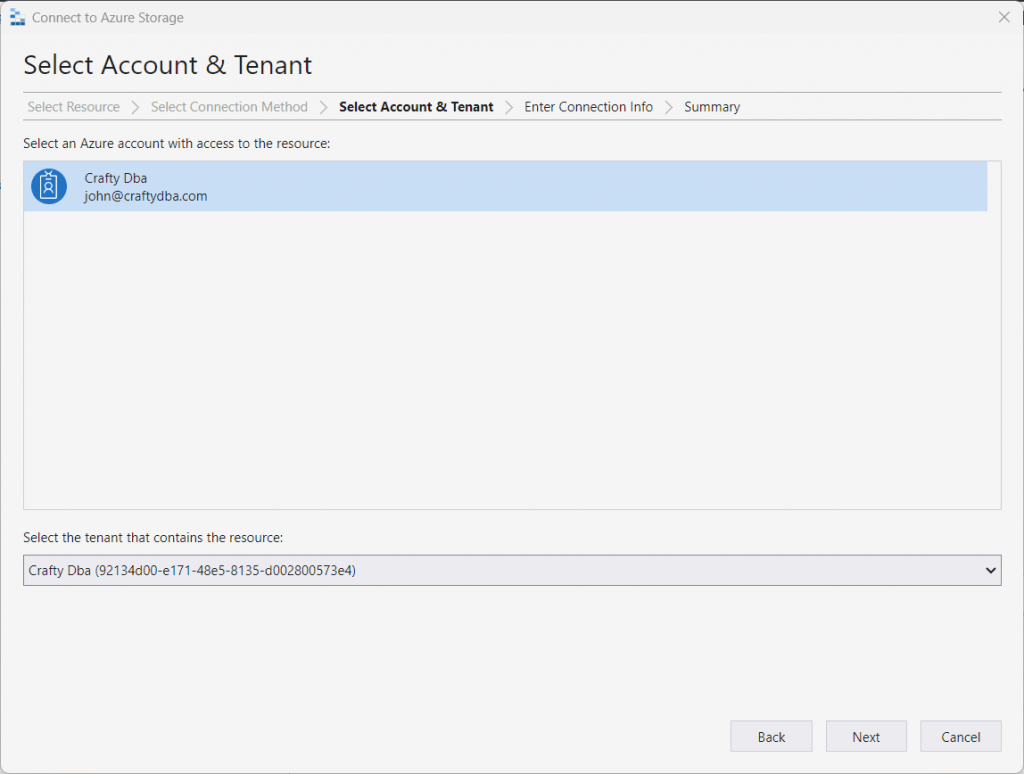

Since I was signed in as john@craftydba.com already, the tool is asking me to use this account.

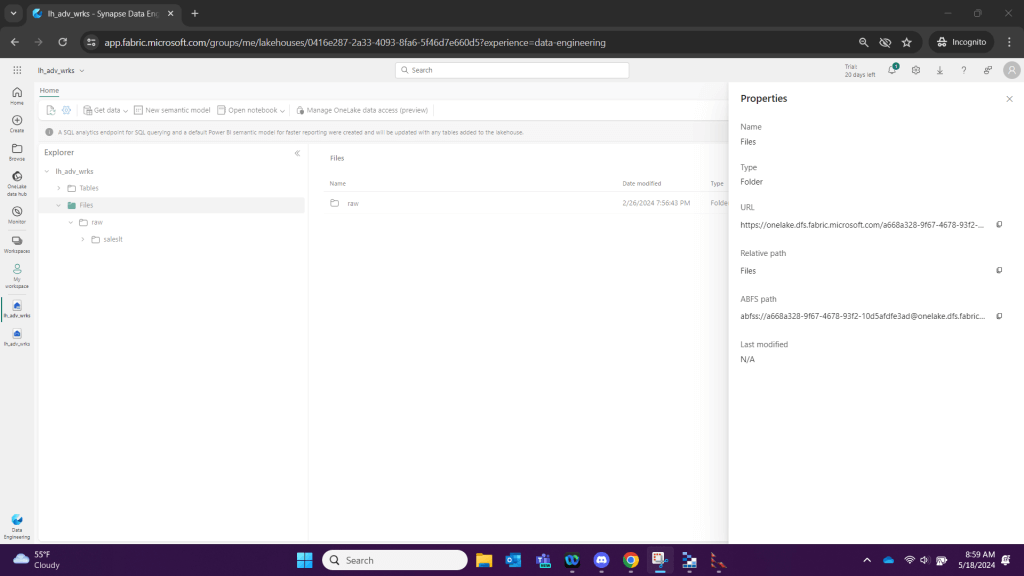

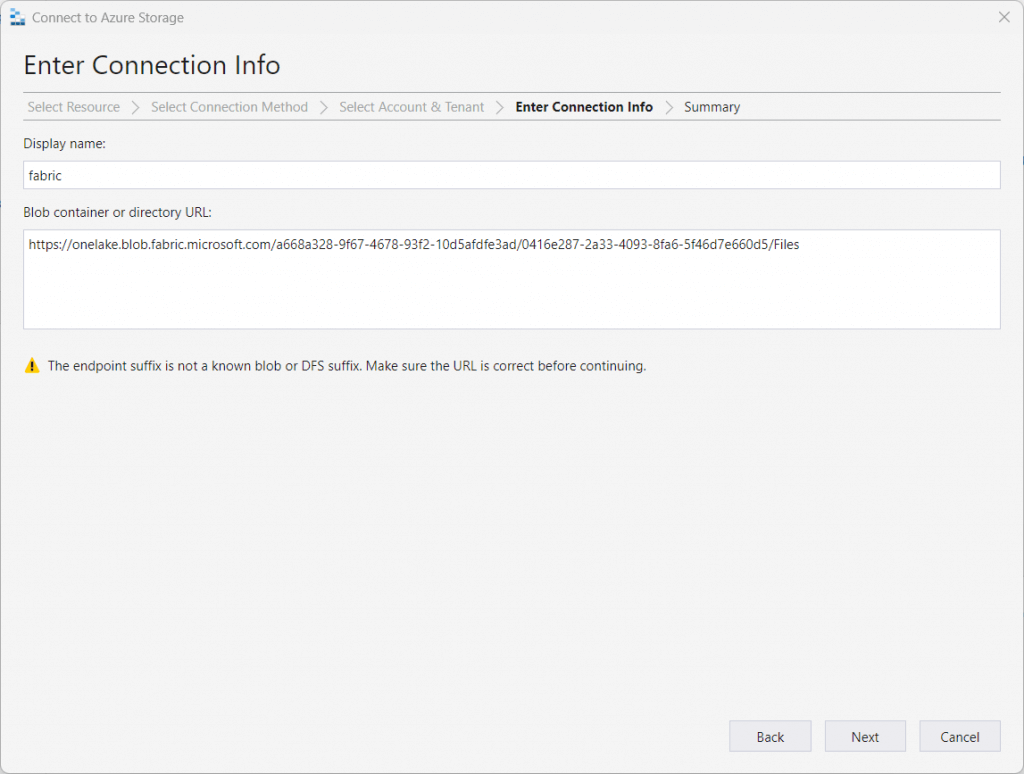

While Microsoft Fabric is new, the objects within Fabric are based upon existing building blocks in Azure. The properties section of the Files folder in the Lakehouse explorer will allow you to retrieve the URL to the Azure Data Lake Storage account.

The summary section allows you to name the connection. Place the URL in the correct text box to complete the action. Here is a spoiler alert: the first GUID (a668a328-9f67-4678-93f2-10d5afdfe3ad) in the URL is the storage account id and the second GUID (0416e287-2a33-4093-8fa6-5f46d7e660d5) in the URL is the Lakehouse id. This information will be important when interacting with the Fabric OneLake using the REST API.

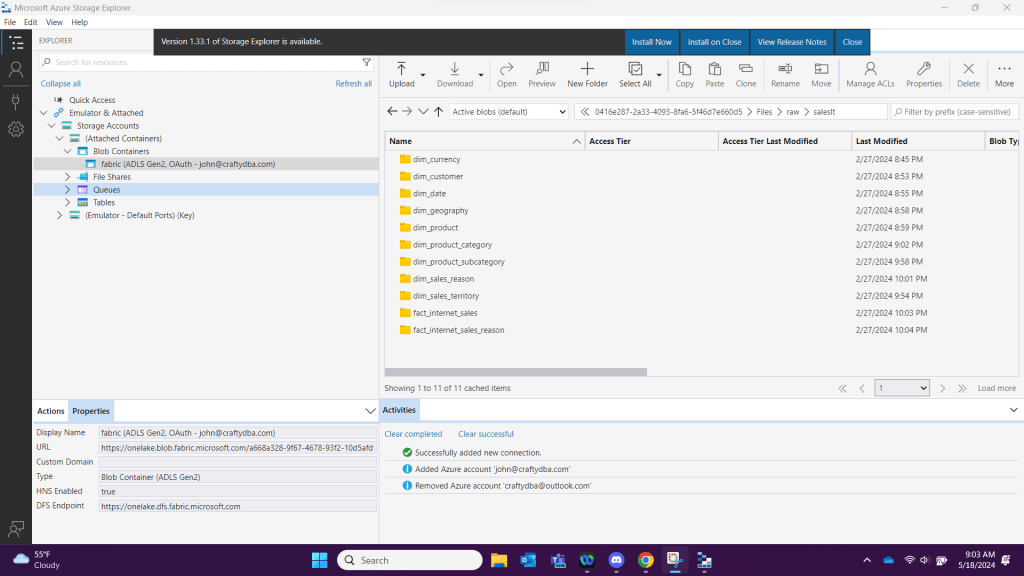

After the connection to Lakehouse storage is established, we can now manage files in the OneLake.

Manage OneLake with Storage Explorer

Cloud services like Azure Data Lake Storage remind me of using File Transfer Protocol (FTP) in the good old days. In this section, we will go over the following tasks that most people use on a regular basis: create directory (folder), upload files, rename files, rename directory (folder), download files, delete files, and delete directory (folder). These actions can be accessed via the ribbon at the top.

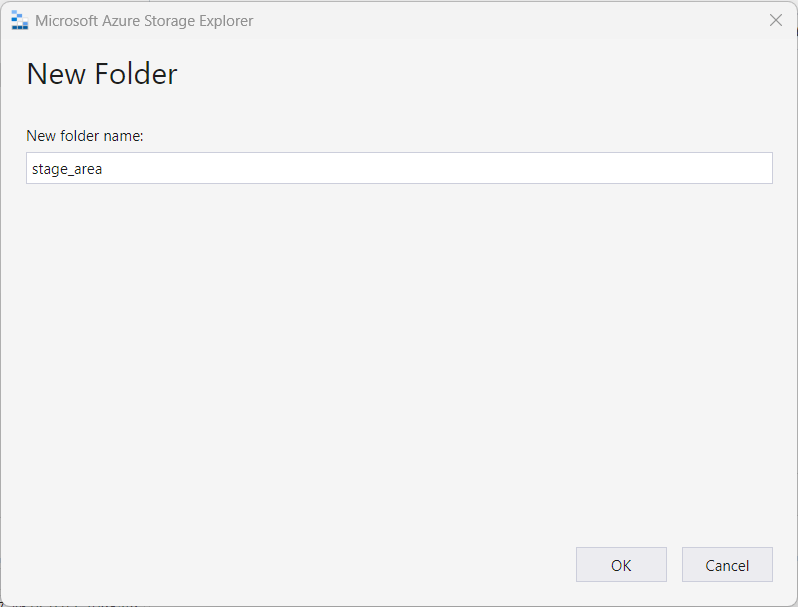

Our manager at adventure works wants us to upload some weather data for the sales team. The first task is to create a new folder named "stage_area" under the "saleslt" folder.

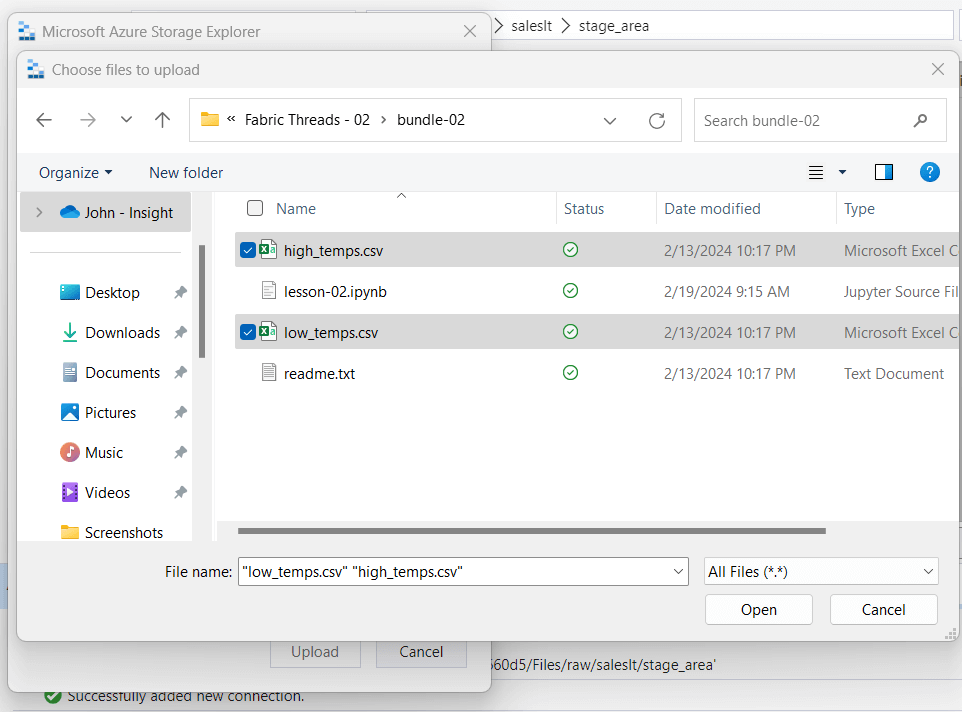

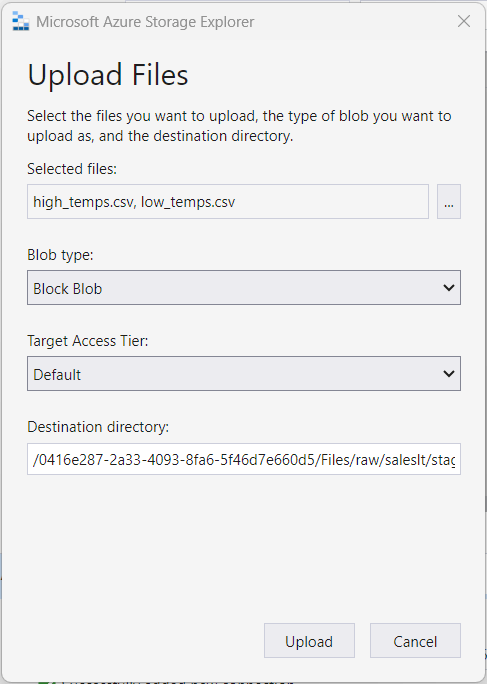

Please change to the new directory and then click the upload button on the ribbon. This action will allow you to browse the files you want to upload. I am using the two weather data files from my second Fabric article.

After the file selection dialog box is closed, we can see a high and low temperature file are ready to upload. Click okay to start the process.

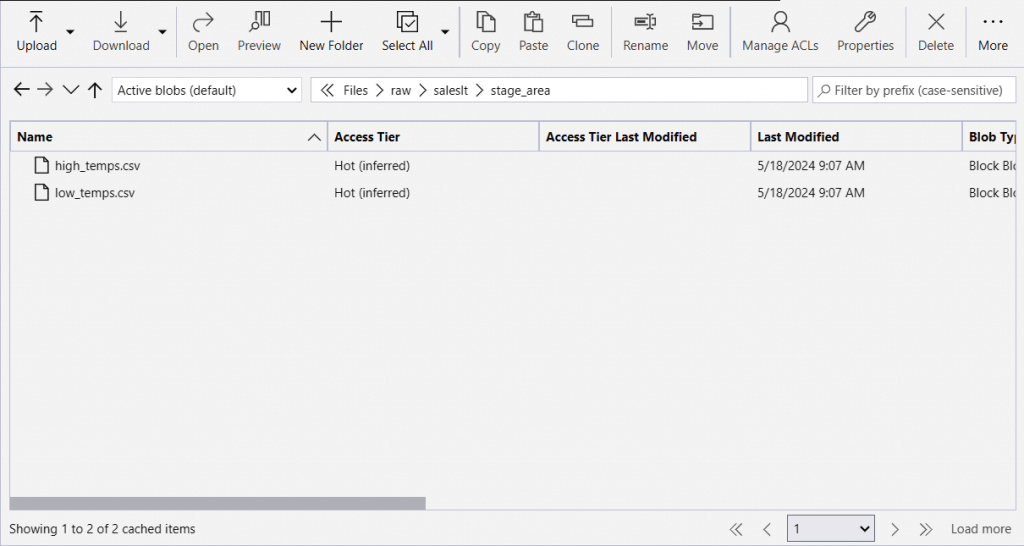

After the action is complete, we can see the two files in the correct folder. We always have the refresh button under the more section if the listing does not refresh automatically.

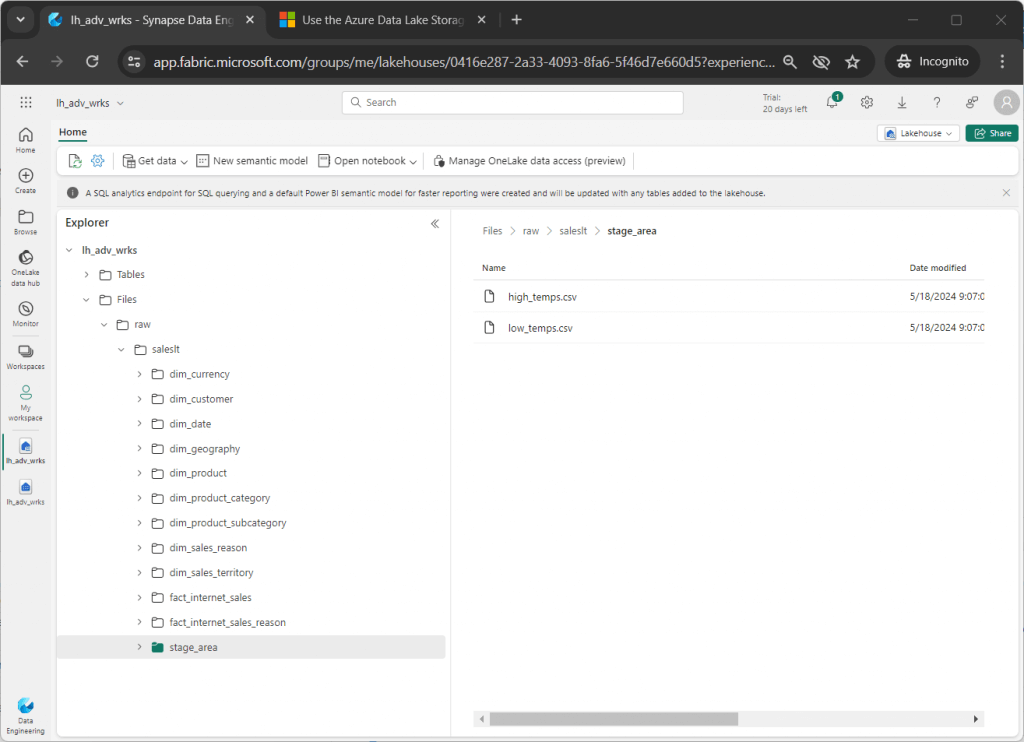

We can verify that the files have been uploaded by using the Lakehouse Explorer in Fabric to locate them. The image below shows the two files in the staging area.

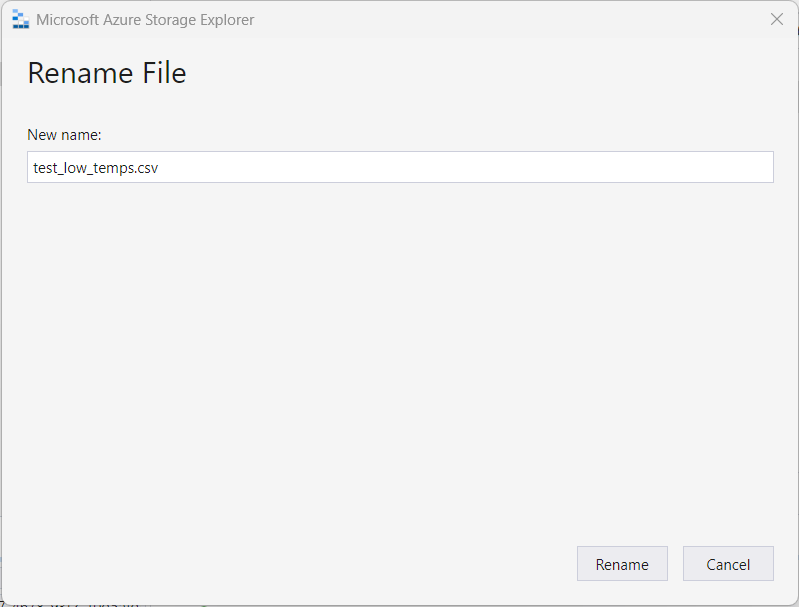

Computer users make mistakes. Therefore, it is not uncommon to rename files or folders. Our teammate wanted us to rename the low temperatures file with the prefix of "test_". Click the rename button on the ribbon after selecting a file in the Azure Storage Explorer. Enter the new name for the file and hit the rename button.

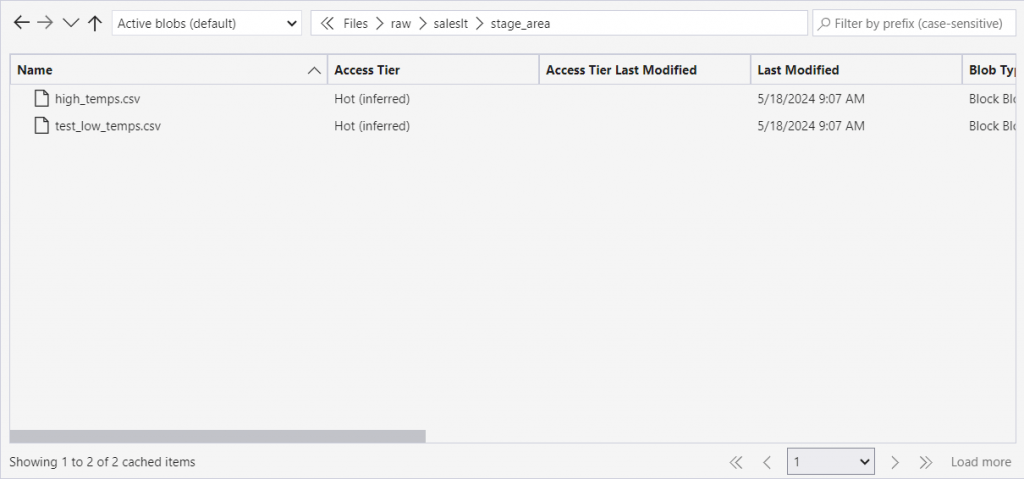

Again, we can view the changes in the explorer.

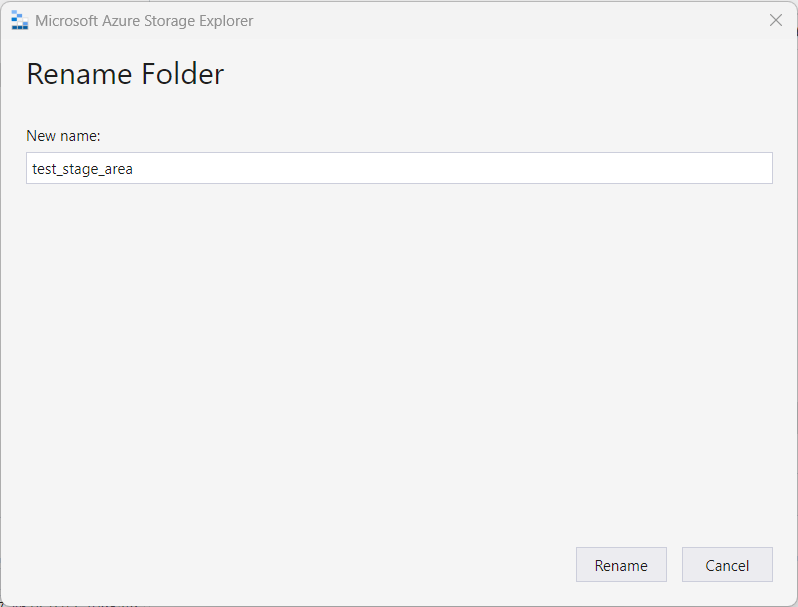

On a similar note, we want to change the name of the folder. Use the up arrow to change directories (folders). Select the folder that we want to rename. In our case, we are adding the prefix "test_" to the "stage_area" folder.

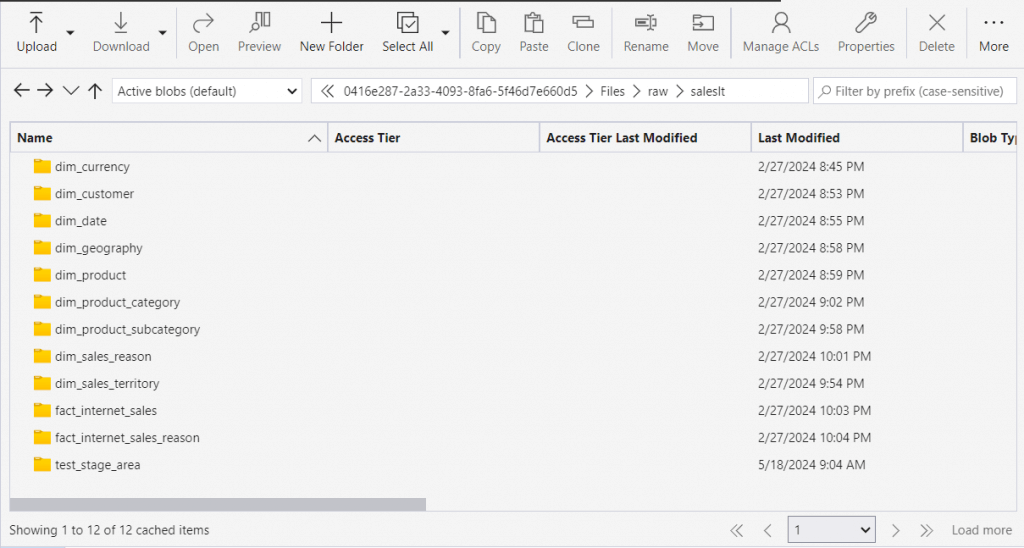

The image below shows the folder correctly renamed.

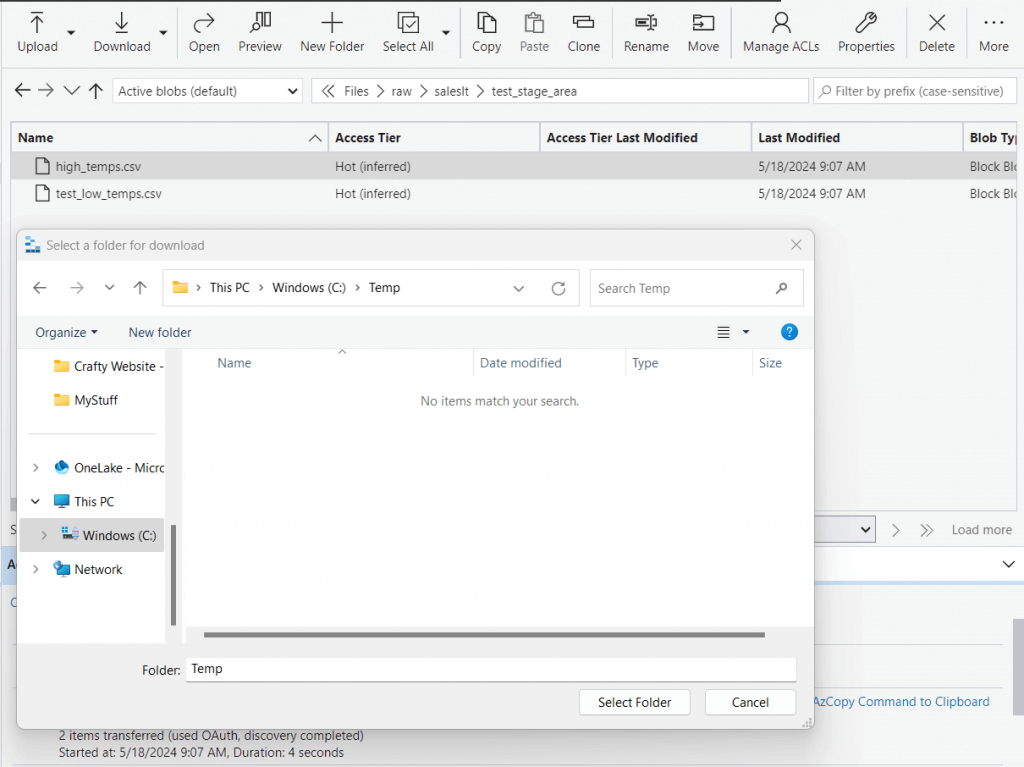

Storage explorer supports both uploading and downloading of files and/or folders. To download the high temperature file, highlight the file and click the download file option. Of course, we have to choose a location on our laptop to store the file. Since we are making a quick change to the contents of the file, I decided to place the file in the "C:\TEMP" directory.

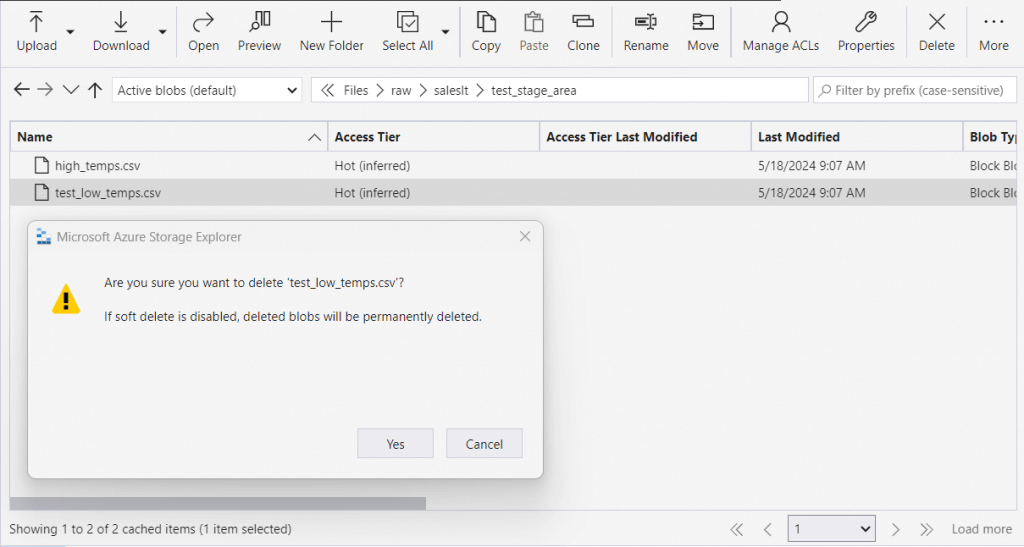

It is always wise to clean up the OneLake if you have temporary testing files. First, we can highlight the "test_low_temps.csv" file and click the delete button to remove it from Fabric storage.

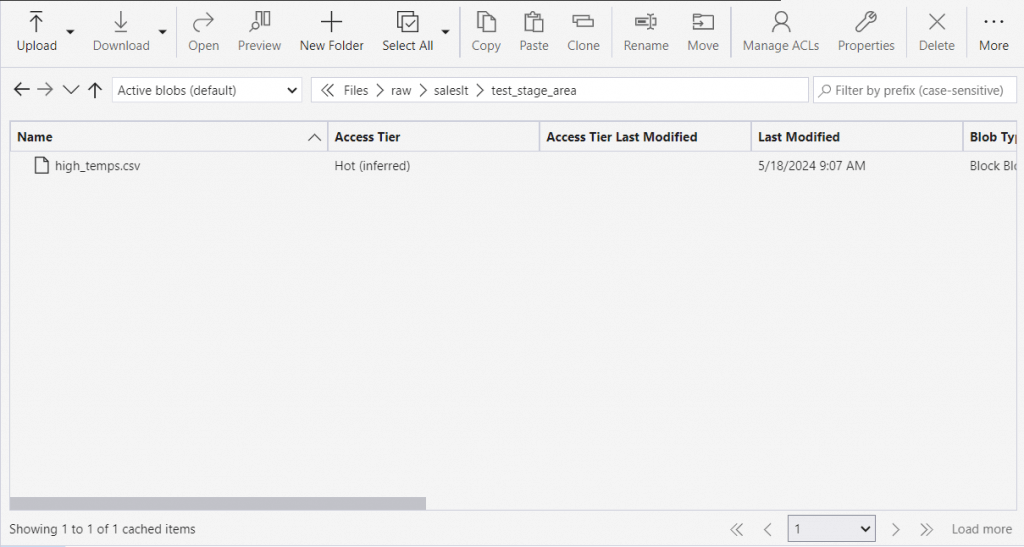

The updated directory listing only shows one file.

We can also delete folders (directories). Use the up arrow to change folders. Select the "test_stage_area" folder and hit the delete button.

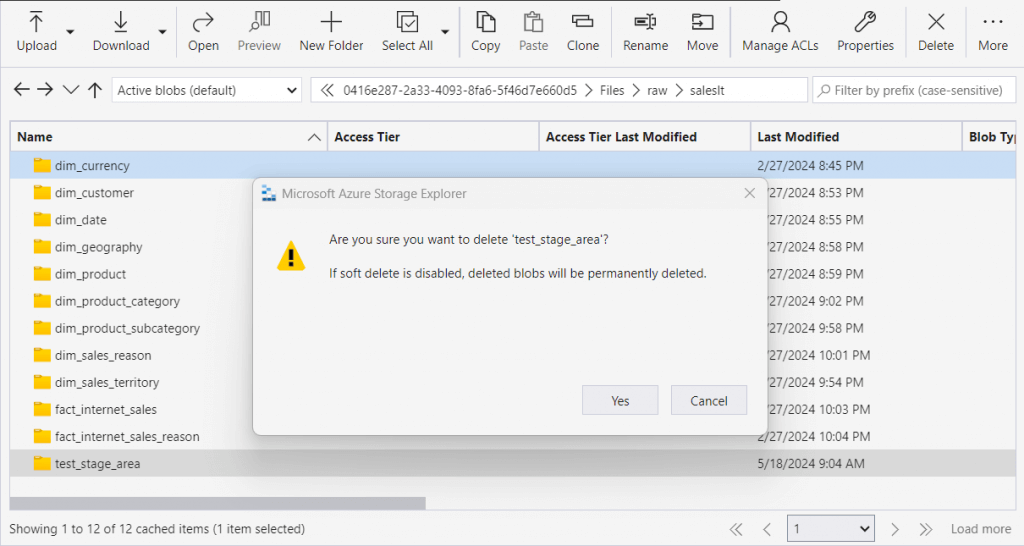

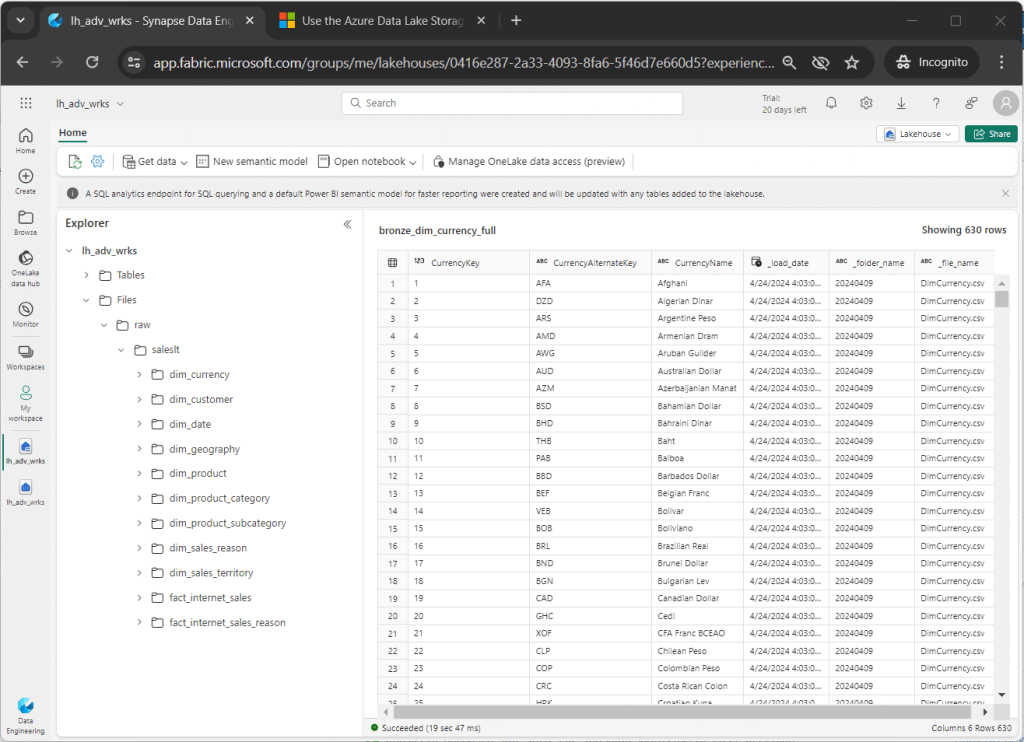

One last step is to check out our work in Lakehouse explorer. We can see that all traces of our testing have been removed from the raw quality zone in the OneLake.

To recap, we can use Azure Storage Explorer to manage both files and folders in our OneLake. This tool has more explicit control than the OneLake Explorer which synchronizes folders between your laptop and OneLake. However, all the actions are manual in nature. Is there any way to script out file and/or folder actions using OneLake storage?

Connect with AzCopy

AzCopy executable is automatically downloaded with Azure Storage Explorer. However, you might want to use this tool with Windows, Linux or the MacOS. Use the following link to download the executable and learn more.

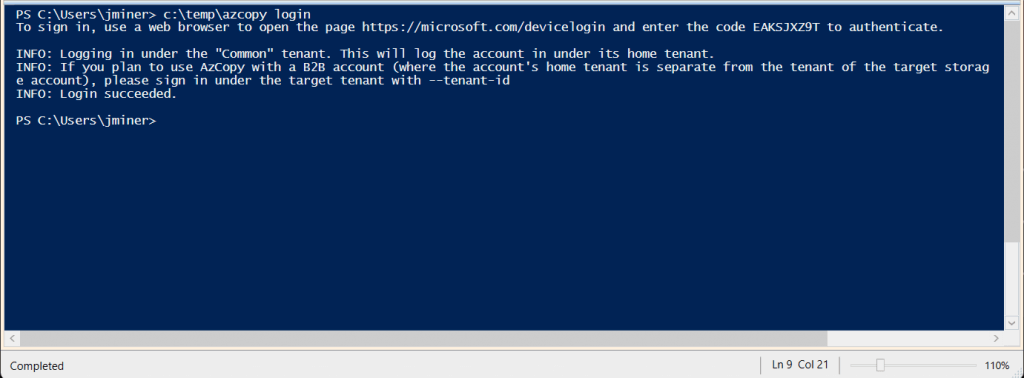

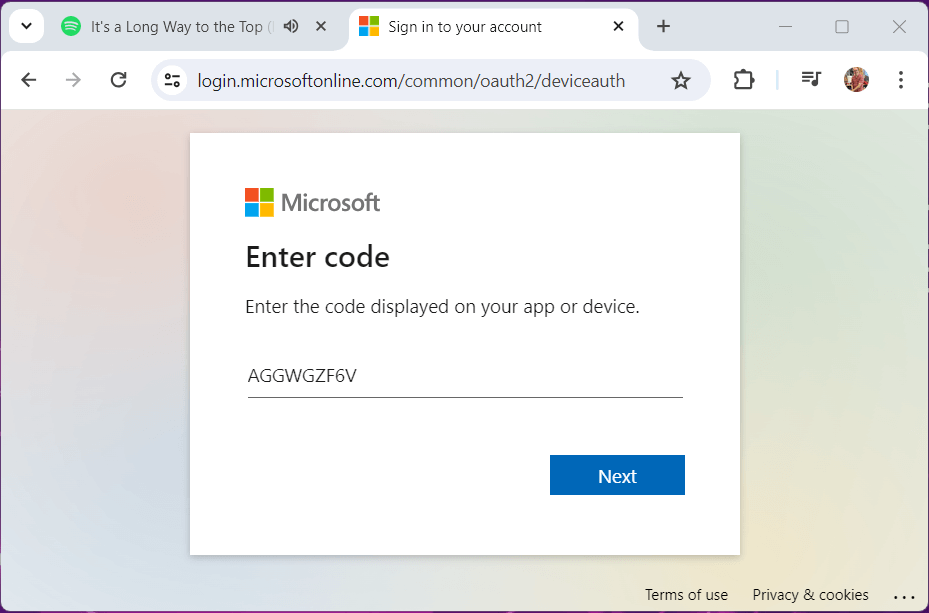

The first action we need to complete is a valid login sequence. The image below shows that I am running the DOS commands via the PowerShell Integrated Scripting Environment (ISE). Since Azure is secure, we need to use the device login website to authenticate.

User a web browser to bring up the authentication page. Please enter the code you were given at the command line.

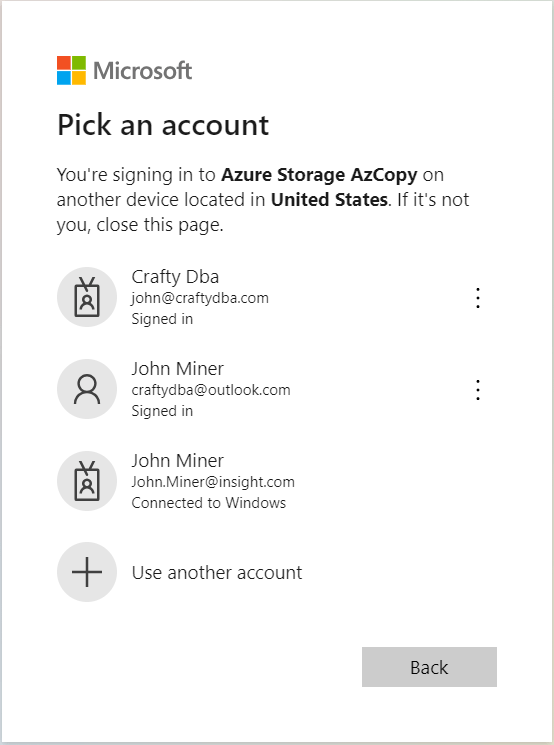

The website remembered a bunch of accounts that have been logged in the past as well as the current. I will be using the john@craftydba.com account to authenticate with Azure.

The website is confirming that it is john who want to use the AzCopy executable. Click the continue button.

The last message from the device login URL is a confirmation of our sign in.

Please note that the login process requires manual intervention. This is not ideal for creating a script that can upload hundreds of files automatically.

Manage OneLake with AzCopy

Users typically want to upload, list and/or download files. In this section, we will go over how to execute these actions with the AzCopy utility.

I placed the weather files in the c:\temp\data directory. How can I upload these files n to my Lakehouse storage?

The script below contains the following AzCopy actions: login, upload files, and logout. I added extra carriage returns for readability. These should be removed before executing the script. The most import part of the script is the trusted Microsoft suffixes option which allows use to work with the OneLake storage in Microsoft Fabric.

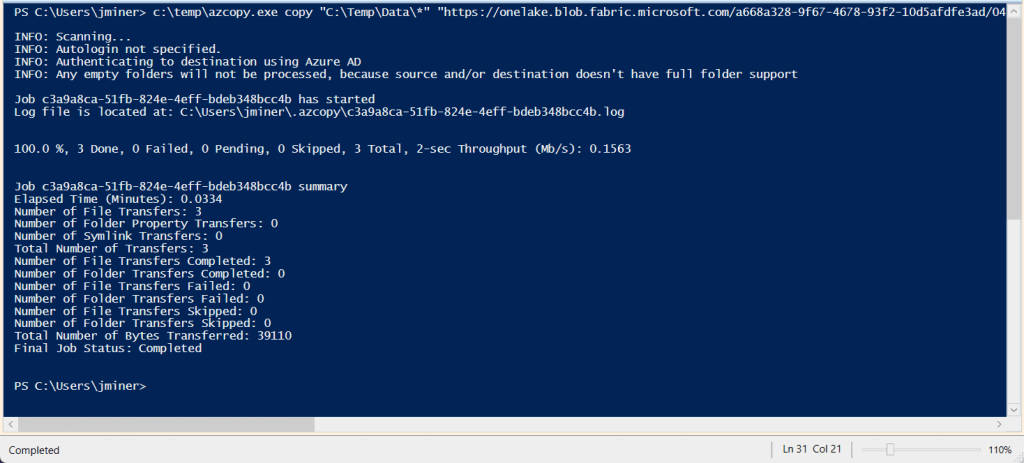

# # Upload script # c:\temp\azcopy login c:\Temp\azcopy.exe copy "C:\Temp\Data\*" "https://onelake.blob.fabric.microsoft.com/a668a328-9f67-4678-93f2-10d5afdfe3ad/0416e287-2a33-4093-8fa6-5f46d7e660d5/Files/raw/saleslt/stage_area/" --overwrite=prompt --from-to=LocalBlob --blob-type BlockBlob --recursive --trusted-microsoft-suffixes=onelake.blob.fabric.microsoft.com c:\temp\azcopy logout

The image below shows three files that were uploaded to the staging area folder.

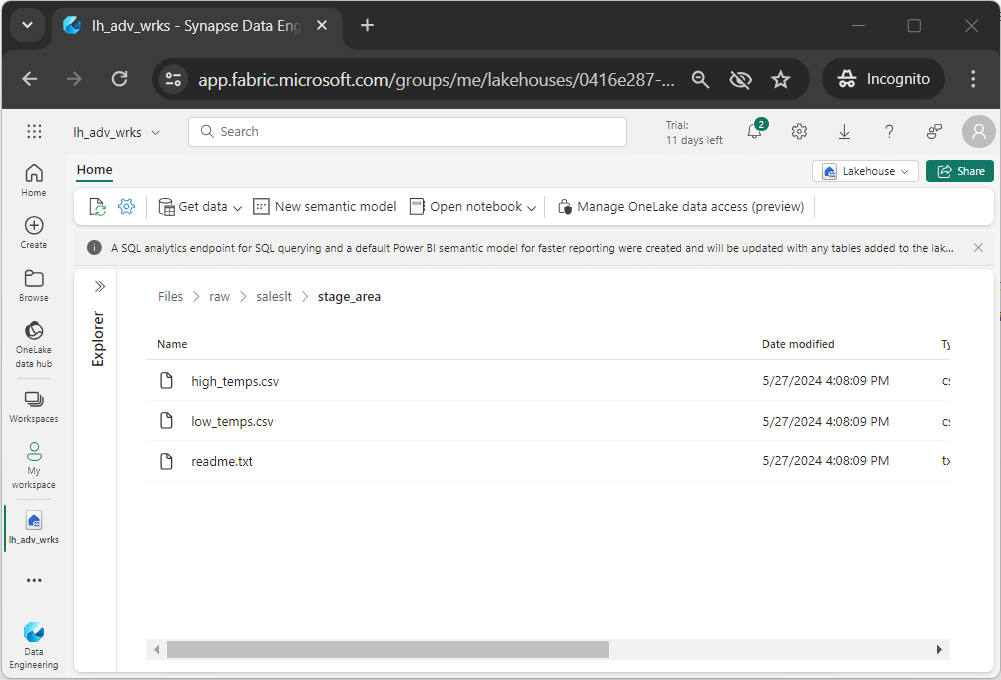

A quick look at the Lakehouse file directory in Fabric confirms the file transfer.

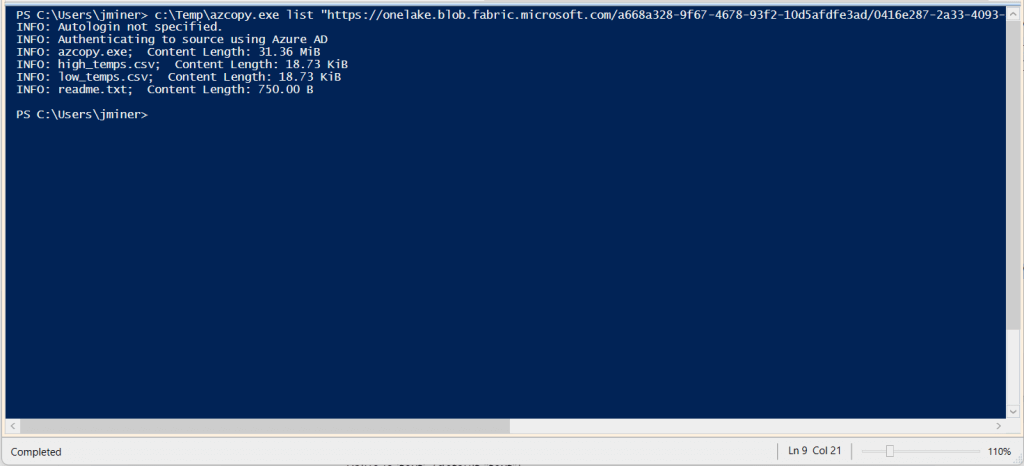

How can we list files using the AzCopy utility? The script below performs the following actions: connect to Azure, list files in the OneLake, and disconnect from Azure.

# # list script # c:\temp\azcopy login c:\Temp\azcopy.exe list "https://onelake.blob.fabric.microsoft.com/a668a328-9f67-4678-93f2-10d5afdfe3ad/0416e287-2a33-4093-8fa6-5f46d7e660d5/Files/raw/saleslt/stage_area/" --trusted-microsoft-suffixes=onelake.blob.fabric.microsoft.com c:\temp\azcopy logout

The image below shows the output list of files in the staging area folder. It is not that pretty.

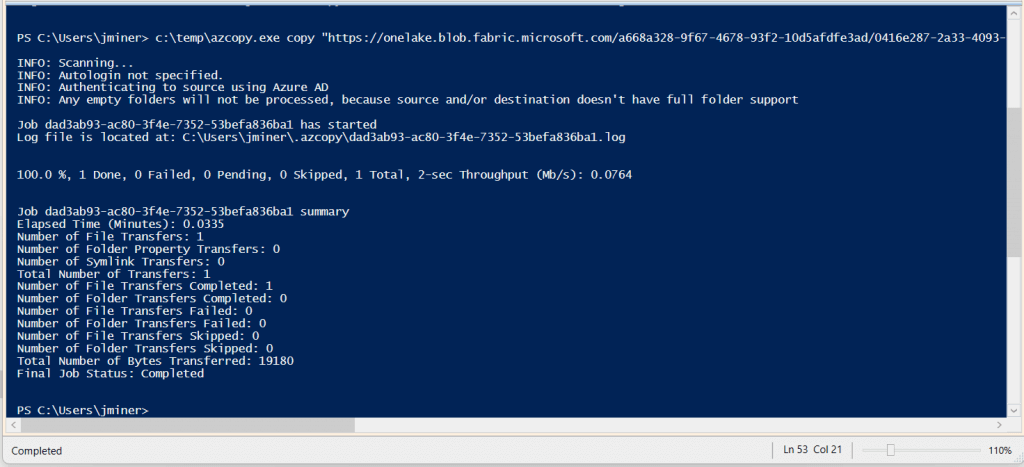

The last action to verify is downloading. How can we download files using the AzCopy utility? The script below performs the following actions: connect to Azure, list files in the OneLake, and disconnect from Azure.

# # Download script # c:\temp\azcopy login c:\temp\azcopy.exe copy "https://onelake.blob.fabric.microsoft.com/a668a328-9f67-4678-93f2-10d5afdfe3ad/0416e287-2a33-4093-8fa6-5f46d7e660d5/Files/raw/saleslt/stage_area/high_temps.csv" "C:\Temp\high_temps.csv" --overwrite=prompt --check-md5 FailIfDifferent --from-to=BlobLocal --recursive --trusted-microsoft-suffixes=onelake.blob.fabric.microsoft.com c:\temp\azcopy logout

The image below shows that the high temperate comma separated values file was downloaded to the c:\temp directory.

To recap, the AzCopy utility requires manual action to sign into Azure. In this section, we uploaded, listed and downloaded files. However, I could have easily generated dynamic code to upload or download multiple directories using PowerShell.

Summary

Microsoft Fabric has been built on top of services such as Azure Data Lake Storage. This means the tools you are familiar with can be used to manage files and/or folders in the OneLake.

Today, we started off showing how Azure Storage Explorer allows the developer more control on what is being done in the OneLake. There is a one-time setup process that needs to be followed to connect to the storage account. Once that is done, you can use the tool like normal. I currently use this tool daily at my current client.

While Azure Storage Explorer orchestrates the tasks, AzCopy performs the work underneath the covers. Please look at the activities window that shows the actual calls to the AzCopy utility. Because this execution does not use a graphical user interface, we need to use the device login website to connect to Azure. One advantage of the utility is the fact that it is supported by the Windows, Linux and Mac Operating Systems. Make sure you use the "trust Microsoft suffixes" as an command line option.

While these tools are great, they are still need manual intervention to sign into Azure. How can we write an automated script that is scheduled by Windows Scheduler?

Next time, I will talk about how to use a Service Principle to connect to Microsoft Fabric and how to use Azure Storage cmdlets to manage both files and folders in the OneLake.