tl;dr

In the following code, "DateValue" and "TimeValue" can be replaced by either variables or columns of the DATE and TIME datatypes, respectively. Also note that the CONVERT functionality can be replaced with CAST, if that's your preference.

Correct Fast Methods

These are the correct formulas that will result in the best performance without the rounding errors that are present in many other common methods found on the internet.

CONVERT(DATETIME2,CONVERT(VARBINARY(6),TimeValue)+CONVERT(BINARY(3),DateValue)) --Fastest DATEADD(dd,DATEDIFF(dd,'1900',DateValue),CONVERT(DATETIME2,TimeValue)) --2nd Fastest

Correct but Slow Methods

These formulas work correctly but they’re at least twice as slow as the correct, non-character-based methods above. I don’t recommend using these. They’re included here as a handy reference of what not to use.

CONVERT(DATETIME2,CONVERT(CHAR(9),DateValue,112)+CONVERT(CHAR(16),TimeValue)) CONVERT(DATETIME2,CONCAT(DateValue,' ',TimeValue)) --Slowest of them all

The WRONG Methods

Again, these formulas are included here as a handy reference for what not to use. The first two formulas below can produce a sometimes serious "hidden" rounding error without failing. The third formula fails for dates outside of the DATETIME datatype range.

--===== Explicit Conversion, causes "hidden" rounding errors. CAST(DateValue AS DATETIME) + CAST(TimeValue AS DATETIME) --===== Explicit Conversion, causes "hidden" rounding errors. CONVERT(DATETIME,DateValue) + CONVERT(DATETIME,TimeValue) --===== Implicit Conversion, fails for dates prior to 1753-01-01. DATEADD(dd,DATEDIFF(dd,0,DateValue),CONVERT(DATETIME2,TimeValue))

Don't use the FORMAT() function in any way. The performance is relatively ponderous even when compared to the slowest method cited above. Please see the following article for proof of that: https://www.sqlservercentral.com/articles/how-to-format-dates-in-sql-server-hint-dont-use-format

A Better Way

In the future or possibly as a fix for existing tables, consider not splitting a perfectly good column that contains both a date and a time to separate DATE and TIME columns. Instead, consider keeping the original column as it is and create just a persisted computed column that contains only the date as a DATE datatype. That will eliminate the need to combine DATE and TIME columns in the future.

Unfortunately, when dealing with OPS (“Other People’s Stuff” to be politically correct and SFW 😊), we still need to know how to do such a combination in an accurate and performant manner.

Prologue

Columns that contain both dates and times take some special considerations when trying to process data for date ranges of even just one whole day. For example, to find all of the rows for a single day, we can't use the simplicity of the following code because it will only find those rows that have the correct date AND a midnight time (also referred to as “whole dates”). That means that all other rows that contain the desired date but have times other than exactly midnight will be missing from the results..

--===== COUNTs only those rows for exactly 2023-06-03 00:00:00.0000000 -- and misses all other rows that occur at different times on the same date. SELECT COUNT(*) FROM dbo.SomeTable WHERE SomeDateTimeColumn = '2023-06-03' ;

To find all of the rows for the given date, using the "Closed/Open" (inclusive/exclusive) method, like the following, will always work correctly:

--===== COUNTs all rows for the given date.

-- This is VERY fast but the most complicated.

-- It’s known as the “Closed/Open” method similar to what’s found in GANT charts.

SELECT COUNT(*)

FROM dbo.SomeTable

WHERE SomeDateTimeColumn >= '2023-06-03' AND SomeDateColumn < '2023-06-04'

;There is a SARGable (click to view Wikipedia definition) optimization that Microsoft made years ago to help us handle such an issue. It will do an index seek but it's twice as slow and uses twice the number of logical reads as the "Closed/Open" method above:

--===== COUNTs all rows for the given date but… -- takes twice as long and twice the number of logical reads. SELECT COUNT(*) FROM dbo.SomeTable WHERE CONVERT(DATE,SomeDateTimeColumn) = '2023-06-03' ;

If a column with just dates is available, the code to find all rows for a given day is simple, fast, and efficient in the presence of an index without the bit of additional complexity found in the "Open/Closed" method.

--===== COUNTs all rows for the given date. -- This is VERY fast and very simple. SELECT COUNT(*) FROM dbo.SomeTable WHERE SomeDateColumn = '2023-06-03' ;

All of that is just to explain why some people split out separate DATE and TIME columns. Whether that's actually a good idea or not is well beyond the scope of this article.

Purpose of This Article

The key is that, just like accidentally shaving off a pimple or getting a wicked leg cramp, we'll run into tables where there are separate DATE and TIME columns and we'll need to deal with them properly when we have been given the task to combine them into a single temporal column.

If you search the internet for “Combine Date and Time Columns in SQL SERVER”, you’ll find many methods with supposed "proof" that the methods work. "Proof" is in quotes because many of the "proofs" fail to reveal a hidden danger to the data and that’s mostly what this article is about.

We’ll discover what that danger is and a few methods to do the recombination safely and accurately. We’ll also test those methods for performance.

Just to keep things simple, this article demonstrates how to combine the separate DATE and TIME data to a DATETIME2 datatype. Combining the columns to a DATETIME datatype will be done in a separate article because the DATETIME datatype has even more issues (like wicked cramps in both legs while shaving 😊).

Sidebar: A Better Idea

While it’s a given that having a DATE column will make temporal queries simpler, consider NOT splitting date/time columns into separate DATE and TIME columns. Instead, keep the original DATETIME2 (or whatever) column and make a separate DATE column (if you must 😊) . In most cases, no TIME column is required.

Here’s a simple example:

--===== Keep the combined column and make just a separate DATE column.

CREATE TABLE dbo.SomeTable

(

ColA INT NOT NULL

,ColB INT NOT NULL

,SomeDateTime DATETIME2 NOT NULL DEFAULT SYSUTCDATETIME()

,JustTheDate AS CONVERT(DATE,SomeDateTime) PERSISTED --<-----<<< LOOK HERE!

)

;

The “JustTheDate” column in the example table above is a computed column that’s always auto-magically up to date. It’s also deterministic so it can be both PERSISTED and indexed. It also does NOT use a Scalar UDF and so it will still allow parallelism to occur. Here's a link to a great collection of articles about the computational travesty of Scalar Functions killing parallelism just about everywhere (including DBCC and Index Rebuilds, etc, etc) in T-SQL.

Doing it this way means does mean that it will cost an extra 3 bytes per row but it also means not having to combine DATE and TIME columns into a single temporal column because that column always exists.

Read on because we still need to deal with data that’s not under our control.

A Common but Incorrect Method

Spoiler Alert: This section of the article covers the most common method on the internet for combining DATE and TIME datatype columns. NEVER USE IT! PERIOD! The discussion in this section is to understand why the word "NEVER" is included in that warning.

Ok… so you’ve run into a table where the DATE and TIME is stored in two separate columns and you’ve just been given a task that requires you to combine them back into a single column. How do we combine them back into a single DATETIME2 column?

One of the most common methods to combine DATE and TIME columns is known amongst the old-timers as the “Classic Method”. It used to work perfectly when all we had was the DATETIME datatype (ignoring the SMALLDATETIME leg-cramp here). Without going into the deep proof, it also kind-of worked prior to SQL Server 2016. They did something to the underlying code in SQL Server that messed that up.

But, let’s forget about that, for now, and get to what the “Classic Method” is and how it works… and doesn’t work.

The “Classic Method”

The “Classic Method” is simple. Just CONVERT (or CAST) the DATE and TIME columns to the DATETIME datatype and then add them together. The reason this works is because, although the DATETIME datatype is not ISO 8601 compliant, it has a lot of the capabilities expressed in ISO 8601, including the ability to simply add dates and times together to produce a single DATETIME value.

The reason why we need to do the CAST or CONVERT to the DATETIME datatype is because you can’t simply add DATE and TIME datatype columns together (yep... AI is wrong again!) like you can with DATETIME. SQL will give you the following error if you try.

Msg 8117, Level 16, State 1, Line 42 Operand data type date is invalid for add operator.

So, the “addition” method is relegated to the “Classic Method” of using the DATETIME datatype. Here’s an example of how that can be done (the formula in the last line of the code is one of the most common examples on the internet).

--===== Declare some obviously named variables DECLARE @OriginalDT DATETIME2 = '2023-06-03 23:48:21.027' ,@DateValue DATE --This will hold only a "date" ,@TimeValue TIME --This will hold only a "time" ; --===== Split out the "date" to a DATE datatype and the "time" to a TIME datatype for testing. SELECT @DateValue = @OriginalDT --Strips the time off because of the DATE datatype ,@TimeValue = @OriginalDT --Strips the date off because of the TIME datatype ; --===== Display the content of the DATE and TIME values and "Classic Method" of recombining them. -- Simply CAST or CONVERT the DATE and TIME to a DATETIME and add them together. -- You'll find that this method is very common on the internet, but very wrong. SELECT OriginalDT = @OriginalDT ,DateValue = @DateValue ,TimeValue = @TimeValue ,DateTimeValue = CAST(@DateValue AS DATETIME) + CAST(@TimeValue AS DATETIME) ;

That code produces the following output:

From left to right in those results, we see the original combined date and time value, the split-out DATE and TIME value, and the recombined value, which has the “same” date and time as the original. Yay!!! It works! We can all go home now, right?

Not so fast. There’s a reason why I have “same” in quotes. The result (in the Red box) isn’t quite the same. It’s lost a lot of resolution because it’s a DATETIME, which only has a 3.3333 ms resolution (1/300th of a second, try it on a calculator) and is rounded to whole milliseconds. That means that the right most digit will always be a “0”, “3”, or “7”.

References: (Note that Microsoft can change these at any time but were current as of 2023-06-11)

- https://learn.microsoft.com/en-us/sql/t-sql/functions/date-and-time-data-types-and-functions-transact-sql#DateandTimeDataTypes

- https://learn.microsoft.com/en-us/sql/t-sql/data-types/datetime-transact-sql?view=sql-server-ver16#description

Bah! Who cares? What’s a couple of milliseconds going to matter? Just like so many do on the internet, we just proved that it works. Right?

Read on to see just how flimsy and incorrect that “proof” actually is.

The “Classic Silent Failure”

Let’s see what the “proof” found in the previous section misses. We can easily destroy that “proof”. We’ll change just the value of “@OriginalDT”, run the code again, and see what happens.

Here’s the code.

--===== Declare some obviously named variables DECLARE @OriginalDT DATETIME2 = '2023-12-31 23:59:59.9983334' --<-----<<< Changed only this literal ,@DateValue DATE --This will hold only a "date" ,@TimeValue TIME --This will hold only a "time" ; --===== Split out the "date" to a DATE datatype and the "time" to a TIME datatype for testing. SELECT @DateValue = @OriginalDT --Strips the time off because of the DATE datatype ,@TimeValue = @OriginalDT --Strips the date off because of the TIME datatype ; --===== Display the content of the DATE and TIME values and "Classic Method" of recombining them. -- Simply CAST or CONVERT the DATE and TIME to a DATETIME and add them together. -- You'll find that this method is very common on the internet, but very wrong. SELECT OriginalDT = @OriginalDT ,DateValue = @DateValue ,TimeValue = @TimeValue ,DateTimeValue = CAST(@DateValue AS DATETIME) + CAST(@TimeValue AS DATETIME) ;

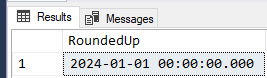

Here are the results:

Again, viewing the results from left to right, we see the original combined date and time. To the right of that, the split-out DATE and TIME values are correct. But, look at the results in the Red box. Not only did it round up the milliseconds, it also rounded up the seconds, minutes, hours, days, weeks (inherently), month, and even the year!

And that’s just the worst case. This “Silent Failure” can mess up your calculations at any temporal level. Is it common? Out of a million rows, how many times will this happen?

The answer is “not many”… but it only takes one to be wrong.

The bottom line is, DON’T USE ANY METHOD WHERE AN EXPLICIT OR IMPLICIT CONVERSION TO DATETIME IS POSSIBLE. Heh… especially if you’re calculating whether your Grandma’s mortgage payment is going to be late or not. This will cause her to take you out of her will. 😊

I’ll also tell you that converting to any less precise datatype (DATETIME2(3), for example) can also cause rounding-up issues. It won’t cause problems as frequently as the “Classic Method” but, again, it only has to be wrong once to be wrong.

Here’s the proof of that.

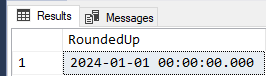

--===== Conversion to lower resolution ROUNDS UP instead of TRUNCATING. DECLARE @OriginalDT DATETIME2 = '2023-12-31 23:59:59.9995000'; SELECT RoundedUp = CONVERT(DATETIME2(3),@OriginalDT);

Results are, again, in the next year!

I believe that’s one of the reasons why Microsoft introduced the DATETRUNC() function in SQL Server 2022. Here’s the link for that: https://learn.microsoft.com/en-us/sql/t-sql/functions/datetrunc-transact-sql

Even that won't save us if there's any conversion to DATETIME, though.

--===== Any conversion to DATETIME will can still cause rounding. DECLARE @OriginalDT DATETIME2 = '2023-12-31 23:59:59.9995000'; SELECT RoundedUp = CONVERT(DATETIME,DATETRUNC(ms,@OriginalDT));

Results:

Like I said, we'll cover how to combine DATE and TIME datatype columns to DATETIME in a separate article.

Four Correct Methods

There are many methods to correctly combine a DATE and TIME column to a single DATETIME2 column. These are the 4 basic methods. All of the other methods I’ve seen are typically a longer variation of these 4.

Test for Accuracy

Here’s the code to test the 4 correct methods for accuracy. The fractional seconds have been maxed out to .9999999 to make sure there’s not the slightest bit of rounding. The date being used in the test is also a “negative date” (prior to 1900-01-01) just to prove that it works there, as well. The year 1700 is also well before the range of the DATETIME datatype, which starts at 1753-01-01, to further prove there are no implicit conversions to the DATETIME datatype, which would cause the code to fail with an “I’m sorry Dave but I can’t do that” type of error.

Here’s the code:

--===== Declare some obviously named variables DECLARE @OriginalDT DATETIME2 = '1700-12-31 23:59:59.9999999' ,@DateValue DATE --This will hold only a "date" ,@TimeValue TIME --This will hold only a "time" ; --===== Split out the "date" to a DATE datatype value and the "time" to a TIME datatype. SELECT @DateValue = @OriginalDT --Strips the time off because of the DATE datatype ,@TimeValue = @OriginalDT --Strips the date off because of the TIME datatype ; --===== Display the content of the DATE and TIME values and 4 methods of recombining them. SELECT OriginalDT = @OriginalDT ,DateValue = @DateValue ,TimeValue = @TimeValue ,v.Method ,v.DateTimeValue FROM (VALUES (1,DATEADD(dd,DATEDIFF(dd,'1900',@DateValue),CONVERT(DATETIME2,@TimeValue))) ,(2,CONVERT(DATETIME2,CONVERT(CHAR(9),@DateValue,112)+CONVERT(CHAR(16),@TimeValue))) ,(3,CONVERT(DATETIME2,CONCAT(@DateValue,' ',@TimeValue))) ,(4,CONVERT(DATETIME2,CONVERT(VARBINARY(6),@TimeValue)+CONVERT(BINARY(3),@DateValue))) )v(Method,DateTimeValue) ;

And here are the results:

Notice that all 4 methods faithfully reproduced (in the Red box) the original combined date and time column with no loss of resolution or rounding even at the 100 ns level.

Here’s how the individual methods work…

Method 1

DATEADD(dd,DATEDIFF(dd,'1900',@DateValue),CONVERT(DATETIME2,@TimeValue))

First, the TIME value is converted to DATETIME 2, which inherently has a default date of 1900-01-01.

Then, the DATEDIFF calculates the number of days @DateValue is from the 1st of January, 1900. It’s important to use ‘1900’ here instead of the classic “0” Date Serial Number because, if you use “0”, it will do an implicit conversion to DATETIME. While that won’t hurt the accuracy here because we’re just counting the number of days, it will prevent the conversion of dates prior to the range of DATETIME, which starts at 1753-01-01.

Finally, the DATEADD adds those number of days to the converted TIME value and we end up with a DATETIME2 combined date and time.

Method 2

CONVERT(DATETIME2,CONVERT(CHAR(9),@DateValue,112)+CONVERT(CHAR(16),@TimeValue))

This method converts both the DATE and TIME values to strings, concatenates them using the old "+" method of concatenation, and then converts that combined string to a DATETIME 2.

The conversion of the DATE to CHAR(9) contains the 8 digit ISO date and implicitly provides the required space between the DATE and the TIME as the 9th character.

Method 3

CONVERT(DATETIME2,CONCAT(@DateValue,' ',@TimeValue))

This is the modern method of concatenation. It inherently converts the DATE and TIME to strings and concatenates them and the required space to together, and then converts that string to a DATETIME2.

A lot of people really like this method because it’s simple and easy to remember. As we’ll soon see, though, “shorter” doesn’t always mean “faster”.

Method 4

CONVERT(DATETIME2,CONVERT(VARBINARY(6),@TimeValue)+CONVERT(BINARY(3),@DateValue))

We won’t go into how DATETIME2 is stored if you convert it to binary but this properly creates and concatenates the binary versions of TIME and DATE to produce a DATETIME2 binary, and then converts that to a DATETIME2.

As we previously discovered, all 4 methods (formulas) work correctly. I’ll tell you they’re all pretty fast but, which one is the fastest? Let’s find out.

The Test Data

With the speed of today’s machines, we need a lot of test data to do performance tests with. Find, review, and run the following named script, which is contained in the attached ZIP file.

0100 - Create the DateTime Test Table - All supporting objects - 10 million row test table.sql

As the name says, it creates everything we need to do our tests with. The database is created using only defaults according to your existing configuration. Change the code to do otherwise if you need it to. Depending on how your Model database is configured, the MDF file will be less than or equal to 300 MB and the log file will be much smaller due to the “Minimal Logging” built into the code. YMMV.

As a bit of a sidebar, substituting the new GENERATE_SERIES() system function (available starting in SQL Server 2022) for the dbo.fnTally function in the code isn’t a good idea because it denies us of “Minimal Logging”. You can bet that will be in a forthcoming article. 😉

The owner of the database is not changed by the code. It will be whatever your default is.

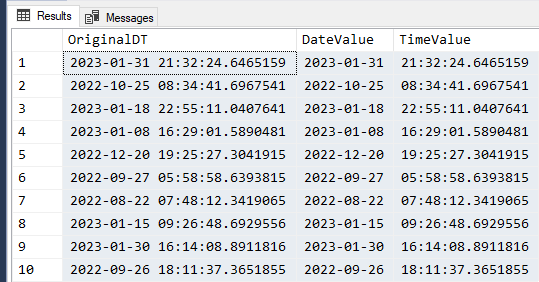

Here are the first 10 rows of the table that it creates. It’s not unlike what we’ve already been testing with, the exception being that it’s a 10 million row table (HEAP) instead of a handful of variables. The dates and times are randomly created, so expect them to be different when you review and then run the code.

And, not to worry… On my laptop, it takes less than 13 seconds to build everything AND populate the 10 Million row table. (Try THAT with a recursive CTE, While loop, or GO 10000000. OH! Major leg-cramp! 😊 ). Again, YMMV.

Running the Performance Tests

Please see the following script in the ZIP file attached to this article for code that will run the performance tests for all 4 correctly working methods.

0200 - Performance Tests for the 4 Correct Methods.sql

Once you’ve reviewed the code, there’s nothing special to do (after you’ve successfully executed script 0100 previously cited above) and it’ll do all the tests and return timing results. For my testing, I disabled all the script timing results and used SQL Profiler instead (you don't need to do that).

Here are the SQL Profiler results:

Method 1, which uses DATEADD of days to a converted TIME came in second for performance. It uses “normal/supported” code to do so. If you're a bit squeamish about using "undocumented" methods, like that found in Method 4, then this is the method to use.

Method 4 is the hands-down winner both for CPU usage and Duration. This method used a “Black Arts” method (unsupported, in this case) of converting the DATE and TIME to a binary DATETIME2. That spooks some people and so Method 1 is what a lot of people will fall back to. That’s OK because it’s still more than twice as fast as Methods 2 and 3.

Methods 2 and 3 are more than twice as slow even when compared to the 2nd place Method 1. That’s because of the character-based conversions and reconversion along with string concatenation. I strongly recommend NOT using Method 2 or 3.

Epilogue

The “tl;dr” section at the beginning of this article would make for a pretty good summary of what we covered and so I’ll leave it at that instead of repeating it here. Thank you for the read and comments in the article discussion are welcome. You can get there by clicking on the link titled “Join the discussion and add your comment” just a little below the end of this article. The attached ZIP file is located at the “Resources” link just above the discussion link.

As a bit of a sidebar, the 2 script files that are in the ZIP file attached to this article have some interesting bits of code in them. For all you newbies and folks that just want to practice SQL a bit more, I (as have many of the denizens of this site) taught myself a whole lot about SQL Server and, especially, T-SQL by learning very early on that being able to quickly design code to generate mountains of test data was going to be the key to success.

For the rest of the folks that already know that, be advised that things like the fnTally function I used are still necessary even though Microsoft finally (15 years after Erland Sommarskog first wrote a “Connect” suggestion about it) came out with the new GENERATE_SERIES() function. It turns out that it has the nasty habit of negating “Minimal Logging”, possibly because it’s likely to be a “CLR” or other call to a .NET dll behind the scenes. I found out the hard way while making a 100 million row, 52GB test table that created an 88GB log file instead of the 600MB log file that I was expecting.

And remember… When working on someone else’s code or database design, always keep a Styptic Pencil (for the high spots you shave off) and an orange (source of Potassium for the leg cramps) handy. 😊

This was a lot of fun. And, a special thanks to Phil Parkin for the guidance in the reviews he provides.

Thanks for listening, folks.

-- Jeff Moden

© Copyright - Jeff Moden, 11 June 2023, All Rights Reserved