Introduction

The single most expensive native operation SQL Server can perform is reading and writing to the disk. While SSD drives have been hailed as the future and fault tolerant ram drives are prohibitively expensive, most of us still use the humble mechanical disk drive to store and retrieve data. Understanding SQL's I/O patterns can help you design your disk infrastructure and knowing your application's patterns can help you get the most out of your disks.

In this article I will be show how to measure the quantity and size of I/O requests in each database as well as being able to work out where your I/O's are hitting and then matching those up with physical tables. To do this we can use the free "Process Monitor" tool and then load the output into SQL Server.

Capturing

Capturing the data is very straight forward. First you will need a copy of procmon (Process Monitor), which you can download from http://technet.microsoft.com/en-us/sysinternals/bb896645.aspx.

What we will do is start procmon and create a filter for just the SQL Data files, because there are processes which constantly read and write to files we want to ignore the general "chatter". When you run procmon the first thing you are asked to do is to set up a filter (aside from the one time EULA!). If you choose "Path" then "Ends With" and enter ".mdf" you can filter on all mdf data files. If you wanted to, you could restrict procmon to work with a specific database or set of databases using a more selective filter.

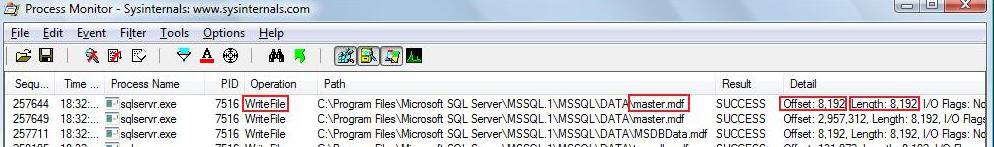

If you then apply the filter and press OK the application will start gathering data and when any file access occurs, you will see the operation displayed on the screen. If the file is being used then you will already start to see entries. It should look something like this image, I have highlighted the areas we are particularly interested in:

When you have captured enough data you can stop the trace. If you save it as a csv file we can then import this into SQL. Ideally you should capture enough data to show a representation of the operations that normally occur on the system. If this is a test system then you will need to carry out the same actions as your users would and try to avoid anything that is out of the ordinary (i.e. running a defrag or backup on the database) as this would skew the results unfairly.

Importing

Once you have the actual data you then need to analyse it. This is fairly straightforward and involves taking the Operation, FileName, Offset and Length from the data we have already captured. To display the name of the table, we need to import the data onto the same instance that had the data captured. If you just want to be able to see the type and size of I/O requests the you can import the data onto any instance (or even some other tool like excel). To get the name of the table then we must use a combination of system metadata and the DBCC PAGE command:

DBCC Page takes a database id, file id, page number and format type. When you run DBCC Page, it will return information on a page and we can then use this information to match data in tables such as sysobjects and sysindexes to find the table / index details.

Operation

This shows use whether it was a Read or a Write, this is used to show what types of requests are being sent to the files.

FileName

This shows which database file is being used, we can then use this as the file id with DBCC PAGE.

Offset

This shows us the location of the request in the file. Database files are made up of 8kb pages, each one is numbered sequentially so the page at location 8192 (bytes) is page 1 - so to get the page id, you just divide the Offset (which is in bytes) by 8192.

Length

This shows the length of the request, although we don't need this to get the name of the table it is useful to show the length of I/O requests and can be used to help decide which cluster size you should use. For example if 99% of your I/O's are 8K then consider testing your applications with 8K cluster size on the disks.

The easiest way to import the csv file is to use the Import/Export wizard in SQL Management Studio. If you use the script below to create the table [Iops] and then use the wizard to load the data into it:

CREATE TABLE [dbo].[Iops]( [Sequence] [nvarchar](4000) NULL, [Time of Day] [nvarchar](4000) NULL, [Process Name] [nvarchar](4000) NULL, [PID] [nvarchar](4000) NULL, [Operation] [nvarchar](4000) NULL, [Path] [nvarchar](4000) NULL, [Result] [nvarchar](4000) NULL, [Detail] [nvarchar](4000) NULL, [Offset] [int] NULL, [Length] [int] NULL, [name] [varchar](max) NULL --If you are not going to run the second script you don't need this. ) ON [PRIMARY]

When you import the data it should look like:

| Sequence | Time Of Day | Process Name | Pid | Operation | Path | Result | Detail | Offset | Length | Name |

|---|---|---|---|---|---|---|---|---|---|---|

| 4176 | 12:35:24.0954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 983,040, Length: 65,536, I/O Flags: Non-cached | NULL | NULL | NULL |

| 4177 | 12:35:24.1954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 327,680, Length: 65,536, I/O Flags: Non-cached | NULL | NULL | NULL |

| 4178 | 12:35:24.2954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 589,824, Length: 65,536, I/O Flags: Non-cached | NULL | NULL | NULL |

Now we have the raw data imported we need to use the Detail column to get the Offset and the Length, we do this simply with by stripping out the text we don't want and updating the Offset and Length columns of our table:

UPDATE iops

SET Offset = CAST( REPLACE(SUBSTRING(Detail, CHARINDEX(':', Detail) +1, CHARINDEX('Length', Detail) - CHARINDEX(':', Detail)-1), ',', '') AS INT),

Length = CAST( REPLACE(SUBSTRING(Detail, CHARINDEX('Length:', Detail) +8, CHARINDEX('I/O', Detail) - CHARINDEX('Length:', Detail) -8), ',', '') AS INT)

| Sequence | Time Of Day | Process Name | Pid | Operation | Path | Result | Detail | Offset | Length | Name |

|---|---|---|---|---|---|---|---|---|---|---|

| 4176 | 12:35:24.0954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 983,040, Length: 65,536, I/O Flags: Non-cached | 983040 | 65536 | NULL |

| 4177 | 12:35:24.1954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 327,680, Length: 65,536, I/O Flags: Non-cached | 327680 | 65536 | NULL |

| 4178 | 12:35:24.2954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 589,824, Length: 65,536, I/O Flags: Non-cached | 589824 | 65536 | NULL |

This now shows us the location of the I/O requests as well as the size. You can use this information to be able to find what type of requests you get i.e.

SELECT Length/1024 AS IOSize, COUNT(*) AS IOCount FROM iops GROUP BY Length/1024 ORDER BY Length/1024

| IOSize | IOCount |

|---|---|

| 4 | 1 |

| 8 | 2 |

If you are on the same instance as the one where the data was captured you can take it one step further to get the name of the table / index that was the target for the request:

DECLARE @sqlcode TABLE( sequence int, sql VARCHAR(MAX), dbname VARCHAR(MAX))

INSERT INTO @sqlcode

SELECT sequence, 'DBCC PAGE(' + CAST(dbid AS VARCHAR(MAX)) + ',' + CAST(fileid AS VARCHAR(MAX)) + ',' + CAST((offset/8192) AS VARCHAR(MAX)) + ', 0) WITH TABLERESULTS', DB_NAME(dbid) FROM iops iop JOIN sys.sysaltfiles saf ON iop.Path = saf.filename

DECLARE @iCur INT, @iMax INT

SELECT @iCur = MIN(sequence), @iMax = MAX(sequence) FROM @sqlcode

CREATE TABLE #pageOutput( ParentBuffer VARCHAR(MAX), OBJECT VARCHAR(MAX), FileId VARCHAR(MAX), Value VARCHAR(MAX))

WHILE @iCur <= @iMax

BEGIN

TRUNCATE TABLE #pageOutput

DECLARE @sql NVARCHAR(MAX), @sequence INT, @dbname VARCHAR(MAX), @ObjectId INT, @IndId INT

SELECT @sql = sql, @sequence = sequence, @dbname = dbname FROM @sqlcode WHERE sequence = @iCur

INSERT INTO #pageOutput

EXEC sp_executesql @sql

SELECT @ObjectId = Value FROM #pageOutput WHERE FileId = 'Metadata: ObjectId'

SELECT @IndId = Value FROM #pageOutput WHERE fileId = 'Metadata: IndexId'

DECLARE @sqlupdate nVARCHAR(MAX)

select @sqlupdate = 'UPDATE iops SET NAME = ''' + @dbname + '.'' + ' + '(SELECT so.NAME + ''.'' + COALESCE(si.NAME, ''' + CAST(@IndId AS VARCHAR(MAX)) + ' (id)'') FROM ' + @dbname + '.sys.sysindexes si JOIN ' + @dbname + '.sys.sysobjects so ON si.id = so.id WHERE so.id = ' + CAST(@ObjectId AS varchar(MAX)) + ' AND si.indid = ' + cast(@IndId as varchar(max)) + ') where sequence =' + CAST(@sequence AS VARCHAR(MAX))

EXEC sp_executesql @sqlupdate

SET @iCur = @iCur + 1

END

SELECT * FROM iops

DROP TABLE #pageOutput

What this script does is to take the path of each request, match it to the sysaltfiles table which has a dbid and fileid. Along with the PageID it gets by dividing the offset by 8192 it calls DBCC PAGE and gets this header information:

PAGE: (1:120) BUFFER:

BUF @0x02E8BBA0 bpage = 0x140F0000 bhash = 0x00000000 bpageno = (1:120)

bdbid = 85 breferences = 0 bUse1 = 21992

bstat = 0xc00009 blog = 0x159a2159 bnext = 0x00000000 PAGE HEADER:

Page @0x140F0000

m_pageId = (1:120) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x4 m_level = 0 m_flagBits = 0x8200

m_objId (AllocUnitId.idObj) = 85 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594043498496

Metadata: PartitionId = 72057594038452224 Metadata: IndexId = 0

Metadata: ObjectId = 2089058478 m_prevPage = (0:0) m_nextPage = (0:0)

pminlen = 8 m_slotCnt = 426 m_freeCnt = 2

m_freeData = 7338 m_reservedCnt = 0 m_lsn = (32:341:343)

m_xactReserved = 0 m_xdesId = (0:0) m_ghostRecCnt = 0

m_tornBits = 191948272

When you have the ObjectId and IndexID you then just need to match these to the Id in sys.sysobjects and the IndId in sys.sysindexes to get the object and index name:

SELECT * FROM sys.sysobjects WHERE id = 2089058478

Sysobjects:

| name | id | xtype | uid | info | status | base_schema | replinfo | parent_obj | ftcatid | schema_ver | stats_schema_ver | type | userstat | sysstat | indexdel | refdate | version | deltrig | instrig | updtrig | seltrig | category | cache |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Users | 2089058478 | U | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | U | 1 | 3 | 0 | 2009-03-31 09:00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Sysindexes:

| id | status | first | indid | root | minlen | keycnt | groupid | dpages | reserved | used | rowcmt | rowmdcnt | reserved3 | reserved4 | xmaxlen | maxirow | OrigFillFactor | StatVersion | reserved2 | FirstIAM | impid | lockflags | pgmodctr | keys | name | statblob | maxlen | rows |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2089058478 | 0 | 0x780000000100 | 0 | 0x0000000000 | 8 | 0 | 1 | 20 | 25 | 21 | 8192 | 8192 | 0 | 0 | 2048 | 0 | 0 | 0 | 0 | 0xAE0000000100 | 0 | 0 | 0 | NULL | IndexName | NULL | 8000 | 8192 |

This then shows the name of the table and the index:

| Sequence | Time Of Day | Process Name | Pid | Operation | Path | Result | Detail | Offset | Length | Name |

|---|---|---|---|---|---|---|---|---|---|---|

| 4176 | 12:35:24.0954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 983,040, Length: 65,536, I/O Flags: Non-cached | 983040 | 65536 | dbName.TableName.IndexName |

| 4177 | 12:35:24.1954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 327,680, Length: 65,536, I/O Flags: Non-cached | 327680 | 65536 | dbName.TableName.0 (id) |

| 4178 | 12:35:24.2954613 | sqlservr.exe | 6824 | ReadFile | C:\Database\Data\Datafile.mdf | SUCCESS | Offset: 589,824, Length: 65,536, I/O Flags: Non-cached | 589824 | 65536 | dbName.TableName.indexName |

Note, sometimes an index will not have a name, in that case the script shows the id and "(id)" in brackets.

Summary

Using Process Monitor to record the I/O patterns and then using Sql to shred the data into sizes, offsets and table / index names is pretty straightforward but gives you access to a level of information that isn't currently readily available. Perfmon will tell you how many bytes in total are read and written but at a disk level and hardware vendors may have tools available but procmon will work in all situations and has the added benefit of being free!

What Now?

When designing and implementing your Sql Server solution there are tools available like sqlio.exe which let you test different types and sizes of I/O patterns against your disks so you can test your infrastructure and find a way to get the most performance from your disks but they require that you specify the I/O size and while you can find out how SQL should behave, it is important to understand how it behaves in your environment to enable you to make the best decisions. Using this approach you can know for sure what size, type (read or write) and whether the I/O's are random or sequential.

Further Information

When dealing with SQL and I/O I would recommend these resources: