Backup Monitoring and Reporting

Database recovery is a core task of database administration and is defined as executing the tasks necessary to backup, save, retrieve and restore databases from hardware, software and user error. Your database recovery procedures should be documented and regularly tested by restoring either a full or random sample of backups. A key part of database backups is monitoring whether backups are in fact occurring. A common mistake in backup monitoring is only checking for backup success or failure when there are in fact three possible outcomes; success, failure and nothing. What is nothing? Nothing is what happens when a database or entire SQL instance for that matter is not configured for backups or the SQL Agent job or Windows services which are often a part of 3rd tools are in a hung state. In order to achieve effective backup monitoring you need to look at the non-event of a lack of backup rather than failed backup messages. Regardless of how a database is backed up, whether through SQL Server native backups or any one of a number of commercial backup software a row is written to the msdb.backupset table. Knowing this information, you can create daily monitoring and backup reports.

Monitoring Daily Backups

Monitoring daily backups is simple, the following query will find all databases that have not been backed up within the past day:

SELECT MAX(ISNULL(DATEDIFF(dd,ISNULL(b.backup_start_date, '01/01/1900'),GETDATE()),0)) AS 'NumDays'

, d.name as 'DBName'

FROM master..sysdatabases d

LEFT JOIN msdb..backupset b ON d.name = b.database_name

AND b.backup_start_date = (SELECT MAX(backup_start_date)

FROM msdb..backupset b2

WHERE b.database_name = b2.database_name AND b2.type IN ('D','I'))

WHERE d.name != 'tempdb'

--AND d.name NOT IN (SELECT db_name FROM dbautility.dbo.db_exclude)

AND DATABASEPROPERTYEX(d.name, 'Status') = 'ONLINE'

GROUP BY d.name, b.type, b.backup_size

HAVING MAX(ISNULL(DATEDIFF(dd,ISNULL(b.backup_start_date, '01/01/1900'),GETDATE()),0)) > 1

You can automate daily backup monitoring using the above query through SQL Agent or Microsoft Operations Manager/System Center Operations Manager. An important consideration for any monitoring solution is the ability to add exclusions. To implementation an exclusions create a db_exclude table; populate the table with any databases you wish to exclude and uncomment "AND d.name NOT IN (SELECT db_name FROM dbautility.dbo.db_exclude)" in line above.

Reporting Backup Information

In addition to monitoring daily backups you can also report backup events across servers and databases over different periods of times. If like many large organizations, your backup responsibilities are performed by a group other than your DBAs; creating a backup report is especially important in order to ensure any operating level agreements (OLAs) you have are being met. To create a backup report you will need to define a collection process to centralize backup information and create a few SQL Server Reporting Services (SSRS) reports to present the data.

Getting Started

Overview

- Create tables using backdw.tables.sql

- Create fnSeqDates function

- Populate server_dim, exclude_db_dim and dt_dim tables

- Create procedures using backup.routines.sql

The download section of this article includes a setup of scripts for creating database objects needed for generating a consolidated report. To get started, create a database in this example, the article uses BackupDW. Then run the code in BackupDW.tables.sql to create the tables. The script will create the following six tables which will be used for collecting and reporting backup information:

You'll need to populate lookup/control tables: server_dim, dt_dim and db_exclude_dim. The remaining tables will be loaded through the collection process. Add servers to the server_dim table for each server you wish to collect backup information. For example the following code adds the Z001\Sql1 server:

insert server_dim (server_name) values ('Z001\Sql1')

Next add the server and databases you wish to exclude from analysis to the db_exclude_dim table. To populate the dt_dim we'll make use of the fnSeqDates function created by Peter Larsson as described in his article How many more Mondays until I retire?. Note, the dt_dim table contains two columns window_start_dtm and window_end_dtm which are used to determine the backup window for a given date. The query uses 6 PM, DATEADD(hh,18,SeqDate) as the window_start_dtm and 6 AM, DATEADD(hh,30,SeqDate) as the window_end_dtm. And for Fridays the query defines the backup window from Friday 6 PM through Monday 6 AM, DATEADD(hh,78,SeqDate). Your backup window may vary, adjust the CASE statement as needed. Create the fnSeqDates function in the backupdw database and run the following code to populate the dt_dim table adjusting the begin and end dates as well as the start and end date time calculations as desired.

insert dt_dim

select CAST(SeqDate AS int),

SeqDate,

DATEPART(yy,SeqDate),

DATEPART(qq,SeqDate),

DATEPART(mm,SeqDate),

DATEPART(dd,SeqDate),

DATEPART(wk,SeqDate),

DATEPART(dw, SeqDate),

DATEADD(hh,18,SeqDate),

CASE

WHEN DATEPART(dw,SeqDate) BETWEEN 2 AND 5 THEN DATEADD(hh,30,SeqDate)

WHEN DATEPART(dw,SeqDate) = 6 THEN DATEADD(hh,78,SeqDate)

END, 0, 0

from fnSeqDates('2008-01-01','2010-01-01')

Two additional interesting pieces of information include; whether the particular week is Microsoft patch week i.e. the week falls on the second Tuesday of the month and whether a particular day is a holiday. Run the following code to update the dt_dim table with Microsoft patch week information:

update dt_dim

set isMSPatchWk = 1

WHERE wk in

(SELECT MIN(wk)

FROM dt_dim

WHERE dw = 3 AND dd > 7

GROUP BY mm)

Update holiday information with the following code:

update dt_dim

set isHoliday = 1

WHERE dt in ('2009-01-01', '2009-01-19', '2009-02-16', '2009-04-10', '2009-05-25', '2009-07-03',

'2009-09-07', '2009-11-26', '2009-12-25')

Next create the following procedures in by running the backupdw.routines.sql script in the accompanying download:

- dbo.insert_backup_fact_sp

- dbo.merge_db_dim_sp

- dbo.update_backup_fact_sp

- dbo.update_server_dim_sp

- dbo.usp_backup_analysis_detail

- dbo.usp_backup_analysis_rpt

The data will be collected by a Powershell script, backupAnalysis.ps1 and you'll need to change the following variables in the Powershell script to reflect the server and database name where the data will be stored: The following excerpt from, backupAnalysis.ps1 sets the server name and database name variables:

$destServer = 'Z002\SQL2'

$destdb = 'backupdw'

Collecting the Data

Overview

- Run Powershell script backupAnalysis.ps1

- Execute update_server_dim_sp

- Execute merge_db_dim_sp

- Execute insert_backup_fact_sp

- Execute update_backup_fact_sp

- Schedule SQL Agent to automate steps one through six.

To collect the data run the Powershell script from a Powershell console:

./backupAnalysis.ps1

The script executes the following query on the target server to load the data into the centralized server backupset_dim table:

SELECT @@servername, backup_set_id, database_name, backup_start_date, backup_finish_date, user_name,type

FROM backupset

WHERE type IN ('D','I')

AND backup_start_date > '2008-01-01'

AND backup_set_id > $backup_set_id

The value of backup_set_id is passed to the script from the server_dim.max_backup_set_id column. This way only new backup rows are collected on each run. The Powershell, script could just as easily be replaced with an SSIS package or linked server calls, but for simple ETL process Powershell works very well.

Next run update_server_dim_sp to update the max_backup_set_id column. Then run merge_db_dim_sp to insert new databases and update existing database information in the db_dim table. Insert the backup_fact rows by running insert_backup_fact_sp. Finally run update_backup_fact_sp which will update the success flag and username columns. Note: Determining whether a date's backup is successful requires considering the backup window, if a backup occurs outside the backup window, the backup is not counted as a success. You can adjust the backup window columns in the dt_dim table as needed.

In order to automate and schedule the data collection process a SQL job creation script, BackupDW.Jobs.sql is included with the article download. You'll need to modify the location of the Powershell script in the first job step for your environment.

Queries

After collecting you can easily create some very interesting queries using different date parts and grouping levels. The following are several examples. Note: A BackupDay is defined as a day on which you should have a backup and successfulBackups are days in which there were in fact a successful backup. The PercentSuccessful is calculated by dividing the successful backups by the total backup days.

Overall backup success for the time period

SELECT count(*) as TotalBackupDays, sum(CAST(isSuccess AS int)) as SuccessfulBackups,

round(sum(CAST(isSuccess AS float))/count(*) * 100,2) As PercentSuccessful

FROM backup_fact f

JOIN dt_dim d ON f.dt_id = d.dt_id

WHERE dt BETWEEN '2009-01-02' AND '2009-03-31'

Overall backup success by month

SELECT yy, mm,

count(*) as TotalBackupDays , sum(CAST(isSuccess AS int)) as SuccessfulBackups,

round(sum(CAST(isSuccess AS float))/count(*) * 100,2) As PercentSuccessful

FROM backup_fact f

JOIN dt_dim d

ON f.dt_id = d.dt_id

WHERE dt BETWEEN '2009-01-02' AND '2009-03-31'

Overall backup success by week

SELECT yy, mm, wk,

count(*) as TotalBackupDays, sum(CAST(isSuccess AS int)) as SuccessfulBackups,

round(sum(CAST(isSuccess AS float))/count(*) * 100,2) As PercentSuccessful

FROM backup_fact f

JOIN dt_dim d

ON f.dt_id = d.dt_id

WHERE dt BETWEEN '2009-01-02' AND '2009-03-31'

GROUP BY yy, mm, wk

Overall backup success by weekday

SELECT dw,

count(*) as TotalBackupDays, sum(CAST(isSuccess AS int)) as SuccessfulBackups,

round(sum(CAST(isSuccess AS float))/count(*) * 100,2) As PercentSuccessful

FROM backup_fact f

JOIN dt_dim d

ON f.dt_id = d.dt_id

WHERE dt BETWEEN '2009-01-02' AND '2009-03-31'

GROUP BY dw

Any of the above queries can be modified to report by server or by database. The following is an example by server for a specified time period

SELECT server_name,

count(*) as TotalBackupDays, sum(CAST(isSuccess AS int)) as SuccessfulBackups,

round(sum(CAST(isSuccess AS float))/count(*) * 100,2) As PercentSuccessful

FROM backup_fact f

JOIN dt_dim d

ON f.dt_id = d.dt_id

WHERE dt BETWEEN '2009-01-02' AND '2009-03-31'

GROUP BY server_name

To simplify the various reporting options, a stored procedure, usp_backup_analysis_rpt is included with the download. Using the procedure you can run any of the above queries and specify different grouping levels. The procedure accepts the following parameters:

| Parameter | Values |

|---|---|

| @reportType | Overall,Server or Database |

| @reportInterval | ALL, Month, Week, Weekday |

| @start_dt | date |

| @end_dt | date |

Reporting

The following reports are included in the download section of the article:

| BackupDWDetail | Executes procedure dbo.usp_backup_analysis_detail providing detailed level report of backup information |

|---|---|

| BackupDWDetail | Executes procedure dbo.usp_backup_analysis_rpt providing summary information at the all, weekly, monthly and weekday level. Report is summarized by server, database or overall level. |

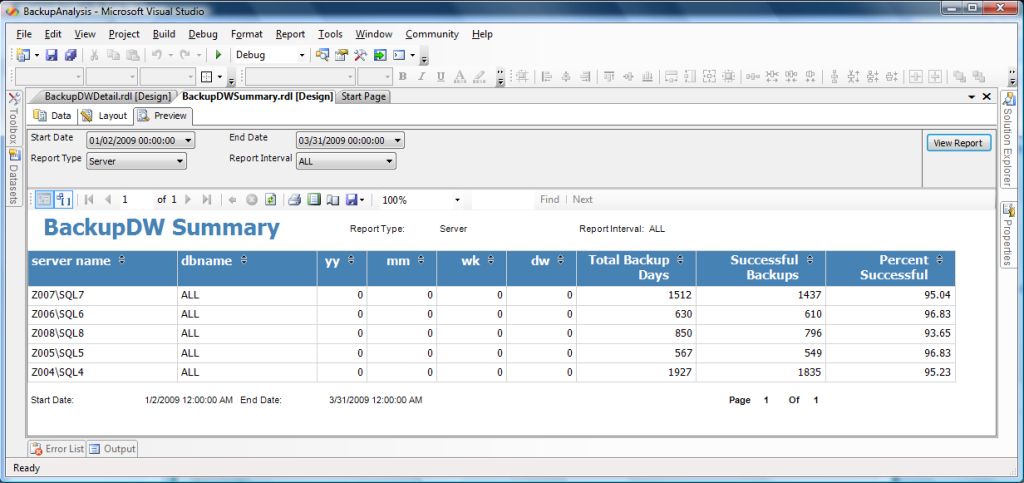

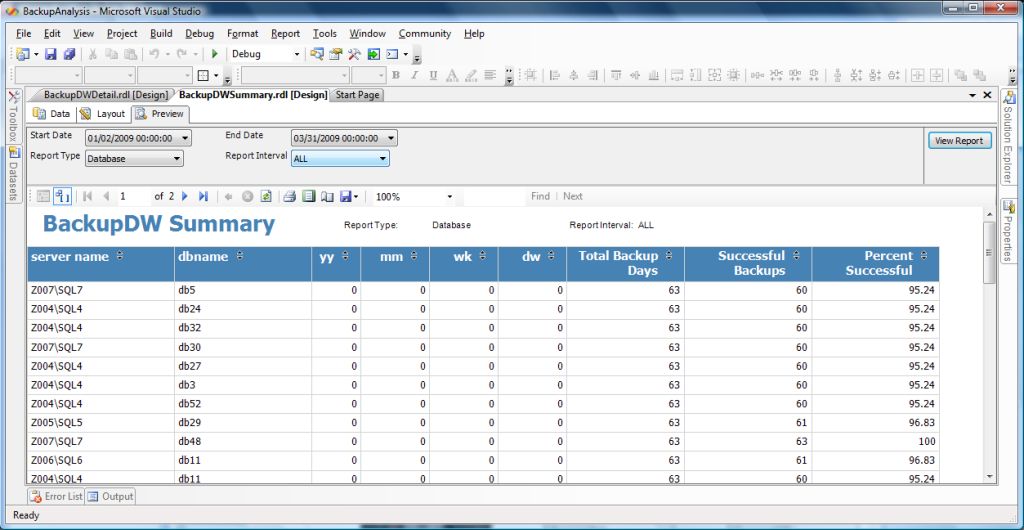

To configure the reports open the Solution file, BackupAnalysis.sln in Visual Studio 2005 (VS) or SQL Server Business Intelliegence Studio (BIDS) and change the shared data source to your server and database name. Having completed the setup, data collection and report configuration you can generate the reports. You can run the reports locally through VS or BIDS or deploy the reports to SQL Server Reporting Services Server. The following are several sample reports, selecting various reporting options.

Overall backup report:

By Server backup report

By database backup report

Summary

The need to verify your databases are being backed up is a common task for database administrators. You can use the process described in this article to accomplish daily backup monitoring and reporting. You may be surprised to learn your backup success rates in your environment.