“SQL Spackle” is a collection of short articles written to provide answers to specific, common problems or to explain particular methods, techniques, or tools. These short articles are NOT meant to be complete solutions. Rather, they are meant to “fill in the cracks”.

--Phil McCracken

Introduction

Not so long ago, I ran into a problem that I thought worthy of writing about. A client had a small (4TB) database that was being log-shipped to a DR site. The pipe between the primary and secondary servers was fast enough for log shipping, but not for transferring a full backup. It was faster to perform a full backup, copy it to an external USB drive, overnight the drive to the DR site, and then copy the files off it and restore the backup: literally a three-day process. Obviously, we needed to avoid doing anything that would break log shipping and force it to be rebuilt.

In this database, one table had an identity column based on the INT data type, and it was running out of values. A staged maintenance was planned to put a parallel table in place with its identity column based on the BIGINT data type. This approach required adding a new, temporary data file to the database. As the first step, the new data file was added to the primary database. Those involved were flabbergasted when the restore jobs for the secondary database promptly started failing.

The Test Environment

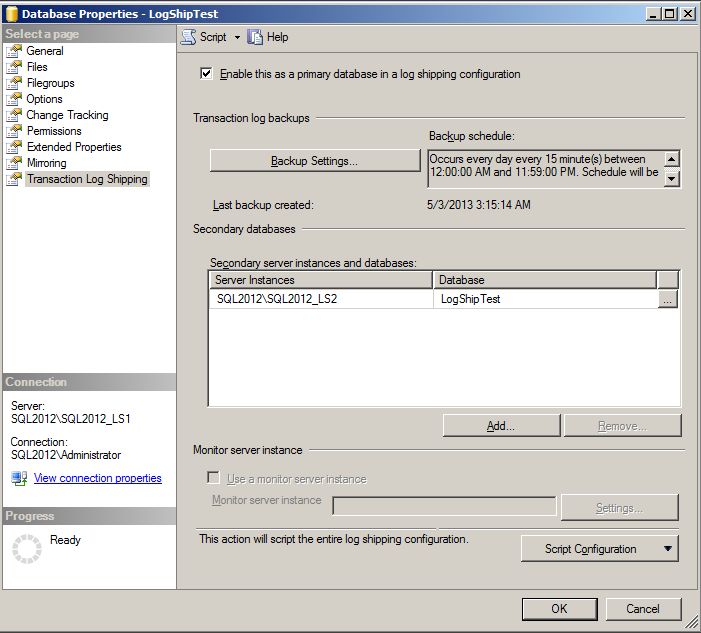

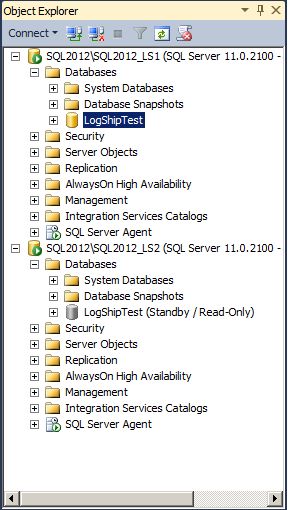

As we start to walk through the problem and solution, let’s start by setting the scene. First, we set up log shipping between two databases. For this, I’ll use a VM with two named instances. I’ll create a database on one instance, and establish log shipping of this database to the second instance.

USE master;

GO

IF DB_ID('LogShipTest') IS NULL EXECUTE ('CREATE DATABASE LogShipTest');

GO

Notes about the Log Shipping setup: Transaction logs are being backed up, copied over to the secondary instance (SQL2012\SQL2012_LS2), and restored in standby mode. These jobs run every 15 minutes. Just a plain, ordinary log shipping setup.

At this point, this is just an empty database – a far cry from 4TB, but it will suffice for this demonstration. Notice that the secondary database is in standby mode so that it can be queried during this test.
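The standby state can also be confirmed with a query instead of the SSMS object tree. This is a minimal sketch, assuming you are connected to the secondary instance (SQL2012\SQL2012_LS2) and that the database name matches the one created above:

```sql
-- Run on the secondary instance.
SELECT name,
       state_desc,
       is_read_only,   -- expected to be 1 for a standby database
       is_in_standby   -- expected to be 1 when restored WITH STANDBY
FROM sys.databases
WHERE name = N'LogShipTest';
```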

Testing the Log Shipping configuration

Let’s add a table and some data to the primary database:

USE LogShipTest;

GO

IF OBJECT_ID('dbo.Test', 'U') IS NULL

CREATE TABLE dbo.Test (Col1 INT IDENTITY);

GO

INSERT INTO dbo.Test DEFAULT VALUES;

GO 5

After running the log shipping backup/copy/restore operations, we can connect to the secondary database and query the data to see that it is in fact copied over. Perfect.
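The check on the secondary can be as simple as the query below. It is only a sketch, and it assumes you are connected to the secondary instance and that the restore job has already applied the log backup containing the inserts. With a freshly created table and the default identity seed and increment, five rows should come back.

```sql
-- Run on the secondary instance once the restore job has completed.
SELECT Col1
FROM LogShipTest.dbo.Test
ORDER BY Col1;
```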

Breaking the Log Shipping

Now it’s time to recreate the problem by adding a new file group to the primary database and putting a file into the file group. Let's go through the extra steps of adding a table to this file group, and finally adding some data to the table.

USE master;

GO

ALTER DATABASE [LogShipTest] ADD FILEGROUP [NewFG];

GO

ALTER DATABASE [LogShipTest]

ADD FILE (

NAME = N'LogShipData2',

FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL11.SQL2012_LS1\MSSQL\DATA\LogShipData2.ndf' ,

SIZE = 3072KB , FILEGROWTH = 1024KB )

TO FILEGROUP [NewFG];

GO

USE LogShipTest;

GO

CREATE TABLE dbo.Test2 (Col1 INT IDENTITY) ON NewFG;

GO

INSERT INTO dbo.Test2 DEFAULT VALUES;

GO 5

We run the log shipping operations again. However, this time we have a problem. The restore job comes back with the following error messages:

*** Error: Could not apply log backup file 'C:\LogShipCopy\LogShipTest_20130503075309.trn' to secondary database 'LogShipTest'.(Microsoft.SqlServer.Management.LogShipping) ***

*** Error: The operating system returned the error '5(Access is denied.)' while attempting 'RestoreContainer::ValidateTargetForCreation' on 'C:\Program Files\Microsoft SQL Server\MSSQL11.SQL2012_LS1\MSSQL\DATA\LogShipData2.ndf'. File 'LogShipData2' cannot be restored to 'C:\Program Files\Microsoft SQL Server\MSSQL11.SQL2012_LS1\MSSQL\DATA\LogShipData2.ndf'. Use WITH MOVE to identify a valid location for the file. Problems were identified while planning for the RESTORE statement. Previous messages provide details. RESTORE LOG is terminating abnormally.(.Net SqlClient Data Provider) ***
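To see where the restores stalled, the log shipping monitor table in msdb can be queried on the secondary instance. This is a sketch, assuming the default monitoring tables are in use. The last restored file should be the log backup taken just before the one named in the error.

```sql
-- Run on the secondary instance.
SELECT secondary_database,
       last_copied_file,
       last_copied_date,
       last_restored_file,   -- last log backup that was applied successfully
       last_restored_date
FROM msdb.dbo.log_shipping_monitor_secondary
WHERE secondary_database = N'LogShipTest';
```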

Problem Analysis

What went wrong here? While the restore was running, it encountered a new file group and a new file to add to the database. The log backup records the new file with the full physical path it has on the primary, and the restore tries to create the file at that same path on the secondary. In this demonstration, that file is already in use by the primary database (since the two instances are on the same server). With our 4TB database, the secondary server did not have identical drive volumes, so the specified path did not exist. To make matters worse, the drive letter was in use for another purpose and did not have sufficient space for the new file. Both cases come down to the same problem: the new file cannot be created on the secondary instance in the specified location.
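The mismatch can be seen by comparing the file list stored in the failing log backup with the files the secondary database currently has. The sketch below assumes it is run on the secondary instance and reuses the backup file name from the error message above. The `LogicalName` and `PhysicalName` columns of the first result set should show the new file with its path on the primary.

```sql
-- Run on the secondary instance.
-- List the database files recorded in the log backup that failed to restore.
RESTORE FILELISTONLY
FROM DISK = N'C:\LogShipCopy\LogShipTest_20130503075309.trn';

-- Compare with the files the secondary database has right now.
SELECT name AS logical_name,
       physical_name
FROM sys.master_files
WHERE database_id = DB_ID(N'LogShipTest');
```

The logical name of any file that appears only in the first result set is the one a later `MOVE` clause has to reference.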

Problem Fix Attempt #1

One sure-fire way to fix this would be to perform a full backup of the database, copy it to the USB drive, overnight it to the DR site, and restore the database. This would be a three-day process, and having the secondary out of sync for that long was not acceptable. Another solution was necessary.

Problem Fix Attempt #2

Thinking this through, we realized that the full restore would need the WITH MOVE option to put the various files in their proper locations. That raised a question: could we restore just this one transaction log backup file, specifying WITH MOVE to put the new data file into an appropriate location? In SSMS, you bring up the “Restore Transaction Log” wizard and select the transaction log file to restore, only to find that this wizard, unlike the “Restore Database” wizard, has no option for moving files to a new location. Again, we needed to find another solution.

Problem Solution

Since the “Restore Transaction Log” wizard doesn’t have an option to move files, let’s try it using T-SQL and the RESTORE statement:

USE master;

GO

RESTORE LOG LogShipTest

FROM DISK = 'C:\LogShipCopy\LogShipTest_20130503075309.trn'

WITH

STANDBY='C:\LogShipCopy\LogShipTest_RollbackUndo.bak',

MOVE N'LogShipData2' TO N'C:\Program Files\Microsoft SQL Server\MSSQL11.SQL2012_LS2\MSSQL\DATA\LogShipData2.ndf';

For this scenario, I just moved the new file to a different location on the drive. For the 4TB database, we moved it to a newly mounted volume on the server. Either way, the result is the same: success. After applying all of the subsequent transaction log backup files, we can connect to the secondary database and query the new table and verify that the data is in place. The secondary database is back in sync with the primary, log shipping is running without errors, and the DR site is ready to run if needed.
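The verification can be sketched as follows, assuming you are connected to the secondary instance and that the log backup containing the inserts into dbo.Test2 has been applied:

```sql
-- Run on the secondary instance.
-- Confirm the new file exists at the location given in the MOVE clause.
SELECT name AS logical_name,
       physical_name,
       state_desc
FROM sys.master_files
WHERE database_id = DB_ID(N'LogShipTest');

-- Confirm the rows in the new table arrived.
SELECT COUNT(*) AS RowsInTest2
FROM LogShipTest.dbo.Test2;
```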

Wrap Up

I’ll end this with two cautionary notes. First, this is a prime example of why the configuration on the DR site needs to mirror the configuration of the live server. If the volumes had matched, this issue would not have happened. Second, know the limitations of the GUI presented by SSMS. If this had happened to you, would the panic of breaking log shipping have kept you from this solution? Would you have initiated the three-day process to backup/copy/restore the database?