If you have ever worked on a data integration project "in the old days" of pre-SSIS, you know how much custom work has to be done to deal with data that comes from systems with bad source data and a severe lack of constraints. With SSIS, you can easily direct rows that cause errors to a different destination for saving.

After working on some basic packages, it was becoming cumbersome to set up custom data destinations for each transformation where I wanted to redirect bad data. Having all these different tables or files would make it difficult to aggregate into a report that was meaningful or make it easy to search for patterns. With a central logging table for these various pieces of bad data, anyone could setup reports that are automatically emailed to the source data owners as a kind of "rub it in your face" report of their data quality. My initial intention was for my own use, but immediately it became clear this could be used to arm project managers with enough information to call meetings with other project managers and let everyone else work. There would be little need for them to call you into the meeting if they had all the information (in theory it sounded good). Whatever your interests might be, this article offers a quick step by step way to aggregate a variety of data source's information into a single source to be queried as needed.

I started by doing what we all do when faced with a new problem, search to see who has come across this same problem, and solved it already. My search ended up here on www.SQLServerCentral.com reading Jack Corbett's article Error Handling in SSIS . I downloaded his component (link in the article discussion) and began converting it for use in Business Intelligence Development Studio(BIDS) 2008. Once it was working, I noticed that I wanted to add a few things to the logged output like package name and the user who invoked it.

1. To get started, we need a table to house the error rows in. This is the schema I use, it lets the component auto map the columns from the component to the table - saving a little time on each use.

CREATE TABLE [dbo].[SSIS_ERROR_LOG](

[ErrorLogId] [int] IDENTITY(1,1) NOT NULL,

[ErrorCode] [int] NULL,

[ErrorColumn] [nvarchar](128) NULL,

[ErrorDetails] [xml] NULL,

[ErrorDesc] [nvarchar](256) NULL,

[ErrorStep] [nvarchar](256) NULL,

[ErrorTask] [nvarchar](256) NULL,

[PackageTime] [smalldatetime] NULL,

[PackageName] [nvarchar](256) NULL,

[UserName] [nvarchar](128) NULL,

CONSTRAINT [PK_SSIS_ERROR_LOG] PRIMARY KEY NONCLUSTERED

(

[ErrorLogId] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

2. Using the SSIS.Logging.dll (download at the end of the article), you will need to use gacutil.exe to register the custom component before adding to the BIDS toolbox. I setup a batch script in my bin folder to help with quick deployment as I modify the component.

copy "C:\myfolder\SSIS.Logging\bin\SSIS.Logging.dll" "C:\Program Files\Microsoft SQL Server\100\DTS\PipelineComponents\"

"C:\ETL\gacutil.exe" /if "C:\Program Files\Microsoft SQL Server\100\DTS\PipelineComponents\SSIS.Logging.dll"

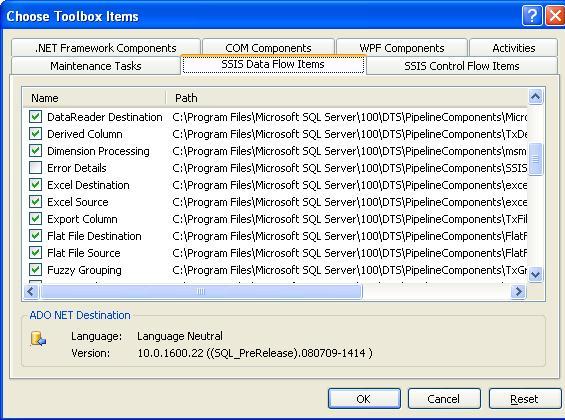

3. Now that you have the component registered, you can add it to your toolbox. Right-click in the Data Flow Transformations pane and click "Choose Items".

Once added, you can now utilize the component for error handling. I setup a simple csv of a few baseball players from Milwaukee (note JJ Hardy is missing a number),

21,Alcides Escobar,12/16/86

28,Prince Fielder,05/09/84

24,Mat Gamel,07/26/85

,J.J. Hardy,08/19/82

9,Hernan Iribarren,06/29/84

8,Ryan Braun,11/17/83

25,Mike Cameron,01/08/73

22,Tony Gwynn,10/04/82

1,Corey Hart,03/24/82

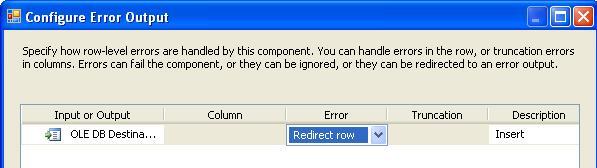

4. I want to load this into a table on my database server. I setup a Data Flow task and connections to the file and database. I map the tranform and then add the Error Output from the database destination component to redirect the row to the Error Details component. Here is what the package looks like after I ran it.

5. I won't cover the basic transformation, but what we want to do is redirect the rows in error to the "Error Details" component.

6. Next, open the Error Details component and go to the Input Columns tab. Add the columns that you want to track to the input buffer of the component.

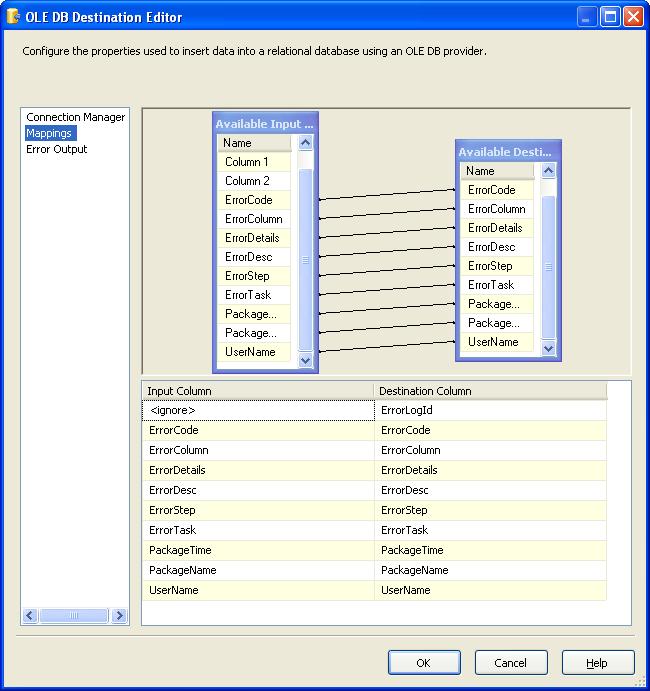

7. After setting up your database connection, map the columns from the Error Details component to the OLEDB destination.

That is it, you can execute your test package and query the bad data. Here is a query that I have come up with to find what baseball player has no number. (Column 0 has no value in this case)

SELECT * FROM ETL.dbo.SSIS_ERROR_LOG

WHERE ErrorDetails.exist ('/fields/field[@name = "Column 0" and @value = ""]') = 1

That is it! If you have any enhancements or thoughts on improving the component, post to the article discussion so everyone can benefit. Thanks to Jack for doing all the initial hard work in his article!