Overview

Our application is a microservice that stores metadata for actual data and is backed by Amazon Postgres RDS. It is deployed in a multi-tenant/multi-region/multi-cell environment. The Gateway handles the multi-region aspect of the system. That is to say, the gateway decides which region/cell to route each tenant. However, there can be multiple tenants in a particular cell and each microservice owns this complexity.

We had to change the data model for our microservice from a single tenant per DB to multiple tenants per DB as a result of a change in the project roadmap. We did this for better scalability and a simpler DB connection pool design. To achieve this we had to migrate all the data from the old DB to the new DB with minimum client impact and zero downtime. Also, we had to ensure that the new service provides maximum data isolation. If one of our tenants gains access to another tenant’s data, then it can permanently damage the company’s reputation. Moreover, we had to deliver all this within a stipulated amount of time.

We found managing all these complexities pretty challenging and on top of that a strict deadline further complicated the situation. However, PostgreSQL RLS policy that was introduced as part of Postgres 9.5 came to our rescue.

Old Data Model

Earlier, before the change, we had each tenant having its own database in the same Amazon RDS cluster instance. For example: if there were 3 tenants in cell1, then each tenant would have its own DB in a single Amazon RDS instance. And each DB would have its own schema and tables as shown in Fig.1.1

Fig. 1.1 Old Data Model

This kind of architecture is pretty beneficial if you want customization on tenant level. However, for our use case this wasn’t a requirement.

New Data Model

Our requirement was an architecture that was:

- easy to scale

- provided data isolation

- provided multi-tenancy support

- was cost effective

- could handle millions of requests per tenant

- had a simple connection pooling design

To achieve this we had to pivot to the data model shown in Fig 1.2 where all tenants belonged to the same DB. Each table had a tenant_id to differentiate the individual tenant records.

Fig. 1.2 New Data Model

Problem

This design helped us achieve all our requirements. However, one major drawback of this design is data isolation. Tenants might access other tenants' data if the SQL queries are not well-formed. The other major problem for us was to migrate existing data and live traffic without making a lot of DAO (Data Access Object) changes.

Our Solution

The solution was to use PostgreSQL Row Security Policies to ensure that queries executed by users only returned the appropriate data. RLS is a policy that restricts the rows that can be returned by normal select queries. Or it can restrict rows inserted, updated or deleted by data modification commands. Also, admins can use it to define policies that control specific rows to display. It can be controlled based on certain criteria like user roles, location or business. For example, you can restrict only the current_user to see/update the accounts table shown in Fig1.1 by setting the account_id to account_manager.

You can also apply multiple policies on a table at the same time. Additionally, policies can cover all or a few SQL statements (SELECT, INSERT, UPDATE, DELETE). For more information, see Row Security Policies on the PostgreSQL website.

How did it resolve our problem?

Our first problem was data isolation. We wanted to ensure that only the data belonging to the tenant firing the request is returned. This can be achieved by adding tenant_id to the WHERE clause. This solution, however, allows for the possibility of human error. We are entirely depending on the developer to have a well formed query. Therefore, to avoid this, we decided to add a row level policy like shown below

ALTER TABLE user ENABLE ROW LEVEL SECURITY;

CREATE POLICY tenant_isolation_policy ON user

USING (tenant_id = current_setting('app.current_tenant')::Text);This policy ensures that whenever a query is fired on table “user” the tenant_id is set. Else there is an error thrown. This policy makes it mandatory for tenant_id to be passed with every query. Consequently, this lowers the chances of human error to zero.

Our second problem was to migrate all the existing data and the live traffic from old DB to new DB with minimum DAO(Data Access Object) changes. In the legacy data model, each tenant had their own DB with tenant_id as the database name. And the DB connection was made to a particular tenant DB. As a result, none of the queries included tenant_id in the WHERE clause. Nor was there any field with tenant_id name in the tables.

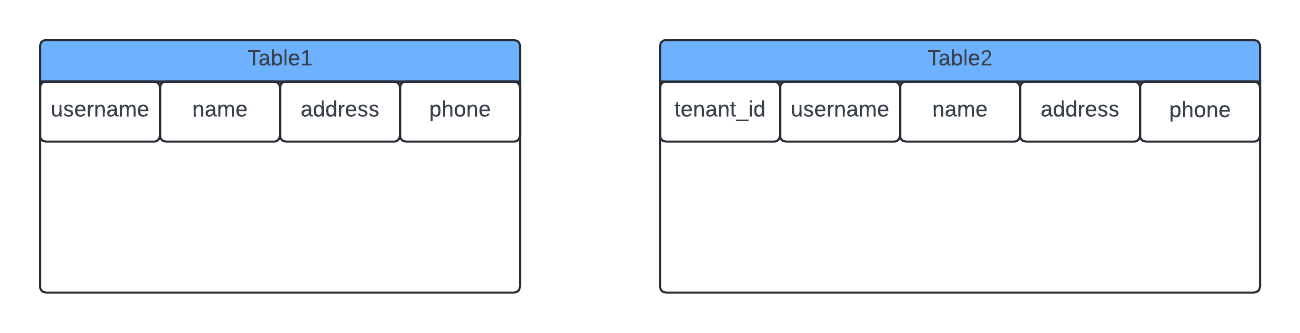

Now, with a change in design, we needed to find a way to maintain logical seperation between the tenants. Hence, we had to introduce tenant_id field in the tables. Therefore, during migration, we had to move all data from Table1, which lacked tenant_id, to Table2, which includes tenant_id as shown Fig 1.3

Fig. 1.3 Tables

This new field would introduce a change in all the queries in the application DAO layer. However, with RLS in place, we did not need to include tenant_id predicate in the SELECT, UPDATE, and DELETE queries. The predicate was applied automatically thus resulting in minimum DAO changes.

Implementation

We wanted to ensure there was minimum downtime and client impact. Therefore, we decided to migrate one tenant DB at a time. Here are the steps we followed

- Firstly, we altered the old table, Table1 in this case, to add tenant_id field with a default value

ALTER TABLE Table1 ADD tenant_id VARCHAR(100) NOT NULL DEFAULT ’tenant_001’;

- Then we created an RLS policy on Table2 to avoid any changes to the DAO layer and reuse the code.

ALTER TABLE Table2 ENABLE ROW LEVEL SECURITY;

CREATE POLICY tenant_isolation_policy ON Table2 USING (tenant_id = current_setting('app.current_tenant')::Text);The current_setting function shown above reads the session variable at runtime. So all we had to do was pass the tenant_id to the active JDBC session when executing the query. This ensured that the subsequent SQL queries were only able to access the data that belonged to the designated tenant_id.

- Then we ensured that the DAO layer executed the following statement before running any SQL query for a given tenant_id.

SET app.current_tenant = '" + tenant_id + "'";

With RLS in place, we did not need to include a tenant_id predicate in the SELECT, UPDATE, and DELETE queries. The predicate was applied automatically.

- After all the above things were in place, we utilized pg_dump to dump the data to Table2.

- Meanwhile, at the DAO layer we also blocked all writes to the old DB and only allowed reads during the period of migration(pg_dump). For all reads, the service would first look for data in the old DB. After that, it would check the new DB only in case it is unable to find it in the old one.

- Finally, we marked Table1 as Table1_old, making the service unable to find the old table and rerouting the calls to Table2.

This approach helped us avoid spending time on legacy code changes and enabled us to reuse the existing code. Thus reducing the overall effort which enabled us to deliver things on time.

Performance

You can think of RLS policy as an automated WHERE clause that is managed by the database engine. It basically adds the tenant_id setting in the above example automatically to the WHERE clause. RLS policies operate quickly for simple examples like the one described above. However, complex RLS policies with JOIN queries, or certain operators or functions may lead to performance degradation. You can find more RLS use cases and their performance implications here and here. And here are some best practices that can be followed to avoid performance hits.

Conclusion

In conclusion, Row Level Security (RLS) is a powerful tool that can be used by multi-tenant applications to enforce logical separation. It's a cost-effective way to share database resources and reduce the risk and overhead of enforcing data isolation. It enables you to enforce policies at one centralised place. Thus reducing the burden on developers and minimising the need for extensive code modifications.