I was trying some things out in a notebook on top of a Microsoft Fabric Lakehouse, and I wondered what the default values of some of the configuration variables are, and whether there’s an easy way to retrieve them all. Luckily, there is. In the code, I’m using Scala because spark.conf has a handy getAll method.

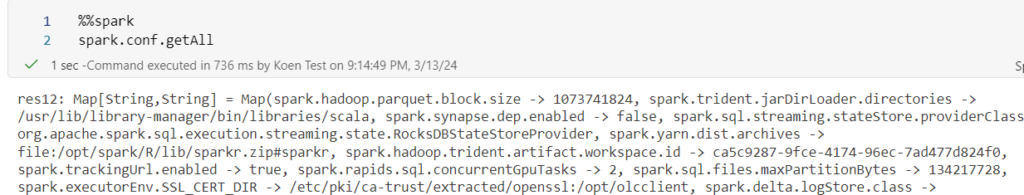

%%spark

spark.conf.getAll

This returns a map type with all of the configurations and their current values:

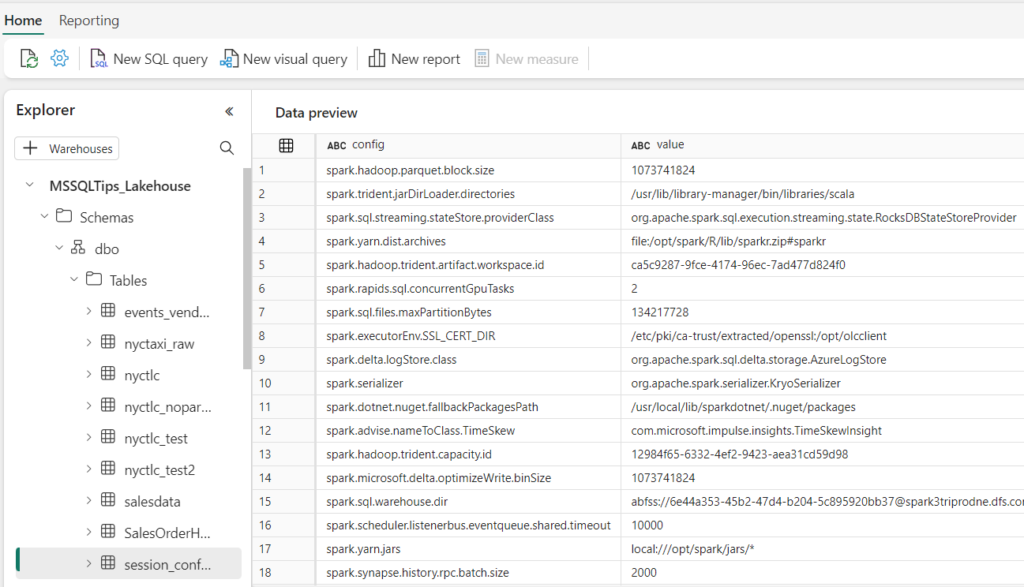
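If you only need a handful of settings, you don’t have to scan the whole map. Here’s a minimal sketch that filters the map by key prefix and sorts the result. The helper name configsWithPrefix and the sample map are made up for illustration (the values are stand-ins, not Fabric defaults); in the notebook you would pass spark.conf.getAll instead of sample.

```scala
// Keep only the entries whose key starts with `prefix`, sorted by key.
// `configsWithPrefix` is a hypothetical helper, not part of the Spark API.
def configsWithPrefix(conf: Map[String, String], prefix: String): Seq[(String, String)] =
  conf.toSeq
    .filter { case (key, _) => key.startsWith(prefix) }
    .sortBy { case (key, _) => key }

// Stand-in data so the snippet runs anywhere.
// In a Fabric notebook, use spark.conf.getAll here instead.
val sample = Map(
  "spark.sql.shuffle.partitions" -> "200",
  "spark.app.name" -> "demo",
  "spark.sql.ansi.enabled" -> "false"
)

// Print the matching settings, one per line.
configsWithPrefix(sample, "spark.sql.").foreach { case (key, value) =>
  println(s"$key = $value")
}
```

This works on the plain Scala map, so nothing is written to the Lakehouse.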

The raw map isn’t exactly easy to look through, so let’s dump this data into a table. We store the map in a variable, convert it to a sequence and then to a data frame. Finally, we write that data frame to a Delta table:

%%spark

val m = spark.conf.getAll

val df = m.toSeq.toDF("config","value")

df.write.mode("overwrite").saveAsTable("session_config")

The result is a nicely queryable table:

UPDATE

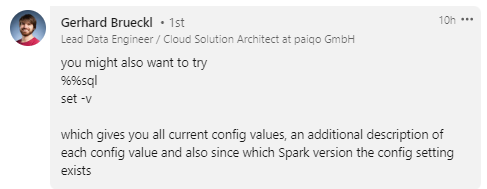

I got an interesting comment on LinkedIn from Gerhard Brueckl:

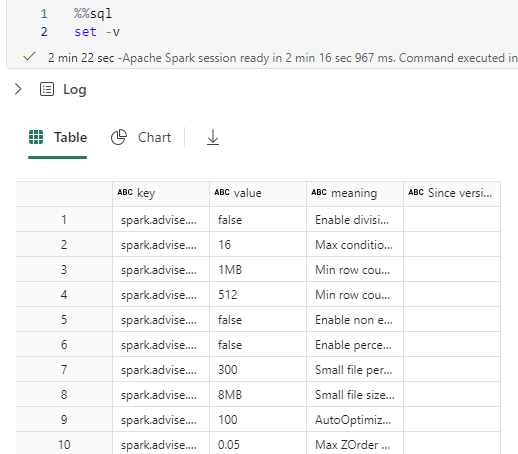

So I tried the statement, and it returns a long list of config values (which you can export to CSV):

However, this list differs from the one I extracted earlier in this post. My post is about the session config, while SET -v returns the Spark SQL configuration properties. It’s still very interesting, though, especially because each config comes with a short explanation.

UPDATE 2

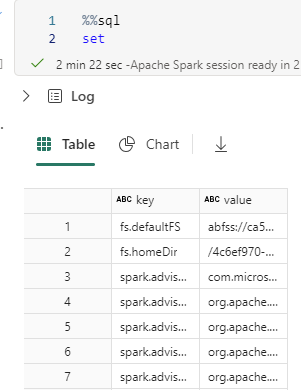

If you run SET without the -v parameter, you do get the current session config instead of the Spark config.

If you just want a quick export to CSV, this is a good option. If you want to dump it into a table in Fabric, the Scala option is better.
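The two approaches can also be combined, since spark.sql returns a data frame for a SET statement as well. Below is a rough sketch, not a tested recipe: it assumes the SET -v result has columns named key, value and meaning (which is what I’d expect on Spark 3.x), and it keeps only those three because any additional column might have a name that a Delta table rejects. The function names and the table name are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Run SET -v and keep the config name, its value and its description.
// Assumption: the result exposes columns called key, value and meaning.
def sqlConfWithDescriptions(spark: SparkSession): DataFrame =
  spark.sql("SET -v").select("key", "value", "meaning")

// Write the result to a table, replacing any previous contents.
// `tableName` is whatever you choose; nothing here is Fabric-specific.
def saveSqlConf(spark: SparkSession, tableName: String): Unit =
  sqlConfWithDescriptions(spark)
    .write
    .mode("overwrite")
    .saveAsTable(tableName)
```

In a notebook cell, where spark already exists, you would call something like saveSqlConf(spark, "spark_sql_config").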

The post How to Retrieve all the Spark Session Configuration Variables in Microsoft Fabric first appeared on Under the kover of business intelligence.