ssas memory issues even after switching over to table level refreshes

-

February 20, 2024 at 9:07 pm

Hi, we just moved to 2019 std from 2016 std. We comingle the engine, ssas and ssrs on one server. I just realized that i allowed my post at https://www.sqlservercentral.com/forums/topic/is-there-a-way-to-process-a-tabular-models-tables-in-sequence to go off on a tangent so i'm going to carefully move the memory part of that discussion here and just leave the tsml sequence part of it there.

Since our move my "50 million row fact/approx 10 related dim" tabular model started going down from sql agent as well as manual full refreshes at the db level. I tried separating the dim table full refreshes (step 1) from the fact full refresh (steps 2) but step 2 continues to go down with a memory issue. At the moment i've left the compatibility level at 2016. Interestingly the same model from exactly the same data source refreshes full at the db level on the old server. Two of the most noticeable differences between the servers are old has 8 processors, new has 4. But who knows if there is a good reason for that. And maxdop on old server is 0 but 2 on new. So here are the details in which i hope someone sees a big flaw...

My gut tells me that partitioning would be overkill for such a small fact table. But that rather we aren't managing memory correctly. We know we have 88 gig of ram on the new server, 4 processors, maxdop 2, memory on the general tab in ssms shows 90111 mb but on the memory tab shows a high of 54789 mb (probably reduced from before). Interestingly, the server we came from had maxdop 0. We started watching and believe that sql (the engine) was grabbing somewhere near 60-65 gig of that memory before our dba adjusted sql's allocation. And as i recall from reading years ago sql can be pretty stingy with that. So as we were watching we got the impression that ssas was maxing out around 10 gig when i purposely ran a full refresh on the db at the same time. I'm only guessing but believe right now that ssas is forced to take some chunk of what is "left" from 88 gig after subtracting what sql engine is using. Our dba turned down sql's allowable memory and we saw ssas then max out around 12 gig. But i dont know how much he turned sql's allocation down. From other visuals on the server's dashboard we concluded that we arent near capacity in memory usage. So at the moment i am guessing that we need to turn sql's allocation down further. I should add that on the screen in ssms where you can see the vertipac mem limit as well as ssas's hard/low/total memory limit, i see hard as 0,0,0 for value/current/default, low as 65 , 65, 65 and total as 80,80,80. I have to read. To complicate things we are trying to get a phoenix backup product working there. I know its a hog on cpu but didnt pay attention to how much memory it was using.

-

February 20, 2024 at 11:19 pm

It's been a long time since I had to deal with this problem, but I think it helped to run a process clear before process data. According to the link below the processing of a table is a transaction that can be rolled back. This means that the old data are retained in memory while the new data are being built, using close to twice the memory the model normally requires. I think running the process clear avoids the need for memory overhead, but you also don't have anything to fall back to if the process fails. I can't find any suggestions to use process clear to help with memory issues, so perhaps it's not a valid approach, but I would try it. If it still fails then I would try partitioning the fact. Partitioning gives you control over how much data to process, but it does require maintenance.

https://www.wiseowl.co.uk/blog/s2484/processing-theory.htm

-

February 21, 2024 at 8:49 pm

we tried your idea Ed B, thank you. I think its going to help in the long run. At the same time we temporarily decreased sql server's (the engine) high memory limit and have concluded one thing for sure...that limit constrains ssas's ability to grab available memory. Before we think about partitioning, we are going to upgrade our new server with the cores and degrees of parallelism it should have, leave the reduced high mem limit alone and see if somehow this cube can refresh on the new server just like it does on the old one using 8 cpus (instead of 4) and max dop 0 instead of 2. I have a few concerns with partitioning...1) we have to swap out our current power bi gateway connection for this new one and who knows if pbi will refuse it because there is a change and cause us to recode our reports and dashboards, 2) 55 million fact records seems to me premature for partitioning , 3) introducing higher maintenance costs before managing memory properly seems a bad practice, 4) if it works on the old server as is, which it does, it should work on the new server as is.

-

February 21, 2024 at 10:48 pm

I believe SQL Server Standard limits a tabular instance to 16 GB. It sounds like you may be getting close if you have seen it max out around 12. Is the fact table increasing with time or do you limit data to a rolling window? If it's getting bigger it may become more of a problem later.

This link is quite old, but it has some suggestions concerning memory. https://byobi.wordpress.com/2016/12/08/how-much-ram-do-i-need-for-my-ssas-tabular-server/

-

February 22, 2024 at 2:26 pm

thanks Ed. Do you believe that 16 gig to be db sizes on disk or maybe total available (to ssas) ram?

Our 3 tabular model dbs total about 5 gig in disk space.

The 3 ton elephant in the room is "why does it work fine on a (our previous) 2016 server?". Same exact source. Same exact model.

The source fact table is growing by about the same volume yoy. but ity seems we have a different issue if the same volume is refreshing on a 2016 server , same model.

-

February 22, 2024 at 4:33 pm

My understanding is that there is a 16 GB limit for RAM per tabular instance.

If the total used by all databases is 5GB you should be fine. I think the memory you need for processing will be that 5 GB, plus the size of the database being processed.

It is weird that it worked on one server, but not the other. I would look at the memory settings for SQL and review how much it is actually using. Maybe the old server used less memory because it had more processors and the maxdop wasn't limited to 2. This is a complete guess though. It does seem that the tabular memory requirements are small. Are the two sources truly identical? Are the SQL databases in the same compatibility mode? Are the execution plans the same for the fact load queries? Are the stats up to date on the new server?

-

February 22, 2024 at 9:04 pm

what we saw before the processing started was ssas using about 10 gig for nothing specific that we know about. I think that's where it is always at rest. When and while processing was running for one db we watched it inch toward 16 gig which we assume right now wasnt enough at least for this # of processors. That db is between 3 and 4 gig physically, presumably in a compressed state. the compatibility level on the cube dbs is identical. I have to look at the compatibility level on the engine stuff. and dont know at this point about the plans but i believe the model just references the tables directly with no intermediate queries and is aware of their relationships. i'll have to look at stats.

-

February 22, 2024 at 9:39 pm

stan wrote:what we saw before the processing started was ssas using about 10 gig for nothing specific that we know about. .

Tabular models are loaded into memory when the instance starts, that's what makes them so fast. If it is at 10GB when everything is processed then it sounds like your models take up 10GB of RAM. If the one model is 4 GB then you will need 14 GB to process it unless you clear it from memory first. When that model hits 6 GB you may be beyond the limits of SQL Server Standard unless you canmake it smaller (if I have read it correctly).

I know this doesn't explain why one works and one doesn't, but if you are reading the entire table you may be including columns you don't need. If the fact table has an identity column it likely has no meaning and as a unique column it won't compress well. Any column that is not a dimension key, a measure or a required attribute should probably be excluded from the source query. Obviously I have no idea what your table looks like so I'm guessing what might help.

-

February 22, 2024 at 10:21 pm

you been advised to do process clear before process full - you still not doing it based on your last script.

you rmemory (tabular) likely not set correctly either - as well as your vertipacpolicy (to use Windows paging file)

you need to change the process so you do a clear, then one db at the time, starting with the biggest ones.

and if all your db's don't fit into a single Tabular instance, create more - limit of 16GB is per instance, not server.

and finally you will need a lot more than 88GB of ram on that server - or your SQL instance will starve

-

February 23, 2024 at 2:28 pm

thx frederico. you should have seen somewhere me saying clear didnt help. Neither did doing table by table after the clear. Neither did splitting the dim refreshes into one step and the fact into another both followed by clears.

Your help is appreciated.

i think the ssas memory settings are defaults. If you have suggestions that would be great. I believe they are being ignored anyway (except maybe for vertipac which i understand conceptually as the column store and dictionary engine but have to read about to understan as a memory consideration) and they all worked fine with those same values on the old 2016 server.

the problem is with just one cube's refresh and it wasnt a problem on the 2016 server with the exact same data source and model. And its only 3.6 gig. And the other 2 combined are about 1 gig. And all 3 refreshed without incident on the old server.

finally i dont see that server starving right now. I watched for any stress and didnt see any. Its a WH server and i dont believe its doing much beside housing and trying once daily to refresh 3 tabular models (one which is close to 1 gig and nobody uses), servicing some random ssrs , adhoc and .net based report requests against the engine and servicing random pbi and excel based pivot requests against the cubes, specifically 2 of the cubes, one of which is only 4 meg, yes i said meg.

it does some crunching each morning between 3 and maybe 6:30 am. In about 12 steps it ingests sales data from 12 different erps sequentially to the warehouse followed by 3 steps in which the cube refreshes are attempted. Nobody would care if i deleted the 1 gig cube. But it sure would be nice to understand exactly how its presence affects one way or the other the refresh of the 3.6 gig cube. Admittedly i didnt clear it too while experimenting with the clear command

-

February 23, 2024 at 7:40 pm

what i did in response to frederico's post was add a clear step on our 3rd cube prior to the dim table level sequenced refreshes (was step 1) and fact table level refresh (was step 2).

This 3rd item is a 1 gig cube. Im assuming all 3 of our cubes are sitting in memory when things are otherwise idle because when i went to look at memory usage this time, ssas was way down from its normal 10 gig baseline at about 6 gig. And while it was doing its thing, never went much above 9 gig. Whereas in prior tests i saw it around that 16 gig limit everybody talks about.

i havent done the recalc yet which i assume is necessary since i refreshed the 3.6 gig cube at the table level. And will be curious to see what happens if i try to pivot the 1 gig guy whose clear i just added, my assumption is that ssas will load it back into memory for me without a hitch.

-

February 23, 2024 at 7:44 pm

have a look at attached spreadsheet - it is what I use to setup my servers.

on your case you will need to manually ensure that the SSAS memory does not go over 16GB on cell B6

and read these

https://www.sqlbi.com/articles/optimizing-memory-settings-in-analysis-services/

https://www.sqlbi.com/articles/memory-settings-in-tabular-instances-of-analysis-services/

https://shabnamwatson.com/2018/12/09/understanding-ssas-memory-settings-with-real-examples/ - this one makes it clearer the usage of the memory settings and the fact they are percentages over total server memory vs what we think SSAS can use

you should also compare the contents of your old server config file vs new server

msmdsrv.ini - normally located on ?:\Program Files\Microsoft SQL Server\MSAS??.Instance_Name\OLAP\Config

settings also visible through the properties of the instance, tick show advanced

EDIT below

also look at https://www.sqlbi.com/articles/data-model-size-with-vertipaq-analyzer/ for a tool to analyze your cube.

and look through your data and column definition - datetime columns for example are one of the targets to change and split date from time into 2 different columns - memory savings can be significant.

String fields may sometimes benefit of a split - I have a cube with 2 Billion rows and some of the attributes contain data that are basically the same string up to char 30 (some variations) followed by some very distinct values - by splitting this column into 2 we saved over 5 GB on the cube. there is a "cost" from a development point of view on the reporting in that these need to be rejoined for visualization.

- This reply was modified 2 years ago by frederico_fonseca.

Attachments:

You must be logged in to view attached files. -

February 23, 2024 at 7:56 pm

apparently clearing means its gone unless you plan to reprocess. its a catch 22.

for me right now this is ok because nobody uses the 1 gig cube anyway.

i'm guessing that having put yet another clear in the fact table refresh tran step probably made my dim info disappear, so i'll retry without that clear. and just leave 1 clear on the 3.6 gig cube right in the dim table level refresh.

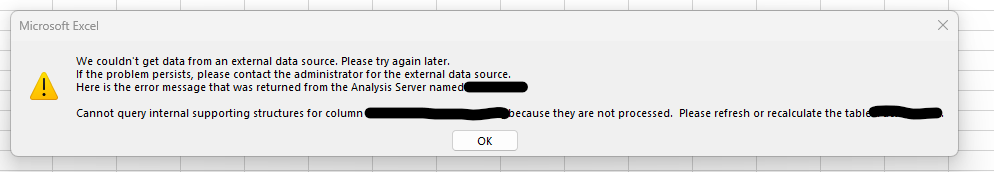

this is what i got pivoting the 1 gig cube in excel

- This reply was modified 2 years ago by stan.

Viewing 13 posts - 1 through 13 (of 13 total)

You must be logged in to reply to this topic. Login to reply