I’ve been building lakehouses using Databricks Unity catalog for a couple of clients. Overall, I like the technology, but there are a few things to get used to. This includes the fact that primary key and foreign key constraints are informational only and not enforced.

If you come from a relational database background, this unenforced constraint may bother you a bit as you may be used to enforcing it to help with referential integrity. It is still otherwise useful when paired with data exploration or analytics tools that can detect the constraints and use them to help a user write a query or build a semantic model.

Let’s look at an example of the consequences of primary key constraints not being enforced.

First, let’s create a new catalog and schema in a Unity Catalog metastore.

Create Catalog devcatalog1;

Use catalog devcatalog1;

Create schema myschema;Next, we create a table with a primary key constraint and insert two rows.

CREATE TABLE IF NOT EXISTS devcatalog1.myschema.table1

(id Int NOT NULL, columnB String, CONSTRAINT table1_pk Primary Key(id));

INSERT INTO TABLE devcatalog1.myschema.table1

VALUES

(1, "one"),

(2, "two");Then we can create a second table that has a foreign key constraint that goes back to the first table.

CREATE TABLE IF NOT EXISTS devcatalog1.myschema.table2

(table2id INT NOT NULL Primary Key, table1id INT NOT NULL, EventDate date NOT NULL,

CONSTRAINT table2_table1_fk FOREIGN KEY(table1id) REFERENCES devcatalog1.myschema.table1);

INSERT INTO TABLE devcatalog1.myschema.table2

VALUES

(1, 1, '2023-06-15'),

(2, 1, '2023-06-15'),

(3, 2, '2023-06-15');If we then insert a new row into table1 that has an id value of two, we are violating our primary key constraint. But nothing happens (no error is thrown) because it is unenforced.

INSERT INTO TABLE devcatalog1.myschema.table1

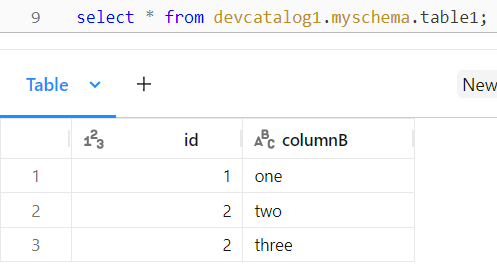

values (2, 'three');Querying table1 shows our duplicate values in the id column.

Now we don’t know which row the foreign key constraint in table2 was supposed to reference.

What do we do now?

Unenforced constraints are not the end of the world. Many people argue that referential integrity should be maintained in the business logic layer code that populates the tables rather than in the database engine. Enforced constraints in the database are a failsafe when the code that updates the tables fails to maintain that referential integrity. For now, we just have to make sure that the way we populate the tables does not allow us to violate these constraints, even when Unity Catalog doesn’t stop us. You may have to check whether rows exist before inserting new ones. And you may need data quality checks after batch loads to make sure something wasn’t missed. But you likely needed those things anyway. This is definitely something that can be worked around. And it’s likely an issue in many MPP systems, so it is something to think through and have a design pattern ready to handle.

Note that as of November 2023, primary key and foreign key constraints are in preview in Databricks, so there may be more enhancements to come.

Get the notebook

If you need to explain this concept to someone else, feel free to grab my example notebook from Github.