UPDATE query is running slow and is causing blocking

-

Hi All,

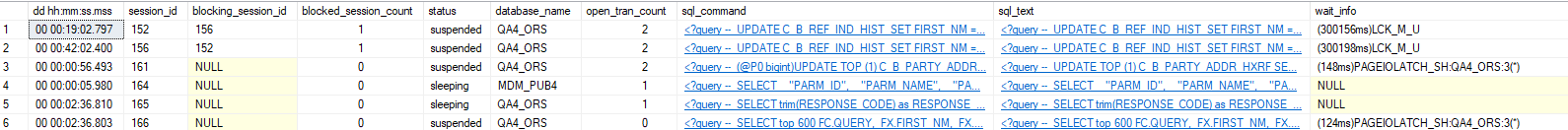

We have an UPDATE query which is causing a lot of blocking in QA env.

Its taking more than 4 hours and keep running and we see a waittype as "(151ms)PAGEIOLATCH_SH:QA4_ORS:3(*)".

Worst part is, the app team has scheduled this stmt at multiple times of the day. Even before the 1st UPDATE completes, the same UPDATE stmt comes up with a different sessionid? No idea why they are doing so but resulting in lot of heavy blocking. I want to check if they can first archive old data and then perform the UPDATE. but sooner or later as the data gets increased we get back to same problem.

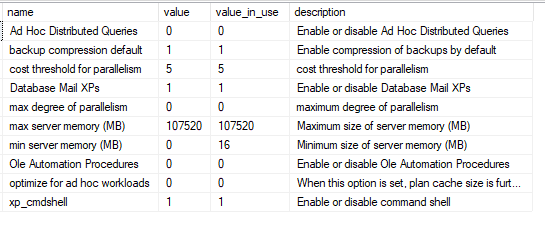

The server has 8 cpu's. 128GB RAM and max server memory set to 105 GB.

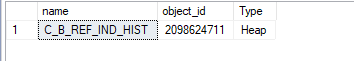

This is a history table. There is no PRIMARY KEY on this table. Its a HEAP.

The table size is 74GB and it has 34,866,803 rows. Its a wide table with 90+ columns.

Please let me know if there is any better way to re-write this below query.

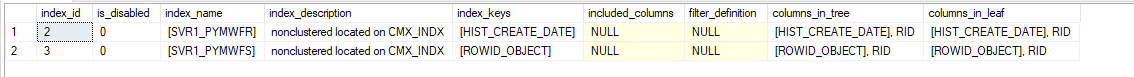

Attaching table structure, index details and Estimated plan. if you need more info, please let me know.

UPDATE C_B_REF_IND_HIST

SET FIRST_NM = '********',

MIDDLE_NM = '********',

LAST_NM = '********',

YEAR_OF_BIRTH = '*****',

AGE_RANGE = '********',

GENDER_CD = NULL,

LOCATION = '********',

CITY = '********',

REGION = '********',

COUNTRY_CD = '********',

ORGANIZATION = '********',

JOB_TITLE = '********',

PRIMARY_PHOTO = '********',

LINKED_IN = '********',

FACEBOOK = '********',

TWITTER = '********',

EMPLMT_DTL = NULL,

EDU_DTL = NULL

WHERE FIRST_NM = '********'

Estimated plan link

https://www.brentozar.com/pastetheplan/?id=By2xN1BV6

Thanks & Regards,

Sam

Attachments:

You must be logged in to view attached files. -

November 17, 2023 at 2:02 pm

the issue should be clearer even for someone not experienced.

there is no index on the FIRST_NM - so for each update it needs to do a table scan.

add a index on the FIRST_NM and it should speed up

-

November 17, 2023 at 3:15 pm

"...There is no PRIMARY KEY on this table. Its a HEAP...."

The lack of a primary key does not make the table a heap. ( it is more a lack design considerations )

The lack of a clustering index makes the table a heap.

As Frederico explained, the lack of a usable index for your query causes every statement to have to scan the full table to perform its operation.

Johan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

November 17, 2023 at 3:37 pm

and something I've noticed - your SQL settings are default ones - you should change them - maxdop normally should not be set to zero, and Cost Threshold for Parallelism should definitely be set to a higher value - optimal value would need to be determined by you but I would start with 50.

MAXDOP depends on how many cores/vcpu's you have on that server.

-

November 18, 2023 at 9:11 am

In addition to, creating a non-clustered index on "first_nm" column, is there a way to make it run faster? can it done in small chunks ?

Regards,

Sam

-

November 18, 2023 at 9:45 am

the code you gave us is already doing it in "small" chunks - indeed its doing it on the smallest possible chunk size.

and that is what should not be done normally - you should update multiple rows in one go when possible - only when volumes are very high and server is limited do you get to a position where you may be better off updating smaller volumes (like 100k rows at the time)

-

November 19, 2023 at 5:14 am

frederico_fonseca wrote:the code you gave us is already doing it in "small" chunks - indeed its doing it on the smallest possible chunk size.

I didn't get it. the code doesn't have any batch size. Smallest possible chunk size? could you please elaborate on this. you mean, when nothing is specified, SQL is updates each row at a time? correct me if am wrong.

you should update multiple rows in one go when possible

How this can be done? any example please?

Regards,

Sam

-

November 19, 2023 at 9:34 am

vsamantha35 wrote:frederico_fonseca wrote:the code you gave us is already doing it in "small" chunks - indeed its doing it on the smallest possible chunk size.

I didn't get it. the code doesn't have any batch size. Smallest possible chunk size? could you please elaborate on this. you mean, when nothing is specified, SQL is updates each row at a time? correct me if am wrong.

you should update multiple rows in one go when possible

How this can be done? any example please?

Regards,

Sam

regarding first question.

it does not need to have a batch explicitly specified - but if it had, and as you should know, the batch size could be anything from 1 to yyy - so by default, a single update with a single value (even if your criteria on this case could update more than 1 row) would be considered a batch size = 1.

regarding second question - if you don't know how you can update multiple rows in one go I would suggest that perhaps you should consider a change in career - as the cheer volume of posts you had here would indicate that you should indeed know how to do it.

with regards to your app and their code - they need to explain what they are doing and discuss best ways to improve it - if their updates are from a UI where user is updating a single row/entity then yes a single update is likely the only thing required - but in this case the underlying tables MUST have the correct indexes - and in a very high likelihood they should also have a correct clustered index (and additional indexes) to help support their updates and their queries.

-

To be honest, you need to challenge their design... this table is a HISTORY table! Why are they updating a HISTORY table? It should be an INSERT ONLY table that records history.

I also can't speak for your shop but this is why any company that has to pass SOC2 and other audits forbids developers from promoting their own code to production. It has to go through an honest peer review, then through QA, then through a DBA review, then through UAT. No one in their right mind in that chain of inspections should allow a system where a history table is updated.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 20, 2023 at 11:52 am

Thank you Jeff and Frederico.

Viewing 10 posts - 1 through 10 (of 10 total)

You must be logged in to reply to this topic. Login to reply