High tempdb usage alternatives

-

September 27, 2023 at 6:45 pm

Hi

We have an stored procedure which runs frequently and it contain logic in which data are first dumped in temproray table and then moved to physical table .due to large data and frequent run of sp .tempdb database get filled up quickly .We cannot take server restart everytime so whats alternative to temp variables so as to minimized tempdb usage so that file size donot get spike

Regards

Anoop

-

September 27, 2023 at 8:20 pm

Increase the size of your tempdb files.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

September 27, 2023 at 8:49 pm

SQL Server will clean up temporary table when the stored procedure has finished but you can also drop the temporary tables within the stored procedure whenever they are finished with in the procedure.

tempdb should be sized to your application's needs so best advice, as Scott said, is to increase the size of tempdb.

-

September 28, 2023 at 7:27 am

As stated by the previous repliers, you must size your tempdb file(s) to be able to cope with your systems consumptions!

Things that may help out :

- grant your service account "Instant File initialization"

- provide multiple files for your tempdb ( I use 1 file per core on our smaller systems )

- pre-size all your tempdb files

Johan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

September 28, 2023 at 12:34 pm

In addition to all the other good advice, look at the logic of the query. Filter data on retrieval instead of loading everything into temporary tables for processing.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

September 28, 2023 at 6:23 pm

anoop.mig29 wrote:Hi

We have an stored procedure which runs frequently and it contain logic in which data are first dumped in temproray table and then moved to physical table .due to large data and frequent run of sp .tempdb database get filled up quickly .We cannot take server restart everytime so whats alternative to temp variables so as to minimized tempdb usage so that file size donot get spike

Regards

Anoop

You do realize that you've told us nothing about the current size and file configuration of your TempDB and nothing about the size of the Temp Table the code is trying to build nor whether it was the MDF/NDFs that got too large or if the LDF got too large or it was a combination of both, right?

I've also found that a lot of code that does such things with large tables are frequently done incorrectly. For example, a large Temp Table needs to be loaded in a "Minimally Logged" fashion and, if a clustered index is beneficial, needs to be added before any data is so that you don't effectively double the amount of space required for the full load, etc, etc.

To summarize, we need a whole lot more information to effectively help. Without it, we're just guessing.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 4, 2023 at 2:07 pm

Heh... Ok! The OP has left the building!

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 8, 2023 at 10:49 am

Hi folks sorry for reverting too late

ScottPletcher wrote:Increase the size of your tempdb files.

Sorry cannt do it as size of disk on which tempdb resides is limited.Wont get permission to increase its size.

Johan Bijnens wrote:As stated by the previous repliers, you must size your tempdb file(s) to be able to cope with your systems consumptions! Things that may help out : - grant your service account "Instant File initialization" - provide multiple files for your tempdb ( I use 1 file per core on our smaller systems ) - pre-size all your tempdb files

I think we have followed all best practice regarding tempdb

Jeff Moden wrote:anoop.mig29 wrote:Hi

We have an stored procedure which runs frequently and it contain logic in which data are first dumped in temproray table and then moved to physical table .due to large data and frequent run of sp .tempdb database get filled up quickly .We cannot take server restart everytime so whats alternative to temp variables so as to minimized tempdb usage so that file size donot get spike

Regards

Anoop

You do realize that you've told us nothing about the current size and file configuration of your TempDB and nothing about the size of the Temp Table the code is trying to build nor whether it was the MDF/NDFs that got too large or if the LDF got too large or it was a combination of both, right?

I've also found that a lot of code that does such things with large tables are frequently done incorrectly. For example, a large Temp Table needs to be loaded in a "Minimally Logged" fashion and, if a clustered index is beneficial, needs to be added before any data is so that you don't effectively double the amount of space required for the full load, etc, etc.

To summarize, we need a whole lot more information to effectively help. Without it, we're just guessing.

Jeff Moden wrote:anoop.mig29 wrote:Hi

We have an stored procedure which runs frequently and it contain logic in which data are first dumped in temproray table and then moved to physical table .due to large data and frequent run of sp .tempdb database get filled up quickly .We cannot take server restart everytime so whats alternative to temp variables so as to minimized tempdb usage so that file size donot get spike

Regards

Anoop

You do realize that you've told us nothing about the current size and file configuration of your TempDB and nothing about the size of the Temp Table the code is trying to build nor whether it was the MDF/NDFs that got too large or if the LDF got too large or it was a combination of both, right?

I've also found that a lot of code that does such things with large tables are frequently done incorrectly. For example, a large Temp Table needs to be loaded in a "Minimally Logged" fashion and, if a clustered index is beneficial, needs to be added before any data is so that you don't effectively double the amount of space required for the full load, etc, etc.

To summarize, we need a whole lot more information to effectively help. Without it, we're just guessing.

Well i think its increase both log and mdf/ndf files of tempdb .How to get size of temp table ?

- This reply was modified 2 years, 4 months ago by anoop.mig29.

-

October 8, 2023 at 11:42 am

anoop.mig29 wrote:Well i think its increase both log and mdf/ndf files of tempdb .How to get size of temp table ?

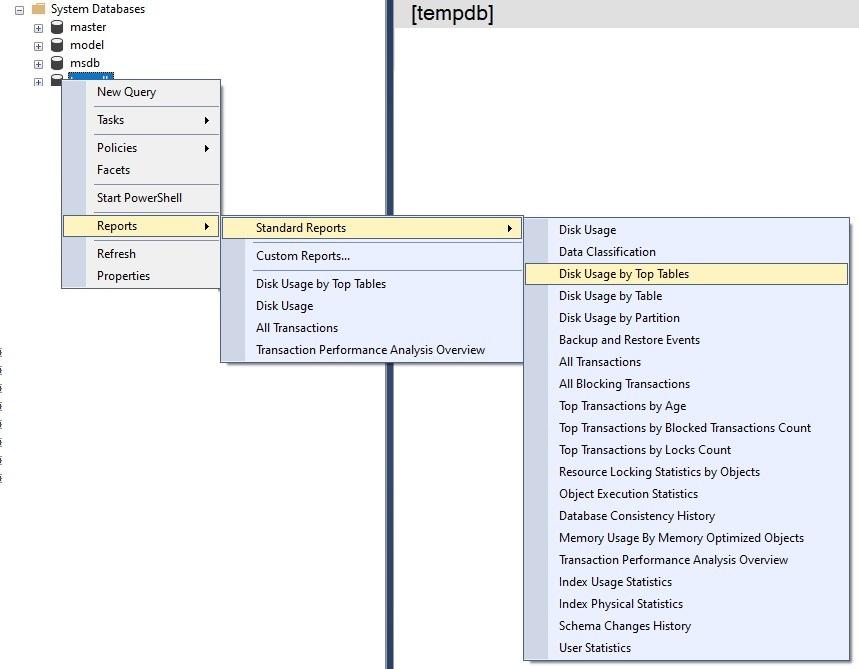

One way of doing it is to right click on the database in SSMS and then select Reports\Standard Reports\Disk Usage by Top Tables

You can split a tempdb over multiple disks. So you can just add a data file for it to another disk.

-

October 9, 2023 at 11:01 pm

anoop.mig29 wrote:Well i think its increase both log and mdf/ndf files of tempdb .How to get size of temp table ?

For the first statement, you don't actually know if that's a good idea or not because you don't know how to do the second statement. 😉

You've also not yet told us how big your TempDB is, how many files there are, what their sizes are, what their growth factors are, and nothing about log file in TempDB nor anything about the size of the disk(s) TempDB is on. We need to know all that, please.

Last but not least, we need to know where the code is. Is it contained in a stored procedure? Is it contained in an SQL AGENT job? Where is the code and what is firing it if it's not in a proc or a job?

I need to know all of that so I can tell you where to put the code that will return information about the size of the table in TempDB as it happens.

Also, do you have a scratch or DBA database that we can write to so you don't have to go searching through run logs to find this information? For that matter, do you even have run logs?

Like I said, so far, you haven't given us enough information to help you with this problem. Please claw through the above and provide it all, please. There's nothing I've asked for that would compromise security.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 10 posts - 1 through 10 (of 10 total)

You must be logged in to reply to this topic. Login to reply