Fact Table - Various Grains

-

June 9, 2023 at 12:06 am

Hi there, I'm on a team developing an enterprise data warehouse for our organization. I'm working in one area of the business where the grain is a bit of a challenge. It's a classic order header/order detail scenario. I'm committed to modeling (at least the main fact) with a combined work order header/detail star..so no snowflake between header/detail and no work order dimension or anything like that. The challenge is that several elements of the data don't really roll up. A work order has a lot of different types of information such as:

HEADER:

- Work order id

- vehicle

- date time open & closed

- total labor cost

- total labor hours

- total parts cost

- total parts hours

- days between open/close

The detail section will contain all the labor lines, all the parts lines and so forth. The labor and parts costs roll up, but for example, the days between open/close do not come from the line detail...so that column would just be a repeating metric value which we want to avoid. I can't derive it from all the labor lines. Additionally, some metrics would be derived from a subset of the work order header/detail fact (e.g., on time preventive maintenance based on a specific type of task only)

Next, there are downtime hours and delay hours. Neither of those metrics come from the labor or parts lines. They're tracked disparately but connected to the work order.

Focusing on just the header/detail, I've modeled a very simple version of a header and detail table (variables). I'm wondering if anyone has any suggestions how you would deal with a metric that is not coming from a child table that becomes a repeating value. Allocation doesn't really make sense in this case.

SAMPLE SOURCE FOR FACT ETL

declare @workorder table (work_order_id int, vehicle_id int, labor_hours float, labor_cost float, parts_qty int, parts_cost int, date_open datetime,

date_closed datetime, days_open_close float)

insert into @workorder (work_order_id, vehicle_id, labor_hours, labor_cost, parts_qty, parts_cost, date_open, date_closed, days_open_close)

values

(1, 100, 10, 1000, 5, 4000, '2023-01-01 10:50', '2023-01-05 10:00', 3.97)

declare @workordertask table (work_order_id int, record_type varchar(10), task_type varchar(50), employee_id int, labor_start_datetime datetime,

labor_end_datetime datetime, labor_rate float, labor_hours float, labor_cost float, part_id varchar(30),

part_qty int, part_price int, part_cost int)

insert into @workordertask (work_order_id, record_type, task_type, employee_id, labor_start_datetime,

labor_end_datetime, labor_rate, labor_hours, labor_cost, part_id, part_qty, part_price, part_cost)

values

(1, 'Labor', 'Preventive Maintenance A', 17, '2023-01-01 12:00', '2023-01-01 14:30', 2.5, 100, 250, null, null, null, null)

,(1, 'Labor', 'Repair steering', 17, '2023-01-01 16:00', '2023-01-01 23:30', 7.5, 100, 750, null, null, null, null)

,(1, 'Parts', 'Repair steering', null, null, null, null, null, null, 'Steering Column', 1, 500, 500)

,(1, 'Parts', 'Repair steering', null, null, null, null, null, null, 'Power Steering fluid', 7, 500, 3500)

--sample source for a fact ETL

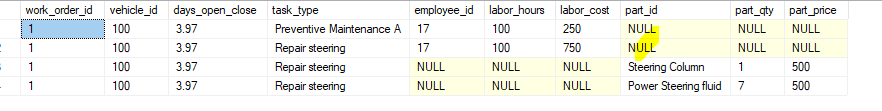

select w.work_order_id, w.vehicle_id, w.days_open_close, t.task_type, t.employee_id, t.labor_hours, t.labor_cost, t.part_id, t.part_qty, t.part_price

from @workorder w

join @workordertask t on t.work_order_id=w.work_order_idSample output

Note days_open_close will be repeating and cannot be reproduced by any line item details

The 2nd part to this is in the same data set, we have a business process that is wholly dependent on rows where the task type will be preventive maintenance (so for example, row 1 here). Would you all create a separate fact table that is built around the 1 type of row? (i.e., rows from this fact where task type is "Preventive Maintenance _")

Thanks!

- This topic was modified 2 years, 8 months ago by flamblaster.

- This topic was modified 2 years, 8 months ago by flamblaster.

-

June 9, 2023 at 2:16 am

FLOAT uses 8 bytes (total of 16 bytes and possibly not appearing as you might like). A DECIMAL (9,1) and DECIMAL(9,2) each use only 5 bytes. So my first question is why are you using FLOAT for labor_hours and labor_cost?

My next question would be, how did you end up with 5 for parts_qty in in @workorder when there are either 2 lines for 2 different parts or a total of 1+7 or 8 parts in the @workordertask sample data?

Since @workorder and @worordertask are the "source tables", I'd normalize those as follows...

And, as a suggestion, you should have two tables... one for labor and 1 for parts. That way, you don't have a shedload of NULLs to deal with and, no... nulls don't take 0 bytes except if you get very lucky with the physical placement of variable width columns and there are no variable width columns to the right of them that contain data. Otherwise, NULL variable width columns with occupy two bytes.

I'd also use some form of integer for the for both the record_type and task_type, although the record_type may be redundant if you split labor and parts into two separate tables as I recommend above.

I'd also use an actual part_id (integer) instead of the name of a part.

Then, instead of working with a date warehouse, which will usually be out of date and need constant updating by some expensive ETL process, I'd consider using an indexed view to replace the need for a WH table. You might need to add a either and auto-incrementing number to the @workordertask table to be able to create the Clustered Index on the indexed view (a requirement for indexed views).

Indexed Views in SQL Server are not quite the same as a "Materialized View" in other database engines. Indexed Views will auto-magically stay up to date as data is inserted or updated into the underlying tables. You have to tell "Materialized Views" in other database engines to "refresh". That's as bad as or worse than an ETL process because it requires an "outage".

That would also make the creation of your days_open_close column super simple and, yep, it could be indexed with a non-clustered index applied to the indexed view. Also remember that all columns in an indexed view must be deterministic but I don't see anything preventing that in this table.

You could join to reference tables in the indexed view but I wouldn't do that. Save it for a "Display" query that uses the view.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

June 9, 2023 at 3:11 am

Jeff,

Thanks for the reply. Data types are really immaterial to the question. I just put something together to emulate the question which is about the atomic grain; not the actual data types. The quantity of parts - that was a typos, so apologies for the mistake. I realize it's easy to get off track from the core question when the question quality isn't great. Again, really trying to concentrate on the issue of the mixed grain. Also, I'm not looking to normalize the tables, but rather denormalize it into a single source table during the ETL load into a fact_work_order_detail table.

The attributes like task type etc, yes, they will all be surrogate keys to a dimension table. the reason I have so much slop here is because I can't expose the source schema as it's from one of our vendors and proprietary. So I spent a few minutes trying to create a miniature sampling to explain the base problem which is, the mixed grain.

If you don't mind focusing on the fact that the days_open_close column is of mixed grain with the line detail, I'd appreciate your thoughts. Please ignore all the data type issues. I have about a dozen stars developed that are all working fine with properly denormalized dim/fact relationships. Most of the data sets I've worked with roll up from bottom to top. This data source has about a dozen metrics that cannot be derived from the line level detail along with several that can. I'm at the point where I feel like I need to have a fact_work_order and a fact_work_order_detail table; not for joining, but just to maintain the separate levels of granularity.

Hopefully this is making sense to you. Thanks for your time.

-

July 1, 2023 at 3:13 pm

I apologize... This slipped off my radar. Hopefully, you found an answer but I notice that you updated the question a day ago.

To be honest, I'm not sure, from your description, of what you actually want to accomplish. You talk a whole lot about the days_open_close column. The code you have does produce the "sample output" you have listed.

Are you saying that's the output you want and you simply want the days_open_close column to contain the value just one time at the top of the list?

Can you post a sample of what output you'd actually like to see?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 7, 2023 at 4:27 am

-- For repeating values you can remove them from the Fact and add it to a dimension/or create a Junk dimension.

-- You can go ahead with two fact tables, one for header and one for details. If you want the data from both in reports, you need to careful in joining those facts. Kimball also shown a way for allocating certain measures that can be pushed to fact detail from fact header.

=======================================================================

Viewing 5 posts - 1 through 5 (of 5 total)

You must be logged in to reply to this topic. Login to reply