DBCC CHECKDB and Backup Database

-

February 11, 2023 at 1:09 pm

Hello.

Are there any references to running DBCC CHECKDB and/or Backup Database concurrently against different databses in the same SQL Instance?

My searches are turning up discussions against concurrent activities against the same DB.

DBCC CHECKDB is resource intensive (in terms of Memory) but Backup Database seemingly is not - other than IOPS. It does not seem as though DBCC Checkdb can utiilse multiple processors in this version of SQL - at least nowhere near the number installed or in MAXDOP - so CPU is not an issue but potentially memory is.

The situation is that we have a daily process that runs DBCC CHECKDB against all DBs in the instance, followed by Backup Database against those same DBs. Some are large and the DBCC CHECKDBs can take up to 2 hours each as can the backups - and starting at 9PM, with the numbers of DBs the Backups kick in around 5-6AM and don't finish until the afternoon.

I don't know if the Backups are impacting DB performance in the morning but it strikes me that if the SQL agent job was broken down to multiple jobs to either run DBCC CHECKDB concurrently against multiple DBs (which would likely lead to Memory contention) - or perhaps to run the Backup Databases alongside the DBCC CHECKDB for different DBs it may be possible to complete the processing prior to the start of day for most processing.

For example - Job 1 kicks off at 9PM and runs DBCC CHECKDBs - Job 2 kicks off at 12:00AM and runs Database Backup for the database already checked. The first Db to be checked takes 2 hours so allowing a window so as not to overlap or backup a DB whilst it is still being checked.

Appreciate any thoughts on this - and methods to avoid contention (i.e. Job 1 overruns etc).

Thanks

Steve O.

-

February 11, 2023 at 3:59 pm

To start with, please post one of the actual backup commands that gets executed. Obfuscate the name of the database and any machine names the command may contain but don't change anything else. There may be a couple of tricks we can show you to speed backups up quite a bit.

Please post one of the DBCC commands your running in a similar fashion and for the same reason.

We don't know your definition of what a large database is. Please tells the size of the largest database in MB or GB, how long it takes for the DBCC to run, and how long ittakes to do the full backup.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 11, 2023 at 4:56 pm

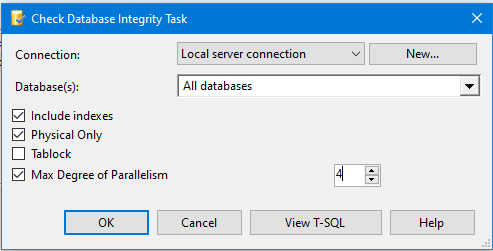

You could consider using DBCC CHECKDB with the "PHYSICAL_ONLY" option, which only performs a physical consistency check and not a logical consistency check. This can significantly reduce the memory requirements of the operation and has a shorter runtime.

-

February 11, 2023 at 5:02 pm

That's one of the reasons why I want to see both the Backup and DBCC commands that are being used. We don't know what the OP is actually using.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 12, 2023 at 12:08 pm

Thanks both.

I have been trying to get more information on this since I don't setup or maintain the schedules. From what I can tell this is actually an SSIS package that runs a maintenance sub-plan? I cannot see what commands are executed but I can see the first part of the execution in the SQL Agent History prior to it being truncated - and the messages on the log. The package says that it runs a DBCC checkdb against every DB in the instance and then runs a Backup for every DB.

Here is what I see in the History :

Executing query "DECLARE @Guid UNIQUEIDENTIFIER EXECUTE msdb..sp...".: 100% complete End Progress Progress: 2023-02-10 21:00:02.76 Source: Check Database Integrity Executing query "USE [master] ".: 50% complete End Progress Progress: 2023-02-10 21:00:25.50 Source: Check Database Integrity Executing query "DBCC CHECKDB(N'master') WITH NO_INFOMSGS ".: 100% complete End Progress Progress: 2023-02-10 21:00:25.51 Source: Check Database Integrity Executing query "USE [model] ".: 50% complete End Progress Progress: 2023-02-10 21:00:45.03 Source: Check Database Integrity Executing query "DBCC CHECKDB(N'model') WITH NO_INFOMSGS ".: 100% complete End Progress Progress: 2023-02-10 21:00:45.11 Source: Check Database Integrity Executing query "USE [msdb] ".: 50% complete End Progress Progress: 2023-02-10 21:01:24.09 Source: Check Database Integrity Executing query "DBCC CHECKDB(N'msdb') WITH NO_INFOMSGS ".: 100% complete End Progress Progress: 2023-02-10 21:01:24.11

This continues for each DB in the instance before the History Log file is truncated.

4 of the DBs are over 700GB in size - the largest of those is 1.6TB in size - the rest are much smaller (some are tiny).

DBCC CheckDB took 2 hours 45 minutes on the largest DB last night - and the backup took 2 hours 10 minutes.

Steve O.

-

February 12, 2023 at 12:43 pm

OK - found a bit more - here is a snip from the History for the Maintenance Plan (Backup step)

BACKUP DATABASE [DBAUtilities] TO DISK = N''O:\Backups\Daily\DBAUtilities\DBAUtilities_backup_2023_02_11_053151_2683033.bak'' WITH NOFORMAT, NOINIT, NAME = N''DBAUtilities_backup_2023_02_11_053151_2683033'', SKIP, REWIND, NOUNLOAD, STATS = 10

GO

declare @backupSetId as int

select @backupSetId = position from msdb..backupset where database_name=N''DBAUtilities'' and backup_set_id=(select max(backup_set_id) from msdb..backupset where database_name=N''DBAUtilities'' )

if @backupSetId is null begin raiserror(N''Verify failed. Backup information for database ''''DBAUtilities'''' not found.'', 16, 1) end

RESTORE VERIFYONLY FROM DISK = N''O:\Backups\Daily\DBAUtilities\DBAUtilities_backup_2023_02_11_053151_2683033.bak'' WITH FILE = @backupSetId, NOUNLOAD, NOREWIND

-

February 12, 2023 at 2:57 pm

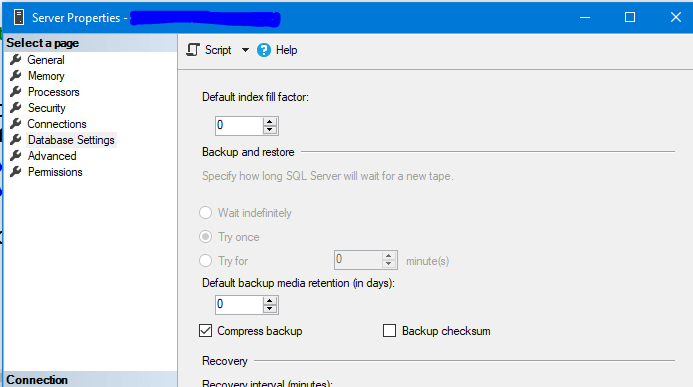

one thing for you to do is to add compression to the backup - it will speed it up and will reduce the size of files as well.

depending on how the backup command is built you may need to manually change it or just change the server level default setting

-

February 12, 2023 at 3:35 pm

Thanks Federico - I recall being told long ago that this was set at Instance level - I just checked using that link and compress backups is ticked. I do notice that Backup Checksum is not set at server level - I am guessing that adding this will incur additional overhead and actually extend the Backup time so may not be desirable but perhaps warrants review as part of this. If I can break down the SSIS plan to separate jobs I think it will do what I want and I can add additional options after that - but any suggestions - or links to reading welcome.

Regards

Steve O.

-

February 12, 2023 at 5:17 pm

it all depends on one's needs - but normally checkdb is done once a week instead of daily.

as for checksum - its advisable to have it assuming your server can hold the performance hit - but if done once a week maybe that is ok even if there is indeed a high hit.

its a question of trying it out.

regarding the backup of your big db's - if not done already it may be worth while having specific backup scripts for those - and play around with number of files to backup to (in our shop most are split onto 4 files, some few cases with 8 files) as well as as 3 other parameters - read about it at https://www.sqlshack.com/ms-sql-server-backup-optimization/

as with everything you will need to try out and see what is best for your server/db's

-

February 12, 2023 at 8:21 pm

Ok.. I've checked the code you provided for both the DBCC CHECKDB and the Backups. There are some serious opportunities for improvement in both...

With regards to the code provided for the DBCC CHECKDB task, I'd do or have someone else to the following...

- You should have two jobs for this...

- First Job

- Second Job

- Both Jobs

- Set the MAXDOP option to "4". You can play with this but the "sweet spot" for me was 4 on a 32 core box.

With regards to the code provided for the BACKUP task, I'd do or have someone else to the following...

- Stop using the BACKUP task in SSMS. They've not included one of the most important settings there is in the GUI for it. Use something like Ola Hallengren's backup solution or something else but stop using the BACKUP task. Whatever solution you end up using, it must all for the following 2 optional settings (and I've included the settings I've been using since 2011 and haven't needed to change them).

- BufferCount = 17

- MaxTransferSize = 1048576

- Ensure that the instance-level settings for databases is to Compress Backups.

- Make sure that your server is on the same sub-net as your backup device, whatever it is.

- If the backup storage is on NAS, then make sure both have good NICs and that the pipe between them is good.

And, yeah... I did all the same things back in 2011. I'm backing up 6.01 Terabytes to a 1.5TB compressed set of compressed backup files (the instance has many databases, the largest of which is just under 3TB and another at 1.6 TB) in 2 hours and 28 minutes to NAS (network attached storage). Back in 2011, my largest database was only 65GB. The settings have been good even though the database sizes have grown a whole lot in the more than last decade.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) - You should have two jobs for this...

-

February 12, 2023 at 9:06 pm

BTW... if you want a super early warning for something that has gone wrong with pages being used in a database, consider setting up a job that checks the suspect_pages table in the MSDB database every 10 or 15 minutes and send an alert if it finds anything in the table. It's not a replacement for DBCC CHECKDB but it can give you and extra leg up and it will "mark the spot" so you can do a PiT restore if you ever need to. It'll also give you the early opportunity to try to fix something before it eats your face off. 😀

https://learn.microsoft.com/en-us/sql/relational-databases/system-tables/suspect-pages-transact-sql

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 11 posts - 1 through 11 (of 11 total)

You must be logged in to reply to this topic. Login to reply