Help with proper indexes

-

February 23, 2022 at 5:25 pm

I am new to sql server and hav been researching indexes but I am still not sure on the best practice for indexes for one of my tables. The table currently has over 12 million rows and has a primary key that is just and auto id field.

I have several fields we usually search by: Date of import, Railcar ID, Car Number, Customer Name, Customer ID. Most of the searches are created by me so i can search by RailcarID and CustomerID for example.(instead of Car Number or Customer Name) However, I know you can have multiple columns in an index and I am not sure if I should use a dual index, like RailcarID, Date of Import or just create an index for each column that is usually searched. That would be Date of Import, RailcarID, and CustomerID.

Any enlightenment is appreciated. (also is Primary Key bad on the auto id field-it is the only way i can make sure each row is unique)

-

February 23, 2022 at 5:36 pm

Auto-id (identity in SQL Server) is not always bad as the clustering key (*), but it's disastrously bad to default to using it as the clustering key. The clustering key is the single biggest factor, by far, in overall performance of the table, and thus should always be very carefully chosen.

Generally you'll want a clus key that is sequential, so off the top it looks like the clus key should start with Date of Import.

But to really determine that, you need to look at several different things.

If you're willing to run the script below for your table, and post the results, we can review the results to determine which indexes to create and why. If you're looking for some super-easy way to determine the best indexes, sorry, that just doesn't exist.

BEFORE running, be sure to change the table name pattern to include ONLY the table(s) you want to review. I've marked that spot in the code. By default it looks at all tables.

(*) Identity is very often great as a Primary Key (PK), as long as you make sure it's NONCLUSTERED.

/*capture changed system settings so that they can be reset at the end of this script*/DECLARE @deadlock_priority smallint

DECLARE @transaction_isolation_level smallint

SELECT @deadlock_priority = deadlock_priority, @transaction_isolation_level = transaction_isolation_level

FROM sys.dm_exec_sessions

WHERE session_id = @@SPID

SET DEADLOCK_PRIORITY -8; /*"tell" SQL that if this task somehow gets into a deadlock, cancel THIS task, NOT any other one*/SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

SET NOCOUNT ON;

DECLARE @filegroup_name nvarchar(128) = '%'

DECLARE @include_system_tables bit

DECLARE @list_filegroup_and_drive_summary bit

DECLARE @list_missing_indexes bit

DECLARE @list_missing_indexes_summary bit

DECLARE @list_total_size bit

DECLARE @max_compression int

DECLARE @order_by smallint /* -2=size DESC; 1=table_name ASC; 2=size ASC; */DECLARE @table_name_pattern sysname = '%' --<<--!!YOUR TABLE NAME GOES HERE!!

DECLARE @table_name_exclude_pattern sysname = '#%'

SET @list_missing_indexes = 1 --NOTE: can take some time, set to 0 if you don't want to wait.

SET @list_missing_indexes_summary = 0 /*not available unless you uncomment the code for it which requires DelimitedSplit8K function*/SET @order_by = -2 /* -2=size DESC; 1=table_name ASC; 2=size ASC; */SET @list_total_size = 1

SET @filegroup_name = '%'

SET @table_name_pattern = '%'

IF @include_system_tables IS NULL

SET @include_system_tables = CASE WHEN DB_NAME() IN ('master', 'msdb', 'tempdb') THEN 1 ELSE 0 END

SET @list_filegroup_and_drive_summary = 0

DECLARE @debug smallint

DECLARE @format_counts smallint --1=',0'; 2/3=with K=1000s,M=1000000s, with 0/1 dec. places;.

DECLARE @include_schema_in_table_names bit

DECLARE @sql_startup_date datetime

DECLARE @total decimal(19, 3)

SELECT @sql_startup_date = create_date

FROM sys.databases WITH (NOLOCK)

WHERE name = 'tempdb'

SET @include_schema_in_table_names = 0

SET @format_counts = 3

SET @debug = 0

IF OBJECT_ID('tempdb.dbo.#index_missing') IS NOT NULL

DROP TABLE dbo.#index_missing

IF OBJECT_ID('tempdb.dbo.#index_operational_stats') IS NOT NULL

DROP TABLE dbo.#index_operational_stats

IF OBJECT_ID('tempdb.dbo.#index_specs') IS NOT NULL

DROP TABLE dbo.#index_specs

IF OBJECT_ID('tempdb.dbo.#index_usage') IS NOT NULL

DROP TABLE dbo.#index_usage

SELECT *

INTO #index_operational_stats

FROM sys.dm_db_index_operational_stats ( DB_ID(), NULL, NULL, NULL )

CREATE TABLE dbo.#index_specs (

object_id int NOT NULL,

index_id int NOT NULL,

min_compression int NULL,

max_compression int NULL,

drive char(1) NULL,

alloc_mb decimal(9, 1) NOT NULL,

alloc_gb AS CAST(alloc_mb / 1024.0 AS decimal(9, 3)),

used_mb decimal(9, 1) NOT NULL,

used_gb AS CAST(used_mb / 1024.0 AS decimal(9, 3)),

rows bigint NULL,

table_mb decimal(9, 1) NULL,

table_gb AS CAST(table_mb / 1024.0 AS decimal(9, 3)),

size_rank int NULL,

approx_max_data_width bigint NULL,

max_days_active int,

UNIQUE CLUSTERED ( object_id, index_id )

) --SELECT * FROM #index_specs

--**********************************************************************************************************************

PRINT 'Started @ ' + CONVERT(varchar(30), GETDATE(), 120)

DECLARE @is_compression_available bit

DECLARE @sql varchar(max)

IF (CAST(SERVERPROPERTY('Edition') AS varchar(40)) NOT LIKE '%Developer%' AND

CAST(SERVERPROPERTY('Edition') AS varchar(40)) NOT LIKE '%Enterprise%')

AND (CAST(SERVERPROPERTY('ProductVersion') AS varchar(30)) NOT LIKE '1[456789]%.%')

SET @is_compression_available = 0

ELSE

SET @is_compression_available = 1

SET @sql = '

INSERT INTO #index_specs ( object_id, index_id,' +

CASE WHEN @is_compression_available = 0 THEN '' ELSE '

min_compression, max_compression,' END + '

alloc_mb, used_mb, rows )

SELECT

base_size.object_id,

base_size.index_id, ' +

CASE WHEN @is_compression_available = 0 THEN '' ELSE '

base_size.min_compression,

base_size.max_compression,' END + '

(base_size.total_pages + ISNULL(internal_size.total_pages, 0)) / 128.0 AS alloc_mb,

(base_size.used_pages + ISNULL(internal_size.used_pages, 0)) / 128.0 AS used_mb,

base_size.row_count AS rows

FROM (

SELECT

dps.object_id,

dps.index_id, ' +

CASE WHEN @is_compression_available = 0 THEN '' ELSE '

MIN(p.data_compression) AS min_compression,

MAX(p.data_compression) AS max_compression,' END + '

SUM(dps.reserved_page_count) AS total_pages,

SUM(dps.used_page_count) AS used_pages,

SUM(CASE WHEN dps.index_id IN (0, 1) THEN dps.row_count ELSE 0 END) AS row_count

FROM sys.dm_db_partition_stats dps ' +

CASE WHEN @is_compression_available = 0 THEN '' ELSE '

INNER JOIN sys.partitions p WITH (NOLOCK) ON

p.partition_id = dps.partition_id ' END + '

--WHERE dps.object_id > 100

WHERE OBJECT_NAME(dps.object_id) LIKE ''' + @table_name_pattern + ''' AND

OBJECT_NAME(dps.object_id) NOT LIKE ''' + @table_name_exclude_pattern + '''

GROUP BY

dps.object_id,

dps.index_id

) AS base_size

LEFT OUTER JOIN (

SELECT

it.parent_id,

SUM(dps.reserved_page_count) AS total_pages,

SUM(dps.used_page_count) AS used_pages

FROM sys.internal_tables it WITH (NOLOCK)

INNER JOIN sys.dm_db_partition_stats dps WITH (NOLOCK) ON

dps.object_id = it.parent_id

WHERE it.internal_type IN ( ''202'', ''204'', ''211'', ''212'', ''213'', ''214'', ''215'', ''216'' )

GROUP BY

it.parent_id

) AS internal_size ON base_size.index_id IN (0, 1) AND internal_size.parent_id = base_size.object_id

'

IF @debug >= 1

PRINT @sql

EXEC(@sql)

--**********************************************************************************************************************

UPDATE [is]

SET approx_max_data_width = index_cols.approx_max_data_width

FROM #index_specs [is]

INNER JOIN (

SELECT index_col_ids.object_id, index_col_ids.index_id,

SUM(CASE WHEN c.max_length = -1 THEN 16 ELSE c.max_length END) AS approx_max_data_width

FROM (

SELECT ic.object_id, ic.index_id, ic.column_id

--,object_name(ic.object_id)

FROM sys.index_columns ic

WHERE

ic.object_id > 100

UNION

SELECT i_nonclus.object_id, i_nonclus.index_id, ic_clus.column_id

--,object_name(i_nonclus.object_id)

FROM sys.indexes i_nonclus

CROSS APPLY (

SELECT ic_clus2.column_id

--,object_name(ic_clus2.object_id),ic_clus2.key_ordinal

FROM sys.index_columns ic_clus2

WHERE

ic_clus2.object_id = i_nonclus.object_id AND

ic_clus2.index_id = 1 AND

ic_clus2.key_ordinal > 0 --technically superfluous, since clus index can't have include'd cols anyway

) AS ic_clus

WHERE

i_nonclus.object_id > 100 AND

i_nonclus.index_id > 1

) AS index_col_ids

INNER JOIN sys.columns c ON c.object_id = index_col_ids.object_id AND c.column_id = index_col_ids.column_id

GROUP BY index_col_ids.object_id, index_col_ids.index_id

) AS index_cols ON index_cols.object_id = [is].object_id AND index_cols.index_id = [is].index_id

UPDATE ispec

SET table_mb = ispec_ranking.table_mb,

size_rank = ispec_ranking.size_rank

FROM #index_specs ispec

INNER JOIN (

SELECT *, ROW_NUMBER() OVER(ORDER BY table_mb DESC, rows DESC, OBJECT_NAME(object_id)) AS size_rank

FROM (

SELECT object_id, SUM(alloc_mb) AS table_mb, MAX(rows) AS rows

FROM #index_specs

GROUP BY object_id

) AS ispec_allocs

) AS ispec_ranking ON

ispec_ranking.object_id = ispec.object_id

--**********************************************************************************************************************

IF @list_missing_indexes = 1

BEGIN

SELECT

IDENTITY(int, 1, 1) AS ident,

DB_NAME(mid.database_id) AS Db_Name,

CONVERT(varchar(10), GETDATE(), 120) AS capture_date,

ispec.size_rank, ispec.table_mb,

CASE WHEN @format_counts = 1 THEN REPLACE(CONVERT(varchar(20), CAST(dps.row_count AS money), 1), '.00', '')

WHEN @format_counts = 2 THEN CAST(CAST(dps.row_count * 1.0 / CASE ca1.row_count_suffix

WHEN 'M' THEN 1000000 WHEN 'K' THEN 1000 ELSE 1 END AS int) AS varchar(20)) + ca1.row_count_suffix

WHEN @format_counts = 3 THEN CAST(CAST(dps.row_count * 1.0 / CASE ca1.row_count_suffix

WHEN 'M' THEN 1000000 WHEN 'K' THEN 1000 ELSE 1 END AS decimal(14, 1)) AS varchar(20)) + ca1.row_count_suffix

ELSE CAST(dps.row_count AS varchar(20)) END AS row_count,

CASE WHEN @include_schema_in_table_names = 1 THEN OBJECT_SCHEMA_NAME(mid.object_id /*, mid.database_id*/) + '.'

ELSE '' END + OBJECT_NAME(mid.object_id /*, mid.database_id*/) AS Table_Name,

mid.equality_columns, mid.inequality_columns,

LEN(mid.included_columns) - LEN(REPLACE(mid.included_columns, ',', '')) + 1 AS incl_col_count,

mid.included_columns,

user_seeks, user_scans, NULL AS max_days_active, /*cj1.max_days_active,*/ unique_compiles,

last_user_seek, last_user_scan,

CAST(avg_total_user_cost AS decimal(9, 3)) AS avg_total_user_cost,

CAST(avg_user_impact AS decimal(9, 3)) AS [avg_user_impact%],

system_seeks, system_scans, last_system_seek, last_system_scan,

CAST(avg_total_system_cost AS decimal(9, 3)) AS avg_total_system_cost,

CAST(avg_system_impact AS decimal(9, 3)) AS [avg_system_impact%],

mid.statement, mid.object_id, mid.index_handle

INTO #index_missing

FROM sys.dm_db_missing_index_details mid WITH (NOLOCK)

INNER JOIN sys.indexes i ON i.object_id = mid.object_id AND i.index_id IN (0, 1) AND i.data_space_id <= 32767

LEFT OUTER JOIN sys.dm_db_missing_index_groups mig WITH (NOLOCK) ON

mig.index_handle = mid.index_handle

LEFT OUTER JOIN sys.dm_db_missing_index_group_stats migs WITH (NOLOCK) ON

migs.group_handle = mig.index_group_handle

LEFT OUTER JOIN sys.dm_db_partition_stats dps WITH (NOLOCK) ON

dps.object_id = mid.object_id AND

dps.index_id IN (0, 1)

OUTER APPLY (

SELECT CASE WHEN dps.row_count >= 1000000 THEN 'M' WHEN dps.row_count >= 1000 THEN 'K' ELSE '' END AS row_count_suffix

) AS ca1

OUTER APPLY (

SELECT ispec.table_mb, ispec.size_rank

FROM dbo.#index_specs ispec

WHERE

ispec.object_id = mid.object_id AND

ispec.index_id IN (0, 1)

) AS ispec

--order by

--DB_NAME, Table_Name, equality_columns

WHERE

1 = 1

AND mid.database_id = DB_ID()

AND OBJECT_NAME(mid.object_id) LIKE @table_name_pattern

ORDER BY

--avg_total_user_cost * (user_seeks + user_scans) DESC,

Db_Name,

CASE WHEN @order_by IN (-2, 2) THEN ispec.size_rank * -SIGN(@order_by) ELSE 0 END,

Table_Name,

equality_columns, inequality_columns,

user_seeks DESC

SELECT *

FROM #index_missing

ORDER BY ident

/*

IF @list_missing_indexes_summary = 1

BEGIN

SELECT

derived.Size_Rank, derived.table_mb,

derived.Table_Name, derived.Equality_Column, derived.Equality#, derived.User_Seeks,

ISNULL((SELECT SUM(user_seeks)

FROM #index_missing im2

OUTER APPLY dbo.DelimitedSplit8K (inequality_columns, ',') ds

WHERE im2.Size_Rank = derived.Size_Rank AND

LTRIM(RTRIM(ds.Item)) = derived.Equality_Column

), 0) AS Inequality_Seeks,

derived.User_Scans, derived.Last_User_Seek, derived.Last_User_Scan,

derived.Max_Days_Active, derived.Avg_Total_User_Cost, derived.Approx_Total_Cost

FROM (

SELECT

Size_Rank, MAX(table_mb) AS table_mb, Table_Name, LTRIM(RTRIM(ds.Item)) AS Equality_Column,

SUM(user_seeks) AS User_Seeks, SUM(user_scans) AS User_Scans,

MAX(last_user_seek) AS Last_User_Seek, MAX(last_user_scan) AS Last_User_Scan,

MIN(max_days_active) AS Max_Days_Active,

MAX(avg_total_user_cost) AS Avg_Total_User_Cost,

(SUM(user_seeks) + SUM(user_scans)) * MAX(avg_total_user_cost) AS Approx_Total_Cost,

MAX(ds.ItemNumber) AS Equality#

FROM #index_missing

CROSS APPLY dbo.DelimitedSplit8K (equality_columns, ',') ds

WHERE equality_columns IS NOT NULL

GROUP BY size_rank, Table_Name, LTRIM(RTRIM(ds.Item))

) AS derived

ORDER BY Size_Rank, Table_Name, Approx_Total_Cost DESC

END --IF

*/END --IF

PRINT 'Index Usage Stats @ ' + CONVERT(varchar(30), GETDATE(), 120)

--**********************************************************************************************************************

-- list index usage stats (seeks, scans, etc.)

SELECT

IDENTITY(int, 1, 1) AS ident,

DB_NAME() AS db_name,

ispec.size_rank, ispec.alloc_mb - ispec.used_mb AS unused_mb,

CASE WHEN i.data_space_id <= 32767 THEN FILEGROUP_NAME(i.data_space_id) ELSE '{CS}' END AS main_fg_name,

CAST(NULL AS int) AS filler,

CASE WHEN @include_schema_in_table_names = 1 THEN OBJECT_SCHEMA_NAME(i.object_id /*, DB_ID()*/) + '.'

ELSE '' END + OBJECT_NAME(i.object_id /*, i.database_id*/) AS Table_Name,

key_cols AS key_cols, nonkey_cols AS nonkey_cols,

LEN(nonkey_cols) - LEN(REPLACE(nonkey_cols, ',', '')) + 1 AS nonkey_count,

CAST(NULL AS varchar(100)) AS filler2,

ius.user_seeks, ius.user_scans, --ius.user_seeks + ius.user_scans AS total_reads,

ius.user_lookups, ius.user_updates,

CASE WHEN @format_counts = 1 THEN REPLACE(CONVERT(varchar(20), CAST(dps.row_count AS money), 1), '.00', '')

WHEN @format_counts = 2 THEN CAST(CAST(dps.row_count * 1.0 / CASE ca1.row_count_suffix

WHEN 'M' THEN 1000000 WHEN 'K' THEN 1000 ELSE 1 END AS int) AS varchar(20)) + ca1.row_count_suffix

WHEN @format_counts = 3 THEN CAST(CAST(dps.row_count * 1.0 / CASE ca1.row_count_suffix

WHEN 'M' THEN 1000000 WHEN 'K' THEN 1000 ELSE 1 END AS decimal(14, 1)) AS varchar(20)) + ca1.row_count_suffix

ELSE CAST(dps.row_count AS varchar(20)) END AS row_count,

ispec.alloc_gb AS index_gb, ispec.table_gb,

SUBSTRING('NY', CAST(i.is_primary_key AS int) + CAST(i.is_unique_constraint AS int) + 1, 1) +

CASE WHEN i.is_unique = CAST(i.is_primary_key AS int) + CAST(i.is_unique_constraint AS int) THEN ''

ELSE '.' + SUBSTRING('NY', CAST(i.is_unique AS int) + 1, 1) END AS [Uniq?],

REPLACE(i.name, oa1.table_name, '~') AS index_name,

i.index_id,

ispec.approx_max_data_width AS [data_width],

CAST(CAST(ispec.used_mb AS float) * 1024.0 * 1024.0 / NULLIF(dps.row_count, 0) AS int) AS cmptd_row_size,

CASE

WHEN ispec.max_compression IS NULL THEN '(Not applicable)'

WHEN ispec.max_compression = 2 THEN 'Page'

WHEN ispec.max_compression = 1 THEN 'Row'

WHEN ispec.max_compression = 0 THEN ''

ELSE '(Unknown)' END AS max_compression,

i.fill_factor,

dios.leaf_delete_count + dios.leaf_insert_count + dios.leaf_update_count as leaf_mod_count,

dios.range_scan_count, dios.singleton_lookup_count,

DATEDIFF(DAY, STATS_DATE ( i.object_id , i.index_id ), GETDATE()) AS stats_days_old,

DATEDIFF(DAY, CASE

WHEN o.create_date > @sql_startup_date AND @sql_startup_date > o.modify_date THEN o.create_date

WHEN o.create_date > @sql_startup_date AND o.modify_date > @sql_startup_date THEN o.modify_date

ELSE @sql_startup_date END, GETDATE()) AS max_days_active,

dios.row_lock_count, dios.row_lock_wait_in_ms,

dios.page_lock_count, dios.page_lock_wait_in_ms,

ius.last_user_seek, ius.last_user_scan,

ius.last_user_lookup, ius.last_user_update,

fk.Reference_Count AS fk_ref_count,

ius2.row_num,

ius.system_seeks, ius.system_scans, ius.system_lookups, ius.system_updates,

ius.last_system_seek, ius.last_system_scan, ius.last_system_lookup, ius.last_system_update,

GETDATE() AS capture_date

INTO #index_usage

FROM sys.indexes i WITH (NOLOCK)

INNER JOIN sys.objects o WITH (NOLOCK) ON

o.object_id = i.object_id

INNER JOIN dbo.#index_specs ispec ON

ispec.object_id = i.object_id AND

ispec.index_id = i.index_id

OUTER APPLY (

SELECT CASE WHEN EXISTS(SELECT 1 FROM #index_specs [is] WHERE [is].object_id = i.object_id AND [is].index_id = 1)

THEN 1 ELSE 0 END AS has_clustered_index

) AS cj2

OUTER APPLY (

SELECT STUFF((

SELECT

', ' + COL_NAME(ic.object_id, ic.column_id)

FROM sys.index_columns ic WITH (NOLOCK)

WHERE

ic.key_ordinal > 0 AND

ic.object_id = i.object_id AND

ic.index_id = i.index_id

ORDER BY

ic.key_ordinal

FOR XML PATH('')

), 1, 2, '')

) AS key_cols (key_cols)

OUTER APPLY (

SELECT STUFF((

SELECT

', ' + COL_NAME(ic.object_id, ic.column_id)

FROM sys.index_columns ic WITH (NOLOCK)

WHERE

ic.key_ordinal = 0 AND

ic.object_id = i.object_id AND

ic.index_id = i.index_id

ORDER BY

COL_NAME(ic.object_id, ic.column_id)

FOR XML PATH('')

), 1, 2, '')

) AS nonkey_cols (nonkey_cols)

LEFT OUTER JOIN sys.dm_db_partition_stats dps WITH (NOLOCK) ON

dps.object_id = i.object_id AND

dps.index_id = i.index_id

LEFT OUTER JOIN sys.dm_db_index_usage_stats ius WITH (NOLOCK) ON

ius.database_id = DB_ID() AND

ius.object_id = i.object_id AND

ius.index_id = i.index_id

LEFT OUTER JOIN (

SELECT

database_id, object_id, MAX(user_scans) AS user_scans,

ROW_NUMBER() OVER (ORDER BY MAX(user_scans) DESC) AS row_num --user_scans|user_seeks+user_scans

FROM sys.dm_db_index_usage_stats WITH (NOLOCK)

WHERE

database_id = DB_ID()

--AND index_id > 0

GROUP BY

database_id, object_id

) AS ius2 ON

ius2.database_id = DB_ID() AND

ius2.object_id = i.object_id

LEFT OUTER JOIN (

SELECT

referenced_object_id, COUNT(*) AS Reference_Count

FROM sys.foreign_keys WITH (NOLOCK)

WHERE

is_disabled = 0

GROUP BY

referenced_object_id

) AS fk ON

fk.referenced_object_id = i.object_id

LEFT OUTER JOIN #index_operational_stats dios ON

dios.object_id = i.object_id AND

dios.index_id = i.index_id

OUTER APPLY (

SELECT OBJECT_NAME(i.object_id/*, DB_ID()*/) AS table_name

) AS oa1

OUTER APPLY (

SELECT CASE WHEN dps.row_count >= 1000000 THEN 'M' WHEN dps.row_count >= 1000 THEN 'K' ELSE '' END AS row_count_suffix

) AS ca1

WHERE

--i.object_id > 100 AND

(i.is_disabled = 0 OR @order_by IN (-1, 1)) AND

i.is_hypothetical = 0 AND

i.data_space_id <= 32767 AND

--i.type IN (0, 1, 2) AND

o.type NOT IN ( 'IF', 'IT', 'TF', 'TT' ) AND

(

o.name LIKE @table_name_pattern AND

o.name NOT LIKE 'dtprop%' AND

o.name NOT LIKE 'filestream[_]' AND

o.name NOT LIKE 'MSpeer%' AND

o.name NOT LIKE 'MSpub%' AND

--o.name NOT LIKE 'tmp[_]%' AND

--o.name NOT LIKE 'queue[_]%' AND

--(DB_NAME() IN ('master', 'msdb') OR o.name NOT LIKE 'sys%')

(@include_system_tables = 1 OR o.name NOT LIKE 'sys%')

--AND o.name NOT LIKE 'tmp%'

) AND

(@filegroup_name IS NULL OR CASE WHEN i.data_space_id <= 32767 THEN FILEGROUP_NAME(i.data_space_id) ELSE '{CS}' END LIKE @filegroup_name) AND

(@max_compression IS NULL OR ispec.max_compression <= @max_compression)

ORDER BY

--cj2.has_clustered_index, ispec.size_rank, --heaps first, by size

db_name,

--i.index_id,

--ius.user_seeks - ius.user_scans,

CASE WHEN @order_by IN (-2, 2) THEN ispec.size_rank * -SIGN(@order_by) ELSE 0 END,

--ius.user_scans DESC,

--ius2.row_num, --user_scans&|user_seeks

table_name,

-- list clustered index first, if any, then other index(es)

CASE WHEN i.index_id IN (0, 1) THEN 1 ELSE 2 END,

key_cols

OPTION (MAXDOP 3, RECOMPILE)

SELECT *

FROM #index_usage

ORDER BY ident

IF @list_total_size > 1

SELECT SUM(index_gb) AS Total_Size_GB

FROM #index_usage

IF @list_filegroup_and_drive_summary = 1

SELECT

LEFT(df.physical_name, 1) AS drive,

FILEGROUP_NAME(au_totals.data_space_id) AS filegroup_name,

au_totals.total_mb AS total_fg_mb,

au_totals.used_mb AS used_fg_mb,

au_totals.total_mb - au_totals.used_mb AS free_fg_mb,

CAST(df.size / 128.0 AS decimal(9, 3)) AS file_size_mb

FROM (

SELECT

au.data_space_id,

CAST(SUM(au.total_pages) / 128.0 AS decimal(9, 3)) AS total_mb,

CAST(SUM(au.used_pages) / 128.0 AS decimal(9, 3)) AS used_mb

FROM sys.allocation_units au

INNER JOIN sys.filegroups fg ON

fg.data_space_id = au.data_space_id

GROUP BY au.data_space_id WITH ROLLUP

) AS au_totals

INNER JOIN sys.database_files df ON

df.data_space_id = au_totals.data_space_id

ORDER BY filegroup_name, drive

PRINT 'Ended @ ' + CONVERT(varchar(30), GETDATE(), 120)

SET DEADLOCK_PRIORITY @deadlock_priority

IF @transaction_isolation_level = 1

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

ELSE

IF @transaction_isolation_level = 2

SET TRANSACTION ISOLATION LEVEL READ COMMITTED

ELSE

IF @transaction_isolation_level = 3

SET TRANSACTION ISOLATION LEVEL REPEATABLE READ

ELSE

IF @transaction_isolation_level = 4

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE

ELSE

IF @transaction_isolation_level = 5

SET TRANSACTION ISOLATION LEVEL SNAPSHOTSQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

February 23, 2022 at 5:50 pm

An identity/sequence is fine for a primary key, especially if it's very expensive to define a unique key (i.e., requires many columns, especially if they're big -- e.g., long strings), or that table is joined to others and that single artificial key can be used significantly more efficiently than a multi-column key, even if relatively small. But that primary key doesn't necessarily have to be clustered if your queries would benefit more from clustering on other column(s).

Queries can only take advantage of an index if the first column in the index is used by the query (e.g. where clause, joins, order by). If you have multiple queries that can use the same combinations of leading columns in an index, they may be able to use the same index. Look at the "predicates" in your queries -- column you filter by in where clauses, columns you join on, and columns you order by.

Look at actual execution plans in SSMS to see what takes the most I/O. SQL Sentry Plan Explorer is a great free tool that makes analysis of execution plans even easier than using SSMS. Are queries that should be able to isolate to a single or few rows scanning whole tables?

Then try indexes based on analysis of the predicates. Then look at the actual execution plans again with the index.

From what you're describing, you may need a few indexes for your varying queries.

Be wary of adding redundant/duplicate indexes, or so many indexes it severely impacts inserts & updates.

I wonder about your assertion that you can't define uniqueness. It happens sometimes, particularly w/ logging data. But otherwise it may be a red flag.

Is there really no combination of other columns that should uniquely identify a row?

If not, do you have duplicate data? How does it affect your queries? Do you use group by or distinct to resolve the duplicates?

-

February 24, 2022 at 1:51 pm

As you can tell, this is a huge topic. There's a lot to it. Honestly, I'd suggest you grab a copy of my book, link in the signature below this post. Since you're just getting going with all that, it really could help.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 24, 2022 at 3:42 pm

Hello, I realize that indexes are a big deal and have read up on them online a bit and in a book I have Wrox-beginning sql server 2012 programming (even though i am on 2017) Grant I will check out your book too. I am always looking for new resources as I am trying to teach myself sql server to help my company and better myself.

I felt I was not able to define uniqueness because this is a daily report of railcars and their location. Each day I trace several thousand cars and get feedback on where they are. Sometimes the car doesnt move and the only difference in the record from the previous day record is the date of import I create. The date in the file often stays the same. Also, one of my users may trace the railcar later in the day to see where it is so it might get more than one trace per day. I could solve this by not adding the users trace to the database or by using a date/time stamp so then I do believe that a timestamp/CarInitials/CarNumber might work to be unique (some of our cars have the same number but different initials). I also do some data transformation on import and add a railcarID. So in reality I could use timestamp/RailcarID and that would work 99.9% of the time i believe. Sometimes we are tracing cars that are being re-stenciled so that would cause the railcarid not to work since it is the same car and railcar id but with different initials and/or number. I link this table in to an access database to create front end reports for the users and Access requires a primary key when you do that and that is why I added the ID field. Currently the speed is ok for most things. I have a non-clusterd index for RailcarID, ImportDate, CarNumber and CustomerID. I have an access db where I display the data for users and when I run the customer report it takes about 3 seconds.

I was not sure if a random id field would be better for the primary key or a Time/CarInitials/CarNumber combo would be better.

Scott, i ran your code and got two lines returned. I just rebuilt the table this morning because I needed to move it to a new database and the identity field didnt transfer so it may be short on stats of usage, which is what it looks like this script is looking at.

identDb_Namecapture_datesize_ranktable_mbrow_countTable_Nameequality_columnsinequality_columnsincl_col_countincluded_columnsuser_seeksuser_scansmax_days_activeunique_compileslast_user_seeklast_user_scanavg_total_user_costavg_user_impact%system_seekssystem_scanslast_system_seeklast_system_scanavg_total_system_costavg_system_impact%statementobject_idindex_handle

1TCIXRail2022-02-2413472.912.5MFinalizedTraceData[DateofImport]NULLNULLNULL30NULL22022-02-24 08:53:28.523NULL280.94699.98000NULLNULL0.0000.000[TCIXRail].[dbo].[FinalizedTraceData]9655784784212identdb_namesize_rankunused_mbmain_fg_namefillerTable_Namekey_colsnonkey_colsnonkey_countfiller2user_seeksuser_scansuser_lookupsuser_updatesrow_countindex_gbtable_gbUniq?index_nameindex_iddata_widthcmptd_row_sizemax_compressionfill_factorleaf_mod_countrange_scan_countsingleton_lookup_countstats_days_oldmax_days_activerow_lock_countrow_lock_wait_in_mspage_lock_countpage_lock_wait_in_mslast_user_seeklast_user_scanlast_user_lookuplast_user_updatefk_ref_countrow_numsystem_seekssystem_scanssystem_lookupssystem_updateslast_system_seeklast_system_scanlast_system_lookuplast_system_updatecapture_date

1TCIXRail1603.7PRIMARYNULLFinalizedTraceDataIDNULLNULLNULL20803049512.5M3.3923.392YPK_~_214240012495678179400111500118952102022-02-24 08:53:29.3132022-02-24 08:53:28.523NULL2022-02-24 08:52:18.370NULL10300NULL2022-02-24 08:52:20.613NULLNULL2022-02-24 08:57:46.823Sorry, not sure if this is the best way to display the results.

-

February 24, 2022 at 3:46 pm

deleted

-

February 24, 2022 at 4:05 pm

railman wrote:I am new to sql server and hav been researching indexes but I am still not sure on the best practice for indexes for one of my tables. The table currently has over 12 million rows and has a primary key that is just and auto id field.

I have several fields we usually search by: Date of import, Railcar ID, Car Number, Customer Name, Customer ID. Most of the searches are created by me so i can search by RailcarID and CustomerID for example.(instead of Car Number or Customer Name) However, I know you can have multiple columns in an index and I am not sure if I should use a dual index, like RailcarID, Date of Import or just create an index for each column that is usually searched. That would be Date of Import, RailcarID, and CustomerID.

Any enlightenment is appreciated. (also is Primary Key bad on the auto id field-it is the only way i can make sure each row is unique)

I think the real key is to ask "What problem are you trying to solve"? Do you currently have performance issues? I've seen a whole lot of people change things based on a "blanket recommendation" that destroyed previous acceptable to good performance. To Grant's good point, there is no panacea. Each table has it's own "personality" due to its design (or lack of) and the queries that play against it. The ONLY way to actually determine which indexes are beneficial is to look at the Actual Execution Plans for the various workloads and let those be your dowsing sticks.

And I can personally attest to the books Grant recommends in his signature line. I read earlier versions of both of his books (and the 2nd one is free through RedGate) and they were the best I'd ever read on the subject.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 24, 2022 at 4:14 pm

There are no real bad speed issues at this time. I am in the process of moving a bunch of data out of Microsoft Access and into SQL Server. Some of the speed issues are related to the jet database engine and I am learning to do pass through queries etc. There are a couple of slow reports but only in the neighborhood of 3-5 seconds. Most of my reports are search by railcarID/Number, CustomerID, Date or a combo of these. The indexes I put on RailcarID, CustomerID, ImportDate, CarNumber seem to help, but may be my imagination.

I was concerned about it slowing down as it grows but I think I will do some more reading, monitoring and analysis of the table before I make any changes. I also do web work and I will treat it like SEO. I will make one change, then monitor it for a while to see the effect before I make another.

My only question now is do you think it would be better to set the primary key to be ImportDate/CarInitial/CarNumber or just leave it on the random ID field.

-

February 24, 2022 at 4:26 pm

Edit: I just read the email of the query results, which were perfectly readable. I've adjusted the comments/code to reflect that.

--N/A after Edit: It's extraordinarily hard to read those results all pushed together like that, but luckily they were short.

Based on those results, while the current clustered index is OK, you could also start the clus index with DateOfImport, which would be my inclination. To make the index unique, include the $IDENTITY as the last column in the index.

Part of how helpful these stats are is how long SQL has been able to accumulate them for these tables/indexes. In my query, I use column "max_days_active" to show that value. Here it is NULL, so I can't tell over how many days the index stats were collected. (I'll need to check my code to see why it returned a NULL there.) Typically you'd like at least 30 days' worth to be sure you have a representative sample of data accesses.

The existing PK is a clus index. In order to create a different clus index, we'll have to change drop the existing PK first. We will re-add it as nonclus.

--it-s very likely PAGE compression is worthwhile for a table this size, but you should verify it by running:

--EXEC sys.sp_estimate_data_compression_settings 'dbo', 'FinalizedTraceData', NULL, NULL, 'PAGE'

ALTER TABLE dbo.FinalizedTraceData DROP CONSTRAINT [PK_FinalizedTraceData_2];

CREATE UNIQUE CLUSTERED INDEX [FinalizedTraceData__CL] ON dbo.FinalizedTraceData ( DateOfImport, $IDENTITY ) WITH ( DATA_COMPRESSION = PAGE, FILLFACTOR = 98, SORT_IN_TEMPDB = ON ) ON [PRIMARY];

ALTER TABLE dbo.FinalizedTraceData ADD CONSTRAINT [PK_FinalizedTraceData_2] PRIMARY KEY NONCLUSTERED ( $IDENTITY ) WITH ( DATA_COMPRESSION = ROW, FILLFACTOR = 98, SORT_IN_TEMPDB = ON ) ON [PRIMARY];- This reply was modified 4 years ago by ScottPletcher.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

February 24, 2022 at 4:54 pm

My specialty is tuning indexes. I do it nearly every day. And I typically don't look at queries to do that. I don't generally need to. Nor would I have the time to. I just need the index stats SQL provides, albeit often augmented by cardinality and other data from the table itself.

I do review the biggest-IO / biggest-CPU queries and other potentially problem queries on very large tables. I also provide "rules" (guidelines) to developers for writing better queries. And undoubtedly proper query writing can help tremendously. But you still always need the stats from SQL itself to tune indexes.

Besides, in our environment, we have a lot of dynamic SQL that changes every day and we allow power users to write their own queries against our data mart data. I have to provide proper indexes for those queries too, and there's no time to review all the queries.

When tuning, first concentrate on getting the best clustered index on every (significant) table. The clus index is by far the most significant performance factor for the table. A rule that says you should just slap an $IDENTITY column on a table and automatically make it the clus index is the most damaging myth in table design.

After determining the best clus indexes, then decide on the nonclus indexes. Be ready to compromise. For example, sometimes you may allow an existing nonclus index to be scanned rather than creating a separate nonclus index.

As to PKs, they are very useful, but always remember that they can be nonclustered (as shown in the code above).

Tuning is definitely an iterative process. Often those perfect covering indexes you create don't last. They add one column to the query and, boom, it's no longer covering and you're back to a huge table scan.

I will say that logical data modeling and proper normalization makes using clus indexes as the primary access method much more effective. But almost no developers I've ever known do a logical design. Too bad, it's a great loss for your tables structures.

Thus, whenever you can, before a physical table(s) gets created, do a logical design an go thru NFs. It will save you much rework and trouble in the long run. And you won't end up with all those ultra-wide, 150+ column tables.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

February 24, 2022 at 5:02 pm

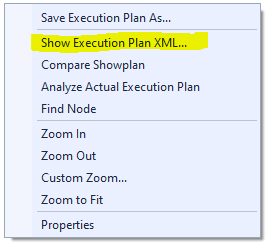

If you have a query that is taking a long time to execute. Just paste it into SSMS and press the estimated execution plan button in the menu:

It will show if there is a index that will improve performance.

If you right click on the execution plan and select "Show Execution Plan XML..." you can search the execution plan XML for more missing indexes.

A primary key does not have to be clustered (though it is the default). You can make a primary key unclustered and make a different index clustered. So there is no need to change the primary key. You just might need to uncluster it and make a different index clustered.

Also you can create the equivalent of a clustered index, without dropping an existing clustered index, by creating a covering index. That is an index that has different columns and "includes" all the other columns that are not in the indexing part of the index on your table. The performance should be just about the same as if it were a clustered index. Though you need to realise that your updates and inserts will be slowed down the more indexes that have to be updated, it will also use more space on your drive.

-

February 24, 2022 at 5:12 pm

Jonathan AC Roberts wrote:If you have a query that is taking a long time to execute. Just paste it into SSMS and press the estimated execution plan button in the menu:

It will show if there is a index that will improve performance.

If you right click on the execution plan and select "Show Execution Plan XML..." you can search the execution plan XML for more missing indexes.

A primary key does not have to be clustered (though it is the default). You can make a primary key unclustered and make a different index clustered. So there is no need to change the primary key. You just might need to uncluster it and make a different index clustered.

You really should not just automatically create any index that SQL "tells" you to. Instead, you should very carefully review its suggestions before acting on them. Also, compare them to current indexes first. For example, you might just need to add one column to an existing index rather than creating a new one.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

February 24, 2022 at 5:14 pm

ScottPletcher wrote:Jonathan AC Roberts wrote:If you have a query that is taking a long time to execute. Just paste it into SSMS and press the estimated execution plan button in the menu:

It will show if there is a index that will improve performance.

If you right click on the execution plan and select "Show Execution Plan XML..." you can search the execution plan XML for more missing indexes.

A primary key does not have to be clustered (though it is the default). You can make a primary key unclustered and make a different index clustered. So there is no need to change the primary key. You just might need to uncluster it and make a different index clustered.

You really should not just automatically create any index that SQL "tells" you to. Instead, you should very carefully review its suggestions before acting on them. Also, compare them to current indexes first. For example, you might just need to add one column to an existing index rather than creating a new one.

Yes, of course, I agree, but it's a very easy way for someone new to SQL Server to find out if there are any improvements that can be made to queries.

-

February 24, 2022 at 6:12 pm

ScottPletcher wrote:When tuning, first concentrate on getting the best clustered index on every (significant) table. The clus index is by far the most significant performance factor for the table. A rule that say you should just slap an $IDENTITY column on a table and automatically make it the clus index is the most damaging myth in table design

Just so folks know, that's frequently a whole lot easier said than done especially on really active OLTP tables. Everyone wants queries to run quickly for their reporting but the don't take into consideration such things as page splits caused by Inserts/Updates and the resulting load on the log file or the fragmentation being caused.

The bottom line is , "It depends"... there is no panacea. Even experienced folks have a difficult time in this area

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 24, 2022 at 7:25 pm

Jeff Moden wrote:ScottPletcher wrote:When tuning, first concentrate on getting the best clustered index on every (significant) table. The clus index is by far the most significant performance factor for the table. A rule that say you should just slap an $IDENTITY column on a table and automatically make it the clus index is the most damaging myth in table design

Just so folks know, that's frequently a whole lot easier said than done especially on really active OLTP tables. Everyone wants queries to run quickly for their reporting but the don't take into consideration such things as page splits caused by Inserts/Updates and the resulting load on the log file or the fragmentation being caused.

The bottom line is , "It depends"... there is no panacea. Even experienced folks have a difficult time in this area

One might have a very difficult time indeed if one clustered all tables by identity by default.

I do take splits, etc., into consideration. As I noted above, an ascending key is broadly preferred if available. And often you have a key that naturally ascends, such as a datetime, similar to the table above.

I deal with many critical OLTP tables. It's not at all reporting requirements I'm concerned with but join requirements. Such joins need to be very efficient, every time (if possible). And in joins is where a plain $IDENTITY clus key fails worst compared to a clus key of, say, ( parent_key, $IDENTITY ).

So, I repeat:

The single most damaging myth in table "design" is to assume that $IDENTITY should always be considered the default clustering key for a table.

As to reporting, our data mart tables are larger, generally, but don't have OLTP activity, so, yeah, the focus there is on reporting.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

Viewing 15 posts - 1 through 15 (of 18 total)

You must be logged in to reply to this topic. Login to reply