Bulk Insert - Only Half the Records

-

I am having a hard time with this. I am trying to load a *.txt file using BULK INSERT, but no matter what I do, it seems to only load about 50% of the records.

Our *.txt file has two rows of "header" data, and one row of "footer / trailer" data. Unfortunately, this is standard for our organization to include them, but I am not sure they are causing the issue.

So I have two tables. One table is simply one column (NVARCHAR(MAX)) where I load the entire file starting at 3 so that I can get the number of records before the trailer file. the other table is the main table(all columns are NVARCHAR(MAX) which I will change once I get the data to fully load, but did this to avoid any truncation or other data issues), where I try to load all the records using "LAST ROW" However, no matter what I do, I only get about 1/2 the records in the main table. I don't get any error message, and it doesn't seem to matter what I put in the "LAST ROW" value either. This file has about 300,000 records, and I have tried to remove 250,000 records, 299,000 records, no matter what I still only get half the records in the main table.

Here is thh code I am trying:

DECLARE @lastRow AS INT

DECLARE @sql AS NVARCHAR(1000)

BULK INSERT [Temp_Systems]

FROM '\\BulkInsert\Systems.txt'

WITH

(

FIRSTROW = 3,

FIELDTERMINATOR = '|',

ROWTERMINATOR = '\n'

);

SET @lastRow = (SELECT COUNT(*) FROM Temp_Systems)

SET @sql = 'BULK INSERT [Systems_NEW]

FROM ''\\BulkInsert\Systems.txt''

WITH

(

firstrow = 3,

FIELDTERMINATOR = ''|'',

ROWTERMINATOR = ''\n'',

LASTROW = ' + CAST(@lastRow AS NVARCHAR) + ');'

EXEC (@sql)When I run that code, here is the output.

(397758 row(s) affected)

(198878 row(s) affected)

As you can see, I get roughly half the records. I change the LASTROW value, I get the same. I edit the file to remove the vast majority, I get the same issue. I can't seem to figure out why this is happening. I would have thought if the issue was the columns, I would get an error, but don't. If I was using a wrong delimitator, why does it still load so many records?

-

November 8, 2021 at 5:19 pm

When you say that you get half the rows, have you verified that it is the first 50% of the rows in the file? Or perhaps it is skipping rows?

Another thing which I tend to do with problems like this is to make the problem more manageable. Try importing a file with 5 rows and see what happens. Much easier and faster to diagnose issues when you reduce the problem.

- This reply was modified 4 years, 3 months ago by Phil Parkin.

-

November 8, 2021 at 5:41 pm

I have not thought about that, I was just going by record counts shown for each table. I will look into this and see if I can find anything along those lines.

-

November 10, 2021 at 6:34 am

Another possibility is if two files were merged. If (IIRC) a "control Z" character is in the file, that signifies the end of the first file and it may not read the second "file" in the file.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 10, 2021 at 11:37 am

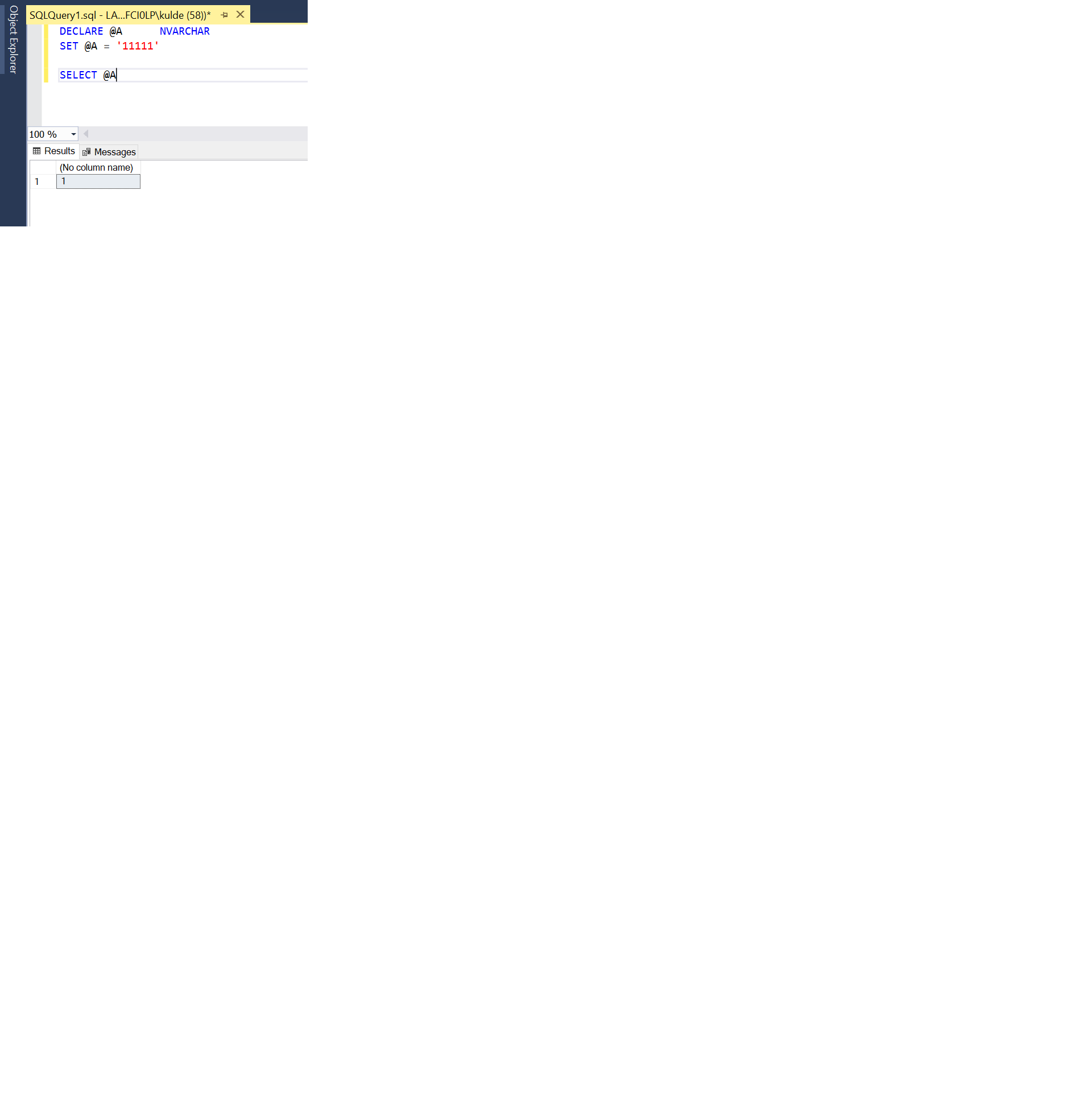

I found an issue in the code you posted. You may try after correcting it.

Assign a length to the NVARCHAR in the following line of your code. If you'll not assign the length then by default only one character is considered. Attached is the screenshot of the test I performed.

LASTROW = ' + CAST(@lastRow AS NVARCHAR) + ');'

-

November 11, 2021 at 2:54 am

Brahmanand Shukla wrote:I found an issue in the code you posted. You may try after correcting it.

Assign a length to the NVARCHAR in the following line of your code. If you'll not assign the length then by default only one character is considered. Attached is the screenshot of the test I performed.

LASTROW = ' + CAST(@lastRow AS NVARCHAR) + ');'

I'm pretty sure that's not the problem here but I tried it... this proves that it's not the problem because it didn't truncate the number assigned in the mod of the code.

DECLARE @lastRow AS INT

DECLARE @sql AS NVARCHAR(1000)

--BULK INSERT [Temp_Systems]

--FROM '\\BulkInsert\Systems.txt'

--WITH

--(

--FIRSTROW = 3,

--FIELDTERMINATOR = '|',

--ROWTERMINATOR = '\n'

--);

SET @lastRow = 397758 --(SELECT COUNT(*) FROM Temp_Systems)

SET @sql = 'BULK INSERT [Systems_NEW]

FROM ''\\BulkInsert\Systems.txt''

WITH

(

firstrow = 3,

FIELDTERMINATOR = ''|'',

ROWTERMINATOR = ''\n'',

LASTROW = ' + CAST(@lastRow AS NVARCHAR) + ');'

PRINT @SQL

;Result from PRINT @sql..

BULK INSERT [Systems_NEW]

FROM '\\BulkInsert\Systems.txt'

WITH

(

firstrow = 3,

FIELDTERMINATOR = '|',

ROWTERMINATOR = '\n',

LASTROW = 397758);--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 11, 2021 at 3:04 am

On of the things that's bugging me about this is that you say that the first import is going to a single column table yet you have a field terminator in the code. What happens if you set that to an empty string?

And sorry about my original comment... I didn't see what you were trying to do and and that both imports are from the same file.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 11, 2021 at 3:05 am

It would also be helpful if you posted the CREATE TABLE statements for the two target tables and attach a text file with the first 10 or so rows from the file WITH THE UNDERSTANDING THAT IT MUST NOT CONTAIN ANY PII or other sensitive data.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

Thank you everyone for all the help / suggestions but I found the issue. For some odd reason, my "main" table didn't have the correct column counts. I counted numerous times, but found another article on this site where people suggested that was the cause and it turned out, that was the reason. I would have thought I would have gotten an error if I had the wrong number of columns, but for some reason, did not and it.

Viewing 9 posts - 1 through 9 (of 9 total)

You must be logged in to reply to this topic. Login to reply