Recently I was thinking about how I could validate that this solution was installed in the way that I wanted it to be so I turned to Pester You can find a great how to get started here which will show you how to get Pester and how to get started with TDD.

This isn’t TDD though this is Environment Validation and this is how I went about creating my test.

First I thought about what I would look for in SSMS when I had installed the maintenance solution and made a list of the things that I would check which looked something like this. This would be the checklist you would create (or have already created) for yourself or a junior following this install. This is how easy you can turn that checklist into a Pester Test and remove the human element and open your install for automated testing

- SQL Server Agent is running – Otherwise the jobs won’t run

- We should have 4 backup jobs with a name of

- DatabaseBackup – SYSTEM_DATABASES – FULL

- DatabaseBackup – USER_DATABASES – FULL

- DatabaseBackup – USER_DATABASES – DIFF

- DatabaseBackup – USER_DATABASES – LOG

- We should have Integrity Check and Index Optimisation Jobs

- We should have the clean up jobs

- All jobs should be scheduled

- All jobs should be enabled

- The jobs should have succeeded

I can certainly say that I have run through that check in my head and also written it down in an installation guide in the past. If I was being more careful I would have checked if there were the correct folders in the folder I was backing up to.

Ola’s script uses a default naming convention so this makes it easy. There should be a SERVERNAME or SERVERNAME$INSTANCENAME folder or if there is an Availability Group a CLUSTERNAME$AGNAME and in each of those a FULL DIFF and LOG folder which I can add to my checklist

So now we have our checklist we just need to turn in into a Pester Environmental Validation script

It would be useful to be able to pass in a number of instances so we will start with a foreach loop and then a Describe Block then split the server name and instance name, get the agent jobs and set the backup folder name

$ServerName = $Server.Split('\')[0]

$InstanceName = $Server.Split('\')[1]

$ServerName = $ServerName.ToUpper()

Describe 'Testing $Server Backup solution'{

BeforeAll {$Jobs = Get-SqlAgentJob -ServerInstance $Server

$srv = New-Object Microsoft.SQLServer.Management.SMO.Server $Server

$dbs = $Srv.Databases.Where{$_.status -eq 'Normal'}.name

if($InstanceName)

{

$DisplayName = 'SQL Server Agent ($InstanceName)'

$Folder = $ServerName + '$' + $InstanceName

}

else

{

$DisplayName = 'SQL Server Agent (MSSQLSERVER)'

$Folder = $ServerName

}

}

if($CheckForBackups -eq $true)

{

$CheckForDBFolders -eq $true

}

$Root = $Share + '\' + $Folder

$Jobs = $Jobs.Where{($_.Name -like 'DatabaseBackup - SYSTEM_DATABASES - FULL*' + $JobSuffix + '*') -or ($_.Name -like 'DatabaseBackup - USER_DATABASES - FULL*' + $JobSuffix + '*') -or ($_.Name -like 'DatabaseBackup - USER_DATABASES - DIFF*' + $JobSuffix + '*') -or ($_.Name -like 'DatabaseBackup - USER_DATABASES - LOG*' + $JobSuffix + '*')}

actual-value | Should Be expected-value

(Get-service -ComputerName $ServerName -DisplayName $DisplayName).Status | Should Be 'Running'

$jobs = Get-SqlAgentJob -ServerInstance $server ($Jobs | Get-Member -MemberType Property).name $Jobs[0] | Select-Object *

$Jobs = $Jobs.Where{($_.Name -eq 'DatabaseIntegrityCheck - SYSTEM_DATABASES') -or ($_.Name -eq 'DatabaseIntegrityCheck - USER_DATABASES') -or ($_.Name -eq 'IndexOptimize - USER_DATABASES')}

foreach($job in $Jobs)

{

$JobName = $Job.Name

It '$JobName Job Exists'{

$Job | Should Not BeNullOrEmpty

}

It '$JobName Job is enabled' {

$job.IsEnabled | Should Be 'True'

}

It '$JobName Job has schedule' {

$Job.HasSchedule | Should Be 'True'

}

if($DontCheckJobOutcome -eq $false)

{

It '$JobName Job succeeded' {

$Job.LastRunOutCome | Should Be 'Succeeded'

}

}

Context '$Share Share For $Server' {

It 'Should have the root folder $Root' {

Test-Path $Root | Should Be $true

}

foreach($db in $dbs.Where{$_ -ne 'tempdb'})

{

if($Srv.VersionMajor -ge 11)

{

If($srv.Databases[$db].AvailabilityGroupName)

{

$AG = $srv.Databases[$db].AvailabilityGroupName

$Cluster = $srv.ClusterName

$OLAAg = $Cluster + '$' + $AG

if($Share.StartsWith('\\') -eq $False)

{

$UNC = $Share.Replace(':','$')

$Root = '\\' + $ServerName + '\' + $UNC + '\' + $OlaAG

}

else

{

$Root = '\\' + $ServerName + '\' + $UNC + '\' + $Folder

}

}

else

{

if($Share.StartsWith('\\') -eq $False)

{

$UNC = $Share.Replace(':','$')

$Root = '\\' + $ServerName + '\' + $UNC + '\' + $Folder

}

else

{

$Root = $Share + '\' + $Folder

}

}

}

$db = $db.Replace(' ','')

$Dbfolder = $Root + "\$db"

$Full = $Dbfolder + '\FULL'

$Diff = $Dbfolder + '\DIFF'

$Log = $Dbfolder + '\LOG'

If($CheckForDBFolders -eq $True)

{

Context "Folder Check for $db on $Server on $Share" {

It "Should have a folder for $db database" {

Test-Path $Dbfolder |Should Be $true

}

If($CheckForDBFolders -eq $True)

{

Context 'Folder Check for $db on $Server on $Share' {

It 'Should have a folder for $db database' {

Test-Path $Dbfolder |Should Be $true

}

if($Db -notin ('master','msdb','model') -and ($Srv.Databases[$db].RecoveryModel -ne 'Simple') -and ( $LSDatabases -notcontains $db))

{

It 'Has a Full Folder' {

[System.IO.Directory]::Exists($Full) | Should Be $True

}

It 'Has a Diff Folder' {

[System.IO.Directory]::Exists($Diff) | Should Be $True

}

It 'Has a Log Folder' {

[System.IO.Directory]::Exists($Log) | Should Be $True

}

} #

elseif(($Srv.Databases[$db].RecoveryModel -eq 'Simple') -and $Db -notin ('master','msdb','model') -or ( $LSDatabases -contains $db) )

{

It 'Has a Full Folder' {

[System.IO.Directory]::Exists($Full) | Should Be $True

}

It 'Has a Diff Folder' {

[System.IO.Directory]::Exists($Diff) | Should Be $True

}

} #

else

{

It 'Has a Full Folder' {

[System.IO.Directory]::Exists($Full) | Should Be $True

}

}#

} # End Check for db folders

}

If($CheckForBackups -eq $true)

{

Context ' File Check For $db on $Server on $Share' {

$Fullcreate = [System.IO.Directory]::GetCreationTime($Full)

$FullWrite = [System.IO.Directory]::GetLastWriteTime($Full)

if($Fullcreate -eq $FullWrite)

{

It 'Has Files in the FULL folder for $db' {

Get-ChildItem $Full\*.bak | Should Not BeNullOrEmpty

}

}

else

{

It 'Has Files in the FULL folder for $db' {

$FullCreate | Should BeLessThan $FullWrite

}

}

It 'Full File Folder was written to within the last 7 days' {

$Fullwrite |Should BeGreaterThan (Get-Date).AddDays(-7)

}

if($Db -notin ('master','msdb','model'))

{

$Diffcreate = [System.IO.Directory]::GetCreationTime($Diff)

$DiffWrite = [System.IO.Directory]::GetLastWriteTime($Diff)

if($Diffcreate -eq $DiffWrite)

{

It 'Has Files in the DIFF folder for $db' {

Get-ChildItem $Diff\*.bak | Should Not BeNullOrEmpty

}

}

else

{

It 'Has Files in the DIFF folder for $db' {

$DiffCreate | Should BeLessThan $DiffWrite

}

}</div><div>It 'Diff File Folder was written to within the last 24 Hours' {

$Diffwrite |Should BeGreaterThan (Get-Date).AddHours(-24)

}

}

if($Db -notin ('master','msdb','model') -and ($Srv.Databases[$db].RecoveryModel -ne 'Simple') -and ( $LSDatabases -notcontains $db))

{

$Logcreate = [System.IO.Directory]::GetCreationTime($Log)

$LogWrite = [System.IO.Directory]::GetLastWriteTime($Log)

if($Logcreate -eq $LogWrite)

{

It 'Has Files in the LOG folder for $db' {

Get-ChildItem $Log\*.trn | Should Not BeNullOrEmpty

}

}

else

{

It 'Has Files in the LOG folder for $db' {

$LogCreate | Should BeLessThan $LogWrite

}

}

It 'Log File Folder was written to within the last 30 minutes' {

$Logwrite |Should BeGreaterThan (Get-Date).AddMinutes(-30)

}

}# Simple Recovery

}

}# Check for backups[CmdletBinding()] ## Pester Test to check OLA Param( $Instance, $CheckForBackups, $CheckForDBFolders, $JobSuffix , $Share , [switch]$NoDatabaseRestoreCheck, [switch]$DontCheckJobOutcome )

$Script = @{

Path = $Path;

Parameters = @{ Instance = Instance;

CheckForBackups = $true;

CheckForDBFolders = $true;

JobSuffix = 'BackupShare1';

Share = '\\Server1\BackupShare1';

NoDatabaseRestoreCheck= $true;

DontCheckJobOutcome = $true}

}

Invoke-Pester -Script $Script

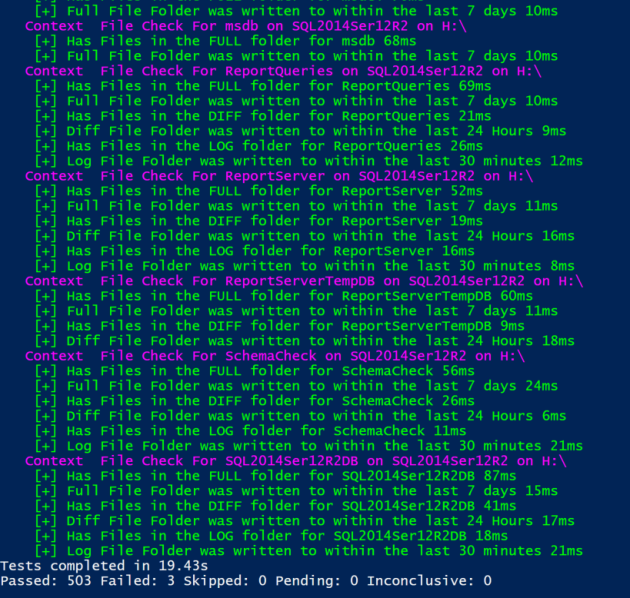

$Servers = 'SQL2008Ser2008','SQL2012Ser08AG1','SQL2012Ser08AG2','SQL2014Ser12R2' Test-OLAInstance -Instance $Servers -Share 'H:\' -CheckForBackups

(The three failures were my Integrity Check jobs holding some test corrupt databases)

(The three failures were my Integrity Check jobs holding some test corrupt databases)