SSIS Package loading CSV failing

-

May 28, 2021 at 2:05 pm

I have a package that needs to do a simple import of a csv file to a SQL Server table and getting a common fixable error of "cannot convert-between unicode and non-unicode" - Change from 'String[DT_STR]' to 'Unicode String[DT_WSTR]' right? and the warning goes away... easy enough. But what to do about csv files that look like this in Notepad++? No erros when I run the package but no data is transferred to SQL Server table:

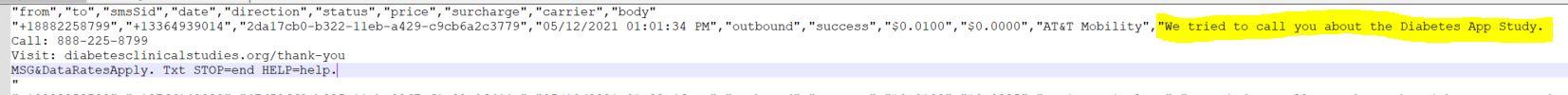

"from","to","smsSid","date","direction","status","price","surcharge","carrier","body"

"+18882258799","+13364939014","2da17cb0-b322-11eb-a429-c9cb6a2c3779","05/12/2021 01:01:34 PM","outbound","success","$0.0100","$0.0000","AT&T Mobility","We tried to call you about the Diabetes App Study.

Call: 888-225-8799

Visit: diabetesclinicalstudies.org/thank-you

MSG&DataRatesApply. Txt STOP=end HELP=help.

"- This topic was modified 4 years, 8 months ago by DaveBriCam.

-

May 28, 2021 at 2:17 pm

Have you set up an error path on your data flow? If I was debugging this, I'd start with that. If records are not flowing to your desired destination, they would flow to the error destination and there should be an error shown for each row. If you just want to see the errors and not write them to a destination, one technique is to route error output to a multicast, then add a viewer to the error path, no need to actually output from the multicast.

Also, look at the record counts on the data flow during execution. If your data flow is complex, this will tell you where things go awry. You can use those counts to decide where you need to examine the error output.

-

May 28, 2021 at 4:00 pm

Are there embedded carriage returns in that file?

Have you set a text qualifier? (")

-

May 28, 2021 at 4:24 pm

A bigger problem is I'm finding it hard to identify where the errors are occuring... the red dot is over the source file when I run the package. The error log tells me very little... at least very little that I understand.

-

May 28, 2021 at 4:39 pm

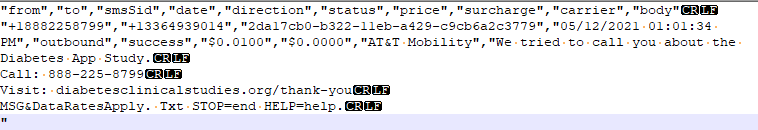

Using Notepad++ you need to show the symbols (View | Show Symbols | View all characters). Once you have done that - you will be able to see the actual characters that are used.

Once you view that - you will see the problem with this file. If the data you posted is the same - then you have embedded CR/LF and you have CR/LF as the record terminator.

Without a way to determine the difference between a record terminator and an embedded value - no import process will be able to handle the file. In SSIS, when you define the record terminator as CR/LF it will also see the embedded CR/LF as a record terminator and you won't be able to process the file.

If possible, have the sender modify their process of creating the file. One way to do that is to convert the CR/LF that are embedded to just a LF. With that - SSIS would then see the CR/LF as the record terminator and leave the embedded LF characters.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

May 28, 2021 at 5:14 pm

I was able to get the package to run but the csv is proving to be useless since it inspite of being 1020 chars in the SQL table it cuts off all but what is highlighted in yellow:

-

May 28, 2021 at 5:23 pm

What about a different datatype in SQL... Blob or Html? Would the text shown go into that?

- This reply was modified 4 years, 8 months ago by DaveBriCam.

-

May 28, 2021 at 5:51 pm

Not sure what changes you made to get the package to run - but it is not handling the additional rows.

In Notepad++ I see your text as this...which shows embedded CRLF characters. SSIS will treat the CRLF as a record terminator - and if you enabled error processing the next rows down to the end quote will be redirected to the error output and ignored.

Like I stated before...go back to the group creating this file and have them fix it...the easiest and quickest fix would be to change the record terminator to a CR or LF only. The other option is to fix the data inside the 'body' so that embedded CRLF characters are converted to either a CR or LF - or to a special sequence that you can convert back after importing the data.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs

Viewing 8 posts - 1 through 8 (of 8 total)

You must be logged in to reply to this topic. Login to reply