This past Saturday I had the pleasure of speaking at SQL Saturday #552 here in beautiful Jacksonville, Florida. My good friend Mitch Pearson and I presented our session, Designing a Data Warehouse from the Ground Up. We had a great crowd and lots of great questions from the audience!

Watch Designing a Data Warehouse from the Ground Up Webinar Recording

With all the talk about designing a data warehouse and best practices, I thought I’d take a few moments to jot down some of my thoughts on things to consider when designing your data warehouse. Below you’ll find the first five of ten data warehouse design best practices that I believe are worth considering. This list isn’t meant to be the ten best “best practices” to follow, and the items are in no particular order. Of course, each design scenario is different, so you may find that some of the best practices listed here aren’t optimal in your specific situation.

1. Use descriptive dimension attributes.

The best dimension attributes are those that are descriptive in nature. Descriptive attributes are easier to understand from the user’s perspective because dimension attributes are used to describe, filter, control, sort, and provide context for the quantitative measures. Descriptive attributes allow users to exploit the value of the metrics more fully.

Take the following set of charts for example. On the left you’ll see a chart created using ID fields and on the right you’ll see the same chart created using descriptive attributes. Even if you understand the ID fields, effort is still required on the part of the user to “decode” the IDs. And for users who do not have the IDs memorized, the charts on the left are useless.

While IDs may use a smaller data type and many users may be able to “decode” the IDs, descriptive attributes will provide a better user experience from a reporting standpoint. This doesn’t mean that ID fields should not be stored in a data warehouse, but relying solely on the IDs for reporting would be a mistake. What we don’t want is users having to memorize scores of IDs or keep notes that define product IDs, for example.
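As a small illustration of the difference, the sketch below totals the same sales two ways: labeled by raw ID and labeled by a descriptive attribute looked up from a dimension. The product IDs, names, and amounts are all made up for the example.

```python
from collections import defaultdict

# Hypothetical product dimension: ID -> descriptive attribute.
dim_product = {
    101: "Mountain Bike",
    102: "Road Bike",
    103: "Helmet",
}

# Hypothetical fact rows: (product_id, sales_amount).
fact_sales = [(101, 1200.0), (102, 950.0), (101, 800.0), (103, 45.0)]


def total_sales_by(label_for):
    """Sum sales amounts, grouped by whatever label the caller chooses."""
    totals = defaultdict(float)
    for product_id, amount in fact_sales:
        totals[label_for(product_id)] += amount
    return dict(totals)


# Grouped by ID: accurate, but the reader has to decode 101, 102, 103.
sales_by_id = total_sales_by(lambda product_id: product_id)

# Grouped by name: the same numbers, readable without a lookup sheet.
sales_by_name = total_sales_by(lambda product_id: dim_product[product_id])

print(sales_by_id)
print(sales_by_name)
```

Both results hold the same totals. Only the second one can go straight onto a chart axis without the user needing a cheat sheet.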

2. Store additive measures in the data warehouse.

The best type of measures to store in the data warehouse are those measures that can be fully aggregated. A measure that can be fully aggregated is a measure that can be summarized by any dimension or all dimensions and still remain meaningful. For instance, a Sales Amount measure can be summarized by Product, Date, Geography, etc. and still provide valuable insight for the customer.
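To make “fully aggregated” concrete, here is a minimal sketch with a handful of made-up fact rows. The column layout and values are assumptions for illustration only. Whichever dimension we group by, the pieces add back up to the same grand total.

```python
from collections import defaultdict

# Hypothetical fact rows: (product, month, region, sales_amount).
fact_sales = [
    ("Bike", "2016-08", "Southeast", 500.0),
    ("Bike", "2016-09", "Northwest", 300.0),
    ("Helmet", "2016-08", "Southeast", 40.0),
    ("Helmet", "2016-09", "Southeast", 60.0),
]


def sum_by(column_index):
    """Sum the sales amount (column 3) grouped by the chosen dimension column."""
    totals = defaultdict(float)
    for row in fact_sales:
        totals[row[column_index]] += row[3]
    return dict(totals)


by_product = sum_by(0)
by_month = sum_by(1)
by_region = sum_by(2)
grand_total = sum(row[3] for row in fact_sales)

print(by_product, by_month, by_region, grand_total)
```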

Semi-additive measures can also be stored in the data warehouse. An example of a semi-additive measure would be an account balance or an inventory quantity. These measures are usually stored in snapshot fact tables. A measure such as account balance is considered semi-additive because the account balance on each day of a month cannot be summed to calculate the month’s account balance. Instead, the account balance on the last day of the month is used to accurately represent the monthly account balance. The logic to calculate the balance on the last day of a time period (month, quarter, year, etc.) can be built into a query, a report, or a semantic model, such as SQL Server Analysis Services or Power BI.
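The “last day of the period” logic described above can be sketched in a few lines. The snapshot rows and the `month_end_balances` helper are hypothetical, and a real implementation would more likely live in a query or semantic model as mentioned.

```python
from datetime import date

# Hypothetical daily snapshot rows for one account: (snapshot_date, balance).
daily_balances = [
    (date(2016, 8, 29), 1000.0),
    (date(2016, 8, 30), 1200.0),
    (date(2016, 8, 31), 900.0),
    (date(2016, 9, 1), 950.0),
    (date(2016, 9, 30), 1100.0),
]


def month_end_balances(rows):
    """Return the balance from the latest snapshot in each (year, month)."""
    latest = {}
    for snapshot_date, balance in rows:
        key = (snapshot_date.year, snapshot_date.month)
        if key not in latest or snapshot_date > latest[key][0]:
            latest[key] = (snapshot_date, balance)
    return {key: balance for key, (_, balance) in latest.items()}


monthly = month_end_balances(daily_balances)

# Summing the daily snapshots gives a number that means nothing to the business.
wrong_august = sum(b for d, b in daily_balances if d.month == 8)

print(monthly)
print(wrong_august)
```

Here August resolves to 900.0 (the balance on the 31st), while the naive sum of the three August snapshots is 3100.0, which is not a balance the account ever had.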

Measures that cannot be fully aggregated, such as ratios or other percentage-type calculations, should be handled in the semantic model or the reporting tool. For example, a measure such as Percentage Profit Margin stored in a table cannot be properly aggregated. A better option is to store the additive measures that the Percentage Profit Margin is based on, such as Revenue, Cost, and Margin. These base measures can then be used to calculate the ratio in a query, semantic model, or reporting tool.
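A quick worked example shows why a stored percentage misbehaves. The two rows below are invented for illustration: averaging the stored percentages gives one answer, while recomputing the ratio from the summed base measures gives the correct one.

```python
# Hypothetical fact rows: (product, revenue, cost).
rows = [
    ("Bike", 1000.0, 900.0),   # 10% margin on a large sale
    ("Helmet", 100.0, 50.0),   # 50% margin on a small sale
]

# What we would get by storing the percentage per row and averaging it later.
stored_percentages = [(revenue - cost) / revenue for _, revenue, cost in rows]
average_of_percentages = sum(stored_percentages) / len(stored_percentages)

# What we get by storing the additive base measures and computing the ratio last.
total_revenue = sum(revenue for _, revenue, _ in rows)
total_cost = sum(cost for _, _, cost in rows)
margin_pct = (total_revenue - total_cost) / total_revenue

print(f"average of stored percentages: {average_of_percentages:.1%}")
print(f"ratio of summed measures:      {margin_pct:.1%}")
```

The average of the stored percentages comes out to 30%, but the business actually made 150 on 1,100 of revenue, or roughly 13.6%.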

3. Use the smallest data types possible.

Always use the smallest data type possible. What this means is that we should never use a string data type when an integer could be used. Using the smallest data type possible will optimize data storage, ETL (extract – transform – load), reporting, and semantic model processing.

For example, in a SQL Server database a column with an integer data type always uses 4 bytes of storage no matter the number stored and a varchar data type will use the length of the value plus two bytes. So for instance, a value of 1000000 will take up 4 bytes of storage when using the Int data type. But the same value stored as a varchar will use 9 bytes of storage! That’s more than double the amount of storage required when using the integer data type! For more information on data types, use this link: https://msdn.microsoft.com/en-us/library/ms187752.aspx?f=255&MSPPError=-2147217396
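The arithmetic above is easy to sketch. The snippet below only models the two sizing rules just described (a fixed 4 bytes for an int, value length plus 2 bytes for a varchar) and assumes one byte per character. The 100 million row count is an arbitrary figure, and row overhead and compression are ignored.

```python
INT_BYTES = 4          # SQL Server int: fixed 4 bytes, as described above
VARCHAR_OVERHEAD = 2   # SQL Server varchar: length of the value plus 2 bytes


def varchar_bytes(value):
    """Storage for a value held as varchar, assuming one byte per character."""
    return len(str(value)) + VARCHAR_OVERHEAD


value = 1000000
print(INT_BYTES, varchar_bytes(value))  # prints: 4 9

# Scale the per-value difference up to a hypothetical 100 million row fact table.
row_count = 100_000_000
extra_bytes = (varchar_bytes(value) - INT_BYTES) * row_count
print(extra_bytes)
```

Five extra bytes per value does not sound like much, but on this hypothetical table it adds up to 500,000,000 bytes for a single column.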

This can also greatly affect the performance of an ETL tool such as SQL Server Integration Services (SSIS). SSIS buffers sets of records in memory for transformation and loading into the destination. The width of the row (its size based on data types) determines how many records can be loaded into memory. If a data set uses very large data types, fewer records can be loaded into memory, causing the package to run longer than necessary. Smaller data types can drastically improve the performance of an SSIS package.
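The relationship between row width and rows per buffer can be sketched as simple division. The 10 MB buffer size and both row widths below are assumptions chosen for illustration, and real SSIS buffer sizing involves additional settings and limits that this ignores.

```python
def rows_per_buffer(buffer_bytes, row_width_bytes):
    """How many whole rows of a given width fit in one buffer."""
    return buffer_bytes // row_width_bytes


BUFFER_BYTES = 10 * 1024 * 1024  # assumed buffer size, for illustration only

wide_row_bytes = 2000    # hypothetical row full of oversized string columns
narrow_row_bytes = 200   # hypothetical row using tighter data types

wide_rows = rows_per_buffer(BUFFER_BYTES, wide_row_bytes)
narrow_rows = rows_per_buffer(BUFFER_BYTES, narrow_row_bytes)

print(wide_rows, narrow_rows)
```

With these numbers the narrow row fits about ten times as many records into the same buffer, which means far fewer buffers to move the same data set.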

Data types also greatly affect the performance of SQL Server Analysis Services (SSAS) cubes. String data types are stored in a special separate file in SSAS which means that query performance and cube processing are negatively affected by using too many string data type columns when not necessary. For more information on SSAS dimension design best practices, use this link: https://sqldusty.com/2015/07/17/3-ssas-dimension-design-best-practices-to-live-by/

4. Use surrogate keys.

Surrogate keys are database keys used to relate the dimension tables to the fact tables. Surrogate keys (SKs) have no intrinsic meaning and no meaning to the business. SKs are usually assigned at the time a record is loaded into the dimension table and are maintained through the ETL process. An SK is typically the primary key on a given dimension table and is different from the business key. Take this table in the Adventure Works DW database for example:

The SalesTerritoryKey column is the surrogate key on the table and the SalesTerritoryAlternateKey column is the business key. The business key is used to relate the dimension records to the source records and the surrogate key is used as the primary key on the dimension table. This also means in the fact table, no business keys are stored. The fact table is usually used to store only the surrogate keys of the dimensions to which it is related and any measures.
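Here is a rough sketch of how the two keys work together during a fact load. The column names SalesTerritoryKey and SalesTerritoryAlternateKey come from the table above, but the key values, the `TerritoryID` source column, and the rows themselves are made up for illustration.

```python
# Hypothetical dimension rows (values invented for this example).
dim_sales_territory = [
    {"SalesTerritoryKey": 1, "SalesTerritoryAlternateKey": 10, "Region": "Northwest"},
    {"SalesTerritoryKey": 2, "SalesTerritoryAlternateKey": 20, "Region": "Southeast"},
]

# Hypothetical source rows: the source system only knows the business key.
source_sales = [
    {"TerritoryID": 20, "SalesAmount": 150.0},
    {"TerritoryID": 10, "SalesAmount": 75.0},
]

# Build a business key -> surrogate key lookup from the dimension.
surrogate_key_for = {
    row["SalesTerritoryAlternateKey"]: row["SalesTerritoryKey"]
    for row in dim_sales_territory
}

# The fact rows keep only the surrogate key and the measure.
fact_sales = [
    {
        "SalesTerritoryKey": surrogate_key_for[src["TerritoryID"]],
        "SalesAmount": src["SalesAmount"],
    }
    for src in source_sales
]

print(fact_sales)
```

The business key is used once, at load time, to find the matching dimension record. After that, the fact table carries only the surrogate key.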

There are several advantages to using surrogate keys. Surrogate keys allow us to track the history of dimension records. For example, imagine we have a customer dimension and we wish to track the history of where our customers live. If the primary key on the dimension table is the Customer ID, we can only have one record per customer. But if we create a Customer surrogate key, we can insert multiple records per customer allowing us to easily view the history of each customer.
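The customer history scenario might look something like the sketch below. The `record_customer` helper, the `IsCurrent` flag, and the customer data are all hypothetical. This is one simple way to version dimension rows, not the only one.

```python
from itertools import count

next_customer_key = count(1)  # simple surrogate key generator
dim_customer = []             # in-memory stand-in for the dimension table


def record_customer(customer_id, city):
    """Add a new version of the customer when the city changes; return its surrogate key."""
    current = next(
        (row for row in dim_customer
         if row["CustomerID"] == customer_id and row["IsCurrent"]),
        None,
    )
    if current is not None:
        if current["City"] == city:
            return current["CustomerKey"]  # nothing changed, reuse the current row
        current["IsCurrent"] = False       # retire the old version but keep it
    new_row = {
        "CustomerKey": next(next_customer_key),
        "CustomerID": customer_id,
        "City": city,
        "IsCurrent": True,
    }
    dim_customer.append(new_row)
    return new_row["CustomerKey"]


first_key = record_customer("C-100", "Jacksonville")
same_key = record_customer("C-100", "Jacksonville")
moved_key = record_customer("C-100", "Orlando")

for row in dim_customer:
    print(row)
```

Customer C-100 now has two rows with different surrogate keys, so facts loaded before the move still point at the Jacksonville version. With Customer ID as the primary key, the second row could not exist.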

Surrogate key fields can also provide superior performance compared to using a business key, which could use a string data type, for example. When we create the dimension table, we should use an integer data type for the surrogate key. This will provide more efficient storage of the data and better performance for queries that join on the surrogate keys.

5. Use a star schema design when possible.

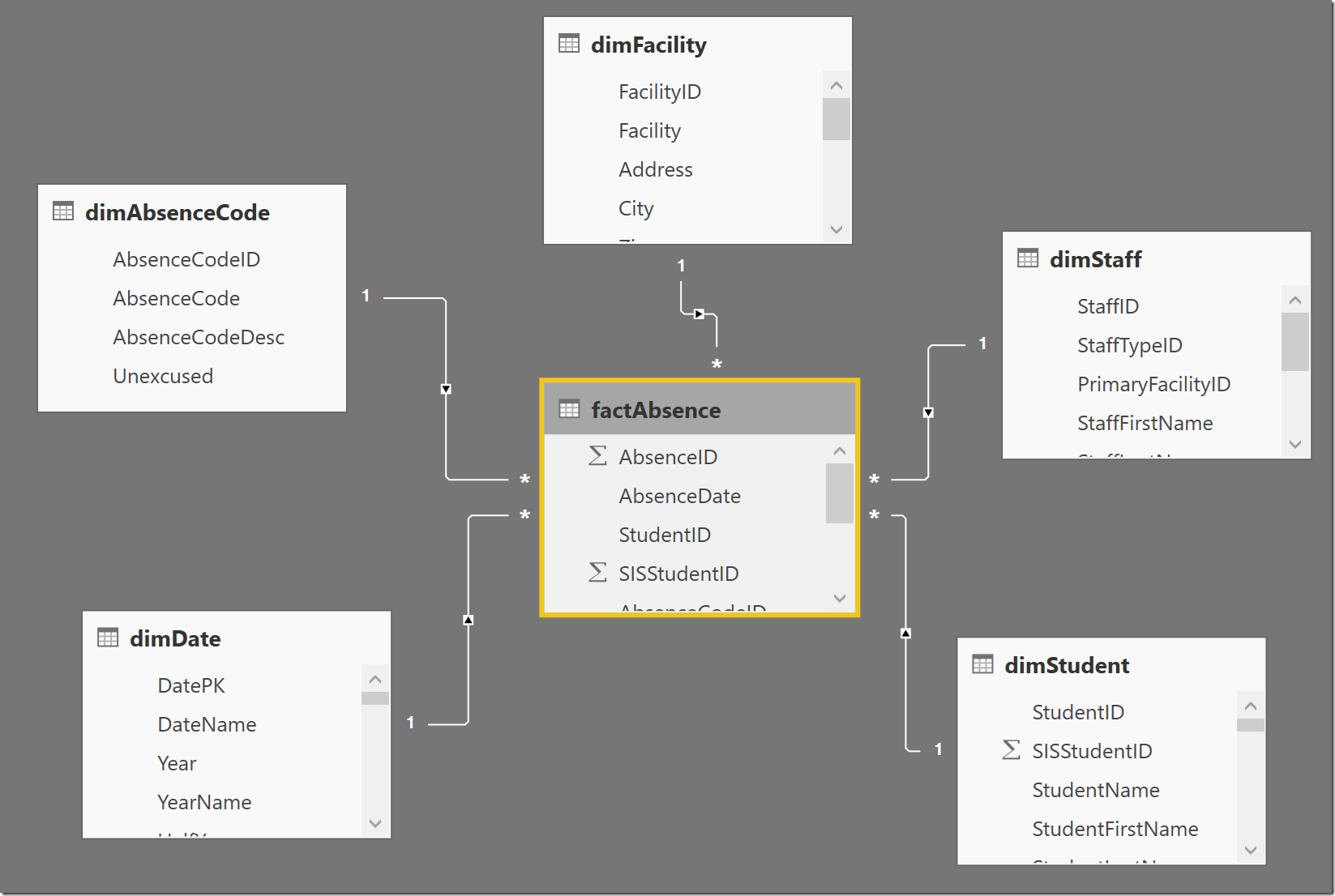

A star schema refers to the design of the data warehouse. The design is called a “star” because of the shape the diagram often makes, as seen in the screenshot below.

Using a star schema shaped design provides a few benefits compared to other more normalized database designs. First, a star schema design is very easy to understand. Normalized data models are often very confusing but a denormalized design such as a star schema is very simple and requires very few joins to produce a meaningful query.

Second, because of the simplicity of the model and the small number of joins required, a star schema often facilitates better performing queries than a normalized model. The goal of a data warehouse is to provide large volumes of data to a user for analytical reporting, and a simple, optimized star schema helps us achieve this goal.

Also, a star schema design works very well with SQL Server Analysis Services. SSAS works best with a star schema data warehouse because of the simplicity of the relationships between the objects and the optimization for high performance read operations. A snowflake design may sometimes be necessary, but this can cause challenges for SSAS, which you can read more about here.
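To show how few joins a star schema query needs, here is a tiny sketch using Python’s built-in sqlite3 module rather than SQL Server, purely so it can run anywhere. The table names, columns, and rows are invented for the example. The query joins the fact table to each dimension it needs exactly once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# A minimal, hypothetical star: one fact table and two dimension tables.
conn.executescript("""
CREATE TABLE DimProduct (ProductKey INTEGER PRIMARY KEY, ProductName TEXT);
CREATE TABLE DimDate (DateKey INTEGER PRIMARY KEY, CalendarYear INTEGER);
CREATE TABLE FactSales (ProductKey INTEGER, DateKey INTEGER, SalesAmount REAL);

INSERT INTO DimProduct VALUES (1, 'Bike'), (2, 'Helmet');
INSERT INTO DimDate VALUES (20150101, 2015), (20160101, 2016);
INSERT INTO FactSales VALUES
    (1, 20150101, 500.0),
    (1, 20160101, 700.0),
    (2, 20160101, 40.0),
    (2, 20160101, 60.0);
""")

# One join per dimension, straight from the fact table.
rows = conn.execute("""
SELECT p.ProductName, d.CalendarYear, SUM(f.SalesAmount) AS SalesAmount
FROM FactSales AS f
JOIN DimProduct AS p ON p.ProductKey = f.ProductKey
JOIN DimDate AS d ON d.DateKey = f.DateKey
GROUP BY p.ProductName, d.CalendarYear
ORDER BY p.ProductName, d.CalendarYear
""").fetchall()

for row in rows:
    print(row)

conn.close()
```

In a more normalized model, getting from a sale to a product name or a calendar year could easily take several hops through intermediate tables. Here every descriptive attribute is one join away from the fact.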

Resources

Here are some additional resources that I think you’ll find helpful.

If you’re into data warehouse design, are part of a data warehouse design team, or will be undertaking a data warehouse project in the future, you need these two books without a doubt:

- The Data Warehouse Toolkit by Ralph Kimball and Margy Ross

- Star Schema: The Complete Reference by Christopher Adamson

Watch the recording of a webinar I did on designing a data warehouse.

Feedback

I hope you found this useful. What do you think about these tips? What should I have included in the list? Leave me a comment down below and let me know.

Also, stay tuned for the follow-up to this blog post for the remaining five data warehouse design tips. Thanks for reading!