Slow database performance

-

December 31, 2020 at 7:30 am

Hi All,

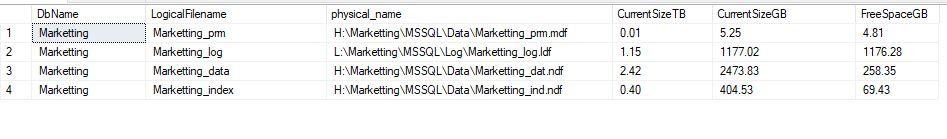

We are seeing serious I/O contentions issues on one of our database called "MarketingDB".

Looking for some suggestions on how we can improve I/O demands for this database. Enabled Instant file initialization for data files to get some performance gain. All data files are in one drive and log file is in a separate drive. Excluded Antivirus scans on all SQL Folders. All drives are formatted with 64k blocksize.

We tried archiving some data but reverted back. Since database is big, the deletes are taking a lot of time and ending up filling up the txn log file and we have a lot of LOB data types inside the tables.We tried with batch deletes as well nothing but major improvements.

The database files are not equi sized. Can anyone share thoughts on how to go about equi-sizing all the data files . We have a dedicated files data and index file.

The "Marketing_data" data file is becoming a HOT SPOT very frequently. What is the approach to distribute the data within the file into separate filegroups. What approach should be taken to distribute the tables?

Do we need to collect table sizes and distribute them as all big tables into one data file and all small tables into separate data file in a separate drive? what are the best practices ? partitioning ? Please share your thoughts.

Autogrowth settings

====================

data files autogrowth 100MB , Unrestricted growth

log file autogrow by 1GB, unrestricted.

Best Regards,

Sam

-

January 1, 2021 at 8:10 am

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

January 2, 2021 at 3:23 pm

I could be wrong but, from your description, it seems to me that you've not identified what the I/O contention is being caused by, yet. I/O contention is usually a symptom of something else. In every case I've seen so far, the contention has always been caused by the code being played against the database.

Don't get me wrong... all the steps that you've taken so far are good steps but you need to find out what is actually causing the I/O contention and it's usually code sometimes exasperated by design problems in the database. I recommend starting off by looking at the top 10 most read/write (emphasis on reads) intensive queries. Notice that I did NOT say the top 10 longest running queries. You could (probably do) have a relatively small but inefficient set of queries that run thousands of times per hour that are the cause of your I/O contention.

I agree that having decent hardware and having it setup correctly is important but, once that's done, performance (CPU, I/O, etc) will be almost entirely dependent on the code. I've also found that if you fix the top 10 items in the area of reads, it's very common for all of you I/O problems (including contention) to simply vanish. If you get real lucky, that might occur somewhere in the top 5.

Bottom line is, you need to find and fix your top 10 worst queries.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

January 4, 2021 at 5:12 pm

I agree with Jeff here - find the problem queries and you will find the performance problems. fix the problem queries and you will likely fix the performance problems.

The exception to that is if the problem queries are ad-hoc queries sent by an application you don't have the source code to. Then things can get complicated. We had a system like that where it would take 30+ minutes for the 3rd party application to pull any data from the database. Watching it in profiler (SQL 2008 R2), it was doing a SELECT * on a bunch of tables and joining some of those and just grabbing a whole buttload of data. then once it had the data, it would filter it down on the application side. This database was 2 TB in size, and the application pulled most of that data to the client before filtering it. Not the best tool for the job, but it was the tool we had. The tool also had no way to "cancel" a query once it had been issued, so if an end user asked for data then decided the 30 minute wait was too long, they would force-close the app and the database would happily churn that data and send it out to nobody once it finished.

Our solution was to take a full backup of the system, isolate out the "important" data. Next, we identified the "large" important tables and from those, we tossed that important data into backup tables, drop the existing tables and rename the backup tables. All of this done during a downtime window with the database in simple recovery mode (to reduce load on the log file). The unimportant tables were truncated, then we flipped it back into full recovery and did a full backup, then sent out a notice that the maintenance had been completed. Then in the next maintenance window, we did a database shrink as we went from 2 TB down to roughly 200 GB in the end and didn't want to waste disk.

This approach MAY not work for your scenario, but for us it worked great. When you have a small set of tables to work with and inside them, you only have a small set of "important" data (we saved 2 years of data in the database out of the 20-ish that was in there).

So, the problem was definitely what Jeff had said - we had bad queries (there is no good reason to pull that much data to client side and let the client to do processing), but we had no way to fix them (queries were hard-coded into the client side application). So our fix was to reduce the data the client would pull. As the data to keep was a MUCH smaller set than the data to remove (and recover via database backup if needed), our approach was a "save the important, drop the unimportant" approach rather than a "delete the unimportant". Downside to our approach is indexes needed to be re-created, permissions needed to be set up again and we needed 2 downtime windows (one to remove the data, one to shrink the database), and we had to restore some data from the backup as some data was mis-represented as "unimportant" when it was "important". But removing 90% of the data is a pretty good accomplishment and the downtime was just a few hours. The shrink was not critical to the project, but why have a 2 TB database with 90% of that space empty if you don't need it to be? I'd much rather shrink that bad boy down to 500 GB and know it'll be good for a few years and use the disk for something else.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

January 5, 2021 at 9:35 am

I completely agree with you Jeff. Thanks for those suggestions. We have identified the top I/O queries (using various dmvs based on query_hash , dm_io_virtual_file_stats) and provided to the DEV Team and their Architect but they don't any action. We have a DPA setup they will just look at the memory indicator like page life expectancy(RED) and waitypes like PAGEIOLATCH_xx, IO_COMPLETION , AYSNC_IO_COMPLETION and send us an email and ask us to look into it. We can identify the problems but we will not able to tweak or re-write their queries as we dont know the business logic and even if try to change the code and help them, later again they will come to us that the code doesn't work anymore because again made some modifications to the code for subsequent code releases. It is obvious that once the code is changed , the plan change and everything else changes.

We try to provide the recommendations like indexes, high cost operators , unnecessary sorts, excessive usage of temp db, no proper filters, SELECT * , minimize the usage LOBS datatypes, we even proposed data archiving mechanism and so on so forth. Despite all this, we take the blame. They say, we are busy with so many projects going on and we don't have that much time to meet their project deadlines and push all that junk code on prod. They say they have tested the code base in QA and UAT with a smaller dataset and once code is put in prod, boom...All sorts of slowness, blocking, I/O issues , high cpu. Because this workload is not the same on UAT and prod. Prod has many concurrent users and connections coming in.

One favor I would like to ask, Could you please let me know what are the perfmon counters we need to take into account so that we come up with a concrete fact based report to management to say that server is in lot of load due to so and so reasons, for example, we the db size , user connections , latencies etc... What processes we need to automate to get such kind of reports and we have a valid facts when getting into such uncomfortable conversations on calls.

I am an accidental DBA so trying to get a better approach of tacking these kind of situations in a corporate world. My knowledge is limited and trying to learn things which I might be missing which needs immediate attention.

Thank you.

Sam

-

January 5, 2021 at 10:10 am

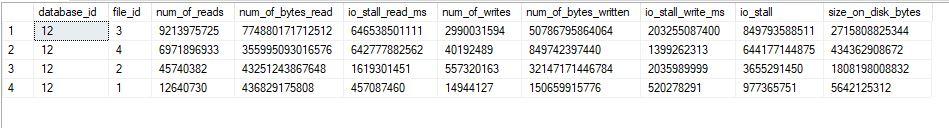

I pulled the I/O information for this database.

SELECT [database_id],

[file_id],

[num_of_reads],

[num_of_bytes_read],

[io_stall_read_ms],

[num_of_writes],

[num_of_bytes_written],

[io_stall_write_ms],

[io_stall],

[size_on_disk_bytes]

FROM sys.[dm_io_virtual_file_stats](NULL, NULL)

where [database_id] = 12

ORDER BY [io_stall] DESC;

GO

Attachments:

You must be logged in to view attached files. -

January 5, 2021 at 10:54 am

I assume you are running that query and outputting onto a table so you can compare changes between runs - those are cumulative values since last instance startup - having this running in intervals (30 m, 1 hour) will give you an idea of evolution of counters - some could be high because one or two particular processes are heavy and that would not be an indication of a problem.

-

January 6, 2021 at 2:28 am

vsamantha35 wrote:...but they don't any action.

Of course not... they're too busy trying to meet a usually impossible schedule...

vsamantha35 wrote:They say, we are busy with so many projects going on and we don't have that much time to meet their project deadlines and push all that junk code on prod.

... but it looks like I'm preaching to the choir there. 😀

vsamantha35 wrote:They say they have tested the code base in QA and UAT with a smaller dataset and once code is put in prod, boom...All sorts of slowness, blocking, I/O issues , high cpu. Because this workload is not the same on UAT and prod. Prod has many concurrent users and connections coming in.

That's kinda your fault. You need to figure out a way to provide your Developers with a more robust development environment and then sell management on the idea of such an environment. That means a cost v.s. ROI proposal and all that goes with it.

You could also start some in-house training to teach them methods that will scale without them having to test for scale.

vsamantha35 wrote:One favor I would like to ask, Could you please let me know what are the perfmon counters we need to take into account so that we come up with a concrete fact based report to management to say that server is in lot of load due to so and so reasons, for example, we the db size , user connections , latencies etc... What processes we need to automate to get such kind of reports and we have a valid facts when getting into such uncomfortable conversations on calls.

"It Depends". How many servers are we talking about here?

vsamantha35 wrote:I am an accidental DBA so trying to get a better approach of tacking these kind of situations in a corporate world. My knowledge is limited and trying to learn things which I might be missing which needs immediate attention.

Been there, done that, and still doing it to this day. Even after working with SQL Server for more than 2 decades, there's still so much more to know. A simple example of that is I don't use AG. The infrastructure team set us up in a simple clustered environment (which seems to work fine and avoids a huge number of headaches) and they take care of all the DR except, of course, for backups, which I take care of. It's fun because it provides a lifetime of learning especially since they keep changing stuff. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

January 6, 2021 at 7:48 am

Hi Jeff,

How many servers are we talking about here?

2 servers which are specific to one application. Its a 2 node AG cluster.

Even though you mentioned you are an accidental DBA, but the kind of in-depth detailed answers you provide in this forum doesn't seem you are simple dba. There is a lot to learn from each and everyone of you in this forum.

We have provide basic training in the past on tuning best practices and documenting changes within modules (sps, funcs etc..). like SELECT *, avoiding too many indexes, excessive parallelism hints, usage of hints, proper filters, avoiding cross joins, schema suffix before object names etc. the problem in their team is every now and then the team gets changed. Hardly I see 1-2 old faces , remaining dev team they keep moving out and new faces coming in. We provided some videos training as well.

That's kinda your fault. You need to figure out a way to provide your Developers with a more robust development environment and then sell management on the idea of such an environment. That means a cost v.s. ROI proposal and all that goes with it.

I never got engaged in such conversations. I am 3 years old in SQL Server. Can you please elaborate on how such proposals are made and what points generally goes though such meetings. Any such good free tools?

Regards,

Sam

Viewing 9 posts - 1 through 9 (of 9 total)

You must be logged in to reply to this topic. Login to reply