SQL performance on couple queries are better on older compatibility

-

Hello,

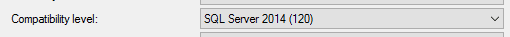

So i have a SQL server 2014, SP3, with 256 gigs of memory, 32 cores, windows 2012 R2 i faced this issue the other day, when the database was on Compatibility level 2014 (120), the query was performing poor, like it would never return. HOWEVER, when i switched it from from Compatibility level 2014 to 2012 (110), or even 2008, the query performs in seconds, what is even weird is, yes the query is a temp table... so in short the query is like this:

select statement with some where clause to filter out 1 million records down to maybe 400k, with a date range and users with certain criteria like active users and certain codes etc., don't want to show the entire code for security purposes, but that gets inserted into a temp table.

THEN, it gets filtered again (I didn't write the query), to filter more out and aggregates it based on users, and gets inserted into another temp table, which then that temp table gets joined onto actual tables, to be displayed.

So the bottle kneck i saw when running was when it was inserting onto the 2nd temp table, which was weird, and checked the tempdb, dbcc checkdb, everything returned fine, I made sure traceflag 1117 and 1118 was enabled. but when i change the Compatibility level from 2014 to 2012 or to 2008, then it works... so sorry for the long novel, but wanted to give some background:

QUESTION: why do some queries work on older Compatibility level than the new? i thought microsoft made the Cardinality Estimator better in the new 2012+ versions? or maybe an update fixes this for SQL server 2014 Sp3?

thanks in advance

-

We went through the same brew-ha-ha when we shifted from 2012 to 2016. It was the cardinality estimator. When we started the trace flag to use the legacy CE server-wide, things went back to normal. We didn't change the compatibility level.

And, just like you, the new CE didn't affect most queries but we didn't (and apparently still don't) have the time to go figure out the gazintas to fix the code so that it runs with the new CE.

Why did this happen? I can summarize... "Change is inevitable... change for the better is not". 😀 I cringe at every CU and version change. We've had to roll back quite a few CUs because it broke older code and those we had to fix. It's sometimes really difficult and pretty expensive because of 3rd party software and they apparently didn't write such things into the paper work.

Another example is that I installed 2017 RTM on my machine because we were thinking about upgrading to that rev. Things like a Tally-table CTE suddenly started running 40 times slower than a While loop because the optimizer decided that it was going to ignore the TOP in the code and would generate all 4 billion rows behind the scenes in an unpredictable manner. They finally came out with a CU that would fix that (and I had written a work-around) but it was too late... they decided against upgrading to 2017. Hopefully, we'll have better luck upgrading to 2019 because it's been out long enough with enough CUs under its belt to not break the whole bloody world. Still, we'll do a side-by-each build and run things in parallel for a while to make sure.

To be honest, I've not gone through a version change yet that wasn't a huge PITA because the new version broke something with the exception of when we went from 2005 to 2012. I'm convinced that was because I saw a whole lot of bad press about 2012 and waited for SP3 to come out and then still waited for a couple of CUs, as well.

Asking "why" MS does is futile. They just do. That doesn't make things easier to swallow about it... I've just learned (through exquisite pain) to be patient and wait.

BTW... thanks to all press about TF 1117 being a good thing for TempDB, MS made it non-optional in 2016, which killed us because of the problem with SET IDENTITY INSERT ON... it causes the entire CI to be sorted in TempDB even when Minimal Logging is active and when you're working on a 250GB Clustered index with 8 files in TempDB, things go real nuts in a hurry. Yeah... I built a work around for that but I'm tired of MS trying to help only to hurt.

Ah... sorry for the rant but you hit a hotspot for me.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 20, 2020 at 11:17 pm

I have exactly the same problem. Performance is degrading on compatibility levels from 2014 to 2017 but it gets better on 2019. Jeff has provided a good explanation why that is happening.

-

December 21, 2020 at 8:00 am

QUESTION: why do some queries work on older Compatibility level than the new? i thought microsoft made the Cardinality Estimator better in the new 2012+ versions? or maybe an update fixes this for SQL server 2014 Sp3?

The key word here is 'better'. What actually happened was that they changed the CE, such that it made many, but not all, queries run better. For some queries, the opposite happened.

There are plenty of articles out there showing how to track down those queries and set trace flags to force them to use the legacy CI, allowing you to take advantage of the improvements in features and performance without degrading your system.

-

December 22, 2020 at 6:50 pm

We hit a problem when our 2014 SQL instance went from Standard to Enterprise to enable more Memory,

Everything was slow - but I had been pushing for DBLEVEL changes forever for performance reasons and pushed through the change which made the problem go away - for all but 1 SP that just kept timing out.

Like people have said, forcing that 1 SP to use the old CE resolved the issue - but means having to be aware of it.

So for whatever reason there are outliers, even though generally the new CE improves things.

I am guessing that a lot of people out there never proactively change the DBLEVEL - it just gets dragged 'UP' at some point during an upgrade due to the 3 version support............?

Steve O.

-

December 23, 2020 at 7:18 am

SteveOC wrote:We hit a problem when our 2014 SQL instance went from Standard to Enterprise to enable more Memory,

Everything was slow - but I had been pushing for DBLEVEL changes forever for performance reasons and pushed through the change which made the problem go away - for all but 1 SP that just kept timing out.

Like people have said, forcing that 1 SP to use the old CE resolved the issue - but means having to be aware of it.

So for whatever reason there are outliers, even though generally the new CE improves things.

I am guessing that a lot of people out there never proactively change the DBLEVEL - it just gets dragged 'UP' at some point during an upgrade due to the 3 version support............?

Steve O.

What are you referring to as "DBLEVEL"?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 23, 2020 at 9:21 am

Hello Jeff.

Sorry, I was referring to the Compatability Level of the individual DB - not the version/patch level of SQL.

A lot of the SP code here gets executed from an in-house DB - but against a vendor DB and the in-house DB was at 2008 and using the old CE. I have been getting these changed since doing so has not been part SQL upgrades in the past - they would just get dragged up a level if they fell outside the 3 versions.

Regards

Steve O.

-

December 23, 2020 at 3:36 pm

Thanks, Steve. I thought there was a new setting I didn't know about yet. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 23, 2020 at 3:50 pm

Believe me Jeff I know next to nothing - other than what I learn from you guys - and lose the plot a little sometimes when it comes to the correct terminology.

BTW - I have been using the RBAR term a lot lately in emails - didn't know where I had picked it up from - now I see that maybe I got that from you / your sig?

Regards

Steve O.

-

December 23, 2020 at 4:31 pm

I'm the guy that coined the phrase. I used in a lot in posts but had just started writing articles way-back-when. IIRC, the first time I used it in an article was in my very early article on Triangular Joins. I never intended it but the term took off like wildfire. I'm amazed that it's even made it into people's books and articles. If you know what it means, it really does make it easy to explain the problem with some code.

Thanks for the feedback, Steve.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 23, 2020 at 8:36 pm

Jeff Moden wrote:I'm the guy that coined the phrase.

And then spent hours creating an exploding

RBARGIF, I seem to remember!- This reply was modified 5 years, 2 months ago by Phil Parkin.

-

December 23, 2020 at 8:41 pm

Phil Parkin wrote:Jeff Moden wrote:I'm the guy that coined the phrase.

And then spent hours creating an exploding

RBARGIF, I seem to remember!That's the one... too bad SQLServerCentral doesn't work for GIF avatars anymore.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 12 posts - 1 through 12 (of 12 total)

You must be logged in to reply to this topic. Login to reply