By Steve Bolton

…………In the last series of mistutorials I published in this amateur SQL Server blog, the outlier detection methods I explained were often of limited usefulness because of a chicken-and-egg problem: some of the tests could tell us that certain data points did not fit a particular set of expected values, but not whether those records were aberrations from the correct distribution, or if our expectations were inappropriate. The problem is akin to trying to solve an algebra problem with too many variables, which often can’t be done without further information. Our conundrum can be addressed by adding that missing information through goodness-of-fit tests, which can give us independent verification of whether or not our data ought to follow a particular distribution; only then can we apply batteries of other statistical tests that require particular distributions in order to make logically valid inferences, including many of the outlier identification methods discussed previously in this blog.

…………As I touched on frequently in that series, it is not uncommon for researchers in certain fields to fail to perform distribution testing, which thereby renders many of their published studies invalid. It is really an obvious problem that any layman can grasp: if we don’t have an expected pattern in mind, then it is difficult to define departures from it, which is essentially what outliers are. Goodness-of-fit tests also provide insights into data that are useful in and of themselves, as a sort of primitive form of data mining, which can be leveraged further to help us make informed choices about which of the more advanced (and concomitantly costly in terms of performance and interpretation effort) algorithms ought to be applied next in a data mining workflow. In fact, SSDM provides a Distribution property allowing users to specify whether a mining column follows a Log Normal, Normal or Uniform pattern, as I touched on briefly in A Rickety Stairway to SQL Server Data Mining, Part 0.0: An Introduction to an Introduction. In this series of mistutorials, I will be focusing more on the information that goodness-of-fit testing can give us about our data, rather than on the statistical tests (particularly on hypotheses) it typically serves as a prerequisite to. For all intents and purposes, it will be used as a ladder to future blog posts on more sophisticated data mining techniques that can be implemented in SQL Server, provided that we have some prior information about the distribution of the data.

Probability Distributions vs. Regression Lines

Goodness-of-fit tests are also sometimes applicable to regression models, which I introduced in posts like A Rickety Stairway to SQL Server Data Mining, Algorithm 2: Linear Regression and A Rickety Stairway to SQL Server Data Mining, Algorithm 4: Logistic Regression. I won’t rehash the explanations here for the sake of brevity; suffice it to say that regressions can be differentiated from probability distributions by looking at them as line charts which point towards the predicted values of one or more variables, whereas distributions are more often represented as histograms representing the full range of a variable’s actual or potential values. I will deal with methods more applicable to regression later in this series, but in this article I’ll explain some simple methods for implementing the more difficult concept of a probability distribution. One thing that sets distributions apart is that many common histogram shapes associated with them have been labeled, cataloged and studied intensively for generations, in a way that the lines produced by regressions have not. In fact, it may be helpful for people with programming backgrounds (like many SQL Server DBAs) to look at them as objects, in the same sense as object-oriented programming. For example, some of them are associated with Location, Scale and Shape parameters and characteristics like the mode (i.e. the peak of the histogram) and median that can be likened to properties. For an excellent explanation of location and scale parameters that any layman could understand, see the National Institute of Standards and Technology’s Engineering Statistics Handbook, which is one of the most readable sources of information on stats that I’ve found online to date. Statisticians have also done an enormous amount of work studying every conceivable geometrical subsection of distributions and devised measures for them, such as skewness and kurtosis, which describe the lopsidedness and heaviness of the left and right corners or “tails” of a histogram.
Each distribution has an associated set of functions, such as the probability density function (PDF) in the case of Continuous data types (as explained in A Rickety Stairway to SQL Server Data Mining, Part 0.0: An Introduction to an Introduction) or the probability mass function (PMF) in the case of Discrete types. “Probability distribution function” (PDF) is occasionally used for either one in the literature and will be used as a catch-all term throughout this series.[i] Other common functions associated with distributions include the cumulative distribution function (CDF); inverse cumulative distribution function (also known as the quantile function, percent point function, or ppf); hazard function; cumulative hazard function; survival function; inverse survival function; empirical distribution function (EDF); moment-generating function (MGF) and characteristic function (CF)[ii]. I’ll save discussions of more advanced functions for Fisher Information and Shannon’s Entropy that are frequently used in information theory and data mining for a future series, Information Measurement with SQL Server. Furthermore, many of these functions can have orders applied to them, such as rankits, which are a concept I’ll deal with in the next article. I don’t yet know what many of them do, but some of the more common ones like the PDFs and CDFs are implemented in the goodness-of-fit tests for particular distributions, so we’ll be seeing T-SQL code for them later in this series.

…………I also don’t yet know what situations you’re liable to encounter particular data distributions in, although I aim to by the end of the series. I briefly touched on Student’s T-distribution in the last series, where it is used in some of the hypothesis-testing based outlier detection methods, but I’m not yet acquainted with some of the others frequently mentioned in the data mining literature, like the Gamma, Exponential, Hypergeometric, Poisson, Pareto, Tukey-Lambda, Laplace and Chernoff distributions. The Chi-Squared distribution is used extensively in hypothesis testing, the Cauchy is often used in physics[iii] and the Weibull “is used to model the lifetime of technical devices and is used to describe the particle size distribution of particles generated by grinding, milling and crushing operations.”[iv] What is important for our purposes, however, is that all of the above are mentioned often in the information theory and data mining literature, which means that we can probably put them to good use in data discovery on SQL Server tables.

…………If you really want to grasp the differences between them at a glance, a picture is worth a thousand words: simply check out the page “1.3.6.6 Gallery of Distributions” at the aforementioned NIST handbook for side-by-side visualizations of 19 of the most common distributions. Perhaps the simplest one to grasp is the Uniform Distribution, which has a mere flat, straight line as a histogram; in other words, all values are equally likely, as we would see in rolls of a single die. The runner-up in terms of simplicity is the Bernoulli Distribution, which is merely the distribution associated with Boolean yes-no questions. Almost all of the explanations I’ve seen for it to date have revolved around coin tosses, which any elementary school student can understand. Dice and coin tosses are invariably used to illustrate such concepts in the literature on probabilities because they’re so intuitive, but they also have an advantage in that we can calculate exactly what the results should be, in the absence of any empirical evidence. The problem we run into in data mining is that we’re trying to discover relationships that we can’t reason out in advance, using the empirical evidence provided by the billions of rows in our cubes and tables. Once we’ve used goodness-of-fit testing to establish that the data we’ve collected indeed follows a particular distribution, then we can use all of the functions, properties, statistical tests, data mining techniques and theorems associated with it to quickly make a whole series of new inferences.

The “Normal” Distribution (i.e. the Bell Curve)

…………This is especially true of the Gaussian or “normal” distribution, which is by far the most thoroughly studied of them all, simply because an uncanny array of physical processes approximates it. The reasons for its omnipresence are still being debated to this day, but one of the reasons is baked right into the structure of mathematics through such laws as the Central Limit Theorem. Don’t let the imposing name scare you, because the concept is quite simple – to the point where mobsters, I’m told, used to teach themselves to instantly calculate gambling odds from it in order to run book-making operations. Once again, dice are the classic example used to explain the concept: there are obviously many paths through which one could roll a total of six from two dice, but only one combination apiece for snake eyes or boxcars. The totals thus naturally pile up in the middle, and as more dice are added to the sum the histogram approaches the familiar bell curve associated with the normal distribution. The most common version of it is the “standard normal distribution,” in which a mean of zero and standard deviation of one are plugged into its associated functions, which force it to form a clean bell curve centered on the zero mark in a histogram. The frequency with which the normal distribution pops up in nature is what motivates the disproportionate amount of research poured into it; even the Student’s T-distribution and the Chi-Square Distribution, for example, are used more often in tests of the normal distribution than as descriptions of a dataset in their own right.
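The dice example is easy to check by brute force. The following is only a minimal sketch, not part of the procedures in this series: it enumerates all 36 combinations of two six-sided dice with a cross join and counts how many produce each total. The DieCTE name and its column are made up for illustration.

```sql
-- Enumerate every combination of two six-sided dice and count the ways to reach each total.
-- DieCTE and Face are hypothetical names used only for this illustration.
WITH DieCTE (Face) AS
(
    SELECT Face
    FROM (VALUES (1), (2), (3), (4), (5), (6)) AS T1 (Face)
)
SELECT Die1.Face + Die2.Face AS Total, Count(*) AS NumberOfCombinations
FROM DieCTE AS Die1
    CROSS JOIN DieCTE AS Die2
GROUP BY Die1.Face + Die2.Face
ORDER BY Total
```

Snake eyes (2) and boxcars (12) each come back with a single combination, a total of six with five, and a total of seven with six. With only two dice the counts form a triangle rather than a true bell, but the peak in the middle is already visible, and it smooths out as more dice are summed.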

…………Unfortunately, one side effect of this lopsided devotion to one particular distribution is that there are far fewer statistical tests associated with its competitors – which tempts researchers into forgoing adequate goodness-of-fit testing, which can also be bothersome, expensive and a bit inconvenient if it disproves their assumptions. Without it, however, there is a gap in the ladder of logic needed to prove anything with hypothesis testing, or to discover new relationships through data mining. This step is disregarded with unnerving frequency – particularly in the medical field, where it can do the most damage – but ought not be, when we can use set-based languages like T-SQL and modern data warehouse infrastructure to quickly perform the requisite goodness-of-fit tests. Perhaps some of the code I’ll provide in this series can even be used in automated testing on a weekly or monthly basis, to ensure that particular columns of interest still follow a particular distribution over time and don’t come uncoupled from it, as stocks, bonds, derivatives and other financial instruments do so frequently from other economic indicators. It is often a fair assumption that a particular dataset ought to follow a normal distribution, but it doesn’t always hold – nor can we say why in many of the cases where it actually does, since the physical processes captured in our billions of records are several orders of magnitude more complex than rolls of dice and coin tosses. Nor can we be certain that many of these complex processes will continue to follow a particular distribution over time, particularly when that most inscrutable of variables, human free will, is factored in.

…………Luckily, there are many goodness-of-fit tests available for the normal distribution, which is fitting given that so much statistical reasoning is dependent on it. Most of the articles in this series will thus be devoted to normality testing, although we may encounter other distributions from time to time, not to mention the tangential topic of regression. I considered kick-starting this series with four incredibly easy methods of normality testing, but one of them turned out to be nowhere near as popular or simple to implement as I believed. The ratio between the min-max range of a column and its standard deviation is listed among the earliest normality tests at Wikipedia[v], but I decided against implementing it fully due to the lack of available comparison values. The concept is quite simple: you subtract the minimum value from a column’s maximum value, then divide by the standard deviation and compare it to a lookup table, but the only reference I could find (in David, et al.’s original paper[vi] from 1954) only went up to 1,000 records and only supplied values for 30 of them. We frequently encountered the same twin problems in the outlier detection series with methods based on hypothesis-testing: most of the lookup tables have massive gaps and are applicable to only a few hundred or thousand records at best, which means they are unsuited to the size of typical SQL Server tables or that popular buzzword, “Big Data.” In the absence of complete lookup tables ranging to very high values, the only alternative is to calculate the missing values ourselves, but I have not yet deciphered these particular formulas sufficiently well. Nor is there much point, given that this particular measure is apparently not in common use and might not be applicable to big tables for other reasons, such as the fact that the two bookend values in a dataset of 10 million records probably don’t have much significance.
The code in Figure 1 runs fast and is easy to follow, but lacks meaning in the absence of lookup tables to judge what the resulting ratio ought to be for a Gaussian distribution.

Figure 1: Code to Derive the Ratio of the Range to Standard Deviation

CREATE PROCEDURE [Calculations].[NormalityTestRangeStDevSP]

@DatabaseName as nvarchar(128) = NULL, @SchemaName as nvarchar(128), @TableName as nvarchar(128),@ColumnName AS nvarchar(128), @DecimalPrecision AS nvarchar(50)

AS

DECLARE @SchemaAndTableName nvarchar(400),@SQLString nvarchar(max)

SET @SchemaAndTableName = ISNULL(@DatabaseName + '.', '') + @SchemaName + '.' + @TableName --I'll change this value one time, mainly for legibility purposes; a NULL @DatabaseName now falls back to the current database instead of nulling out the whole string

SET @SQLString = 'DECLARE @Count bigint, @StDev decimal(' + @DecimalPrecision + '), @Range decimal(' + @DecimalPrecision + ')

SELECT @Count=Count(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + '))), @StDev = StDev(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + '))),

@Range = Max(CAST(' + @ColumnName + ' AS decimal(' + @DecimalPrecision + '))) - Min(CAST(' + @ColumnName + ' AS decimal(' + @DecimalPrecision + ')))

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL

SELECT @Range / @StDev AS RangeStDevRatio'

--SELECT @SQLString -- uncomment this to debug string errors

EXEC (@SQLString)

…………Thankfully, we have better replacements available at the same low level of complexity. One of the most rudimentary normality tests that any DBA can easily implement and interpret is the 68-95-99.7 Rule, also known as the 3-Sigma Rule. The logic is very simple: if the data follows a normal distribution, then about 68 percent of the values should fall within one standard deviation of the mean, 95 percent within two and 99.7 percent within three. This can be verified with a simple histogram of distinct counts, of the kind I introduced at the tail end of the last tutorial series. To implement my version, all I did was tack the code in Figure 2 onto the last Select in the HistogramBasicSP stored procedure I posted in Outlier Detection with SQL Server, part 6.1: Visual Outlier Detection with Reporting Services. I also changed the name to HistogramBasicPlusNormalPassFailSP to reflect the added capabilities; for brevity’s sake, I won’t repeat the rest of the code. A @NumberOfStDevsFromTheMean parameter can be added to this code and combined with a clause like SELECT 1 - (1.0 / POWER(@NumberOfStDevsFromTheMean, 2)) to calculate Chebyshev’s Rule, a less strict test that applies to almost any distribution, not just the normal. In practice, this signifies that for any distribution with a finite standard deviation, at least three-quarters of the values will fall within two standard deviations of the mean, at least 88.9 percent within three, and at least 93.75 and 96 percent within four and five standard deviations respectively; the rule says nothing useful about a single standard deviation, where the bound works out to zero. The 3-Sigma Rule is closely related to the Law of Large Numbers and Chebyshev’s Rule to its poor cousin, the Weak Law of Large Numbers; if your data fails the first test there’s no reason to hit the panic button, since it might not naturally follow a normal distribution, but failing Chebyshev’s Rule is cause to raise more than one eyebrow.
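To make the difference between the two rules concrete, here is a minimal sketch that lists the Chebyshev lower bound, 1 - 1/k² for k standard deviations, next to the approximate percentages expected for a normal distribution. The derived table and its column names are made up for illustration. The divisor is written as 1.0 and the exponent is cast to float so that T-SQL does not fall back on integer division.

```sql
-- Chebyshev's lower bound applies to any distribution with a finite variance;
-- the NormalPercentage values are the approximate 68-95-99.7 figures.
-- T1 and its column names are hypothetical and exist only for this illustration.
SELECT NumberOfStDevsFromTheMean,
    CAST(100 * (1 - 1.0 / POWER(CAST(NumberOfStDevsFromTheMean AS float), 2)) AS decimal(5,2)) AS ChebyshevMinimumPercentage,
    NormalPercentage
FROM (VALUES (1, 68.0), (2, 95.0), (3, 99.7)) AS T1 (NumberOfStDevsFromTheMean, NormalPercentage)
```

The Chebyshev column should come out at roughly 0, 75 and 88.9 percent, which shows how much looser the bound is than the normal figures. That looseness is why a column can fail the 3-Sigma Rule without any cause for alarm, while a column that falls below the Chebyshev minimum more likely points to a problem in the calculations.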

Figure 2: Code to Add to the HistogramBasicSP from the Outlier Detection Series

WHEN @HistogramType = 4 THEN 'SELECT *, ''FirstIntervalTest'' =

CASE WHEN FirstIntervalPercentage BETWEEN 68 AND 100 THEN ''Pass''

ELSE ''Fail'' END,

''SecondIntervalTest'' = CASE WHEN SecondIntervalPercentage BETWEEN 95 AND 100 THEN ''Pass''

ELSE ''Fail'' END,

''ThirdIntervalTest'' = CASE WHEN ThirdIntervalPercentage BETWEEN 99.7 AND 100 THEN ''Pass''

ELSE ''Fail'' END

FROM (SELECT TOP 1 CAST(@PercentageMultiplier *

(SELECT Sum(FrequencyCount) FROM DistributionWithIntervalsCTE WHERE

StDevInterval BETWEEN -1 AND 1) AS decimal(6,2)) AS FirstIntervalPercentage,

CAST(@PercentageMultiplier * (SELECT

Sum(FrequencyCount) FROM DistributionWithIntervalsCTE WHERE StDevInterval

BETWEEN -2 AND 2) AS decimal(6,2)) AS SecondIntervalPercentage,

CAST(@PercentageMultiplier * (SELECT

Sum(FrequencyCount) FROM DistributionWithIntervalsCTE WHERE StDevInterval

BETWEEN -3 AND 3) AS decimal(6,2)) AS ThirdIntervalPercentage

FROM DistributionWithIntervalsCTE) AS T1'

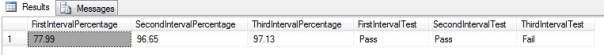

Figure 3: Results of the HistogramBasicPlusNormalPassFailSP on the Hemopexin Column

EXEC Calculations.HistogramBasicPlusNormalPassFailSP

@DatabaseName = N'DataMiningProjects',

@SchemaName = N'Health',

@TableName = N'DuchennesTable',

@ColumnName = N'Hemopexin',

@DecimalPrecision = '38,21',

@HistogramType = 4

…………The results in Figure 3 are child’s play to interpret: the Hemopexin column (in a dataset on the Duchennes form of muscular dystrophy which I downloaded from the Vanderbilt University’s Department of Biostatistics and converted to a SQL Server table) does not quite fit a normal distribution, since the count of values for the first two standard deviations falls comfortably within the 68-95-99.7 Rule, but the third does not. Whenever I needed to stress-test the code posted in the last tutorial series on something more substantial than the Duchennes dataset’s mere 209 rows, I turned to the Higgs Boson dataset made available by the University of California at Irvine’s Machine Learning Repository, which now occupies close to 6 gigabytes of the same DataMiningProjects database. Hopefully in the course of one of these tutorial series (which I plan to keep writing for years to come, till I actually know something about data mining) I will be able to integrate practice datasets from the Voynich Manuscript, an inscrutable medieval tome encrypted so well that no one has been able to crack it for the last half-millennium – even the National Security Agency (NSA). The first float column of the Higgs Boson dataset probably makes for a better performance test though, given that the table has 11 million rows, far more than the tens or hundreds of thousands of rows in the tables that I’ve currently compiled from the Voynich Manuscript. The good news is that this simple procedure gave us a quick and dirty normality test in just 4 minutes and 16 seconds on my six-core Sanford and Son version of a development machine – which hardly qualifies as a real server, so the results in a professional setting will probably blow that away.
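For readers who don’t have the HistogramBasicSP code from the earlier series at hand, the same pass-fail idea can be sketched in standalone form. This is not the procedure used above, just a rough illustration under stated assumptions: the @Sample table variable, its Value1 column and the eight values in it are made up, and the thresholds simply mirror the one-sided 68, 95 and 99.7 cut-offs in Figure 2.

```sql
-- Hypothetical sample values standing in for a real column; swap in your own table.
DECLARE @Sample TABLE (Value1 float NOT NULL)

INSERT INTO @Sample (Value1)
VALUES (2), (4), (4), (4), (5), (5), (7), (9)

DECLARE @Mean float, @StDev float, @PercentageMultiplier float

-- This assumes the sample is not empty; an empty table would raise a divide-by-zero error here
SELECT @Mean = Avg(Value1), @StDev = StDev(Value1), @PercentageMultiplier = 100.0 / Count(*)
FROM @Sample

-- Percentage of rows within one, two and three standard deviations of the mean,
-- followed by the same one-sided pass-fail thresholds used in Figure 2
SELECT *,
    CASE WHEN FirstIntervalPercentage >= 68 THEN 'Pass' ELSE 'Fail' END AS FirstIntervalTest,
    CASE WHEN SecondIntervalPercentage >= 95 THEN 'Pass' ELSE 'Fail' END AS SecondIntervalTest,
    CASE WHEN ThirdIntervalPercentage >= 99.7 THEN 'Pass' ELSE 'Fail' END AS ThirdIntervalTest
FROM (SELECT
    CAST(@PercentageMultiplier * Sum(CASE WHEN Abs(Value1 - @Mean) <= 1 * @StDev THEN 1 ELSE 0 END) AS decimal(6,2)) AS FirstIntervalPercentage,
    CAST(@PercentageMultiplier * Sum(CASE WHEN Abs(Value1 - @Mean) <= 2 * @StDev THEN 1 ELSE 0 END) AS decimal(6,2)) AS SecondIntervalPercentage,
    CAST(@PercentageMultiplier * Sum(CASE WHEN Abs(Value1 - @Mean) <= 3 * @StDev THEN 1 ELSE 0 END) AS decimal(6,2)) AS ThirdIntervalPercentage
    FROM @Sample) AS T1
```

Because it scans the data only twice and skips the histogram buckets entirely, a variant of this pointed at a real table may be cheaper than the histogram-based version, though I have not timed it against the Higgs Boson data.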

Figure 4: Code to Derive the Ratio of Mean Absolute Deviation to Standard Deviation

CREATE PROCEDURE [Calculations].[NormalityTestMeanAbsoluteDeviationStDevRatioSP]

@DatabaseName as nvarchar(128) = NULL, @SchemaName as nvarchar(128), @TableName as nvarchar(128),@ColumnName AS nvarchar(128), @DecimalPrecision AS nvarchar(50)

AS

DECLARE @SchemaAndTableName nvarchar(400),@SQLString nvarchar(max)

SET @SchemaAndTableName = ISNULL(@DatabaseName + '.', '') + @SchemaName + '.' + @TableName --I'll change this value one time, mainly for legibility purposes; a NULL @DatabaseName now falls back to the current database instead of nulling out the whole string

SET @SQLString = 'DECLARE @Mean decimal(' + @DecimalPrecision + '), @StDev decimal(' + @DecimalPrecision + ')

SELECT @Mean = Avg(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + '))), @StDev = StDev(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + ')))

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL

SELECT MeanAbsoluteDeviation / @StDev AS Ratio, 0.79788456080286535587989211986877 AS RatioTarget, MeanAbsoluteDeviation, @StDev as StandardDeviation

FROM (SELECT Avg(Abs(' + @ColumnName + ' - @Mean)) AS MeanAbsoluteDeviation

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL) AS T1'

--SELECT @SQLString -- uncomment this to debug dynamic SQL errors

EXEC (@SQLString)

Figure 5: Results for the Mean Absolute Deviation to Standard Deviation Ratio Test

DECLARE @return_value int

EXEC @return_value = [Calculations].[NormalityTestMeanAbsoluteDeviationStDevRatioSP]

@DatabaseName = N'DataMiningProjects',

@SchemaName = N'Physics',

@TableName = N'HiggsBosonTable',

@ColumnName = N'Column1',

@DecimalPrecision = N'33,29'

…………If HistogramBasicPlusNormalPassFailSP is still too slow for your needs, it may be a relief to know that the code in Figure 4 took only two seconds to run on Column1 on the same machine and a mere five seconds on Column2, which wasn’t properly indexed at the time. The procedure really isn’t hard to follow, if you’ve seen some of the T-SQL code I posted in the last tutorial series. For consistency’s sake, I’ll be using many of the same parameters in this tutorial series as I did in the last, including @DecimalPrecision, which enables users to avoid arithmetic overflows by setting their own precision and scale for the internal calculations. As we saw in the Visual Outlier Detection with Reporting Services segment of the last series, this parameter can also be used to prevent a mystifying problem in which RS reports occasionally return blank results for some columns, if their precision and scale are set too high. The first four parameters allow users to perform the normality test on any numeric column in any database for which they have adequate access, while the next-to-last line allows users to debug the dynamic SQL.

…………In between those lines it calculates the absolute deviation for each row – i.e. the absolute difference between the value for each record and the average of the whole column, which was encountered in Z-Scores and other outlier detection methods in the last series – then takes the average and divides it by the standard deviation. I haven’t yet found a good guide as to how far the resulting ratio should be from the target ratio (which is always the square root of the quantity two divided by pi, or roughly 0.7979) to disqualify a distribution from being Gaussian, but I know from experience that Column1 is highly abnormal, whereas Column2 pretty much follows a bell curve. The first had a ratio of 0.921093 as depicted in Figure 5, whereas Column2 scored 0.823127 in a subsequent test, so its ratio landed fairly close to the target as expected.[vii] In its current form, the test lacks precision because there is no definite cut-off criterion, although one may have been published somewhere I’m unaware of – especially since I’m an amateur learning as I go, which means I’m unaware of a lot that goes on in the fields related to data mining. It is still useful, however, because as a general rule of thumb we can judge that the abnormality of a dataset grows with the distance between the ratio and the constant target value.
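The target constant and the ratio itself can both be reproduced on a toy scale. The following is a minimal sketch rather than a replacement for the stored procedure: SampleCTE, StatsCTE and the five sample values are made up for illustration, and the values are cast to float so that Avg does not perform integer arithmetic.

```sql
-- Hypothetical five-row sample; SampleCTE and StatsCTE are illustrative names only.
WITH SampleCTE (Value1) AS
(
    SELECT CAST(Value1 AS float)
    FROM (VALUES (1), (2), (3), (4), (5)) AS T1 (Value1)
),
StatsCTE (Mean, StandardDeviation) AS
(
    SELECT Avg(Value1), StDev(Value1)
    FROM SampleCTE
)
-- Mean absolute deviation divided by the sample standard deviation, next to the Gaussian target
SELECT Avg(Abs(Value1 - Mean)) / Max(StandardDeviation) AS Ratio,
    SQRT(2 / PI()) AS RatioTarget
FROM SampleCTE
    CROSS JOIN StatsCTE
```

For these five values the mean absolute deviation is 1.2 and the sample standard deviation is about 1.58, so the ratio should land near 0.76, against a target of about 0.798. A sample this small says nothing about normality, of course; the point is only to show where the two numbers in the procedure’s output come from.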

Climbing the Ladder of Sophistication with Goodness-of-Fit

I’m fairly sure that the second float column in the Higgs Boson Dataset is Gaussian and certain that the first is not, given the shapes of the histograms provided for both in Outlier Detection with SQL Server, part 6.1: Visual Outlier Detection with Reporting Services. Histograms represent the easiest visual test of normality you can find; it may take someone with more statistical training than I have to interpret borderline cases, but any layman can detect at a glance when a distribution is definitely following some other shape besides a bell curve. In the next installment of the series, I hope to explain how to use a couple of other visual detection methods like probability plots and Q-Q plots which are more difficult to code and reside at the upper limit of what laymen can interpret at a glance. I had a particularly difficult time calculating the CDFs for the normal distribution, for example. After that I will most likely write something about skewness, kurtosis and the Jarque-Bera test, which are also still within the upper limit of what laymen can interpret; in essence, that group measures how lopsided a distribution is on the left or right side (or “tail”) of its histogram. I wrote code for some of those measures long ago, but after that I will be in uncharted territory with topics with imposing names like the Shapiro-Wilk, D’Agostino’s K-Squared, Hosmer–Lemeshow, Chi-Squared, G, Kolmogorov-Smirnov, Anderson-Darling, Kuiper’s and Lilliefors Tests. I have a little experience with the Likelihood Ratio Test Statistic, Coefficient of Determination (R2) and Lack-of-Fit Sum of Squares, but the rest of these are still a mystery to me.

…………This brings me to my usual disclaimer: I’m publishing this series in order to learn the topic, since the act of writing helps me digest new topics a lot faster and forces me to think about them more explicitly. I mainly teach through misadventure; my posts often end up as cautionary tales that amount to, “Don’t go about this the way this guy did.” There’s still some value in that, but always take the word of a professional over mine if I say anything that contradicts them; my word may carry weight in topics I have expertise in (such as foreign policy history, which I could teach at the graduate school level at the drop of a hat) but data mining and the associated statistics are definitely not among them (yet). Hopefully by the end of the series I will have learned more about probability distributions and their associated tests and made some contributions towards coding them in T-SQL; I may post a coda at the end with a use case map that can help DBAs differentiate at a glance between the various goodness-of-fit tests and their proper applications for particular distributions. At present I plan to end the series with a group of six goodness-of-fit tests with wickedly cool names like the Cramér–von Mises Criterion and the Deviance, Focused, Hannan-Quinn, Bayesian and Akaike Information Criteria. The last two of these are mentioned frequently in the information theory literature, which will help provide another springboard towards a much more interesting series I’ve been planning to write for some time, Information Measurement with SQL Server. I already built two bridges to this potentially useful but really advanced series at the tail end of Outlier Detection with SQL Server, with my posts on Cook’s Distance and Mahalanobis Distance. My earlier tutorial series on A Rickety Stairway to SQL Server Data Mining also served as a bridge of sorts, since some of the algorithms are related to the methods of information measurement we’ll touch on in that future series.
Understanding probability distributions and goodness-of-fit is a prerequisite of sorts to cutting edge topics like Shannon’s Entropy, Minimum Description Length (MDL) and Kolmogorov Complexity that I’ll deal with in that series, which may be quite useful to miners of SQL Server data.

[i] For a discussion, see the Wikipedia article “Probability Density Function” at http://en.wikipedia.org/wiki/Probability_density_function. I have seen “probability distribution function” used to denote both mass and density functions in other data mining and statistical literature, albeit infrequently.

[ii] See the Wikipedia article “Characteristic Function” at http://en.wikipedia.org/wiki/Characteristic_function_(probability_theory)

[iii] See the Wikipedia article “Cauchy Distribution” at http://en.wikipedia.org/wiki/Cauchy_distribution

[iv] See the Wikipedia article “List of Probability Distributions” at http://en.wikipedia.org/wiki/List_of_probability_distributions

[v] See the Wikipedia article “Normality Test” at http://en.wikipedia.org/wiki/Normality_test

[vi] See David, H. A.; Hartley, H. O. and Pearson, E. S., 1954, “The Distribution of the Ratio, in a Single Normal Sample, of Range to Standard Deviation,” pp. 482-493 in Biometrika, December 1954. Vol. 41, No. 3/4. I found the .pdf at the web address http://webspace.ship.edu/pgmarr/Geo441/Readings/David%20et%20al%201954%20-%20The%20Distribution%20of%20the%20Ratio%20of%20Range%20to%20Standard%20Deviation.pdf but it is apparently also available online at the JSTOR web address http://www.jstor.org/stable/2332728. I consulted other sources as well, like Dixon, W.J., 1950, “Analysis of Extreme Values,” pp. 488-506 in The Annals of Mathematical Statistics. Vol. 21, No. 4. Available online at the Project Euclid web address http://projecteuclid.org/euclid.aoms/1177729747 and p. 484, Pearson, E.S. and Stephens, M. A., 1964, “The Ratio Of Range To Standard Deviation In The Same Normal Sample,” pp. 484-487 in Biometrika, December 1964. Vol. 51, No. 3/4. Published online at the JSTOR web address http://www.jstor.org/discover/10.2307/2334155?uid=2129&uid=2&uid=70&uid=4&sid=21105004560473

[vii] I verified the internal calculations against the eight-value example at the MathBits.com page “Mean Absolute Deviation,” which is available at the web address http://mathbits.com/MathBits/TISection/Statistics1/MAD.html