Index maintenance in availability groups

-

I have a 4 node always on availability group setup across two datacentres with synchronous replication from node to node in the same site and asynchronous from nodes site to site.

I am setting up Index maintenance using Ola Hallengren's scripts and am very wary of the fact of rebuild/reorg indexes in an always on setup and the problem of the log files filling up.

Are there any recommendations you setting up the jobs parameters to help minimise the potential for the log files to explode. I have thought of using @SortInTempdb = 'Y' and @TimeLimit = 3600 but am unsure as to what else I can do.

I am planning to run something like this -

EXECUTE dbo.IndexOptimize

@Databases = 'ALL_DATABASES’,

@FragmentationLow = NULL,

@FragmentationMedium = 'INDEX_REORGANIZE,INDEX_REBUILD_ONLINE',

@FragmentationHigh = 'INDEX_REBUILD_ONLINE',

@FragmentationLevel1 = 50,

@FragmentationLevel2 = 80,

@SortInTempdb = 'Y',

@LogToTable = 'Y',

@TimeLimit = 3600

Thanks

-

February 18, 2020 at 1:36 pm

Minimize the amount of index reorgs you do. Most of them, most of the time, are actually unnecessary. Instead, focus on getting the right page free space and let the indexes arrive at a natural fragmentation state.

However, do then focus very hard on ensuring that you have good statistics maintenance. A lot of people skip stats because they're constantly rebuilding their indexes so they get new stats for free (effectively).

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 18, 2020 at 3:54 pm

To Grant's point, if you're going to REORGANIZE at 50 and REBUILD at 80, I wouldn't even bother with index maintenance. Not that I would recommend it for everyone but, as a broad, very interesting, incredibly fruitful experiment, I stopped doing ANY index maintenance on my main production system because of problems that index maintenance was actually causing (long story there but always resulted in massive blocking on the day after index maintenance). Performance actually got better (CPU usage dropped from an average of 22% to 8% over a period of 3 months and stayed there for almost 3 years on 16 core/32 threads and, yes... I have two 1TB databases and a shedload more smaller ones).

I say it may not be for everyone because of the other thing Grant spoke of... Page Density (% of page fullness), which affect memory usage and disk usage. If you have wicked tight memory (not much memory and huge amounts of data that should be in memory), you might have to watch your P's & Q's when it comes to memory and, therefore, Page Density.

The key for my success of not doing index maintenance was to be fairly aggressive about rebuilding statistics on a regular basis. If you don't do that, then even if you have index maintenance in place, you will fail because index maintenance doesn't deal with column statistics nor any statistics that are only REORGANIZEd.

I'll also state that REORGANIZE isn't the tame little kitty that everyone thinks it is when it comes to the log file and that, although it does follow the FILL FACTOR, it's not capable of making any new pages and so does NOT work the way that most people think it does. Because of the way it doesn't work (it doesn't actually work anything close to like REBUILD does), I was able to determine that ...

- It was actually responsible for a large amount of the blocking I experienced AND, because of the way it doesn't work, actually perpetuated and made page splits worse.

- It's not a tame little kitty. I ran an experiment on one of my larger tables of (at the time) 140GB. It has an "ever-increasing" key for the PK and so data always went in at the logical end of the index. Then, they would delete about 60% of the data because it was "duplicated" (don't ask... long and still silly story). The only reason why logical fragmentation occurred was because whole pages of data were frequently deleted (wide rows, only 9 per page). For the experiment, I'd restore a backup to a test system so that I was always dealing with exactly the same conditions.The PK (Clustered Index) only had 12% logical fragmentation and it was all at the logical end of the index. When I did a reorganize, the log file exploded from 20GB to a whopping 227GB and took an hour and 21 minutes! An ONLINE REBUILD did the same damned thing! And OFFLINE REBUILD took almost as long but the log file "only" grew to 140GB. And OFFLINE REBUILD in the BULK LOGGED Recovery Model (not possible for those with AG or anything else that relies on the log file) only took 12 MINUTES and the log file only grew to 37GB. I later discovered that was because I had some stuff "trapped" in the log file because I wasn't doing backups during the experiment and there was actually less than 20GB of log file generated by the BULK LOGGED ONLINE REBUILD.

We use a form of SAN replication, which means that I don't have to agonize over preserving log files but that also means that I also don't have to use REORGANIZE to do index maintenance. To wit, I NEVER use REORGANIZE unless I need to do some form of LOB compaction and even that doesn't work all in one shot. I've had to run REORGANIZE up to 10 times on the same Clustered Index to get LOB compaction to be totally successful for out of row stuff and it actually does very, very little to help if you've made the mistake of not forcing LOBs to always go out of row because of "trapped short rows" (my simple description of the problem) that will be filled to as little as something less than 1%.

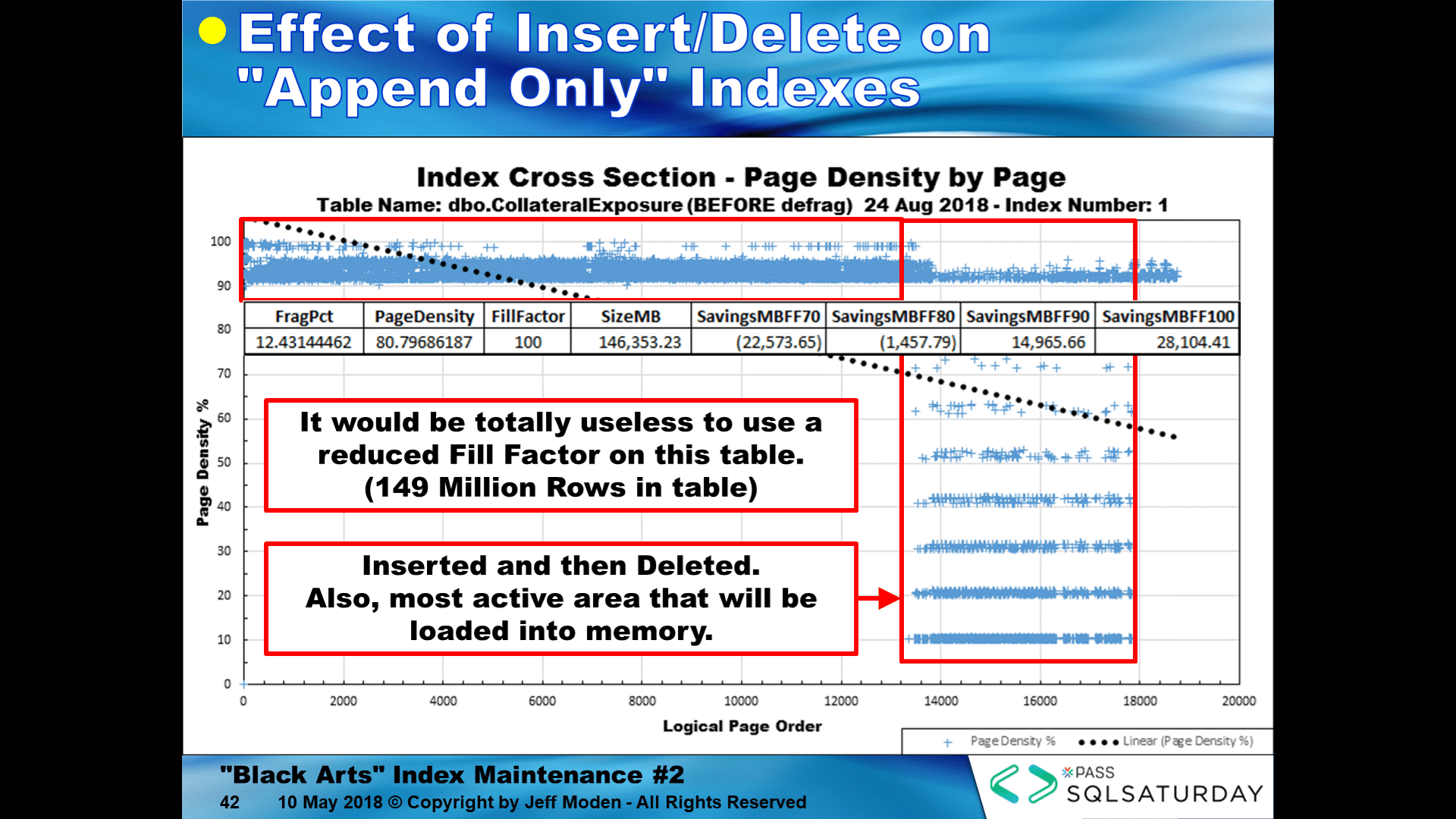

My recommendation is to be really careful, especially about doing any form of index maintenance on indexes that have a "0" FILL FACTOR (which is identical to a 100% FILL FACTOR) because you can end up with a huge number of pages splits, rampant log file usage, and the resulting huge amounts of blocking on "the morning after" you do such index maintenance. In other words, if you haven't taken the time to determine the right FILL FACTOR for an index, then you simply don't know what the index is going to do to you if you REBUILD or REORGANIZE it. If you need to recover a lot of space because the page density has dropped to 50% or less, then you MIGHT want to REBUILD it at 82%. The "2" reminds you that you have something "to do" to determine what's going on with the index and that the only reason for REBUILDing it was to recover memory and disk space. Even then, you have to be careful... here's an example of that 146GB index and why I don't rebuild it at 82%. It would be a total waste (the numbers in parenthesis are negative numbers)...

I'm also not a deep study of Ola's good code but, IIRC, it doesn't do any maintenance based on Page Density, which is something that you absolutely need to consider in any form of index maintenance.

And, yeah... REBUILDing very large indexes is going to need a trick with FileGroup swapping to keep you from having huge amounts of unwanted unused space in your MDF/NDF files.

Like what happened to me with the huge amount of blocking I experienced, index maintenance shouldn't be done in a "general purpose" manner because of all the things I've said here and much more. If you don't know what it is, consider not doing anything with it until you do. Spend your time rebuilding stats, instead.

And, no... I'm NOT saying to stop doing index maintenance. What I'm saying is to stop damaging your database by doing index maintenance on indexes that you know nothing about. Figure out what your indexes are doing and what their insert and update patterns are so that you can maintain them correctly.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 20, 2020 at 9:40 am

As far as I know Index maintenance has not been undertaken at my workplace for a long period of time if at all and I would be setting it up to run for 'general purposes'. No-one has complained about slow applications but if index maintenance has never been done I'd guess they wouldn't know any better.

Very little development work is actually undertaken on the SQL databases, it is the case that off the shelf products are bought with a SQL database backend that needs to go somewhere and no development is undertaken on them afterwards apart from upgrades.

I have never changed the fill factor on an instance or index level, so it will be using the default of 100% (or 0%), unless the database/indexes are setup with a different fill factor by the creators of the product. And because of the off-the-shelf nature of the products I don't know about the indexes insert/update patterns so couldn't make a call about this.

I have on occasion rebuilt or created indexing on specific databases in specific circumstances when things where runnign vastly slower than bfore or there was a blocking issue. I also recently setup to run statistics rebuild job overnight on the instances.

I think I am going to not setup a 'general purpose' rebuild/reorg indexing job at the moment and continue to run the stats maintenace and any ad-hoc indexing mainatence that is needed. I am not sure that implementing a rebuild job would be a benefit as it could cause far more issues than problems it fixes.

-

Given this situation, yes, statistics maintenance should be the top of your list. Also, adjustments to the default settings of SQL Server. They're not always great. Cost Threshold for Parallelism is set to 5, a ridiculous number, and should be adjusted upwards. Optimize for Ad Hoc is disabled and ought to be enabled on the vast majority of systems. Max memory settings are frequently not set and should be. Finally, a CPU max should be set as well (do some research on how to pick that number, it's complicated). All this stuff will help a lot more than sweating index fragmentation.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 20, 2020 at 2:30 pm

garryha wrote:I have never changed the fill factor on an instance or index level, so it will be using the default of 100% (or 0%), unless the database/indexes are setup with a different fill factor by the creators of the product. And because of the off-the-shelf nature of the products I don't know about the indexes insert/update patterns so couldn't make a call about this.

Unless all the indexes are based on Random GUIDs, I strongly recommend that you don't change the default of "0/100" because, again, unless you know what an index is doing, it could cause a huge amount a totally ineffective waste of both memory and disk space.

garryha wrote:I have on occasion rebuilt or created indexing on specific databases in specific circumstances when things where runnign vastly slower than bfore or there was a blocking issue. I also recently setup to run statistics rebuild job overnight on the instances.

Both of those are the keys to success, especially keeping up with statistics on really active boxes, especially if a lot of or most of the Clustered Indexes are based on some sort of ever-increasing key such as an IDENTITY, Date/Datetime, or Sequence. Remember that rebuilding indexes is a really expensive way of rebuilding stats and (for me anyway) I won't use REORGANIZE on about 99% of the indexes.

garryha wrote:I think I am going to not setup a 'general purpose' rebuild/reorg indexing job at the moment and continue to run the stats maintenace and any ad-hoc indexing mainatence that is needed. I am not sure that implementing a rebuild job would be a benefit as it could cause far more issues than problems it fixes.

That sounds like a great plan and is basically what I've been doing for about the last year with great success, especially for troublesome indexes that end up with inappropriately low page densities (especially in the most active areas of the indexes) and those that are causing a large number of page splits. That and checking/changing the settings that Grant pointed out above.

- This reply was modified 6 years ago by Jeff Moden.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 21, 2020 at 7:24 am

Thank you both for your help.

-

January 30, 2022 at 5:19 pm

Bonjour ,

1/svp est ce que c'est recommandé de changer de mode synchrone vers asynchrone avant de lancer la commande pour la maintenance des index ?

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d MAINTENANCE_SQL -Q "EXECUTE dbo.IndexOptimize @Databases = 'AVAILABILITY_GROUP_DATABASES, @FragmentationLow = NULL,@FragmentationMedium = 'INDEX_REORGANIZE,INDEX_REBUILD_ONLINE',@FragmentationHigh = 'INDEX_REBUILD_OFFLINE',@ FragmentationLevel1 = 5,@FragmentationLevel2 = 30,@TimeLimit = 3600,@LogToTable = 'N'" -b

-

January 31, 2022 at 6:56 am

I don't use availability groups but I can tell you that you're using what people recommend as supposed Microsoft "recommended" "Best Practices" for index maintenance. In fact, most of the whole world uses that 5/30 method. The trouble is that it actually isn't a "Best Practice", was never meant to be a "Best Practice", and is, in fact, a worst practice that perpetuates daily page splits, the resulting fragmentation, and is the cause of some really serious "morning after" blocking and log file usage and people haven't figured that out in over 2 decades. They actually updated the page in the MS documentation on the 20th of April, 2021 and removed all that 5/30 stuff. Unfortunately, they replaced it with something that most people won't do because of it's complexity.

A short term fix is to learn what REORGANIZE is really doing to your indexes and your log files. REORGANIZE is so bad that it's the actual reason (along with some really bad testing by people and a whole lot more supposed "Best Practices" that are also actually worst practices) for people thinking that Random GUIDs are the worst choice for index keys even though they operate in a manner that is the epitome of how everyone and their brother thinks an index should behave as.

For example, what would you say if I told you that you can insert 100,000 rows per day into a Random GUID clustered index for 58 straight days, with no index maintenance, and have less than 1% fragmentation until the 58th day. That 5.8 MILLION Random GUID rows being inserted with less than 1% fragmentation.

Here's how to do it and why people really need to sit down and science out their indexes instead of using that bloody 5/30 method and the willy-nilly use of REORGANZE. It only takes less than 90 minutes and you really need to watch it to begin to understand why all that is killing your indexes.

https://www.youtube.com/watch?v=rvZwMNJxqVo

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 9 posts - 1 through 9 (of 9 total)

You must be logged in to reply to this topic. Login to reply