With origins from the world of “Submarine ‘Dolphin’ Qualification” questions, an “Oolie” is a difficult question to answer, or the knowledge or fact needed to answer such a question, that may or may not pertain to one's duties but tests one's knowledge of a system or process to the limit.

The Coveted "Dolphin" Pin

The Coveted "Dolphin" PinIntroduction

There are a lot of different costs associated with building or rebuilding an index. The following is a “short list”. You can get much more detailed information at the URLs contained in the References section (Ref01 and Ref02) of this article.

Simple List of Space Costs

- If an index is larger than 128 extents (that’s just 1,024 pages or 8MB), the old index or heap will remain until the new index is built and committed. So, for starters, you need about twice the space of an index to rebuild the index or convert a heap to a Clustered Index Table.

- There’s also an approximately 20% space overhead needed for sorting. We’re up to 120% more space than the current size of the index.

- If we have the Enterprise Edition of SQL Server, we can do the index rebuild using the ONLINE option. That means that a temporary object (Temporary Mapping Index) will be built to handle any transactions while the index is being rebuilt and that also takes extra space, although I’ve not researched how much. Of course, ONLINE rebuilds are fully logged so your transaction log file could grow a lot, even if you’re in the Bulk _Logged or Simple Recovery Models.

- If either the current or assigned Fill Factor of the rebuild is less than the current average page density (avg_page_space_used_in_percent from sys.dm_db_index_physical_stats), new pages will need to be created to increase the empty space in the existing pages and those new pages will also contain empty space according to the Fill Factor.

Temporary Costs

Items 1 through 3 above are mostly temporary costs. Once the index has been rebuilt, the space used reverts to being free space in the data file or TempDB. If you already had enough free space to accommodate those requirements, then the data file won’t actually get larger. Of course, if you didn’t have the required space, then the file will get larger and you’ll end up with extra, but free, space in the file that can be used by other objects. Your log file could fill up and expand, but that’s also a temporary problem.

While these temporary costs are important, they’re not covered further in this article.

Permanent Costs

The extra space requirements of Item 4 (reducing Fill Factors) are, however, a permanent cost. The extra pages produced can only be used by the index that occupies them. There are several important reasons to calculate the size of the extra space required.

- If it’s a particularly big index or you plan on reducing the Fill Factor for a large number of indexes, the rebuild will increase the size and the time it takes to do backups, restores, and index maintenance. You might also need to increase the disk space of wherever you’re storing your backups.

- It could have a drastic effect on performance on machines with small amounts of memory because queries will now require more pages to be loaded into memory to do the same job it did before. This could drive other indexes out of memory which means they’d need to be retrieved from disk when they’re used again later. Repetitive retrieval from disk, even if the storage medium is SSDs, will cause performance issues. Of course, the retrieval from SSDs will be faster than from “spinning rust” but it still takes additional time compared to just using what is already in memory.

A Way to Justify Outages for Rebuilds

Conversely, if you have a badly fragmented index, there’s usually a good chance that the pages of the index have been split, which lowers the average page density, sometimes seriously. There’s also a good chance that rebuilding the index, using whatever the current Fill Factor is, will save some space. If you have large fragmented indexes or a large number of smaller badly fragmented indexes, you can sometimes save a huge amount of disk and memory space by doing index rebuilds instead of using REORGANIZE.

For example, I have a 146 GB Clustered Index where the active portion of the index was wildly fragmented. I determined that rebuilding the index would save almost 40 GB. I also ran the same analysis on the rest of the indexes and REORGANIZE just wasn’t recovering the space effectively. I calculated that rebuilding them would save an additional 40 GB in the database. At the time, the total of 80 GB in space savings represented a whopping 10% of an 800 GB database.

If you have the Standard Edition of SQL Server, then you know that you can’t do an ONLINE rebuild. If the database is used 24/7, you may have to justify an outage for index rebuilds and part of such a justification will be to identity how much disk space can actually be saved.

The important part here is that you need to produce some reasonably accurate numbers to include in your justification or management may not buy-in to the outage.

A More Specific Question

A common question on the forums and in real life is what to do about fragmentation on a given index. Right or wrong (heh… and it’s usually wrong), a common answer is to “Decrease the Fill Factor”. So let’s ask a more pointed question than the one asked in the title of this article. And, yes, I ask this question when I’m interviewing people that claim to be a “Performance Tuning Expert”.

You have a massive non-compressed, non-partitioned, non-LOB, row-store Clustered Index. Your index maintenance is currently setup to rebuild it using a Fill Factor of 100. You want to change it to a Fill Factor of 70. How much extra space will you need for the index?

If you answered “approximately 30% more” (100-70 = 30), you’d be seriously wrong. Let’s find out why.

A Simple Formula (or tl;dr version)

Cutting straight to the chase, here’s the simple formula to answer the question of “How much will it cost or save to rebuild that index”?

SavingsMB = (PageCount-(AvgPageDensity/FillFactor*PageCount))/128

If the number returned is positive, then it’s a savings. If the number returned is negative, then it’s a cost.

Explaining the Formula

Following the processing order of the formula, we first start with…

Calculating the “Growth Factor”

Not to be confused with the “Growth Factor” for data or transaction log files, we need to determine how much the index will grow or shrink that we can use as a multiplier in subsequent parts of the formula. Here’s the part of the formula that determines that “Growth Factor”.

AvgPageDensity/FillFactor

The AvgPageDensity comes from the avg_page_space_used_in_percent column of sys.dm_db_index_physical_stats. Think of this value as the “current effective Fill Factor”.

The FillFactor can be one of two things. If we’re rebuilding an existing index with the same Fill Factor, this value needs to come from the fill_factor column of sys.indexes. If we’re creating the index or changing the Fill Factor of an existing index, then this will be the Fill Factor that you’re using in either the CREATE INDEX (or ADD CONSTRAINT for adding PKs and the like) or ALTER INDEX statement to rebuild the index.

If we realize that this “Growth Factor” is actually a ratio and that ratios can also be expressed as percentages, we can now get the correct answer for the question where we rebuild a 100% Fill Factor index at 70%. And, remember, the “100.0” is NOT the current Fill Factor. It’s the current page density and we’re assuming from the question that it’s actually 100%, which is actually very rare.

100.0/70 = 1.429 (rounded to 3 decimal places just for readability)

1.429 -1 = 0.429 (subtracted 1 to remove the existing size)

0.429 * 100 = 42.9% (multiplied by 100 to convert the ratio to percent)

As you can see, “30%” wasn’t just the wrong answer but it was also quite a bit wrong by almost 13%. If you’re working with something like a 100 GB index, it means that you were off by almost 13 GB!

Calculate the Size of the Rebuilt Index in Pages

Next, we simply multiply the “Growth Factor” by the current size of the index in pages. That information comes from the page_count column of sys.dm_db_index_physical_stats and is expressed in the formula as *PageCount.

If we make the substitutions in the formula for changing an index from 100% to 70% and we assume that there are 1000 pages in the index, we end up with the following expression and answer;

100.0/70*1000 = 1429 (rounded for ease of viewing)

Conversely, if we have an index with an average page density of only 70% and we want to rebuild it to 100%, we end up with the following expression and answer:

70.0/100*1429 = 1000 (rounded for ease of viewing)

Calculate the Savings or Cost (in pages)

If we subtract what we’ve explored in the formula, so far, from the current page count, we end up with the total number of pages the rebuild will either save us or cost us.

Again, assuming that we’re changing an index with a 100% page density to a Fill Factor of 70% and assuming there are 1000 pages in the index, we end up with a formula and answer like this;

1000-(100.0/70*1000) = -429 (rounded for ease of reading)

The negative number means it’s a negative savings, which is actually a cost.

Once again, if we do the converse using the numbers we previously established…

1429-(70.0/100*1429) = 429 (rounded for ease of reading)

This positive number shows how much we saved in pages.

Sidebar: For you math whizzes out there, I totally agree. The parentheses are not actually necessary here because of the rules of algebraic hierarchy. I’ve included the parentheses only for additional clarity as to the order of execution and logical grouping for the various parts of the formula.

Convert the Savings or Cost in Pages to Mega Bytes

In the progression of the formula above, we ended up with either a savings of 429 pages for the 100 to 70 conversion or a cost of -429 pages in the 70 to 100 conversion. All we need to do now is divide those results by 128 to come up with the savings (+) or cost (-) in Mega Bytes.

Where does the “128” come from? 1 Mega Byte is equal to 1024^2 or 1,048,576 bytes. A page contains 8,192 bytes. If we divide 1,048,576 by 8,192, we end up with 128 pages per Mega Byte.

So, our two final answers are

For the conversion from 100 to 70:

(1000-(100.0/70*1000))/128 = -3.348 (cost in MB because it’s negative)

For the conversion from 70 to 100:

(1429-(70.0/100*1429))/128 = 3.349 (savings in MB because it’s positive)

The slight difference between the two is because the number 1429 was calculated and rounded for display purposes. The actual value is 1,428.5714285714285714285714285714 and now you can see why I rounded it.

Accuracy of the Formula

The formula gets really close to the truth but isn’t “spot-on” accurate. The reason is because, depending on the length and consistency of the lengths of rows, a table that’s rebuilt to a given “Assigned” Fill Factor may not end up with an average page density ("Effective" Fill Factor) that actually matches the “Assigned” Fill Factor.

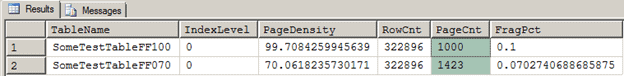

For example, in the following tests, the table that has been rebuilt to a 100% Fill Factor has an average page density of 99.7084259945639% and the table that has been rebuilt to a 70% Fill Factor has an average page density of 70.0618235730171%. On top of it all, almost all of the numbers are averages and I also don’t actually round the final calculated savings or cost of pages up to the nearest whole page.

In real life, my 146 GB table has some pretty wide rows with only 9 rows per page. The average width of the rows is 833 bytes. If we do the math, 833 bytes per row times 9 rows only equals 7,497 bytes, which is a far cry (only about 93% full) from having full pages and the simple formula doesn’t account for that. For example, we can calculate the savings of converting a 93% average page density to a 100% Fill Factor and it’ll never be accurate because it’s not actually possible to hit the 100% mark for page density for such an index. We could write a more complex formula to solve for such a thing but, for me, it was a mostly unnecessary complication because I determined long ago that large and odd indexes needed a more personal touch.

Proving the Formula

With the idea that “One good test result is worth a thousand expert opinions” (Wernher von Braun), Let’s run a test that will prove the formula is correct and accurate enough for what we need it to do. We’re going to build a table with 1,000 pages in the Leaf Level at a 100% Fill. You can do it with any number of pages but I wanted it to match the examples we’ve used.

Then, we’ll make a copy of that data in another table that has a Clustered Index of 70% and do a simple comparison of page counts to see if we came close to the numbers predicted by the formula.

The 100% Fill Factor Table

To summarize what this code does, it first checks to see if the test table exists and drops it if it does. Then it creates the table with a PK Clustered Index of 100%. Then, using the “Pseudo-Cursor” formed by a CROSS JOIN as a “Row Source” to replace a loop, it inserts the 322,896 rows necessary to have 1,000 pages in the Leaf Level of the index.

Here’s the code to build the 100% Fill Factor table.

/*********************************************************************

Create the table that has a 100% Fill Factor and populate it.

If everything goes perfectly, the LEAF Level of the Clustered Index

will contain 1000 pages on the mark.

*********************************************************************/

--====================================================================

-- If the test table already exists, drop it.

--====================================================================

IF OBJECT_ID('dbo.SomeTestTableFF100','U') IS NOT NULL

DROP TABLE dbo.SomeTestTableFF100

;

--====================================================================

-- Create the test table with a Fill Factor of 100 on the CI.

--====================================================================

CREATE TABLE dbo.SomeTestTableFF100

(

RowNum INT IDENTITY(1,1)

,SomeDT DATETIME

,SomeINT INT

CONSTRAINT PK_SomeTestTableFF100

PRIMARY KEY CLUSTERED (RowNum)

WITH (FILLFACTOR = 100)

)

;

--====================================================================

-- Populate the test table.

--====================================================================

--===== Do the inserts using a “Pseudo-Cursor as the row source.

INSERT INTO dbo.SomeTestTableFF100 WITH (TABLOCK)

(SomeDT,SomeINT)

SELECT TOP (322896)

SomeDate = RAND(CHECKSUM(NEWID()))

* DATEDIFF(dd,'2000','2020')

+ DATEADD(dd,0,'2000')

,SomeInt = ABS(CHECKSUM(NEWID())%100)+1

FROM sys.all_columns ac1

CROSS JOIN sys.all_columns ac2

;

The 70% Fill Factor Table

As we did in the 100% Fill Factor table, we first check to see if the table exists and drop it if it does. Then we create the table. The ONLY difference here is that the PK Clustered Index has been assigned a 70% Fill Factor instead of a 100% Fill Factor.

Then we copy the data from the 100% Fill Factor table into the 70% Fill Factor table. This is so no one can claim that the data made a difference even though we’re using fixed-width data-types.

Sidebar: We’re using the trick of doing a very special “First Insert” technique that, contrary to popular belief, DOES actually follow the Fill Factor as surely as if we had rebuilt the index That trick is an “SQL Oolie” itself. Please see the article titled “Some T-SQL INSERTs DO Follow the Fill Factor! (SQL Oolie)” for more information on how and why that trick works.

Here’s the code to build the 70% Fill Factor table.

/*********************************************************************

Create the table that has a 70% Fill Factor and populate it with the

data from the table that has a 100% Fill Factor. Since most people

think we're only adding an extra 30% to the table, they think it

should only expand to 1300 pages, which is incorrect.

*********************************************************************/

--====================================================================

-- If the test table already exists, drop it.

--====================================================================

IF OBJECT_ID('dbo.SomeTestTableFF070','U') IS NOT NULL

DROP TABLE dbo.SomeTestTableFF070

;

--====================================================================

-- Create the test table with a Fill Factor of 70 on the CI.

--====================================================================

CREATE TABLE dbo.SomeTestTableFF070

(

RowNum INT IDENTITY(1,1)

,SomeDT DATETIME

,SomeINT INT

CONSTRAINT PK_SomeTestTableFF070

PRIMARY KEY CLUSTERED (RowNum)

WITH (FILLFACTOR = 70)

)

;

--===== Do the inserts using a “Pseudo-Cursor as the row source.

-- This uses the "First INSERT" “SQL Oolie” technique to make

-- the first insert to the table follow the 70% Fill Factor.

INSERT INTO dbo.SomeTestTableFF070 WITH (TABLOCK)

(SomeDT,SomeINT)

SELECT SomeDT,SomeINT

FROM dbo.SomeTestTableFF100

;

Checking the Page Counts

The following code checks the Page Counts and the Page Density (“Effective” Fill Factor) of each of the two indexes. It also proves that other “SQL Oolie” mentioned in the sidebar does actually work.

/*********************************************************************

This code does the comparison of page counts that we need to prove

that the formula of

EstNewPageCount = 100.0/NewFillFactor*PageCountAt100Percent

provides a fairly accurrate estimate.

Note that we use the "DETAILED" parameter here for accurate counts

and that we filter to only display the Leaf Level of each index.

*********************************************************************/

SELECT TableName = OBJECT_NAME(object_id)

,IndexLevel = index_level

,PageDensity = avg_page_space_used_in_percent

,RowCnt = record_count

,PageCnt = page_count

,FragPct = avg_fragmentation_in_percent

FROM sys.dm_db_index_physical_stats

(DB_ID(),OBJECT_ID('dbo.SomeTestTableFF100','U'),1,NULL,'DETAILED')

WHERE index_level = 0 --To display only the LEAF Level of the index

UNION ALL

SELECT TableName = OBJECT_NAME(object_id)

,IndexLevel = index_level

,PageDensity = avg_page_space_used_in_percent

,RowCnt = record_count

,PageCnt = page_count

,FragPct = avg_fragmentation_in_percent

FROM sys.dm_db_index_physical_stats

(DB_ID(),OBJECT_ID('dbo.SomeTestTableFF070','U'),1,NULL,'DETAILED')

WHERE index_level = 0 --To display only the LEAF Level of the index

;

Here are the results. You can see in the highlighted column that the formula worked. The reason why we didn’t come up with 1429 for a PageCnt in the results below is because of things like not having 100% full pages as we did in the example and the 70% rows fill just a little more than 70% because they fill until a given row meets or beats the Fill Factor. If you do the math on the page counts, we actually used 42.3% more memory and disk space and that’s still a lot more than the 30% that a lot of people think.

Plugging the actual numbers from the 100% table into page count part of the formula, we get

(99.7084259945639/70*1000) = 1424.4060856366271428571428571429

…and discounting the fact that the values in the row slightly exceeded the “Assigned” Fill Factor, that’s certainly close enough and in the same order of magnitude (the 1’s place in the number). If we were able to predetermine the overage on the 70% Fill Factor and plug it into the formula…

(99.7084259945639/70.0618235730171*1000) = 1423.1491689714538305485933935112

… we see that we actually do get extremely close to the 1423 pages that the test actually came out to.

If we finish with the rest of the formula, we see that the conversion from the 100% table to the 70% table will cost us 3.315 MB to accomplish. Remember, the negative sign in the result indicates a cost.

(1000-(99.7084259945639/70*1000))/128 = -3.3156725440361495535714285714286

Usage Example

Here’s a snippet of code that uses the formula. The details are in the comments in the code. And, apologies for the line wraps. It didn’t make sense to complicate the code by splitting the formulas up just to fit them on this page. It’ll look much better when you copy and paste it into SSMS.

/**************************************************************************************************

Purpose:

Display some things about indexes that we’d normally like to know plus it calculates how much

extra space would be saved or used after a REBUILD for the current Fill Factor and for the 70, 80,

90, and 100% Fill Factors.

Progammers Notes:

1. Keep in mind that these are all based on averages and so should be considered as approximations.

Also, indexes with very wide rows where there are less than 20 or so rows per page can cause

less accurate approximations.

2. It would be quite easy to turn this into a system stored procedure that could be executed from

any database.

3. Note that this has only been tested for non-partitioned rowstore indexes.

Revision History:

Rev 00 - 21 Jul 2019 - Jeff Moden

- Borrow parts of a much larger stored procedure that I use.

**************************************************************************************************/

WITH cteStats AS

(--===== This CTE is used mostly to rename some of the very long names in the DMF.

SELECT DBName = DB_NAME()

,ObjectID = ips.object_id

,IndexID = ips.index_id

,FragPct = CONVERT(INT,ips.avg_fragmentation_in_percent)

,AvgFragSize = avg_fragment_size_in_pages

,PageDensity = ips.avg_page_space_used_in_percent

,PageCnt = ips.page_count

,RowCnt = ips.record_count

,CurSizeMB = ips.page_count/128 --Integer math produces whole numbers here.

FROM sys.dm_db_index_physical_stats (DB_ID(),NULL,NULL,NULL,'SAMPLED') ips

WHERE ips.index_id > 0 --NOT a HEAP

AND ips.page_count > 128 --This is 1 MB or 16 Extents and could be parameterized.

)

SELECT stats.DBName

,SchemaName = OBJECT_SCHEMA_NAME(stats.ObjectID)

,ObjectName = OBJECT_NAME(stats.ObjectID)

,stats.ObjectID

,IndexName = idx.name

,stats.IndexID

,CurFillFactor = idx.fill_factor

,stats.FragPct

,stats.AvgFragSize

,stats.PageDensity

,stats.PageCnt

,stats.RowCnt

,stats.CurSizeMB

,SavingsMBCur = CONVERT(INT,(stats.PageCnt-(stats.PageDensity/ISNULL(NULLIF(idx.fill_factor,0),100)*stats.PageCnt))/128.0)

,SavingsMB070 = CONVERT(INT,(stats.PageCnt-(stats.PageDensity/ 70*stats.PageCnt))/128.0)

,SavingsMB080 = CONVERT(INT,(stats.PageCnt-(stats.PageDensity/ 80*stats.PageCnt))/128.0)

,SavingsMB090 = CONVERT(INT,(stats.PageCnt-(stats.PageDensity/ 90*stats.PageCnt))/128.0)

,SavingsMB100 = CONVERT(INT,(stats.PageCnt-(stats.PageDensity/100*stats.PageCnt))/128.0)

FROM cteStats stats

JOIN sys.indexes idx

ON stats.ObjectID = idx.object_id

AND stats.IndexID = idx.index_id

;

Conclusion

I hope you take a couple of things from this article…

The first is, of course, is having to do with the subject of the article… It takes a fair bit more disk and memory space to reduce the Fill Factor than a lot of people may expect, especially when it comes to large indexes or a lot of smaller indexes. Hopefully, you now know how to make a “close enough” order-of-magnitude estimate.

The other thing is to not trust things that are new to you. Always do a test to prove that something is true especially if it’s a formula or technique. One of my favorite quotes is attributed to Wernher von Braun who reportedly said, “One good test result is worth a thousand expert opinions”.

Last but not least, I used a different “SQL Oolie” when I populated the 70% table and the INSERT followed the Fill Factor thanks to a hidden “offline index build” that SQL Server can do. If you haven’t seen the article on that one, it’s worth a read. It’s located at the URL for Ref03 in the References section below.

Thanks for listening, folks.

References:

Ref01: https://docs.microsoft.com/en-us/sql/relational-databases/indexes/how-online-index-operations-work

Ref03: https://www.sqlservercentral.com/articles/some-t-sql-inserts-do-follow-the-fill-factor-sql-oolie

© Copyright by Jeff Moden - 23 July 2019 - All Rights Reserved