Today I am starting a new series of short posts designed to help administrators of virtualized SQL Servers stretch their thinking a bit and see virtualization in a different light. I call it “Smart Moves with SQL Server VMs”.

For those who are not VM admins, virtualization usually means just P2V’ing a system and then forgetting about it. Once virtual, the world can change (for the better, I promise) in ways not usually considered, and you, the data manager, can dramatically benefit from embracing the new technology.

The first post in this series discusses a trick that uses virtual machine proximity to speed up large data movements, such as nightly ETL processes, application server data handling, or database backups.

First, we’ll be using a free utility called iperf to demonstrate the raw performance differences here. You can read more about how to use it at this blog post.

So the scenario is as follows. You have two servers: a SQL Server VM that runs a nightly ETL process, and an application server VM from which you pull large quantities of data. The traffic travels over the physical network.

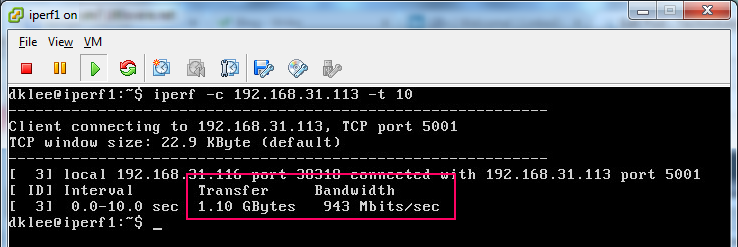

A quick iperf test can show the total possible network bandwidth between the two servers.

This test shows that we’ve got just about the maximum possible bandwidth between the two servers on this 1 Gb network. At this point, your data transfer is most likely limited by this bandwidth bottleneck.

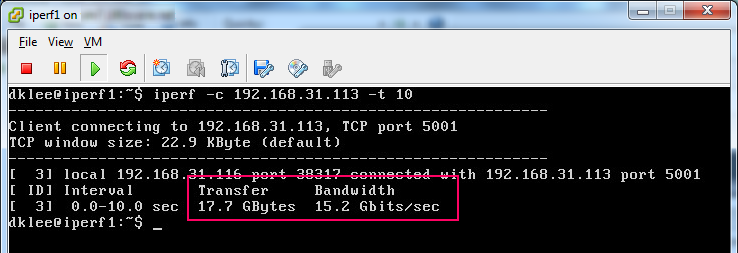

Now, what if you were to place both of these VMs on the same physical machine? If the networking is set up properly, the situation can change. You can rerun the same iperf test and see the following results.

We get a performance boost of more than 15x in this scenario.

Your network traffic now stays inside the physical machine, passing through the hypervisor’s virtual switch without ever touching the physical network. All of it is transparent to SQL Server, the operating system, and the application code. There are no code changes and no funny tricks with Windows Server networking... just a very dramatic performance boost.

Think about this performance difference for a moment. With the physical network out of the picture, your large data movement bottleneck could now be the speed of reading from storage, or it could be the CPU scalability of the ETL process itself. Both of these bottlenecks have much higher thresholds than the physical network’s throughput.
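One way to picture the shifting bottleneck is to treat the data movement as a chain of stages where the slowest stage sets the pace. The sketch below is only an illustration: every throughput figure is a hypothetical placeholder (not a measurement from this post), and the `bottleneck` helper is a name made up for the example.

```python
def bottleneck(stage_throughput_mb_per_sec: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage; the pipeline moves data no faster than that."""
    name = min(stage_throughput_mb_per_sec, key=stage_throughput_mb_per_sec.get)
    return name, stage_throughput_mb_per_sec[name]


# All figures are hypothetical placeholders in MB/s; measure your own.
over_physical_network = {"network": 118.0, "storage_read": 600.0, "etl_cpu": 400.0}

# Same stages, with the network assumed to be 15x faster once co-located.
on_same_host = {**over_physical_network, "network": 118.0 * 15}

print(bottleneck(over_physical_network))
print(bottleneck(on_same_host))
```

With these placeholder numbers the network is the limiting stage in the first case, and the ETL CPU becomes the limiting stage once the VMs share a host.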

Your large-volume data transfer process is almost certain to see a performance improvement, which cuts both the run time of the process and the load it places on the physical network.
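To get a feel for how much run time is at stake, here is a rough back-of-the-envelope sketch. The 500 GB nightly volume is a hypothetical figure, and the calculation assumes the link is the only bottleneck and ignores protocol overhead, so treat the result as a best case rather than a prediction.

```python
def transfer_seconds(data_gigabytes: float, link_gigabits_per_sec: float) -> float:
    """Ideal time to move data over a link, ignoring protocol overhead."""
    data_gigabits = data_gigabytes * 8  # 8 bits per byte
    return data_gigabits / link_gigabits_per_sec


# Hypothetical nightly ETL volume; substitute your own number.
nightly_gb = 500.0

physical = transfer_seconds(nightly_gb, 1.0)    # 1 Gb physical network
same_host = transfer_seconds(nightly_gb, 15.0)  # assumes the 15x boost seen in the test

print(f"1 Gb network: {physical / 60:.1f} minutes")
print(f"Same host:    {same_host / 60:.1f} minutes")
```

Under these assumptions the transfer drops from roughly 67 minutes to under 5 minutes, at which point storage or CPU is far more likely to be the real limit.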

The best part is that both VMware vSphere and Microsoft Hyper-V have PowerShell interfaces into the hypervisors, and scripting a command to co-locate these two VMs on the same host is a relatively trivial task. All you need is the appropriate permissions from the virtualization admins. The move can be scripted to run as a prerequisite step in the ETL processes or jobs that perform these tasks.
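As a rough sketch of what that prerequisite step might decide, the Python below checks whether two VMs already share a host and, if not, builds the PowerCLI command that would move one next to the other. It only prints the command and does not run it. The VM names, host names, and the `plan_colocation` helper are hypothetical, and while `Move-VM`, `Get-VM`, and `Get-VMHost` are PowerCLI cmdlets, the exact parameters should be checked against your PowerCLI version (Hyper-V uses a different `Move-VM` syntax).

```python
from typing import Optional


def plan_colocation(placement: dict[str, str], vm_to_move: str, anchor_vm: str) -> Optional[str]:
    """Return a PowerCLI command that moves vm_to_move onto anchor_vm's host,
    or None if both VMs already share a host."""
    target_host = placement[anchor_vm]
    if placement[vm_to_move] == target_host:
        return None
    return (
        f"Move-VM -VM (Get-VM -Name '{vm_to_move}') "
        f"-Destination (Get-VMHost -Name '{target_host}')"
    )


# Hypothetical inventory: VM name -> host it currently runs on.
placement = {"SQL-ETL01": "esx-host-a", "APP01": "esx-host-b"}

command = plan_colocation(placement, vm_to_move="APP01", anchor_vm="SQL-ETL01")
print(command or "VMs already share a host; nothing to do.")
```

In practice the inventory would come from the hypervisor rather than a hard-coded dictionary, and the job would verify the move completed before starting the data transfer.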

Go forth and improve performance! More tips and tricks like this are coming in the following weeks!