Frequently I am asked how to properly use SQL Server Integration Services to handle ragged flat files, an example of which is shown below. In this small sample file, you can see that we are dealing with medical data that has an inconsistent format. As shown, the rows in the pipe-delimited file can have as few as seven and as many as eleven columns.

Although you and I can look at this file and interpret its meaning, previous versions of SSIS cannot (see note below regarding SSIS in SQL Server 2012). You can see that we’re not only missing the data but the delimiters as well, which is the root of the problem. This type of format where delimiters are simply left off when values at the end of the row are missing or NULL is, unfortunately, a relative common event that ETL developers must address.

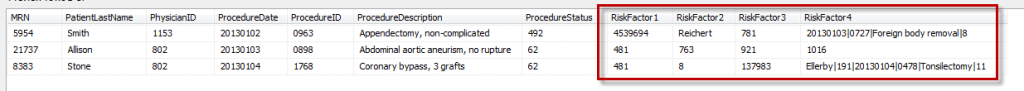

With a file like this, SSIS will continue to consume data for each field until it find the specified field delimiter – even if that means that the data is “gobbled up” from the next line in the file! Let’s assume that the outputs on my Flat File Source component define 11 fields (the largest number of fields found in this file snippet). When SSIS processes the first row of data, it’s going to get to the end of that row still expecting to find a pipe delimiter to indicate the end of that field. Rather than assuming the end of the line of data (represented by an unprintable newline character) is actually the end of the column, the Flat File Source component will continue to consume data until either it finds the specified delimiter or the length of the data consumed exceeds the width specified for that column. In this example, the eighth field would not be the literal “453” at the end of the line in the file, but would contain a newline character followed by the first value of the following line of data. Then the next field, which should be NULL, would contain “Reichert”, followed by the incorrect value of “781” and so forth. As shown below, this slip would cascade through the remainder of the file.

In a case like this, the very best you can hope for is that the data length would overflow the allowed field width, or a data type conflict would cause an error and fail the package. In a worst-case scenario, SSIS could actually consume the entire file with this misalignment as shown above, which may not raise an error in the package but would almost certainly cause downstream issues in the destination systems.

Scripting to the rescue!

Never fear – there is a solution to this problem. By using just a bit of code in SSIS, we can easily get around this issue and process the ragged flat file. The methodology we’ll use is as follows:

- Using the Flat File Source, consume each line of text as one big string (rather than breaking it apart into individual fields)

- Using the Script Component, parse each line of the file. Dynamically populate the output fields with the data in each line of the file, and assign NULLs to those fields where no value is present.

- Send the cleansed output to the destination table

Sounds easy enough, right? Let’s go through this step by step.

Process each line of data

As mentioned, we’re going to trick our Flat File Source component into processing this file by forcing it to handle each line of data as if it were a single text field. As shown below, I’ve configured a single output field, named [Column 0], as a field of type String with a width of 2000 characters. This will include everything on the line of data, including the pipe delimiters, in the output.

Next, we’ll add a script component into the data flow pane. We’ll configure the input of the script component to use the single field generated by the flat file source above.

We also need to add all of the output fields manually, which we will populate from within the script. As shown below, I’ve already added the eleven fields to the script component output, configuring each one with the appropriate data type and length.

Now onto the fun part: The Code. Within the script, our methodology will be as follows:

- Break apart the big text string into its individual data fields, splitting on the specified delimiter (in this case, the pipe symbol).

- If there are mandatory fields (i.e., any fields which must be present for a valid row of data), assign these to the appropriate output columns.

- For the optional output columns, process the values retrieved from the input string, in order, assigning each one to the next output column until no other input values exist.

First things first – let’s grab and shred the input text. As shown below, I’m going to split the input on the pipe delimiter, resulting in an array of strings, each with a single value.

| // Break apart the input string into its atomic elements string[] allValues = Row.Column0.Split(‘|’); |

Next, we’ll work through all of the fields that are expected to be present in all valid rows of data. In situations where there are mandatory fields which should always be present, no dynamic processing is required on those values, so we can simply assign them in order. Note that I’ve used an incrementing counter variable to work through the array of input data – this is simply a shortcut step to allow me to copy/paste the same code rather than typing the cardinal numbers for each element.

| // Counter int i = 0;// Address the values known to be present in every row Row.MRN = allValues; Row.PatientLastName = allValues; Row.PhysicianID = allValues; Row.ProcedureDate = allValues; Row.ProcedureID = allValues; Row.ProcedureDescription = allValues; Row.ProcedureStatus = allValues; |

Now we move on to the dynamic portion of the processing. From this point, all columns are optional, so we need to go through the remaining values, one by one, and assign them to the final four output columns (all related to risk factor codes in this example). As shown in the code snippet below, I’m continuing to work through the string array created earlier process all of the values. As long as values remain in the array, we’ll continue assigning those values to the next risk factor code in order; when we run out of values, all remaining risk factor columns will be populated with a NULL string.

| // The next four values are variable. We may have zero to four of these, so // we’ll add them dynamically as they appear Row.RiskFactor1 = allValues.Length > i ? allValues : null; Row.RiskFactor2 = allValues.Length > i ? allValues : null; Row.RiskFactor3 = allValues.Length > i ? allValues : null; Row.RiskFactor4 = allValues.Length > i ? allValues : null; |

We only have four possible additional columns in this scenario, but this pattern can easily be applied to similar scenarios with more than just a few optional elements.

Consume the cleansed data

Finally, we just need to route the cleansed data out to our destination. As shown below, we’re sending the processed data to a SQL Server table.

SQL Server 2012 changes

As mentioned earlier, this is a design pattern that I recommend for versions of SSIS prior to 2012. If you are using SSIS with SQL 2012, this workaround is unnecessary: the Flat File Source was updated in this latest version so that it allows easy processing of these types of ragged files.

Conclusion

Ragged flat file processing can be a pain when working with older versions of SQL Server Integration Services. This post demonstrates how, with just a few lines of code, you can avoid issues with ragged files by dynamically “peeling off” values and assigning them to the appropriate outputs.

Note: The package and sample data file I used for this demonstration are attached here.