Introduction

Recently, I have found that my Microsoft SQL Server skills may not be sufficiently marketable. Essentially, my Microsoft expertise over the past three to four years may no longer match up with the better jobs in Data Analytics because the marketplace has evolved so much.

As a result, I now ask myself: What can I do to fill the gap and build more marketable skills? What are the latest Silicon Valley database trends? How do they apply to me, as a Microsoft database engineer?

I have been working with Microsoft database technology for more than a decade. I always wanted to work with the large databases, and be a data architect in a strong, dynamic company. Keeping that in mind, I have been applying to job postings that include both “SQL Server database" as a main technical skill and "NoSQL" database as a “desired” skill. Surprisingly, I was receiving no responses!

My conclusion: The knowledge of NoSQL databases is now a requirement, not an option!

But, I cannot possibly learn every NoSQL database those databases are too numerous. So, what database is the most popular and relevant to me as a database engineer? I have concluded that most job postings frequently require familiarity with the Hadoop ecosystem. So, where and how should I start my learning journey? My goal is to learn how to use Hadoop components.

Which version of Hadoop should I learn?

First, Hadoop2 is the latest version of Hadoop, with many important updates. That means that a lot of Hadoop books published before 2014 may be obsolete, since they describe the prior versions of Hadoop.

Another problem is that many books either talk about Hadoop from the business perspective, or from software engineer prospective. Moreover, these books utilize software languages that are unfamiliar to me, a Microsoft developer, such as Python, Pig Latin and Java. I find all of this to be somewhat overwhelming, as my goal is to learn how to use Hadoop components and not how their capabilities may be extended or customized.

After studying many components of the Hadoop ecosystem, I have found out that the following have the most relevance to me a Microsoft database engineer/DBA.

- Most commonly, Hadoop is installed on Linux. (Even though Microsoft has its own version of Hadoop on Windows, called HDInsight, I have not found job requirements that require the knowledge of HDInsight.)

- Hadoop stores data in its own file system called HDFS.

- To move data between Microsoft SQL server and Hadoop, there is a component known as Sqoop.

- To quickly move web server log files from specific directory (spool) into Hadoop, there is a component known as Flume.

- And finally, to use SQL statements with Hadoop, there is a component known as Hive. Hive is built on a top of HDFS and internally translates SQL statements into MapReduce jobs. (MapReduce is the heart of Hadoop. It permits massive scalability across hundreds or thousands of servers in a Hadoop cluster.)

To summarize, the following components of Hadoop have attracted my attention as the simplest to understand while still getting the job done: HDFS, Flume, Sqoop and Hive.

Installation tips and learning resources

I have no problems installing these Hadoop components on my home desktop PC with Windows 8.1, 500GB HDD and 16 GB of RAM. In order to install Linux, I downloaded a free virtual machine called VMWarePlayer 7 from vmware.com.

The easiest way to quickly install the simplest Hadoop implementation is to download it from Cloudera.com. ”Cloudera QuickStart VM” provides the complete image of VM (Virtual Machine) with preinstalled Linux (CentOS) and a full set of the Hadoop components.

Once the “Cloudera QuickStart VM” with Hadoop was installed, I started running the sample code and other meaningful examples from the books set forth below.

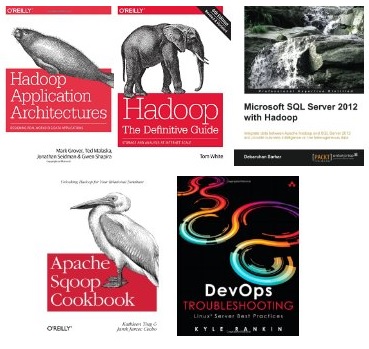

Here is the list of 5 books that I found to be most useful (all of them can be purchased on amazon.com):

- Linux: DevOps Troubleshooting: Linux Server Best Practices by Kyle Rankin - Provides very practical examples of how to monitor and troubleshoot Linux.

- Hadoop: The Definitive Guide 4th Edition by Tom White - Overview of Hadoop2 ecosystem, including Flume.

- Hadoop Application Architectures by Mark Grover - How, when and where’ to use Hadoop2 components.

- Apache Sqoop Cookbook by Kathleen Ting - Provides Sqoop overview with examples.

- Microsoft SQL Server 2012 with Hadoop by Debarchan Sarkar - Provides the best overview of Hive sql in comparison with T-SQL. It also includes practical Sqoop examples.

I did not read the above books from cover to cover; instead I reviewed the most relevant chapters.

Conclusion

The entire exercise helped me to understand the following:

- how Hadoop works internally on conceptual level

- how Hadoop may complement Microsoft SQL Server functionality

- which Hadoop component is used to import/export data ( Sqoop, Flume)

- how to use Hive and HiveQL, the Hadoop version of sql

I highly recommend this learning path to other Microsoft SQL Server developers and DBAs.