| Featured Contents |

| Question of the Day |

| The Voice of the DBA |

AI Thoughts on the Build Keynote

If you haven't seen the Build 2023 keynote, it's, well, interesting. At a surface level, it's focused on AI and delivers some demos that many of us might find to be useful and intriguing. I didn't attend the event (or watch it live), but I did see it a bit later and I made some notes, pausing the 30-minute talk a few times to think about what I'd seen.

The opening lightly glosses over some of the AI enhancements to development tools and the environments that can be created quickly in GitHub or Azure. Some of us will like those, and maybe they'll grow on me, but I tend to prefer a development environment on my own hardware, where I have unlimited compute power at a fixed cost.

The first big announcement is then showing CoPilot technology, essentially some ChatGPT-like abilities, embedded into Windows 11. The demo shows asking Windows where settings are, with the response including buttons to take actions, like setting dark mode. Minorly useful, though I think Windows search works fine. I can type "env" and get the "edit environment variables" in the results. I still have to click through to change things, but this doesn't seem like a better use of AI, especially if I need to type "set dark mode" instead of "dark".

To be fair, the demo has the user asking for ways to adjust the system to get more work done. The suggestions are for dark mode and a focus timer. I knew about the former, but not the latter. Perhaps being able to ask for general assistance with tasks is useful as there are likely lots of features I know nothing about and wouldn't even think to look for.

There is also the option to drop a document, like a PDF, in the chat and Windows asks if the user wants the system to "explain", "rewrite", or "summarize" the document. The user clicks summarize and gets a summary of the document. There is also a demo with plugins that developers can write for Bing, such as one that uses a legal package to make a change to a document.
While lawyers might be worried about their practices (or paralegals about job prospects), I'm more worried about a fundamental problem that many of us data professionals have seen in the past: garbage in, garbage out. In this case, if the AI model isn't well-trained, can I really trust it to summarize a PDF or change a legal document? How can I tell if it's wrong, or slightly off? In some sense, this reminds me of a high school report. It might summarize some text at an A level, or a D level. It's up to me to judge that, and I can't assume the results are good or bad.

The important thing to keep in mind, however, is that we aren't in that place with AI. We can't just trust the AI. We are in a time when AI is an assistant, where it can help us complete a task or get something done a little quicker. We are still responsible. We still have to verify and do some work, but if the CoPilot can automatically launch Jira and navigate to a ticket, or attach a document and create a short message to our team, that saves us time. It saves us tedium. It can make our jobs easier. We are still needed, but we don't do all the heavy lifting.

I do worry about some of the opportunities for plugins that developers will write strictly to monetize their efforts. If I want a shopping list, I don't want it to go to Instacart. I want a list I can use. I realize that doesn't necessarily make Microsoft or a developer any money, but not all the tasks and advances are about profit. Or at least, I hope they all aren't. I hope some are here to just make the world better. For a quick view of what that could be, watch the keynote closing video.

Steve Jones - SSC Editor

Join the debate, and respond to today's editorial on the forums

| Featured Contents |

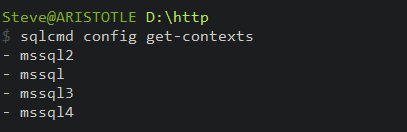

How can I determine which context is active?
