Introduction

One of the biggest performance gains built into SQL Server is the stored

procedure. SQL Server has the ability to cache the execution plan for

re-use at a later time. While SQL Server 2000 has improved tremendously in

caching execution plans for ad-hoc SQL queries, it still handles stored

procedures best because they happen to be database objects that can be referred

to definitively. However, improper techniques in building stored procedures can

cause these cached execution plans to be missed on the initial lookups.

Improper techniques can even cause multiple recompilations of stored procedures,

thereby causing unexpected performance loss. In this article we'll look at

the following:

- The procedure cache

- DBCC commands useful for testing

- syscacheobjects system table

- Setting up Profiler to capture execution plan

re-use

- Typical stored procedure compiling and execution

plan re-use

- Problems with using sp_

- Issues with not specifying the owner

- Recompiles due to coding methods

The Procedure Cache

The procedure cache is part of the larger memory pool for SQL Server.

Starting with SQL Server 7.0, individual parts of the memory pool are

dynamically controlled by SQL Server with no documented option for the DBA to

specifically configure a percentage of the memory pool just for the procedure

cache. SQL Server will do its best to balance all factors in order to

ensure a high buffer cache hit ratio for data as well as a high procedure plan

cache hit ratio. Obviously the more memory available to SQL Server for the

given system the larger the memory pool and correspondingly, the larger the

individual caches. The various parts of the SQL Server Memory Pool are

listed in Figure 1 below.

Figure 1: SQL Server Memory Pool Components

SQL Server, if configured to dynamically handle memory, will

interact with the operating system to request memory as needed and also will

release memory as other processes require more. This can and will affect

the various caches. For instance, I had run a couple of stored procedures

on my workstation's install of SQL Server and verified their execution plans

were cached and being reused. I needed to start up a memory intensive

application and after I had, I went back to check the cache. As expected,

my procedure execution plans had dropped out (their cost was extremely

small). Executing them again, I saw the Cache Miss events which confirmed

the execution plans previously cached were no longer available. This

demonstrates the need for adequate memory on a SQL Server system.

DBCC DROPCLEANBUFFERS and DBCC FREEPROCCACHE

In a production system, it's generally not a good idea to tinker with the

buffers and cache because one might cause performance issues (standard Microsoft

warning). However, to return the buffers and the cache to an "upon

startup" environment, Microsoft has given us two DBCC commands so that we

don't have to stop and restart the SQL Server service each time we want to test

without the data and/or execution plans being cached, because the two different

states could result in a large performance difference. Let's look at each

of them:

DBCC DROPCLEANBUFFERS

DBCC DROPCLEANBUFFERS serves to empty the data cache. Any data loaded

into the buffer cache due to the prior execution of a query is removed.

This DBCC command permissions default to members of the sysadmin group.

While not 100% relevant to our discussion of the procedure cache (we're more

concerned with the execution plan), I present it for completeness.

DBCC FREEPROCCACHE

A DBCC command that was formerly undocumented, this DBCC command serves to

empty the procedure cache. If observing through SQL Profiler, one can

watch the Cache Remove events occur as DBCC FREEPROCCACHE goes to work. We

use this command to return to the un-cached state. There can be a

tremendous performance difference between the first and future times a stored

procedure is run merely because of the compilation that must take place prior to

execution.

syscacheobjects

The system table syscacheobjects is where

information on cached execution plans is stored. The column listings are

in Books Online, so I'll only focus on a few of them of particular interest:

| Column Name | Description |

| cacheobjtype | This is the type of object in the cache. We're interested in the following with respect to user stored procedures:

|

| objtype | This is the type of object. Since we're speaking of stored procedures, we're looking for Proc entries. |

| objid | This corresponds to the id field in sysobjects (except in the case of ad hoc or prepared SQL queries, where it is an internally generated number) from the database containing the stored procedure. We can use this field to match up an entry in the aforementioned sysobjects with one of our stored procedures. |

| dbid | Since objid refers to the id in sysobjects, we need to be sure we're looking at the sysobjects table in the correct database. This is where dbid comes in. It corresponds with the dbid from sysdatabases. |

| uid | This indicates the owner or user who executed the object. It matches the uid in the sysusers table for the respective database. When talking about stored procedures, it corresponds with the owner. If we're looking at ad hoc queries, the uid is either the value -2 or the user id of the user executing the query (the creator). A -2 value indicates that the plan can be used by anyone and is not dependent on name resolution. |

| sql | The actual text (up to 128 characters) of the SQL command executed or simply the name of the stored procedure (without parameters). |

By querying against this system table we can verify that our plans are

getting cached. The actual execution plan that's stored in cache isn't

visible through any documented means, but this table serves to help us not only find

out what's being cached, but also understand a bit more about how caching

works. For the most part, however, we'll rely on Profiler to tell us how

we're doing with respect to our execution plans.

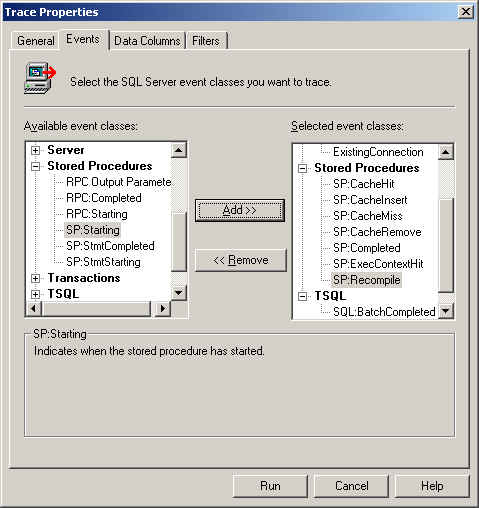

Profiler Template Configuration

With SQL Server 2000, Profiler comes with events geared to allow us to understand more about caching and compilation of our stored procedures.

Starting with the default template, we can simply add the SP:Cache events,

SP:ExecContextHit, and SP:Recompile events. Because I'm operating locally

to my SQL Server install, I've removed the RPC: Completed event and instead

added the SP: Completed event. If we're looking to see exactly when cache

miss and recompile occur, we might also add SP:Starting and the two SP:Stmt

events. When we consider undesired recompiles later, those events will

need to be monitored. However, for basic monitoring, a template similar to

this is sufficient:

This should let us know if a particular stored procedure's execution plan

cannot be found (though the stored procedure has been executed and the plan

should be cached), or if because of various factors a recompile is in order.

Compilation and Execution Plans

When any stored procedure is called for the first time, it's execution plan

must be generated. This is what is meant by compiling an execution plan,

and while it's not the same as compiling a bit of VB code or even just-in-time

compilation that can occur in other languages such as Java, SQL Server is taking

our stored procedure and building an intermediate stage, the execution

plan. This execution plan tells what indexes to use, what steps to break

out in parallel, etc. Going through this process consumes resources, so if

we can take an already existing execution plan "off the shelf" and

re-use it, we'll save resources better used elsewhere.

The first time a stored procedure is called, SQL Server does a check in the

procedure cache (at a high level) to see if there is an already existing

execution plan. Since it is the first time the stored procedure has been

called, no match will be found. SQL Server will then prepare for the

compilation process and issue a [COMPILE] lock on the stored procedure.

It'll then do a more extensive search of the procedure cache, trying to match up

based on an object id. Since it's the first time, this too will result in

a miss and SQL Server will proceed to compile a new execution plan, place it in

the procedure cache, and carry out the execution plan.

But what about the second time around? When a stored procedure is

called a second time, the procedure cache is again checked for a match just as

we saw the first time. Again, this is a relatively high-level check, and

it'll get a cache hit if the stored procedure can quickly be matched up based

on database and owner. More on this a bit later. In any case, if it

finds an execution plan it can use without recompiling the stored procedure and

regenerating a new execution plan, it will do so. If the high level check

should fail to return a match, SQL Server will begin the compilation process by

once again obtaining the [COMPILE] lock. It'll then begin that more

extensive search, which, if there is a matching execution plan that for whatever

reason didn't get picked up in the first search, should now be found.

It'll take that execution plan and carry it out. If internal SQL Server

procedures (the lazywriter) have "aged" the execution plan, the

"cost" of the execution plan will be restored to the actual

cost. This ensures that frequently used or particularly costly execution

plans stay in the procedure cache while those that aren't used so often and are

fairly simple to recompile are moved off the procedure cache (if necessary).

Problems with using sp_

Stored procedures beginning with sp_ are intended to be system stored

procedures in SQL Server. System stored procedures should by default

reside in the master database. However, I've seen several

development efforts where the programmers chose sp_ as their prefix for all

stored procedures. These stored procedures would reside in user created

databases and not the master database. The issue with placing sp_

named stored procedures in any database other than master is that we'll

always receive a cache miss event, a [COMPILE] lock will always be placed, and

the second, more exhaustive search will take place. The reason all this

occurs is because of how SQL Server goes about looking for the location of sp_

stored procedures with regards to the procedure cache.

SQL Server looks for the sp_ stored procedure in the following order:

- In the master database.

- Based on any qualifiers (database and/or owner).

- Owned by dbo in thr current database in the absence of any qualifiers.

Even if we qualify the location and owner of the sp_ stored procedure, SQL

Server will still go to the master database first. When it checks

the procedure cache, it first does a scan for an execution plan against the master

database, hence the SP:CacheMiss event. Here is a trace which shows an sp_

stored procedure being executed for the second time (I've added the

SP:StmtStarting and SP:StmtCompleted to show the SP:CacheMiss is occurring when

the stored procedure is first called):

Notice the initial SP:Cache Miss event. This is because

the stored procedure sp_CacheMiss is not located in the master

database. As a result, SQL Server went ahead and obtained the [COMPILE]

lock and did the more exhaustive search. Upon performing that second

search, SQL Server was able to find the actual execution plan, which is why we

then see the SP:ExecContextHit event. However, we needlessly took up

additional time and resources to locate the execution plan. It should also

be noted that a [COMPILE] lock is an exclusive lock. During the recompile,

the stored procedure actually becomes serialized and could serve as a potential

bottleneck to our system.

Because of the way SQL Server looks for sp_ named stored

procedures, best practices say to choose another naming convention. I've

seen quite a few, including usp_ (user stored procedure) and proc_ (procedure), but as long as we stay

away from sp_ in user databases, we should be fine. The key is to develop a

workable standard other than sp_ and stick with it.

Specify the Owner

SQL Server gives us a great deal of flexibility, but sometimes we have to

manage this flexibility properly to ensure we don't create performance issues

for ourselves. One area where we can get burned is with the ability to

name database objects the same thing, so long as they belong to different

owners. When calling a stored procedure, SQL Server looks to see if we've

specified an owner. If we haven't, it'll do the initial procedure cache

search looking for a procedure match where the owner is the user calling the

stored procedure. So if we're logged into SQL Server using a non-dbo

account, such as SQLUser, and the stored procedure belongs to dbo, we'll find

that we will get a SP:CacheMiss event, just as with using sp_ in user

databases. Here is another trace which demonstrates the cache miss if the

owner isn't specified:

Notice the stored procedure was executed without specifying the owner. I

had logged into SQL Server using a SQL Server user account that did not have dbo

rights. As a result, the initial scan against the procedure cache looked

for a stored procedure usp_CacheHit belonging to that owner. Hence the

SP:CacheMiss event. If we explicitly specify the owner by using a

two-part naming convention, we get an SP:ExecContextHit immediately. This

means no exclusive [COMPILE] lock and no second scan of the procedure

cache. Here's the trace with the owner specified:

All the difference is made by adding "dbo." in front of the stored

procedure. We often don't talk about specifying the owner when calling a

stored procedure, but it is a good idea to do so. Normally we have stored

procedures belonging to dbo as a best practice, but if we are using non-dbo

accounts, we need to specify the owner or we'll get the SP:CacheMiss event and

the performance issues already discussed.

Unwanted Recompiles

There are several reasons we may see a stored procedure recompile occur, and

for a few of them the recompile is a good thing. For instance, if data has

changed significantly in a particular table, a previously chosen execution plan

may not be efficient based on the current state of the data. As a result,

we may want the recompile. Another case is when we've manually executed

the sp_recompile stored procedure to force our stored procedure to

recompile. Or we could have executed the stored procedure WITH RECOMPILE

option, usually because the parameters we're going to pass to the stored

procedure differ from what is normally passed. But there are other reasons

a stored procedure may recompile on us, and the recompile is unwanted.

Interleaved DML and DDL Statements

One such case is if we interleave DDL (Data Definition Language) and DML

(Data Manipulation Language) within a stored procedure. Switching back and

forth between the two types of SQL statements will surely cause our stored

procedure to recompile, even during execution. For instance, let's take a

look at the following stored procedure which I've built in the Northwind

database:

CREATE PROC usp_Build_Interleaved

AS

-- DDL

CREATE TABLE A (

CustomerID nchar(5) NOT NULL CONSTRAINT PK_A PRIMARY KEY CLUSTERED,

CompanyName nvarchar(40) NOT NULL,

City nvarchar(15) NULL,

Country nvarchar(15) NULL)

-- DML

INSERT A

SELECT CustomerID, CompanyName, City, Country

FROM Customers

-- DDL

CREATE TABLE B (

OrderID int NOT NULL CONSTRAINT PK_B PRIMARY KEY NONCLUSTERED,

CustomerID nchar(5) NOT NULL,

Total money NOT NULL)

-- DML

INSERT B

SELECT O.OrderID, O.CustomerID, SUM((OD.UnitPrice * OD.Quantity) * (1 -

OD.Discount))

FROM Orders O JOIN [Order Details] OD ON O.OrderID = OD.OrderID

GROUP BY O.OrderID, O.CustomerID

CREATE CLUSTERED INDEX IDX_B_CustomerID ON B (CustomerID)

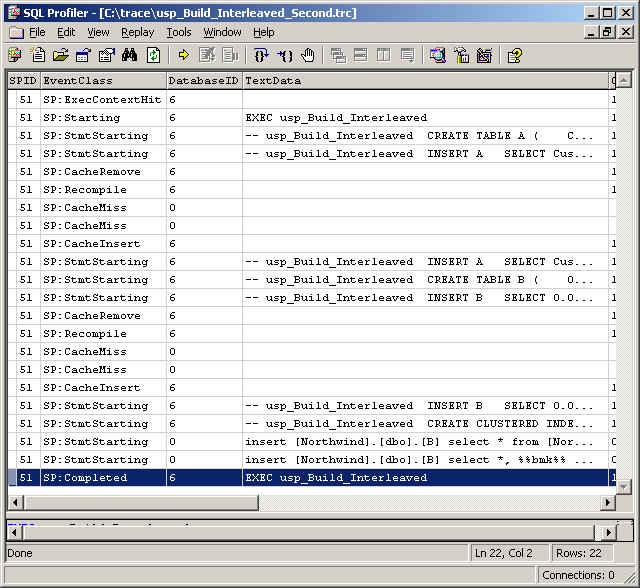

As can be seen by my comments, I have knowingly interleaved DML and DDL

statements. To see the effect, let's look at the Profiler trace:

We already had a cached execution plan, as demonstrated by the

SP:ExecContextHit. However, because of the interleaving, we've generated

two SP:Recompile during the execution of the stored procedure. Because

this stored procedure has both DML and DDL statements, we won't be able to get

rid of recompile altogether. However, we can reduce the number from 2 to 1

by simply moving all the DDL statements to the head of the stored procedure:

CREATE PROC usp_Build_NoInterleave

AS

-- DDL

CREATE TABLE A (

CustomerID nchar(5) NOT NULL CONSTRAINT PK_A PRIMARY KEY CLUSTERED,

CompanyName nvarchar(40) NOT NULL,

City nvarchar(15) NULL,

Country nvarchar(15) NULL)

CREATE TABLE B (

OrderID int NOT NULL CONSTRAINT PK_B PRIMARY KEY NONCLUSTERED,

CustomerID nchar(5) NOT NULL,

Total money NOT NULL)

-- DML

INSERT A

SELECT CustomerID, CompanyName, City, Country

FROM Customers

INSERT B

SELECT O.OrderID, O.CustomerID, SUM((OD.UnitPrice * OD.Quantity) * (1 - OD.Discount))

FROM Orders O JOIN [Order Details] OD ON O.OrderID = OD.OrderID

GROUP BY O.OrderID, O.CustomerID

CREATE CLUSTERED INDEX IDX_B_CustomerID ON B (CustomerID)

Rewriting the stored procedure, we now see both CREATE TABLE statements at

the front. Only after these tables are created will we execute the DML

statements to populate the tables and build the clustered index. If we run

a trace, we'll see that we were able to remove one of the SP:Recompile events:

We can't completely get rid of the SP:Recompile event, but we can reduce the

number of times we see it by writing our stored procedures accordingly.

Using sp_executesql

I don't generally use sp_executesql within stored procedures, however, it can

solve some recompile problems. The main issue with sp_executesql and the

EXECUTE statement is any SQL statements we pass to be executed by either of

these two methods has their security contexts rechecked. If we're using

stored procedures to limit access to our databases, sp_executesql will cause us

some headaches.

In SQL Server, if we have a stored procedure that's owned by a particular

owner, say dbo, and it accesses objects also owned by the same owner, the only

time SQL Server checks rights is when looking at permission to execute the stored

procedure. If a given user has permission to execute the stored procedure,

SQL Server will assume the owner intended to give permissions to the database

objects within the context of the stored procedure, so long as the objects

referenced also belong to the owner

of the stored procedure (hence the reason we often put all database objects

under the ownership of dbo). This ownership chain method of security works

well, because it allows us to create stored procedures which access data in our

databases in a controlled fashion while preventing broad SELECT, INSERT, UPDATE,

or DELETE permissions on tables and views.

However, if an sp_executesql or EXECUTE statement is passed a SQL string to

execute, SQL Server will automatically recheck the security. So if we want

to use sp_executesql to say get data from a table, we have to grant anyone who

needs to see that data SELECT permissions when normally we wouldn't have done

so. Unfortunately, there's no way around this issue. So why then

would we use sp_executesql? Let's look at a sample stored procedure.

This one follows after either of the two previously given, accessing tables A

and B:

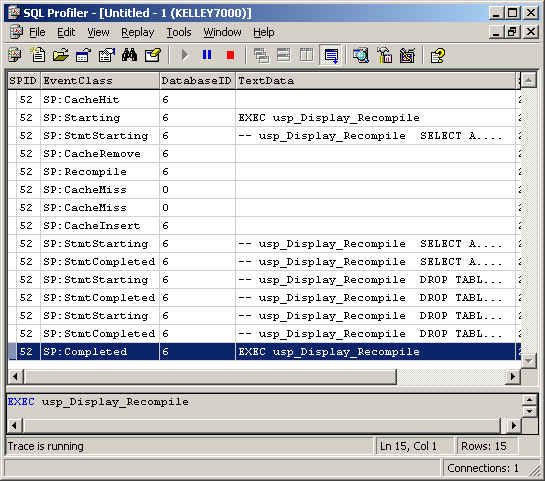

CREATE PROC usp_Display_Recompile

AS

SELECT A.CompanyName, A.City, A.Country, B.OrderID, B.Total

FROM A JOIN B ON A.CustomerID = B.CustomerID

DROP TABLE A

DROP TABLE B

One of the things I mentioned above is if data has changed considerably in a

given table, a stored procedure recompile is very likely.

usp_Display_Recompile relies on two tables which are created by a previous

stored procedure. As a result, each time the usp_Display_Recompile stored

procedure is run, data will have significantly changed because the tables will

have been dropped, recreated, and repopulated! Looking at a profiler trace

confirms the recompile:

We see that upon hitting the SELECT statement, SQL Server calls for a

recompile of the stored procedure. One of the things we can do is to pull

out the SELECT statement. The reason a recompile is being called for is

because of the data being accessed by this particular statement has changed. If

we run it using sp_executesql, we will see that the statement itself will be

re-evaluated, but the stored procedure itself will not go through a

recompile. Let's take a look at the same stored procedure rewritten:

CREATE PROC usp_Display_NoRecompile

AS

EXEC sp_executesql N'SELECT A.CompanyName, A.City, A.Country, B.OrderID, B.Total

FROM A JOIN B ON A.CustomerID = B.CustomerID'

DROP TABLE A

DROP TABLE B

Our select statement is now within the context of sp_executesql. It'll

mean that the security context will be rechecked, but if we have SELECT

permissions on the two tables, we'll be able to avoid the recompile of the

stored procedure. Our final trace confirms that the recompile is

avoided. The particular SQL statement is affected with respect to the

cache, but the stored procedure is untouched:

We see an SP:CacheInsert event with respect to the SELECT statement, but no

SP:Recompile events! By using sp_executesql, we've avoided our recompile

entirely.

Concluding Remarks

We've taken a look at stored procedures and caching in SQL Server. The

procedure cache is part of the memory buffer pool and dynamically controlled by

SQL Server. As of SQL Server 7.0, there are no documented ways of

controlling the size of the cache. However, SQL Server's internal

procedures work to keep the most used and the most costly execution plans in

memory. We can see which plans are in memory by querying the system table syscacheobjects.

However, for the most part, we can rely on SQL Profiler to tell us how execution

plans are being used.

With the version of Profiler released with SQL Server 2000 come several new

events which help us see if execution plans are being found and reused.

SQL Server goes through an initial search to find an execution plan which is

very reliant on matches on the database and owner. For stored procedures

with the prefix of sp_ in user databases, this will always result in a cache

miss. In the cases where a non-dbo owner calls a dbo-owned stored

procedure without specifying the owner, we'll see the same result because SQL

Server will default to a search based on the user. From that point a

[COMPILE] lock is obtained, and a more thorough search is done. If the

execution plan is found, it'll be used, otherwise SQL Server will go through and

build an execution plan.

Once we have an execution plan, there are several factors than can force a

recompile. The most avoidable is the interleaving of DML and DDL

statements. While we may not be able to completely prevent recompiles due

to interleaving, we can minimize them by moving all DDL statements to the front

of a given stored procedure. Another cause for recompiles is where data in

referenced tables have changed significantly. We can avoid a recompile by

using sp_executesql, though this does bring up some security issues. As

always, performance and security balances must be determined based on each case.

What I've covered here only scratches the surface of stored procedures, the

procedure cache, and execution plans. For instance, with respect to

recompiles, we've not even touched on temporary tables. I'll close by listing a

few additional references which discuss the issues in more detail.

Further Reading

- SQL

Server 2000 Books OnLine

- Inside SQL Server 2000 by Kalen Delaney (Ms. Delaney's web site: http://www.insidesqlserver.com)

- INF:

SQL Blocking Due to [[COMPILE]] Locks (Q263889)

- INF:

Troubleshooting Stored Procedure Recompilation (Q243586)

- SQL

Server Magazine: Kalen Delaney's Articles

- SQL

Server Magazine: Brian Moran's Articles